
Let's see through the network I/O model from beginning to end

2022-07-07 01:13:00 【Linux server development】

We have previously covered how socket communication works internally, and we know that network I/O has many blocking points. With blocking I/O, the only way to handle more requests as the number of users grows is to add threads. Threads occupy memory, and when there are too many of them, competition among them causes frequent context switches and heavy overhead.

Blocking I/O could therefore no longer meet demand, so engineers kept optimizing and evolving it and proposed a variety of I/O models.

On UNIX systems there are five I/O models, and today we will go through all of them!

Before introducing the I/O models, though, we need some background knowledge.

Kernel mode and user mode

Our computers may run many programs at the same time, and these programs come from different companies.

No one knows whether a program running on the computer will go rogue and do something strange, such as periodically wiping memory.

So CPU instructions are divided into privileged and non-privileged instructions to enforce permission control. Dangerous instructions are not available to ordinary programs; only privileged programs such as the operating system may execute them.

You can think of it this way: our code cannot perform operations that might be "dangerous", but the operating system's kernel code can.

These "dangerous" operations include allocating and reclaiming memory, reading and writing disk files, reading and writing network data, and so on.

If we want to perform these operations, we can only call the APIs exposed by the operating system, which are called system calls.

It is like going to a government service hall: sensitive operations are handled for us by official staff (system calls). The reason is the same, namely to prevent us (ordinary programs) from messing things up.

Here are two more terms:

User space

Kernel space

The code of ordinary programs runs in user space, and the operating system's code runs in kernel space. User space cannot access kernel space directly. A process running in user space is in user mode; a process running in kernel space is in kernel mode.

When a program in user space makes a system call, that is, calls an API provided by the operating system kernel, the context is switched and execution moves into kernel mode. This is often called trapping into the kernel.

So why introduce this knowledge at the start?

Because when a program requests network data, the data has to be copied twice:

First, the program has to wait for the data to be copied from the network card into kernel space.

Second, because the user program cannot access kernel space, the kernel has to copy the data into user space so that the program can access it.

All this background is meant to explain why there are two copies, and to point out that system calls have overhead, so it is best not to make them too frequently.
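To make the point about system call frequency concrete, here is a minimal Python sketch. It assumes a local `socket.socketpair()` as a stand-in for a real network connection, and the helper names `drain` and `count_calls` are made up for this illustration. It reads the same 4096 bytes twice, once a byte at a time and once with a large buffer, and counts how many `recv` calls (each one a trip into the kernel) are needed.

```python
import socket

def drain(sock, total, chunk_size):
    """Read `total` bytes from `sock`, asking for `chunk_size` bytes per recv.

    Returns the number of recv calls made; each one is a system call."""
    calls = 0
    received = 0
    while received < total:
        data = sock.recv(chunk_size)
        if not data:          # peer closed the connection
            break
        received += len(data)
        calls += 1
    return calls

def count_calls(chunk_size, total=4096):
    # A connected pair of local sockets stands in for client and server.
    writer, reader = socket.socketpair()
    try:
        writer.sendall(b"x" * total)
        return drain(reader, total, chunk_size)
    finally:
        writer.close()
        reader.close()

small_calls = count_calls(chunk_size=1)      # one byte per system call
large_calls = count_calls(chunk_size=4096)   # as much as possible per call
print(small_calls, large_calls)
```

The byte-at-a-time version needs one system call per byte, while the large-buffer version needs far fewer, even though both move exactly the same data from kernel space to user space.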

The I/O models discussed today differ in how these copies are carried out!

We will use the read call, that is, reading network data, as the example for walking through the I/O models.

Let's start!

Synchronous blocking I/O

When a thread of the user program calls read to get network data, the data first has to exist: the network card must receive it from the client, then it is copied into the kernel, and then it is copied into user space. The user thread is blocked during this whole process.

If no client has sent data, the user thread stays blocked, waiting until data arrives. Even once data has arrived, the thread remains blocked while the two copies take place.

That is why this is called the synchronous blocking I/O model.

Its advantage is obvious: simplicity. After calling read there is nothing else to worry about until the data has arrived and is ready for processing.
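The blocking behaviour can be sketched in a few lines of Python. This is only an illustration under some assumptions: a local `socket.socketpair()` plays the role of the network connection, and the hypothetical helper `delayed_send` plays the role of a client that sends its data 0.2 seconds late.

```python
import socket
import threading
import time

def delayed_send(sock, payload, delay):
    """Stand-in for a remote client that only sends after `delay` seconds."""
    time.sleep(delay)
    sock.sendall(payload)

client, server = socket.socketpair()   # sockets are blocking by default
sender = threading.Thread(target=delayed_send, args=(client, b"hello", 0.2))

start = time.monotonic()
sender.start()
data = server.recv(1024)               # the calling thread is parked here until data arrives
elapsed = time.monotonic() - start

sender.join()
client.close()
server.close()
print(data, f"blocked for about {elapsed:.2f}s")
```

The code is as simple as the model promises: one call, and the data is there when it returns. The cost is that the calling thread did nothing at all for the whole waiting period.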

Its disadvantage is also obvious: one thread serves one connection and stays occupied the whole time. Even when the network card has no data, the thread still blocks and waits.

We all know that threads are heavyweight resources, so this is rather wasteful.

So we do not want the thread to wait like this.

Hence synchronous non-blocking I/O.


Synchronous non-blocking I/O

Synchronous non-blocking I/O is an optimization of synchronous blocking I/O:

When there is no data, the call no longer waits foolishly. It returns an error immediately, indicating that no data is ready!

Note that while data is being copied from the kernel to user space, the user thread is still blocked.

This model is more flexible than synchronous blocking I/O. For example, if a read call finds no data, the thread can do other things first and then call read again to see whether data has arrived.
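A minimal Python sketch of this retry pattern follows. It assumes a local `socket.socketpair()` in place of a real connection, and the "client" is simulated by sending data from the same thread after the third failed attempt, purely to keep the example short.

```python
import socket

client, server = socket.socketpair()
server.setblocking(False)              # recv now returns immediately instead of waiting

attempts = 0
data = None
while data is None:
    attempts += 1
    try:
        data = server.recv(1024)       # one system call per attempt
    except BlockingIOError:
        # No data in the kernel buffer yet: the thread is free to do other work.
        if attempts == 3:
            client.sendall(b"ping")    # pretend the client finally sends something

client.close()
server.close()
print(attempts, data)
```

Every failed attempt is a full system call that returns nothing useful, which is exactly the cost this model trades for not being blocked.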

But if your thread does nothing except fetch data and process it, with no other logic, this model becomes a problem.

It means you keep making system calls. If your server has to handle a large number of connections, you need a large number of threads making these calls, context switches become frequent, and the CPU is kept busy doing useless work.

What can be done about that?

Hence I/O multiplexing.

I/O Multiplexing

At first glance it looks much like the synchronous non-blocking I/O above, but it is actually different: the threading model is different.

Since calling read over and over is too wasteful for synchronous non-blocking I/O when there are many connections, we hire a specialist.

This specialist's job is to manage multiple connections and check whether data is ready on any of them.

In other words, a single thread can check whether data is ready on multiple connections.

In code, this specialist is select. We register the connections to be monitored with select, and select watches whether data is ready on the connections it manages. If so, it can notify other threads to call read and read the data. This read is the same as before: it still blocks the user thread.

This way a small number of threads can monitor many connections. Fewer threads means lower memory consumption and fewer context switches, which is very comfortable.

By now you should understand what I/O multiplexing is.

"Multi" refers to the multiple connections, and "multiplexing" means that a single thread is reused to monitor all of them.
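Here is a small Python sketch of one thread watching several connections through `select`. The three connections are local socket pairs created with `socket.socketpair()`, which is an assumption made to keep the example self-contained; a real server would register accepted client sockets instead.

```python
import select
import socket

# Three connections, each a local socket pair standing in for a client/server link.
pairs = [socket.socketpair() for _ in range(3)]
clients = [c for c, _ in pairs]
servers = [s for _, s in pairs]

# Only clients 0 and 2 have sent anything.
clients[0].sendall(b"from 0")
clients[2].sendall(b"from 2")

# One call from one thread: ask the kernel which monitored sockets are readable.
readable, _, _ = select.select(servers, [], [], 1.0)

results = {}
for sock in readable:
    # The data is already in the kernel buffer, so this recv only has to
    # perform the kernel-to-user copy.
    results[servers.index(sock)] = sock.recv(1024)

for c, s in pairs:
    c.close()
    s.close()
print(results)
```

Connection 1 never shows up in `readable`, so no thread wastes a read call on it. In a real server the loop around `select` would run forever and hand ready sockets to worker threads.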

Having come this far, think again: what else can be optimized?

Signal-driven I/O

Although the select approach above no longer blocks on each connection, it still has to keep checking whether any data is ready. Could the kernel tell us that data has arrived, instead of us polling?

Signal-driven I/O does exactly this: the kernel tells you that the data is ready, and then the user thread calls read (which still blocks).
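A rough Python sketch of this flow on Linux is shown below. Several things here are assumptions for the sake of illustration: a local datagram socket pair stands in for a UDP socket, the receiving socket is made non-blocking so the handler can never hang on a spurious wake-up, and the handler name `on_sigio` is made up. The sketch relies on the Unix-only `fcntl` module and must run in the main thread, because Python only delivers signal handlers there.

```python
import fcntl
import os
import signal
import socket
import time

# A local datagram socket pair stands in for a UDP connection.
sender, receiver = socket.socketpair(socket.AF_UNIX, socket.SOCK_DGRAM)
receiver.setblocking(False)
received = []

def on_sigio(signum, frame):
    # The kernel has signalled activity on the socket; now go and read.
    try:
        received.append(receiver.recv(1024))
    except OSError:
        pass  # woken up without readable data, or the socket is already closed

signal.signal(signal.SIGIO, on_sigio)
fcntl.fcntl(receiver, fcntl.F_SETOWN, os.getpid())          # send SIGIO to this process
flags = fcntl.fcntl(receiver, fcntl.F_GETFL)
fcntl.fcntl(receiver, fcntl.F_SETFL, flags | os.O_ASYNC)    # switch on signal-driven mode

sender.send(b"datagram")

# The main thread never polls the socket; it only waits for the handler to run.
deadline = time.monotonic() + 2.0
while not received and time.monotonic() < deadline:
    time.sleep(0.01)   # placeholder for other useful work

receiver.close()
sender.close()
print(received)
```

Note that the handler only learns that something happened on the socket. It still has to issue the read itself, which is why the copy to user space remains a synchronous step in this model.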

Doesn't that sound better than I/O multiplexing? Then why do we so rarely hear about signal-driven I/O?

Why does everyone use I/O multiplexing in practice rather than signal-driven I/O?

Because our applications usually use the TCP protocol, and on a TCP socket there are seven events that can generate the signal.

In other words, the signal is sent not only when data is ready. Other events send it too, and it is the same signal every time, so our application has no way to tell which event produced it.

Then we are stuck!

So our applications cannot use signal-driven I/O. If your application uses the UDP protocol, however, it works, because UDP does not have so many events.

So for us, signal-driven I/O is not really an option.

Asynchronous I/O

Although signal-driven I/O is not very friendly to TCP, the idea is right: move toward asynchrony. It is not fully asynchronous, however, because the read that follows still blocks the user thread, so it is only semi-asynchronous.

So we have to work out how to make it fully asynchronous, that is, how to eliminate the read step as well.

The idea is actually quite clear: let the kernel copy the data directly into user space and only then notify the user thread, achieving truly non-blocking I/O!

So in asynchronous I/O, the user thread calls aio_read, and everything that follows, including copying the data from the kernel to user space, is done by the kernel. When the kernel has finished, it invokes the callback registered earlier, and the user thread can then carry on with the data that has already been copied into user space.

Throughout the whole process the user thread has no blocking point. This is truly non-blocking I/O.
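Python's standard library has no binding for aio_read, so the following sketch only imitates the programming model and is not kernel asynchronous I/O. The helper `simulated_aio_read` is hypothetical: a background thread plays the role the kernel would play, waiting for the data and copying it before invoking the callback. A local `socket.socketpair()` again stands in for the network connection.

```python
import socket
import threading

def simulated_aio_read(sock, nbytes, callback):
    """Hypothetical helper, NOT the POSIX aio_read.

    A background thread plays the kernel's role: it waits for the data,
    copies it into user space, and only then invokes `callback`."""
    def worker():
        callback(sock.recv(nbytes))
    thread = threading.Thread(target=worker)
    thread.start()
    return thread

client, server = socket.socketpair()
done = threading.Event()
result = {}

def on_complete(data):
    # By the time this runs, the data is already in user space.
    result["data"] = data
    done.set()

pending = simulated_aio_read(server, 1024, on_complete)   # returns immediately
steps_while_waiting = sum(range(1000))                    # the caller keeps working

client.sendall(b"payload")
done.wait(timeout=2.0)
pending.join()
client.close()
server.close()
print(result["data"], steps_while_waiting)
```

The calling thread never issues a read of its own. It submits the request, carries on with other work, and receives the finished data through the callback, which is the shape of the interface that real asynchronous I/O offers.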

So here comes the question:

Why do we still use I/O multiplexing rather than asynchronous I/O?

Because Linux's support for asynchronous I/O is insufficient. You can consider it not fully implemented, so asynchronous I/O is not really usable.

Some readers may object: doesn't Tomcat, for example, provide an AIO implementation class? In fact, components like this, and some class libraries you use, only appear to support AIO (asynchronous I/O). The underlying implementation is simulated with epoll.

Windows, on the other hand, implements true AIO. However, our servers are generally deployed on Linux, so the mainstream is still I/O multiplexing.


Original article: Let's see through the network I/O model from beginning to end

边栏推荐

- Installation of torch and torch vision in pytorch

- 第六篇,STM32脉冲宽度调制(PWM)编程

- Implementation principle of waitgroup in golang

- 【案例分享】网络环路检测基本功能配置

- Installation and testing of pyflink

- 深度学习简史(二)

- Let's talk about 15 data source websites I often use

- golang中的WaitGroup实现原理

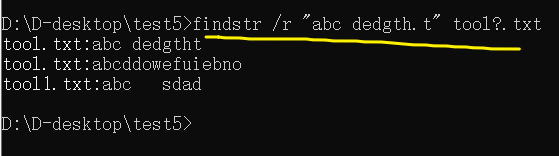

- [Batch dos - cmd Command - Summary and Summary] - String search, find, Filter Commands (FIND, findstr), differentiation and Analysis of Find and findstr

- Explain in detail the matrix normalization function normalize() of OpenCV [norm or value range of the scoped matrix (normalization)], and attach norm_ Example code in the case of minmax

猜你喜欢

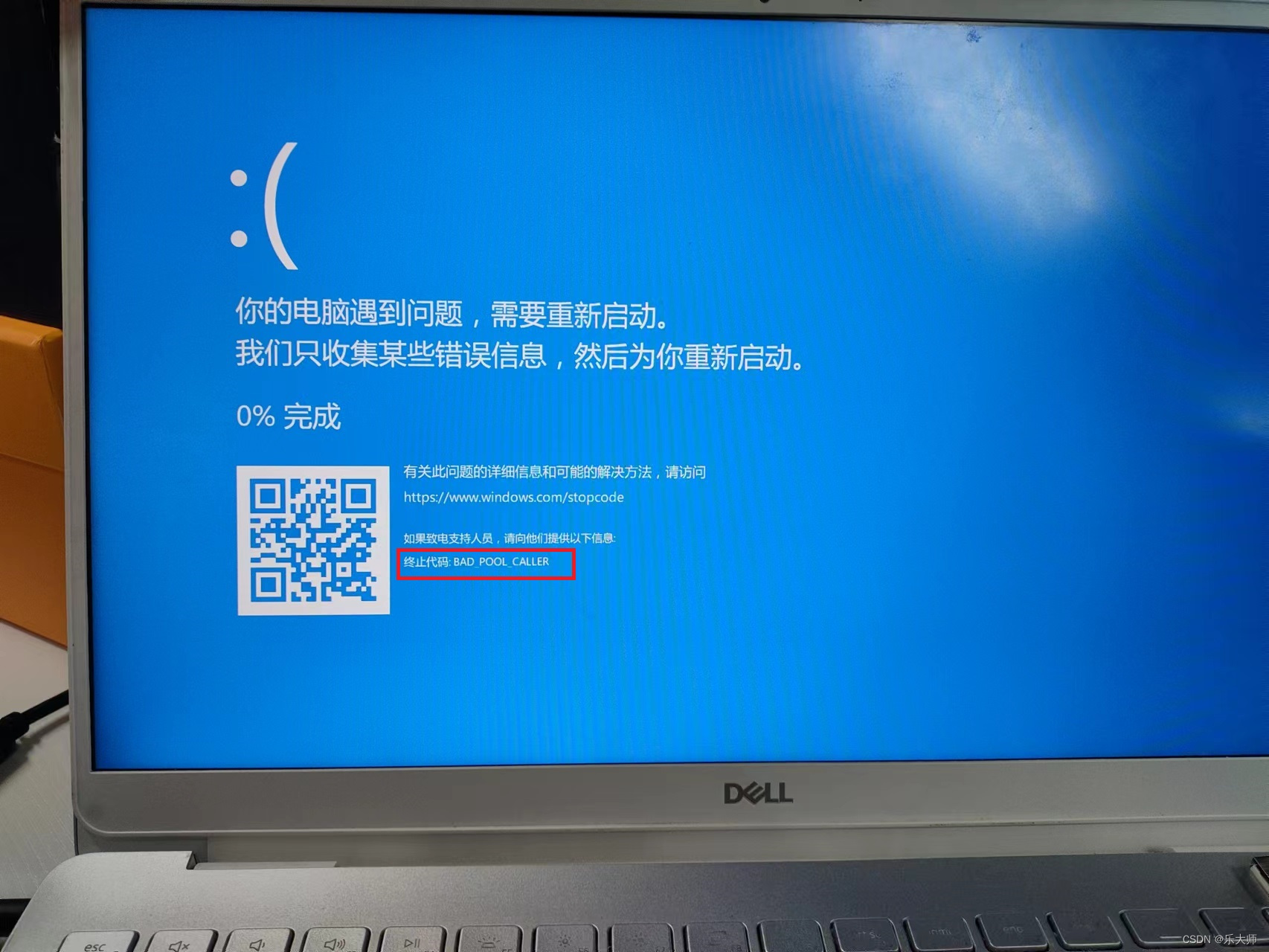

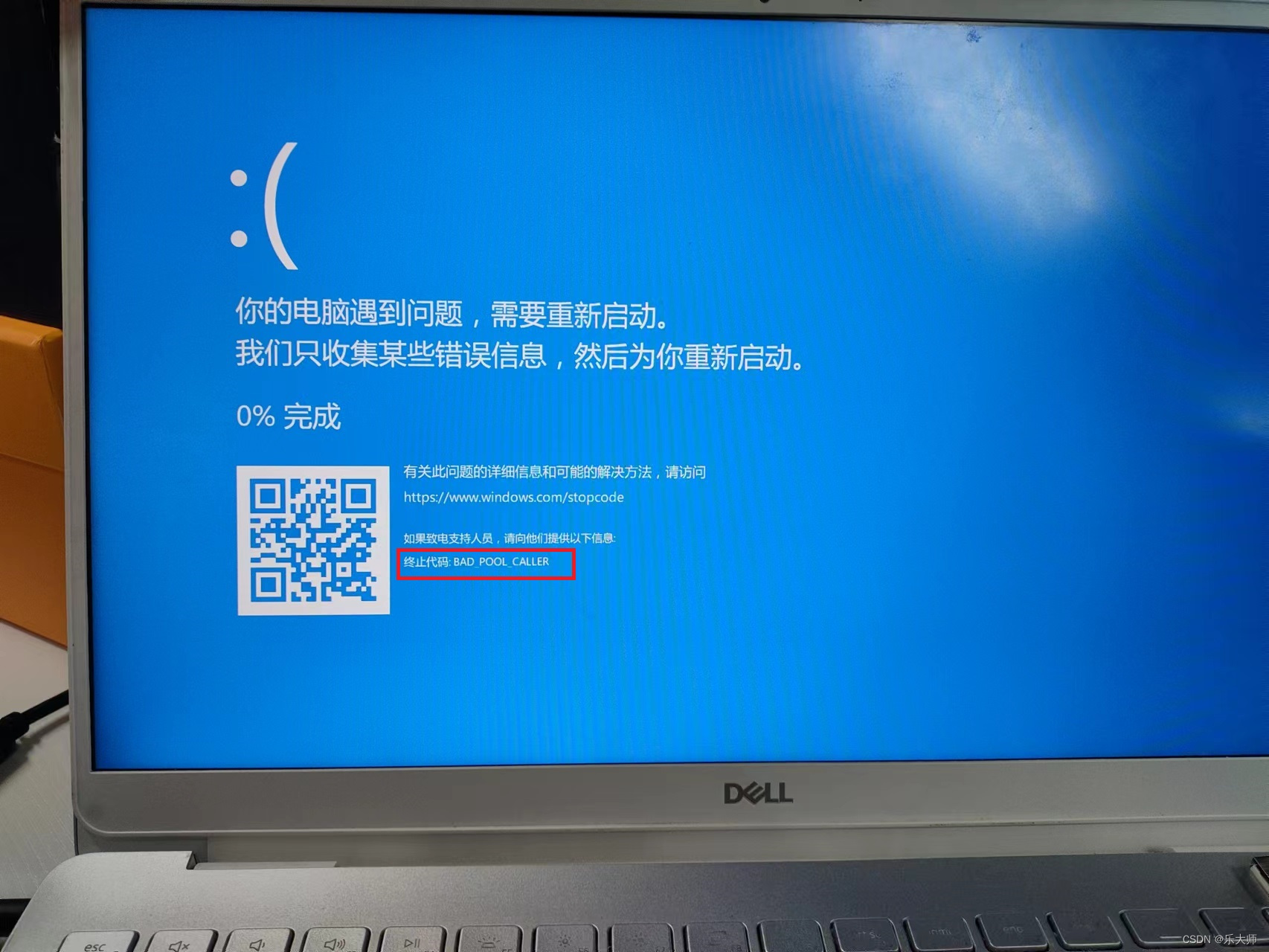

Periodic flash screen failure of Dell notebook

![Explain in detail the matrix normalization function normalize() of OpenCV [norm or value range of the scoped matrix (normalization)], and attach norm_ Example code in the case of minmax](/img/87/3fee9e6f687b0c3efe7208a25f07f1.png)

Explain in detail the matrix normalization function normalize() of OpenCV [norm or value range of the scoped matrix (normalization)], and attach norm_ Example code in the case of minmax

LLDP兼容CDP功能配置

Dell Notebook Periodic Flash Screen Fault

【批处理DOS-CMD命令-汇总和小结】-字符串搜索、查找、筛选命令(find、findstr),Find和findstr的区别和辨析

![[牛客] [NOIP2015]跳石头](/img/9f/b48f3c504e511e79935a481b15045e.png)

[牛客] [NOIP2015]跳石头

![[batch dos-cmd command - summary and summary] - string search, search, and filter commands (find, findstr), and the difference and discrimination between find and findstr](/img/4a/0dcc28f76ce99982f930c21d0d76c3.png)

[batch dos-cmd command - summary and summary] - string search, search, and filter commands (find, findstr), and the difference and discrimination between find and findstr

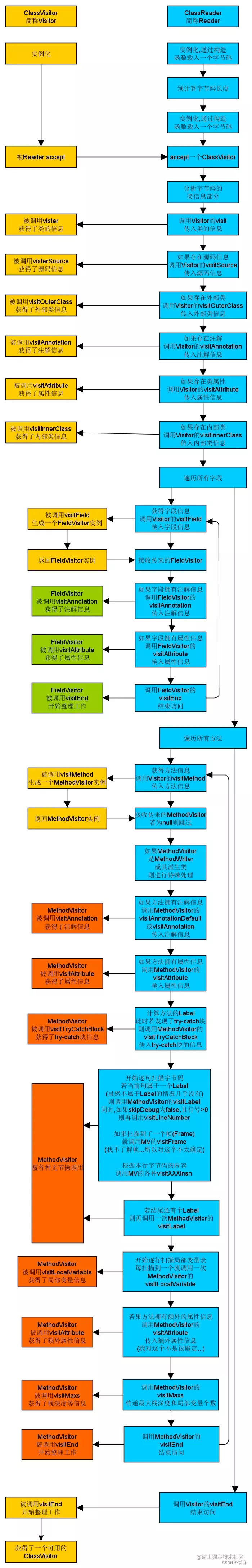

Deeply explore the compilation and pile insertion technology (IV. ASM exploration)

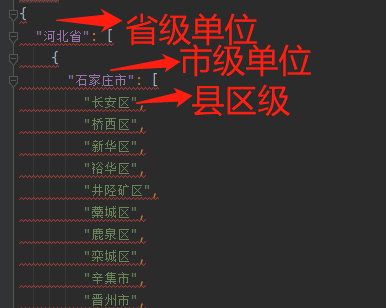

批量获取中国所有行政区域经边界纬度坐标(到县区级别)

![[user defined type] structure, union, enumeration](/img/a5/d6bcfb128ff6c64f9d18ac4c209210.jpg)

[user defined type] structure, union, enumeration

随机推荐

NEON优化:性能优化经验总结

Segmenttree

【批处理DOS-CMD命令-汇总和小结】-字符串搜索、查找、筛选命令(find、findstr),Find和findstr的区别和辨析

gnet: 一个轻量级且高性能的 Go 网络框架 使用笔记

[Niuke] [noip2015] jumping stone

Dell笔记本周期性闪屏故障

Boot - Prometheus push gateway use

golang中的atomic,以及CAS操作

详解OpenCV的矩阵规范化函数normalize()【范围化矩阵的范数或值范围(归一化处理)】,并附NORM_MINMAX情况下的示例代码

[牛客] [NOIP2015]跳石头

Windows installation mysql8 (5 minutes)

[100 cases of JVM tuning practice] 04 - Method area tuning practice (Part 1)

Part VI, STM32 pulse width modulation (PWM) programming

windows安装mysql8(5分钟)

Trace tool for MySQL further implementation plan

HMM 笔记

让我们,从头到尾,通透网络I/O模型

Atomic in golang, and cas Operations

NEON优化:矩阵转置的指令优化案例

Grc: personal information protection law, personal privacy, corporate risk compliance governance