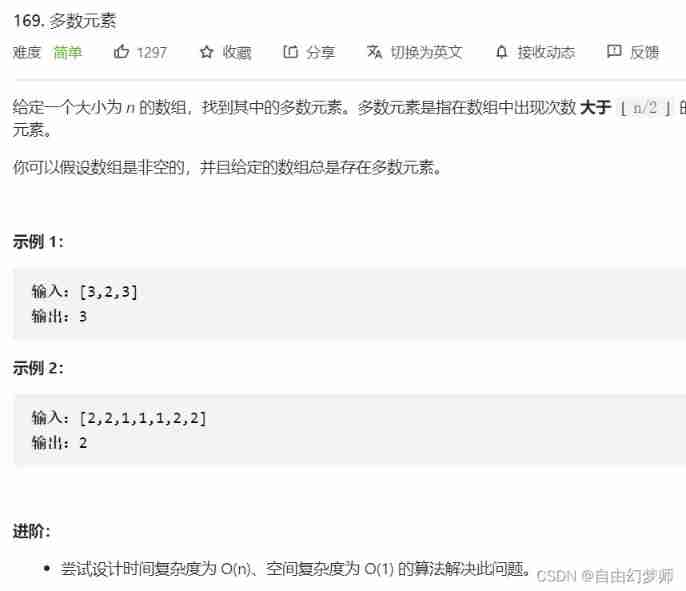


Implementing linear regression by hand with the PyTorch framework

2022-07-07 08:07:00 【Students who don't want to be bald】

Linear regression with PyTorch

Hello, dear friends, it has been a while. I have been busy with final exams recently; from now on I will keep updating these PyTorch study notes.

Goals

- Understand the role of `requires_grad`

- Know how to use `backward`

- Know how to implement linear regression by hand

1. Forward calculation

For a tensor in PyTorch, setting its `.requires_grad` attribute to `True` makes PyTorch track every operation performed on it. Put another way, the tensor is a parameter: its gradient will be computed later and used to update it.

1.1 The calculation process

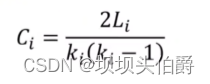

Assume the following relations (the factor 1/4 takes the mean, since there are four elements $x_i$) and use torch to carry out the forward computation:

$$
\begin{aligned}
o &= \frac{1}{4}\sum_i z_i \\
z_i &= 3(x_i + 2)^2 \\
z_i\big|_{x_i = 1} &= 27
\end{aligned}
$$

If x is a parameter whose gradient must be computed and then used to update it, then when x is first initialized (randomly, for example) its `requires_grad` attribute must be set to `True`. The default value is `False`.

```python
import torch

# Initialize parameter x; requires_grad=True tracks its computation history
x = torch.ones(2, 2, requires_grad=True)
print(x)
# tensor([[1., 1.],
#         [1., 1.]], requires_grad=True)

y = x + 2
print(y)
# tensor([[3., 3.],
#         [3., 3.]], grad_fn=<AddBackward0>)

z = y * y * 3  # square, then multiply by 3
print(z)
# tensor([[27., 27.],
#         [27., 27.]], grad_fn=<MulBackward0>)

out = z.mean()  # take the mean
print(out)
# tensor(27., grad_fn=<MeanBackward0>)
```

The code above shows that:

- `x` has its `requires_grad` attribute set to `True`

- The result of each subsequent operation carries a `grad_fn` attribute that records the operation that produced it

- Chaining these `grad_fn` functions together forms a computation graph like the one shown in the previous section
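To make the idea of a graph built from `grad_fn` more concrete, the sketch below walks backwards from `out.grad_fn` through the `next_functions` attribute of each node and collects the node names. The helper `walk` is a hypothetical name introduced only for this illustration, and the exact node names may differ between PyTorch versions.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
y = x + 2
z = y * y * 3
out = z.mean()


def walk(fn, depth=0, lines=None):
    """Hypothetical helper: collect the backward node names reachable from fn."""
    if lines is None:
        lines = []
    if fn is None:  # constants such as the 2 and the 3 have no backward node
        return lines
    lines.append("  " * depth + type(fn).__name__)
    for next_fn, _ in fn.next_functions:
        walk(next_fn, depth + 1, lines)
    return lines


graph = walk(out.grad_fn)
print("\n".join(graph))
```

The first line printed is the node for the last operation (the mean), and the deepest lines are the accumulation nodes that write into `x.grad`. Because `y` is used twice in `y * y`, its node shows up twice in the walk.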

1.2 requires_grad and grad_fn

```python
import torch

a = torch.randn(2, 2)
a = (a * 3) / (a - 1)
print(a.requires_grad)  # False

a.requires_grad_(True)  # modify the flag in place
print(a.requires_grad)  # True

b = (a * a).sum()
print(b.grad_fn)  # <SumBackward0 object at 0x4e2b14345d21>

with torch.no_grad():
    c = (a * a).sum()  # e.g. tensor(151.6830); c has no grad_fn

print(c.requires_grad)  # False
```

Note:

To stop PyTorch from tracking history (and using memory for it), wrap the code in a `with torch.no_grad():` block. This is especially useful when evaluating a model: the model may have trainable parameters with `requires_grad=True`, but no gradients are needed during evaluation.
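As a small illustration of the evaluation case, the sketch below computes the same prediction twice, once normally and once inside `torch.no_grad()`. The parameter values and the name `x_eval` are assumptions chosen for the example, not taken from a trained model.

```python
import torch

# Hypothetical "trained" parameters; the values are made up for this sketch
w = torch.tensor([3.0], requires_grad=True)
b = torch.tensor([0.8], requires_grad=True)
x_eval = torch.tensor([0.0, 1.0, 2.0])

tracked = x_eval * w + b  # a graph is recorded for this result

with torch.no_grad():
    untracked = x_eval * w + b  # same values, but nothing is recorded

print(tracked.requires_grad, tracked.grad_fn is not None)
print(untracked.requires_grad, untracked.grad_fn)
```

Both results hold the same numbers; only the first one keeps a reference to the graph needed for backpropagation.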

2. Gradient calculation

For `out` from section 1.1, we can call the `backward` method to run backpropagation and compute the gradient.

After calling `out.backward()`, the derivative $\frac{d\,out}{dx}$ is available as `x.grad`:

```python
out.backward()
print(x.grad)
# tensor([[4.5000, 4.5000],
#         [4.5000, 4.5000]])
```

This is because

$$
\frac{d\,o}{d\,x_i} = \frac{3}{2}(x_i + 2)
$$

which equals 4.5 at $x_i = 1$.
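The factor 3/2 comes from the chain rule applied to the forward computation of section 1.1. Writing $y_i = x_i + 2$ and $z_i = 3y_i^2$ (the intermediate names match the variables `y` and `z` in the code), a worked version of the derivative is:

$$
\frac{d\,o}{d\,x_i}
= \frac{\partial o}{\partial z_i}\cdot\frac{\partial z_i}{\partial y_i}\cdot\frac{\partial y_i}{\partial x_i}
= \frac{1}{4}\cdot 6y_i \cdot 1
= \frac{3}{2}(x_i + 2),
\qquad
\left.\frac{d\,o}{d\,x_i}\right|_{x_i = 1} = \frac{3}{2}\cdot 3 = 4.5
$$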

Note: when the output is a scalar, we can call `backward()` on the output tensor directly. When the output is a vector, `backward()` must also be given a `gradient` tensor with the same shape as the output.

In most cases the loss function is a scalar, so this article does not go further into the vector case.
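For readers who do run into a non-scalar output, here is a minimal sketch of what the extra argument looks like. It assumes a toy function `y = x * x`, which is not part of the article, and passes a tensor of ones as the `gradient` argument, which weights every output element equally.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Calling backward() on a vector without arguments raises a RuntimeError
try:
    (x * x).backward()
    scalar_only_error = None
except RuntimeError as err:
    scalar_only_error = err

# Supplying a gradient tensor of the same shape as the output works
y = x * x
y.backward(gradient=torch.ones_like(y))
print(x.grad)  # derivative of x^2 is 2x, evaluated element by element
```

With a tensor of ones, the call is equivalent to `y.sum().backward()`.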

`loss.backward()` starts from the loss and computes the gradient of every parameter that has `requires_grad=True`, accumulating the result into that parameter's `.grad` attribute (for example `x.grad`). The parameter itself has not been updated at this point.
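The accumulation is easy to miss, so here is a minimal sketch of it. The one-element parameter and the function `w * 2` are assumptions chosen so the gradient is always 2 and the effect is easy to see.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)

(w * 2).sum().backward()
first = w.grad.clone()   # gradient of 2w is 2

(w * 2).sum().backward()
second = w.grad.clone()  # the new gradient was added to the old one

w.grad.zero_()           # reset before the next backward pass
(w * 2).sum().backward()
third = w.grad.clone()

print(first, second, third)
```

This is why a training loop has to zero the gradients before each backward pass.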

Note:

- `tensor.data`: when the tensor has `requires_grad=False`, `tensor.data` and `tensor` are equivalent. When `requires_grad=True`, `tensor.data` returns only the data held by the tensor, without gradient tracking.

- `tensor.numpy()`: a tensor with `requires_grad=True` cannot be converted directly; use `tensor.detach().numpy()` instead.
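The conversion rule can be checked with a short sketch. The tensor `t` is a throwaway example value, not something from the article.

```python
import torch

t = torch.ones(2, requires_grad=True)

# Direct conversion is rejected for a tensor that requires grad
try:
    t.numpy()
    numpy_error = None
except RuntimeError as err:
    numpy_error = err

# detach() returns a tensor that is cut off from the graph and can be converted
arr = t.detach().numpy()
print(type(arr), arr)
```

The detached tensor shares its storage with `t`, so the conversion does not copy the data.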

3. Implementing linear regression

Next, we use a small hand-made dataset to implement a simple linear regression with torch.

Suppose the basic model is y = wx + b, where w and b are both parameters. We use y = 3x + 0.8 to construct the data x and y, so after training the model's w and b should be close to 3 and 0.8.

- Prepare the data

- Calculate the predicted value

- Calculate the loss, set the gradients of the parameters to 0, and backpropagate

- Update the parameters

```python
import torch
import numpy as np
from matplotlib import pyplot as plt

# 1. Prepare the data (y = 3x + 0.8) and the parameters
x = torch.rand([50])
y = 3 * x + 0.8

w = torch.rand(1, requires_grad=True)
b = torch.rand(1, requires_grad=True)


def loss_fn(y, y_predict):
    loss = (y_predict - y).pow(2).mean()
    # Set the gradients to 0 before each backward pass
    for i in [w, b]:
        if i.grad is not None:
            i.grad.data.zero_()
    # [i.grad.data.zero_() for i in [w, b] if i.grad is not None]
    loss.backward()
    return loss.data


def optimize(learning_rate):
    # print(w.grad.data, w.data, b.data)
    w.data -= learning_rate * w.grad.data
    b.data -= learning_rate * b.grad.data


for i in range(3000):
    # 2. Calculate the predicted value
    y_predict = x * w + b

    # 3. Calculate the loss, set the parameter gradients to 0, backpropagate
    loss = loss_fn(y, y_predict)

    if i % 500 == 0:
        print(i, loss)

    # 4. Update parameters w and b
    optimize(0.01)

# Plot the predicted values against the true values at the end of training
predict = x * w + b  # predictions from the trained w and b
plt.scatter(x.data.numpy(), y.data.numpy(), c="r")
plt.plot(x.data.numpy(), predict.data.numpy())
plt.show()

print("w", w)
print("b", b)
```

The resulting plot looks like this:

[Figure: Linear regression 1.png, the training data as red points with the fitted line drawn through them]

Printing w and b gives:

```python
w tensor([2.9280], requires_grad=True)
b tensor([0.8372], requires_grad=True)
```

As you can see, w and b are already very close to the preset values 3 and 0.8.
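The same training loop can also be written without touching `.data`, by doing the update inside `torch.no_grad()`. The version below is a sketch of that alternative, not the code used in this article. The fixed seed and the name `learning_rate` are assumptions added so the run is repeatable.

```python
import torch

torch.manual_seed(0)  # assumption: fixed seed so repeated runs match

x = torch.rand(50)
y = 3 * x + 0.8

w = torch.rand(1, requires_grad=True)
b = torch.rand(1, requires_grad=True)
learning_rate = 0.01

for step in range(3000):
    # Forward pass and mean squared error
    loss = ((x * w + b - y) ** 2).mean()

    # Clear the gradients accumulated in the previous step
    for p in (w, b):
        if p.grad is not None:
            p.grad.zero_()

    loss.backward()

    # Update in place without recording the update in the graph
    with torch.no_grad():
        w -= learning_rate * w.grad
        b -= learning_rate * b.grad

print("w", w.item(), "b", b.item())
```

With the same learning rate and number of steps as above, w and b should again end up near 3 and 0.8. The exact digits depend on the random initialization.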

边栏推荐

- Linux server development, redis source code storage principle and data model

- The legend about reading the configuration file under SRC

- Real time monitoring of dog walking and rope pulling AI recognition helps smart city

- Qt学习27 应用程序中的主窗口

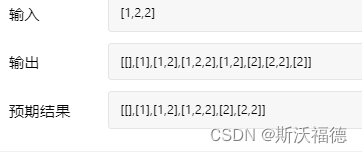

- Leetcode 90: subset II

- Bugku CTF daily one question chessboard with only black chess

- Recursive construction of maximum binary tree

- C language queue

- 通俗易懂单点登录SSO

- 2022茶艺师(初级)考试题模拟考试题库及在线模拟考试

猜你喜欢

随机推荐

buureservewp(2)

Leetcode 40: combined sum II

2022焊工(初级)判断题及在线模拟考试

[quickstart to Digital IC Validation] 15. Basic syntax for SystemVerilog Learning 2 (operator, type conversion, loop, Task / Function... Including practical exercises)

【数字IC验证快速入门】12、SystemVerilog TestBench(SVTB)入门

【数字IC验证快速入门】13、SystemVerilog interface 和 program 学习

Qt学习27 应用程序中的主窗口

【数字IC验证快速入门】11、Verilog TestBench(VTB)入门

Chip design data download

Linux Installation MySQL 8.0 configuration

JS复制图片到剪切板 读取剪切板

The charm of SQL optimization! From 30248s to 0.001s

Cnopendata geographical distribution data of religious places in China

ZCMU--1492: Problem D(C语言)

Empire CMS collection Empire template program general

Cnopendata list data of Chinese colleges and Universities

Find the mode in the binary search tree (use medium order traversal as an ordered array)

快解析内网穿透助力外贸管理行业应对多种挑战

调用 pytorch API完成线性回归

Linux server development, MySQL process control statement