Keras model.compile: loss functions and optimizers

2020-11-06 01:22:00 【Elementary school students in IT field】

Loss function

Overview

The loss function is the objective that the model optimizes, so it is also called the objective function or the optimization score function. In Keras, the loss argument of model.compile specifies which loss function to use. It can be given in two ways, either by name:

model.compile(loss='mean_squared_error', optimizer='sgd')

or as a function object:

from keras import losses

model.compile(loss=losses.mean_squared_error, optimizer='sgd')
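
As a rough illustration of why both forms are interchangeable, a string name can be thought of as a key that is looked up to obtain the same callable you could have passed directly. The sketch below is a toy model in plain Python, not Keras's actual code: the LOSSES table and the get_loss helper are hypothetical names introduced only for illustration, and mean_squared_error here is a hand-written stand-in that works on lists.

```python
def mean_squared_error(y_true, y_pred):
    """Mean of the squared differences over one sample's outputs."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical lookup table: the full name and its short alias
# both point at the same function object.
LOSSES = {
    "mean_squared_error": mean_squared_error,
    "mse": mean_squared_error,
}


def get_loss(identifier):
    """Return a loss callable from either a string name or a callable."""
    if callable(identifier):
        return identifier
    return LOSSES[identifier]


by_name = get_loss("mean_squared_error")
by_object = get_loss(mean_squared_error)

# Both ways of specifying the loss end up with the same function.
print(by_name is by_object)
print(by_name([1.0, 2.0], [1.0, 4.0]))
```

Passing the name is shorter, while passing the function object lets an editor or linter catch a misspelled name before the model is compiled.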

Available loss functions

The following loss (objective) functions are available:

mean_squared_error or mse

mean_absolute_error or mae

mean_absolute_percentage_error or mape

mean_squared_logarithmic_error or msle

squared_hinge

hinge

categorical_hinge

binary_crossentropy (also called logarithmic loss, logloss)

logcosh

categorical_crossentropy: also known as multi-class logarithmic loss. Note that when using this objective function, the labels must be converted to a binary (one-hot) matrix of shape (nb_samples, nb_classes).

sparse_categorical_crossentropy: same as above, but accepts sparse labels (integer class indices). Note that the labels must still have the same number of dimensions as the output, so you may need to add a dimension to the label data: np.expand_dims(y, -1)

kullback_leibler_divergence: the information gain from the predicted probability distribution Q to the true probability distribution P, used to measure the difference between the two distributions.

poisson: the mean of (predictions - targets * log(predictions))

cosine_proximity: the negative of the mean cosine similarity between the predictions and the true labels
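
The two label formats described for categorical_crossentropy and sparse_categorical_crossentropy can be illustrated in plain Python. This is a minimal sketch, not Keras's implementation: one_hot and the two loss functions below are hand-written stand-ins that operate on lists, and the EPS clipping value is an assumption. In Keras itself, keras.utils.to_categorical performs the one-hot conversion.

```python
import math

EPS = 1e-7  # assumed small constant that guards against log(0)


def one_hot(labels, nb_classes):
    """Turn integer labels into rows of shape (nb_samples, nb_classes)."""
    return [[1.0 if k == y else 0.0 for k in range(nb_classes)] for y in labels]


def categorical_crossentropy(y_true, y_pred):
    """Mean over samples of -sum_k t_k * log(p_k), with one-hot y_true."""
    per_sample = [
        -sum(t * math.log(max(p, EPS)) for t, p in zip(t_row, p_row))
        for t_row, p_row in zip(y_true, y_pred)
    ]
    return sum(per_sample) / len(per_sample)


def sparse_categorical_crossentropy(labels, y_pred):
    """Same quantity, but labels are integer class indices."""
    per_sample = [-math.log(max(p_row[y], EPS)) for y, p_row in zip(labels, y_pred)]
    return sum(per_sample) / len(per_sample)


labels = [0, 2, 1]  # sparse (integer) labels for three samples
probs = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.2, 0.5, 0.3],
]  # predicted class probabilities, one row per sample

dense = categorical_crossentropy(one_hot(labels, 3), probs)
sparse = sparse_categorical_crossentropy(labels, probs)

print(one_hot(labels, 3))
print(abs(dense - sparse) < 1e-12)  # the two formats give the same loss

# Pure-Python analogue of np.expand_dims(y, -1): shape (3,) becomes (3, 1).
print([[y] for y in labels])
```

The sparse form avoids building the one-hot matrix, which matters mostly when the number of classes is large.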

Loss function formulas

https://zhuanlan.zhihu.com/p/34667893
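
As a complement to the linked formulas, the descriptions of kullback_leibler_divergence, poisson and cosine_proximity in the list of available losses can be written out directly. The functions below are a plain-Python sketch for a single sample, based only on those descriptions. The EPS guard is an assumption, and Keras's own implementations operate on tensors and may differ in details such as clipping.

```python
import math

EPS = 1e-7  # assumed guard against log(0) and division by zero


def kullback_leibler_divergence(p_true, q_pred):
    """sum_k P_k * log(P_k / Q_k): divergence of Q (predicted) from P (true)."""
    return sum(
        p * math.log(max(p, EPS) / max(q, EPS)) for p, q in zip(p_true, q_pred)
    )


def poisson(targets, predictions):
    """Mean of (predictions - targets * log(predictions))."""
    terms = [p - t * math.log(p + EPS) for t, p in zip(targets, predictions)]
    return sum(terms) / len(terms)


def cosine_proximity(y_true, y_pred):
    """Negative cosine similarity; assumes neither vector is all zeros."""
    dot = sum(t * p for t, p in zip(y_true, y_pred))
    norm_t = math.sqrt(sum(t * t for t in y_true))
    norm_p = math.sqrt(sum(p * p for p in y_pred))
    return -dot / (norm_t * norm_p)


# Identical distributions have zero divergence; different ones are positive.
print(kullback_leibler_divergence([0.5, 0.5], [0.5, 0.5]))
print(kullback_leibler_divergence([0.5, 0.5], [0.9, 0.1]))

print(poisson([1.0, 2.0], [1.0, 2.0]))

# Vectors pointing the same way give the lowest (best) value of this loss.
print(cosine_proximity([1.0, 0.0], [2.0, 0.0]))
print(cosine_proximity([1.0, 0.0], [0.0, 3.0]))
```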

Binary classification: an error report

An error caused by the choice of loss function:

When using Keras for text classification, I kept running into the following error.

My labels are 0 or 1, but the error message said that a label could not be 1.

See: Received a label value of 1 which is outside the valid range of [0, 1) - Python, Keras

The problem was the loss function.

I had been using sparse_categorical_crossentropy;

changing it to binary_crossentropy solved the problem.
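
One plausible reading of this error, stated here as an assumption because the post does not show the model: the output layer had a single unit (for example, one sigmoid output). sparse_categorical_crossentropy treats the size of the last output dimension as the number of classes, so with one unit the only valid label is 0 and the valid range is [0, 1). binary_crossentropy instead compares a 0/1 label with a single probability. The sketch below mimics both behaviours in plain Python; check_sparse_label and binary_crossentropy are illustrative stand-ins, not Keras functions.

```python
import math

EPS = 1e-7  # assumed clipping constant to keep log() finite


def check_sparse_label(label, nb_outputs):
    """Sparse labels index the output units, so they must lie in [0, nb_outputs)."""
    if not 0 <= label < nb_outputs:
        raise ValueError(
            f"Received a label value of {label} which is outside "
            f"the valid range of [0, {nb_outputs})"
        )


def binary_crossentropy(label, prob):
    """-(y * log(p) + (1 - y) * log(1 - p)) for one 0/1 label."""
    p = min(max(prob, EPS), 1.0 - EPS)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


# With one output unit, label 1 is rejected by the sparse check...
try:
    check_sparse_label(1, nb_outputs=1)
except ValueError as err:
    print(err)

# ...while two output units (one per class) would accept it.
check_sparse_label(1, nb_outputs=2)

# binary_crossentropy handles labels 0 and 1 with a single probability.
print(binary_crossentropy(1, 0.9))
print(binary_crossentropy(0, 0.9))
```

Under the same assumption, another fix would be to keep sparse_categorical_crossentropy and give the output layer two softmax units, one per class.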

Optimizer

https://www.cnblogs.com/xiaobingqianrui/p/10756046.html

Copyright notice

This article was written by [Elementary school students in IT field]. Please include a link to the original when reposting. Thank you.

边栏推荐

- ipfs正舵者Filecoin落地正当时 FIL币价格破千来了

- Face to face Manual Chapter 16: explanation and implementation of fair lock of code peasant association lock and reentrantlock

- 中小微企业选择共享办公室怎么样?

- 熬夜总结了报表自动化、数据可视化和挖掘的要点,和你想的不一样

- 合约交易系统开发|智能合约交易平台搭建

- 一篇文章带你了解CSS3图片边框

- 10 easy to use automated testing tools

- PHP应用对接Justswap专用开发包【JustSwap.PHP】

- Do not understand UML class diagram? Take a look at this edition of rural love class diagram, a learn!

- 加速「全民直播」洪流,如何攻克延时、卡顿、高并发难题?

猜你喜欢

How do the general bottom buried points do?

合约交易系统开发|智能合约交易平台搭建

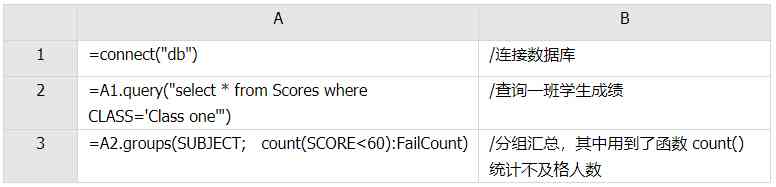

Examples of unconventional aggregation

在大规模 Kubernetes 集群上实现高 SLO 的方法

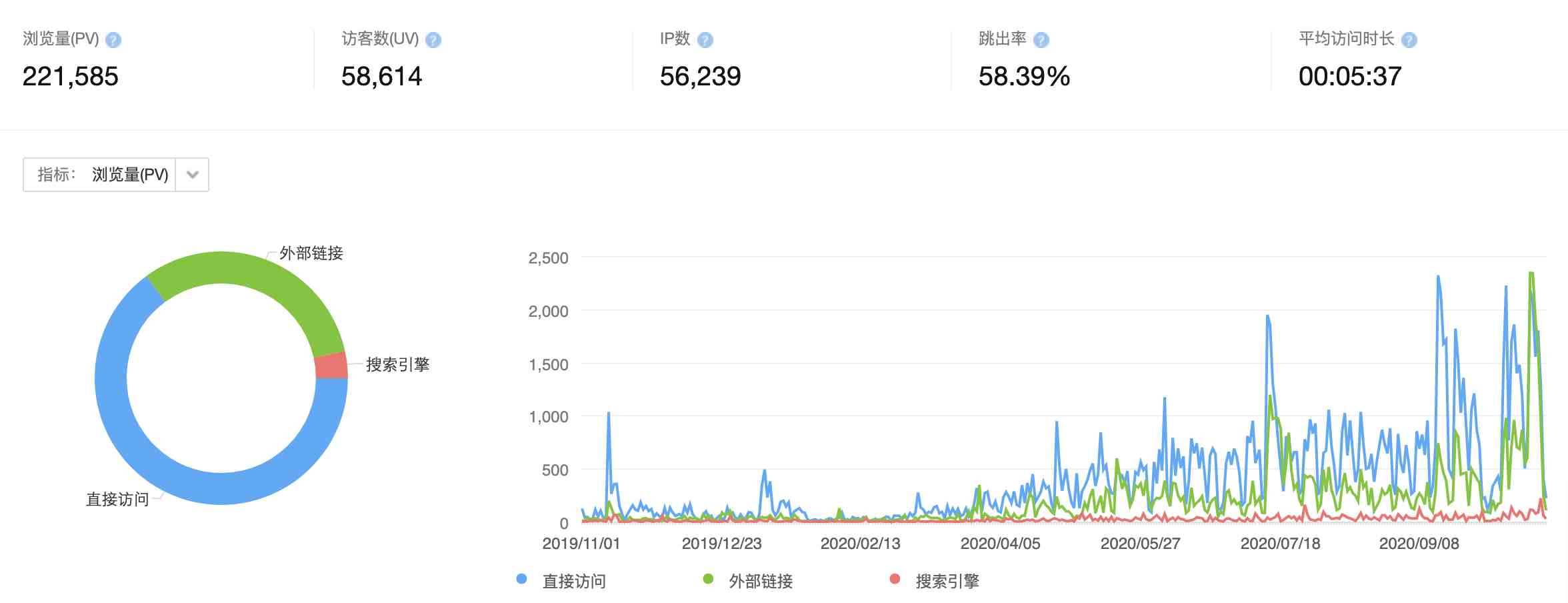

速看!互联网、电商离线大数据分析最佳实践!(附网盘链接)

I think it is necessary to write a general idempotent component

每个前端工程师都应该懂的前端性能优化总结:

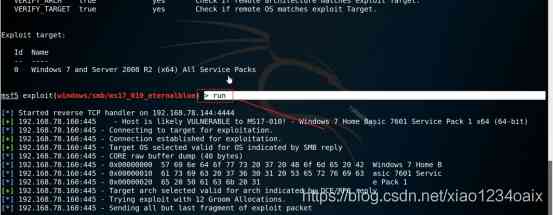

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

Did you blog today?

中小微企业选择共享办公室怎么样?

随机推荐

Python自动化测试学习哪些知识?

Python + appium automatic operation wechat is enough

Working principle of gradient descent algorithm in machine learning

大数据应用的重要性体现在方方面面

This article will introduce you to jest unit test

PN8162 20W PD快充芯片,PD快充充电器方案

Not long after graduation, he earned 20000 yuan from private work!

6.1.1 handlermapping mapping processor (1) (in-depth analysis of SSM and project practice)

Python download module to accelerate the implementation of recording

How to select the evaluation index of classification model

快快使用ModelArts,零基础小白也能玩转AI!

[C / C + + 1] clion configuration and running C language

一篇文章带你了解CSS3图片边框

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

A debate on whether flv should support hevc

PHP应用对接Justswap专用开发包【JustSwap.PHP】

Vuejs development specification

多机器人行情共享解决方案

Aprelu: cross border application, adaptive relu | IEEE tie 2020 for machine fault detection

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】