Design ideas for a high-concurrency, high-traffic seckill (flash sale) system

2022-07-07 06:12:00 【Life goes on and battles go on】

Concept: what is a seckill?

Seckill (flash sale) scenarios typically appear in e-commerce promotions, or when grabbing train tickets on the 12306 website around holidays. For scarce or special products, an e-commerce site usually runs a limited sale at an agreed time. Because these products are special, they attract a large number of users, all of whom rush to buy on the seckill page at the agreed time.

Characteristics of seckill scenarios

1) A large number of users rush to buy at the same time, so the site's traffic surges instantly.

2) The number of requests is usually far greater than the stock, so only a small number of users succeed.

3) The business flow is relatively simple: generally, place an order and decrement the inventory.

Seckill architecture design concepts

Rate limiting: Since only a small number of users can succeed, most of the traffic should be throttled, and only a small portion should be allowed to reach the backend servers.

Peak shaving: A large number of users pour into a seckill system at once, so there is a very high instantaneous peak when the sale starts. A high instantaneous peak is a major cause of system collapse, so turning the instantaneous burst into steady traffic over a period of time is an important idea in seckill system design. Common ways to shave the peak are caching and message-oriented middleware.

Asynchronous processing: A seckill system is a high-concurrency system, and asynchronous processing can greatly increase the concurrency it can handle. Asynchronous processing is in fact one way of implementing peak shaving.

Memory cache: The biggest bottleneck of a seckill system is usually database reads and writes. Because they involve disk I/O, they are comparatively slow. If some data or business logic can be moved into an in-memory cache, efficiency improves greatly.

Scalability: To support more users and more concurrency, it is best to design the system to be elastic and scalable, so that when traffic arrives you can simply add machines. Taobao and JD, for example, add a large number of machines during the Double 11 event to cope with the trading peak.

Design ideas

Intercept requests upstream to reduce downstream pressure: A seckill system sees a very large number of concurrent requests, but very few of them actually succeed. If requests are not intercepted at the front of the system, the database may suffer read/write lock contention and even deadlocks, requests eventually time out, and the system may even crash.

Make the most of the cache: Using a cache can greatly improve the system's read and write speed.

Message queue: A message queue can shave the peak by absorbing a large number of concurrent requests. This is also an asynchronous process: the background services pull request messages from the queue and process them at a rate that matches their own processing capacity.
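The peak-shaving idea can be illustrated with a small in-process sketch. It is not the article's implementation: Python's standard `queue.Queue` stands in for real message middleware, and the function name `run_flash_sale` and its parameters are hypothetical names chosen for this example. A burst of requests is written to a bounded queue, and a single consumer drains it at its own pace and decrements the stock.

```python
import queue
import threading


def run_flash_sale(num_requests: int, stock: int, queue_capacity: int) -> dict:
    """Simulate a request burst buffered by a bounded queue (hypothetical helper)."""
    requests = queue.Queue(maxsize=queue_capacity)
    results = {"success": 0, "sold_out": 0, "rejected": 0}
    remaining = stock

    def consumer() -> None:
        # The only code path that touches the stock, so no lock is needed here.
        nonlocal remaining
        while True:
            user_id = requests.get()
            if user_id is None:  # sentinel: no more requests
                break
            if remaining > 0:
                remaining -= 1
                results["success"] += 1
            else:
                results["sold_out"] += 1

    worker = threading.Thread(target=consumer)
    worker.start()

    # The burst: producers never wait; a full queue means the request is dropped early.
    for user_id in range(num_requests):
        try:
            requests.put_nowait(user_id)
        except queue.Full:
            results["rejected"] += 1

    requests.put(None)  # blocks until there is room, then tells the consumer to stop
    worker.join()
    return results


if __name__ == "__main__":
    print(run_flash_sale(num_requests=1000, stock=10, queue_capacity=1000))
```

Whatever the queue capacity, the stock is only ever decremented by the consumer, so the number of successful requests cannot exceed the stock. A smaller capacity rejects more requests up front, which is the upstream interception described earlier.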

Front-end solution

Browser side (JS):

1) Static pages: Make all static elements on the event page static, keep dynamic elements to a minimum, and use a CDN to absorb the peak.

2) Prevent duplicate submission: Gray out the button after the user submits, so the request cannot be submitted again.

3) Per-user rate limiting: Within a given period of time a user may submit only one request; for example, rate limit by IP.

Back end solution

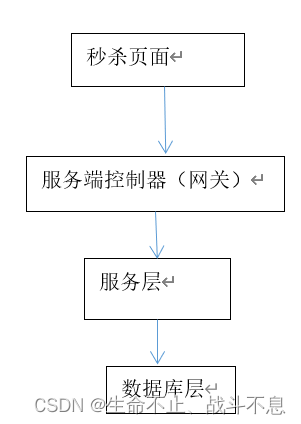

Server controller layer (gateway layer)

Limit access frequency per UID (user ID): The browser-side measures above intercept ordinary requests, but they cannot stop malicious requests, attacks, or plug-ins that bypass the page. The server control layer should therefore limit the access frequency for each UID.
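A per-UID frequency limit could look roughly like the sliding-window sketch below. The class name `UidRateLimiter`, its parameters, and the injectable `clock` are assumptions made for this example; the article does not prescribe an algorithm. The sketch keeps its counters in one process's memory, so a multi-node gateway would need to keep them in a shared store instead.

```python
import time
from collections import defaultdict, deque


class UidRateLimiter:
    """Sliding-window limiter: at most max_requests per uid per window (hypothetical)."""

    def __init__(self, max_requests: int, window_seconds: float, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = defaultdict(deque)  # uid -> timestamps of accepted requests

    def allow(self, uid: str) -> bool:
        now = self.clock()
        hits = self._hits[uid]
        # Forget accepted requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject before reaching the service layer
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = UidRateLimiter(max_requests=1, window_seconds=5.0)
    print(limiter.allow("user-42"))  # first request in the window
    print(limiter.allow("user-42"))  # immediate repeat from the same uid
```

Rejected requests are not recorded, so a client that keeps hammering the endpoint does not extend its own lockout; whether that is the desired policy is a design choice.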

Service layer

The measures above intercept only part of the requests. When the number of seckill users is very large, the number of requests reaching the service layer is still large even if each user sends only one. For example, if 1,000,000 users rush to buy 100 mobile phones at the same time, the service layer faces at least 1,000,000 concurrent requests.

1) Use a message queue to buffer requests: Since the service layer knows there are only 100 phones, there is no need to pass all 1,000,000 requests to the database. The requests can be written to a message queue and buffered there, and the database layer subscribes to the messages and decrements the inventory. A request whose inventory decrement succeeds returns "seckill succeeded"; one that fails returns "seckill ended".

2) Use a cache to serve read requests: Ticketing businesses such as 12306 are typical read-heavy, write-light workloads in which most requests are queries, so a cache can take much of the load off the database.

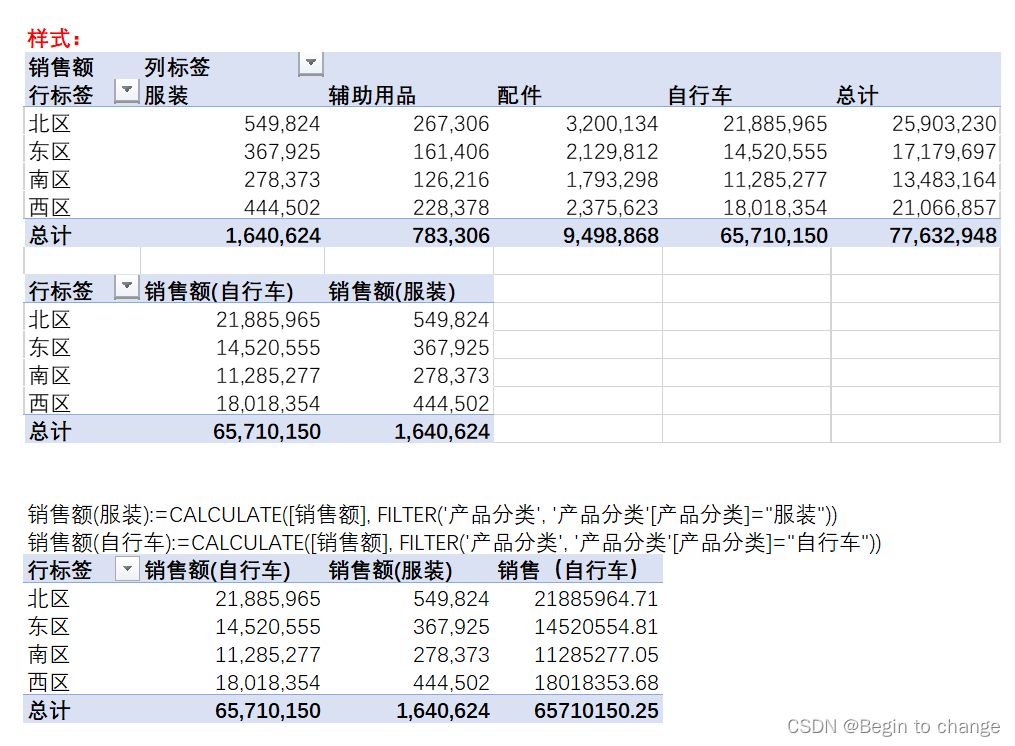

3) Use a cache to handle write requests: The cache can also handle writes. For example, the inventory data in the database can be migrated to a Redis cache, all inventory decrements are performed in Redis, and a background process then synchronizes the users' seckill requests recorded in Redis to the database.
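The write-through-cache idea in item 3 can be sketched as follows. This is an in-process illustration only: the `StockCache` class stands in for the Redis-held inventory, the plain `database` dictionary stands in for the real database, and all names are hypothetical. Stock is decremented under a lock in memory, and a separate step later flushes the accepted requests to the database.

```python
import threading


class StockCache:
    """In-memory stand-in for inventory held in a cache (hypothetical)."""

    def __init__(self, item_id: str, stock: int):
        self.item_id = item_id
        self._stock = stock
        self._pending = []  # accepted user ids not yet written to the database
        self._lock = threading.Lock()

    def try_reserve(self, user_id: int) -> bool:
        # Check and decrement in one critical section so stock never goes below zero.
        with self._lock:
            if self._stock <= 0:
                return False
            self._stock -= 1
            self._pending.append(user_id)
            return True

    def drain_pending(self) -> list:
        with self._lock:
            batch, self._pending = self._pending, []
            return batch


def sync_to_database(cache: StockCache, database: dict) -> int:
    """Background step: persist accepted requests and the matching stock change."""
    batch = cache.drain_pending()
    for user_id in batch:
        database["orders"].append((cache.item_id, user_id))
    database["stock"][cache.item_id] -= len(batch)
    return len(batch)


if __name__ == "__main__":
    cache = StockCache("phone", stock=100)
    database = {"stock": {"phone": 100}, "orders": []}
    accepted = [uid for uid in range(1000) if cache.try_reserve(uid)]
    print(len(accepted), sync_to_database(cache, database), database["stock"]["phone"])
```

The database sees one batched update per sync instead of one write per request, which is the point of letting the cache absorb the writes. What happens to pending requests if the cache is lost before a sync is not addressed by this sketch.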

Database layer

The database layer is the most fragile layer. Application designs generally intercept requests upstream so that the database layer only handles the access requests that are within its capacity. The queue and cache introduced in the service layer above are what let the underlying database rest easy.

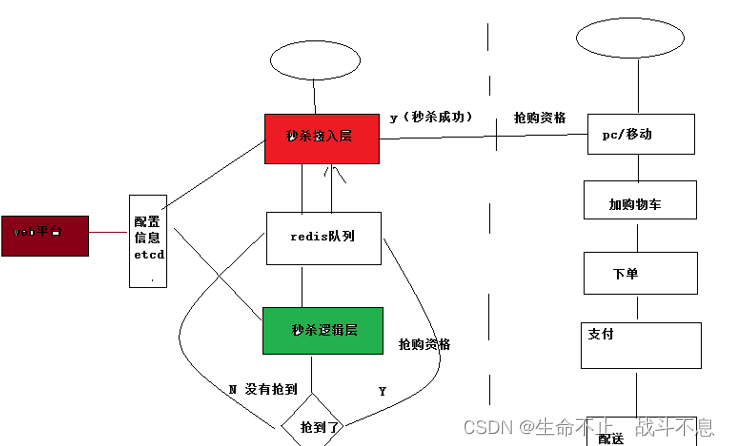

Case study: a simple seckill system built with message-oriented middleware and a cache

Redis is an in-memory store commonly used as a distributed cache, and it supports multiple data structures. We can use Redis to build a capable seckill system quite easily.

We can use one of the simplest Redis data structures, the list. The key identifies the seckill item, each value is a user ID, and the stock quantity is the maximum number of entries allowed. For each user's seckill attempt, we use RPUSH key value to insert the request; once the number of inserted requests reaches the maximum, all subsequent inserts are stopped.

Then we can start several worker threads in the background that use LPOP key to read the IDs of the seckill winners, and then perform the final order placement and inventory decrement in the database.

Of course, Redis can be replaced by message middleware such as ActiveMQ or RabbitMQ. Caching and message-oriented middleware can also be combined: the caching system receives and records user requests, and the message middleware synchronizes the requests in the cache to the database.
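The RPUSH/LPOP flow can be sketched without a Redis server as below. `BoundedWinnerList` is a hypothetical in-process stand-in for the Redis list, not a Redis client, and `process_winners` plays the role of a background worker. The sketch counts accepted inserts separately from the current list length, because workers popping entries would otherwise free up room and let more winners in than there is stock. With a real Redis server the limit check and the push would likewise need to be atomic, for example inside a server-side script; that part is not shown here.

```python
import threading
from collections import deque


class BoundedWinnerList:
    """In-process stand-in for a Redis list capped at the stock quantity (hypothetical)."""

    def __init__(self, max_winners: int):
        self.max_winners = max_winners
        self._accepted = 0  # total inserts ever accepted; never decreases
        self._items = deque()
        self._lock = threading.Lock()

    def rpush_if_room(self, user_id: int) -> bool:
        # Mirrors "RPUSH key value" plus the stop-at-maximum rule, done atomically.
        with self._lock:
            if self._accepted >= self.max_winners:
                return False
            self._accepted += 1
            self._items.append(user_id)
            return True

    def lpop(self):
        # Mirrors "LPOP key": returns the oldest winner, or None when empty.
        with self._lock:
            return self._items.popleft() if self._items else None


def process_winners(winners: BoundedWinnerList, database: dict) -> int:
    """Worker loop: place the order and decrement stock for each winner."""
    processed = 0
    while True:
        user_id = winners.lpop()
        if user_id is None:
            break
        database["orders"].append(user_id)
        database["stock"] -= 1
        processed += 1
    return processed


if __name__ == "__main__":
    winners = BoundedWinnerList(max_winners=100)
    database = {"stock": 100, "orders": []}
    accepted = sum(winners.rpush_if_room(uid) for uid in range(1000))
    print(accepted, process_winners(winners, database), database["stock"])
```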

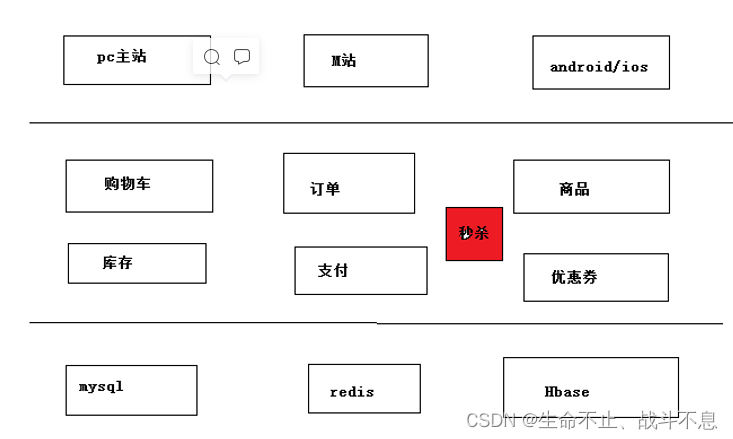

General architecture of an e-commerce website

Seckill, first version

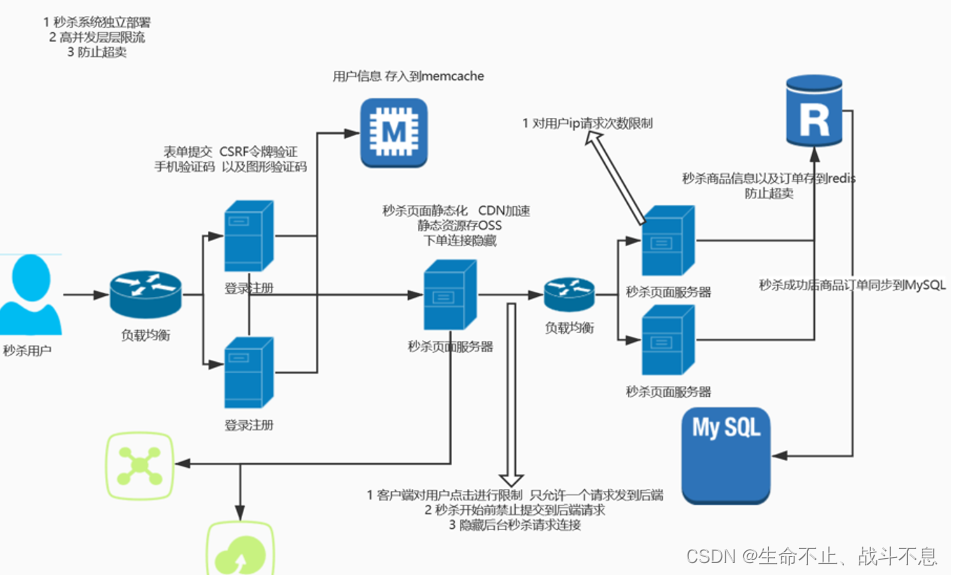

Seckill system architecture diagram

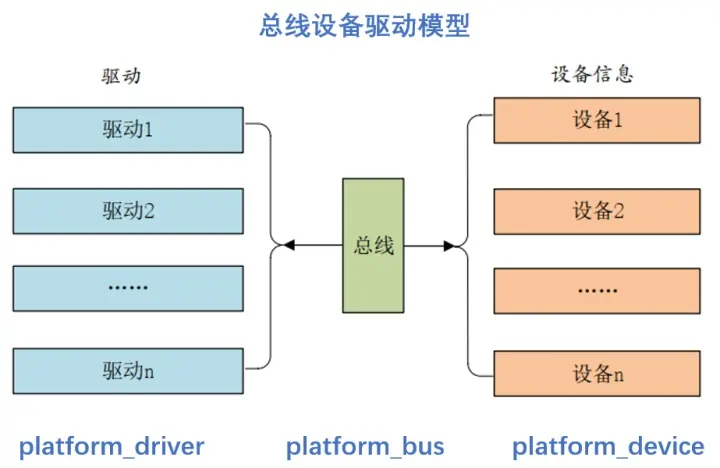

The seckill system needs to be independent

Seckill problems

Impact on existing business (deploy the seckill service separately)

High traffic and high concurrency (handle requests in a Redis cache; process order requests asynchronously through MQ)

Sudden increase in network bandwidth (increase bandwidth during the seckill event; cache static files with CDN + OSS)

Overselling (cache the product quantity in a Redis queue to prevent overselling)

Scalpers (limit the number of requests per IP)

Solutions

Separate deployment

Rate limiting and circuit breaking

Cache

CDN + OSS

Message queue

边栏推荐

- Cloud acceleration helps you effectively solve attack problems!

- [InstallShield] Introduction

- 【FPGA教程案例14】基于vivado核的FIR滤波器设计与实现

- Sequential storage of stacks

- Cf:c. column swapping [sort + simulate]

- 【GNN】图解GNN: A gentle introduction(含视频)

- 那些自损八百的甲方要求

- Mac version PHP installed Xdebug environment (M1 version)

- The boss always asks me about my progress. Don't you trust me? (what do you think)

- 每秒10W次分词搜索,产品经理又提了一个需求!!!(收藏)

猜你喜欢

随机推荐

那些自损八百的甲方要求

@Detailed differences between pathvariable and @requestparam

[云原生]微服务架构是什么?

如果不知道这4种缓存模式,敢说懂缓存吗?

Swagger3 configuration

10W word segmentation searches per second, the product manager raised another demand!!! (Collection)

Flask1.1.4 Werkzeug1.0.1 源碼分析:啟動流程

Storage of dental stem cells (to be continued)

PTA 天梯赛练习题集 L2-003 月饼 测试点2,测试点3分析

vim映射大K

CMD permanently delete specified folders and files

[InstallShield] Introduction

EMMC print cqhci: timeout for tag 10 prompt analysis and solution

Rk3399 platform development series explanation (WiFi) 5.53, hostapd (WiFi AP mode) configuration file description

Go语学习笔记 - gorm使用 - 原生sql、命名参数、Rows、ToSQL | Web框架Gin(九)

Industrial Finance 3.0: financial technology of "dredging blood vessels"

Jstat of JVM command: View JVM statistics

yarn入门(一篇就够了)

On the discrimination of "fake death" state of STC single chip microcomputer

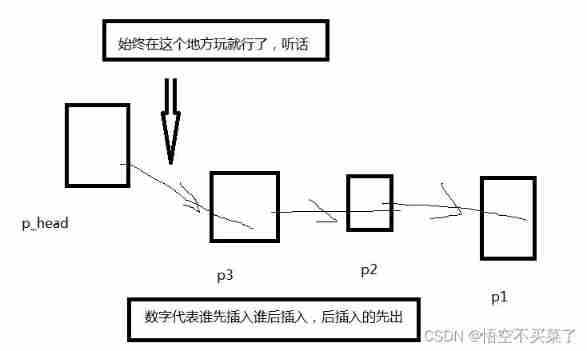

Chain storage of stack