
MapReduce example (VI): inverted index

2022-07-06 09:33:00 【Laugh at Fengyun Road】

Implementing an inverted index with MapReduce

Hello everyone, I am Fengyun. Welcome to my blog or my WeChat official account 【Laugh at Fengyun Road】. In the days to come, let's learn big data technologies together, work hard together, and become better versions of ourselves!

The principle of the inverted index

- The inverted index is the most commonly used data structure in document retrieval systems and is widely used in full-text search engines.

- It stores a mapping from a word (or phrase) to its locations in a document or a group of documents. In other words, it provides a way to find documents by their content. Because it does not go from a document to the content that document contains but does the opposite, it is called an inverted index (Inverted Index).

- To implement an inverted index, the main pieces of information of interest are the word, the document URL, and the word frequency.

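As a minimal illustration of the structure described above, here is a plain-Java sketch with no Hadoop dependency. The class name `InvertedIndexSketch`, the document names, and the sample words are all made up for this example. It only shows the shape of the mapping (word to documents, each with a frequency), not how the MapReduce job builds it.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (no Hadoop): word -> (document -> frequency).
// Document names and contents are hypothetical sample data.
final class InvertedIndexSketch {

    // For every word, record the documents that contain it and how often.
    static Map<String, Map<String, Integer>> build(Map<String, List<String>> docs) {
        Map<String, Map<String, Integer>> index = new TreeMap<>();
        for (Map.Entry<String, List<String>> doc : docs.entrySet()) {
            for (String word : doc.getValue()) {
                index.computeIfAbsent(word, w -> new TreeMap<>())
                     .merge(doc.getKey(), 1, Integer::sum);
            }
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, List<String>> docs = new LinkedHashMap<>();
        docs.put("doc1", Arrays.asList("hadoop", "mapreduce", "hadoop"));
        docs.put("doc2", Arrays.asList("mapreduce", "index"));
        // prints {hadoop={doc1=2}, index={doc2=1}, mapreduce={doc1=1, doc2=1}}
        System.out.println(build(docs));
    }
}
```

Looking up a word in the resulting map returns the documents that contain it, which is the "content to documents" direction the list above describes.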

Implementation approach

The design of the inverted index follows the stages of a MapReduce job:

(1) The Map process

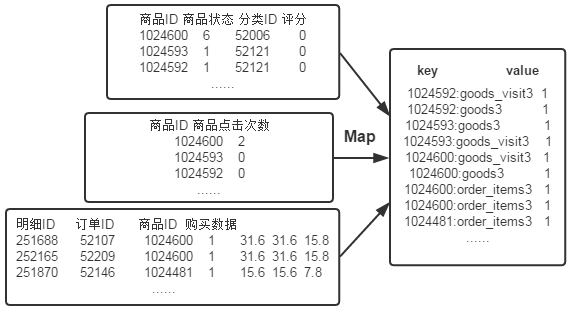

First, the default TextInputFormat class processes the input files and yields, for each line of text, its offset and its content. The Map process must then parse each input <key,value> pair to obtain the three pieces of information the inverted index needs: the word, the document URL, and the word frequency. The Map operation preprocesses the data that was read, as shown in the figure.

There are two problems:

First, a <key,value> pair can hold only two values. Without a custom Hadoop data type, two of the three values have to be combined into one, which then serves as either the key or the value.

Second, a single Reduce process cannot both count word frequencies and generate the document list, so a Combine process must be added to do the word frequency counting.

Here the goods ID and the URL together form the key (for example "1024600:goods3"), and the word frequency (the number of occurrences of the goods ID) is the value. The advantage is that the Map-side sort built into the MapReduce framework collects the counts for the same word in the same document into one list and passes it to the Combine process, which then works much like WordCount.

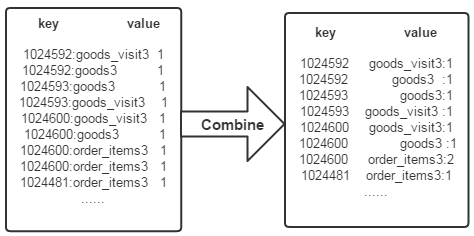
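The key composition described above can be sketched in plain Java, without Hadoop types. The helper `compositeKey`, its parameters, and the sample input line are assumptions made for this illustration. Only the "1024600:goods3" key format and the HDFS path come from the article.

```java
// Plain-Java sketch of the map-side key composition (no Hadoop types).
final class MapKeySketch {

    // Builds "<goods ID>:<file name>" from one tab-separated input line.
    // idColumn is the index of the goods-ID field; marker is the text at
    // which the file name starts inside the full path.
    static String compositeKey(String line, String path, int idColumn, String marker) {
        String[] fields = line.split("\t");
        String fileName = path.substring(path.indexOf(marker));
        return fields[idColumn] + ":" + fileName;
    }

    public static void main(String[] args) {
        // The content of this sample line is hypothetical.
        String key = compositeKey("1024600\tfieldB\tfieldC",
                "hdfs://localhost:9000/mymapreduce9/in/goods3", 0, "goods");
        // The mapper would emit this key with the value "1":
        // prints 1024600:goods3, a tab, then 1
        System.out.println(key + "\t" + 1);
    }
}
```

Packing two of the three values into the key is what lets the framework's sort group every occurrence of the same goods ID in the same file.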

(2) The Combine process

After the map method has run, the Combine process sums the values that share a key, which gives the frequency of a word in a document, as shown in the figure. If this output were used directly as the input to the Reduce process, the Shuffle process would run into a problem: all records for the same word (each made up of the word, the URL, and the word frequency) should be handed to the same Reducer, but the current key does not guarantee this. The key and the value therefore have to be changed. This time the word (goods ID) becomes the key, and the URL together with the word frequency becomes the value (for example "goods3:1"). The intended advantage is that the framework's default HashPartitioner class can then complete the Shuffle process and send all records for the same word to the same Reducer.

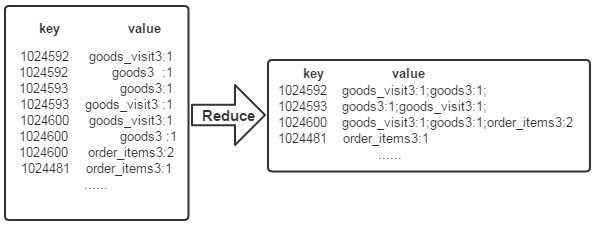
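The summing and re-keying step described above can be sketched in plain Java. The class and method names are hypothetical, and the input values are sample data. The sketch only mirrors the transformation from ("word:url", list of "1") to ("word", "url:count").

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch of the Combine step (no Hadoop types).
final class CombineSketch {

    // Sums the values for one composite key and re-keys the record:
    // "word:url" + ["1", "1", ...]  ->  { "word", "url:count" }
    static String[] combine(String compositeKey, List<String> values) {
        int sum = 0;
        for (String v : values) {
            sum += Integer.parseInt(v);
        }
        int split = compositeKey.indexOf(':');
        String word = compositeKey.substring(0, split);
        String urlAndCount = compositeKey.substring(split + 1) + ":" + sum;
        return new String[] { word, urlAndCount };
    }

    public static void main(String[] args) {
        String[] out = combine("1024600:goods3", Arrays.asList("1", "1", "1"));
        // prints 1024600 -> goods3:3
        System.out.println(out[0] + " -> " + out[1]);
    }
}
```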

(3) The Reduce process

After the two processes above, the Reduce process only needs to combine all values that share a key into the format required by the inverted index file. The rest can be left to the MapReduce framework.
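A minimal plain-Java sketch of this last step follows. The class name and the sample values are made up. The ";" separator matches the one used in the article's reducer code.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch of the Reduce step (no Hadoop types).
final class ReduceSketch {

    // Joins all "url:count" values of one word into a single posting list.
    static String reduce(List<String> values) {
        StringBuilder list = new StringBuilder();
        for (String v : values) {
            list.append(v).append(';');
        }
        return list.toString();
    }

    public static void main(String[] args) {
        // Hypothetical values for one goods ID
        String postings = reduce(Arrays.asList("goods3:1", "goods_visit3:2"));
        // prints goods3:1;goods_visit3:2;
        System.out.println(postings);
    }
}
```

In a real job the order of the values inside one reduce call is not guaranteed, so the entries of the list may appear in a different order.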

Writing the code

Map code

The mapper implements the Map process described above. TextInputFormat supplies the offset and content of each line, and the map method extracts the word (goods ID) and the document URL (file name) from it. Because a <key,value> pair can hold only two values and no custom Hadoop data type is used, the goods ID and the file name are combined into the key, and the word frequency "1" is the value. For files whose path contains "goods" the ID is taken from the first tab-separated field, and for files whose path contains "order" it is taken from the third. The actual word frequency counting is left to the Combine process, since one Reduce process cannot both count frequencies and build the document list.

    public static class doMapper extends Mapper<Object, Text, Text, Text> {
        public static Text myKey = new Text();   // composite key: goods ID + ":" + file name
        public static Text myValue = new Text(); // word frequency, always "1" at this stage
        // private FileSplit filePath;           // would hold the split object (unused)

        @Override // implements the map function
        protected void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            // Full path of the file that the current split belongs to
            String filePath = ((FileSplit) context.getInputSplit()).getPath().toString();
            if (filePath.contains("goods")) {
                // goods3 and goods_visit3: the goods ID is the first field
                String val[] = value.toString().split("\t");
                int splitIndex = filePath.indexOf("goods");
                myKey.set(val[0] + ":" + filePath.substring(splitIndex));
            } else if (filePath.contains("order")) {
                // order_items3: the goods ID is the third field
                String val[] = value.toString().split("\t");
                int splitIndex = filePath.indexOf("order");
                myKey.set(val[2] + ":" + filePath.substring(splitIndex));
            }
            myValue.set("1");
            context.write(myKey, myValue);
        }
    }

Combiner code

After the map method has run, the Combine process sums the values that share a key to obtain the frequency of a word in a document. Its output cannot be passed to the Reduce process unchanged, because all records for the same word should reach the same Reducer and the composite key does not guarantee that. The combiner therefore re-keys each record: the word becomes the key, and the URL plus the word frequency becomes the value, with the aim of letting the framework's default HashPartitioner class send all records for the same word to the same Reducer.

Two caveats apply to this design. Hadoop treats a combiner as an optional optimization and may run it zero, one, or several times on a map output, so a combiner that changes the key only gives the intended result when it runs exactly once. In addition, Hadoop assigns each record to a partition from the map output key before the combiner runs, so records for one word are only guaranteed to meet in the same Reducer when the job uses a single reduce task, which is the default.

    public static class doCombiner extends Reducer<Text, Text, Text, Text> {
        public static Text myK = new Text();
        public static Text myV = new Text();

        @Override // implements the reduce function
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Count the word frequency
            int sum = 0;
            for (Text value : values) {
                sum += Integer.parseInt(value.toString());
            }
            int mysplit = key.toString().indexOf(":");
            // New key: the word; new value: URL + ":" + word frequency
            myK.set(key.toString().substring(0, mysplit));
            myV.set(key.toString().substring(mysplit + 1) + ":" + sum);
            context.write(myK, myV);
        }
    }
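Hadoop does not guarantee how many times a combiner runs, and it picks the partition from the key the mapper emits. One alternative, which is not the article's code, is to emit the word as the key from the start with "url:1" as the value, and to merge values with a function whose input and output share one format. The plain-Java sketch below illustrates that idea under these assumptions. All names are hypothetical, and `String.hashCode` stands in for `Text.hashCode`, so the partition numbers differ from a real job even though the formula is the one used by the default HashPartitioner.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Alternative sketch, not the article's code: values keep the "url:count"
// format before and after merging, so merging can be applied repeatedly.
final class MergePostingsSketch {

    // Sums the counts per URL. Usable as combiner logic and as reducer logic.
    static List<String> merge(List<String> postings) {
        Map<String, Integer> perUrl = new TreeMap<>();
        for (String p : postings) {
            int split = p.lastIndexOf(':');
            perUrl.merge(p.substring(0, split),
                    Integer.parseInt(p.substring(split + 1)), Integer::sum);
        }
        List<String> merged = new ArrayList<>();
        for (Map.Entry<String, Integer> e : perUrl.entrySet()) {
            merged.add(e.getKey() + ":" + e.getValue());
        }
        return merged;
    }

    // Formula of Hadoop's default HashPartitioner, applied to a String key.
    static int partition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        List<String> mapOutput = Arrays.asList("goods3:1", "goods3:1", "order_items3:1");
        List<String> once = merge(mapOutput);
        List<String> twice = merge(once);
        System.out.println(once);               // prints [goods3:2, order_items3:1]
        System.out.println(once.equals(twice)); // prints true
    }
}
```

Because the key is the word at every stage, the partition depends only on the word, and running the merge zero, one, or several times before the final reduce leaves the result unchanged.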

Reduce code

After the two processes above, the Reduce process only needs to combine all values that share a key into the format required by the inverted index file. The rest can be left to the MapReduce framework.

    public static class doReducer extends Reducer<Text, Text, Text, Text> {
        public static Text myK = new Text();
        public static Text myV = new Text();

        @Override // implements the reduce function
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Generate the document list: "url:count;url:count;..."
            String myList = new String();
            for (Text value : values) {
                myList += value.toString() + ";";
            }
            myK.set(key);
            myV.set(myList);
            context.write(myK, myV);
        }
    }

Complete code

    package mapreduce;

    import java.io.IOException;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class MyIndex {
        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            // Configure the job: mapper, combiner, reducer, and output types
            Job job = Job.getInstance();
            job.setJobName("InversedIndexTest");
            job.setJarByClass(MyIndex.class);
            job.setMapperClass(doMapper.class);
            job.setCombinerClass(doCombiner.class);
            job.setReducerClass(doReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);
            // Three input files and one output directory on HDFS
            Path in1 = new Path("hdfs://localhost:9000/mymapreduce9/in/goods3");
            Path in2 = new Path("hdfs://localhost:9000/mymapreduce9/in/goods_visit3");
            Path in3 = new Path("hdfs://localhost:9000/mymapreduce9/in/order_items3");
            Path out = new Path("hdfs://localhost:9000/mymapreduce9/out");
            FileInputFormat.addInputPath(job, in1);
            FileInputFormat.addInputPath(job, in2);
            FileInputFormat.addInputPath(job, in3);
            FileOutputFormat.setOutputPath(job, out);
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

        // Emits ("goods ID:file name", "1") for every input line
        public static class doMapper extends Mapper<Object, Text, Text, Text> {
            public static Text myKey = new Text();
            public static Text myValue = new Text();
            // private FileSplit filePath;

            @Override
            protected void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                String filePath = ((FileSplit) context.getInputSplit()).getPath().toString();
                if (filePath.contains("goods")) {
                    String val[] = value.toString().split("\t");
                    int splitIndex = filePath.indexOf("goods");
                    myKey.set(val[0] + ":" + filePath.substring(splitIndex));
                } else if (filePath.contains("order")) {
                    String val[] = value.toString().split("\t");
                    int splitIndex = filePath.indexOf("order");
                    myKey.set(val[2] + ":" + filePath.substring(splitIndex));
                }
                myValue.set("1");
                context.write(myKey, myValue);
            }
        }

        // Sums the counts per composite key and re-keys to ("goods ID", "file name:count")
        public static class doCombiner extends Reducer<Text, Text, Text, Text> {
            public static Text myK = new Text();
            public static Text myV = new Text();

            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                for (Text value : values) {
                    sum += Integer.parseInt(value.toString());
                }
                int mysplit = key.toString().indexOf(":");
                myK.set(key.toString().substring(0, mysplit));
                myV.set(key.toString().substring(mysplit + 1) + ":" + sum);
                context.write(myK, myV);
            }
        }

        // Joins all "file name:count" values of one goods ID into a ";"-separated list
        public static class doReducer extends Reducer<Text, Text, Text, Text> {
            public static Text myK = new Text();
            public static Text myV = new Text();

            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                String myList = new String();
                for (Text value : values) {
                    myList += value.toString() + ";";
                }
                myK.set(key);
                myV.set(myList);
                context.write(myK, myV);
            }
        }
    }
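To check the expected shape of the output without a cluster, the three stages can be simulated locally in plain Java. This is only a sketch under stated assumptions: the class `LocalIndexSimulation` is hypothetical, the goods IDs are invented sample data, and the input is already reduced to one goods ID per record. Only the file names and the output format follow the article.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hadoop-free simulation of the job: map -> combine -> shuffle -> reduce.
final class LocalIndexSimulation {

    // Input: file name -> goods IDs found in that file (one per record).
    static Map<String, String> run(Map<String, List<String>> idsPerFile) {
        // Map + combine: count every "id:file" composite key.
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<String>> file : idsPerFile.entrySet()) {
            for (String id : file.getValue()) {
                counts.merge(id + ":" + file.getKey(), 1, Integer::sum);
            }
        }
        // Re-key to id -> "file:count" and group by id (the shuffle).
        Map<String, List<String>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            int split = e.getKey().indexOf(':');
            String id = e.getKey().substring(0, split);
            String posting = e.getKey().substring(split + 1) + ":" + e.getValue();
            grouped.computeIfAbsent(id, k -> new ArrayList<>()).add(posting);
        }
        // Reduce: join the postings of each id with ';'.
        Map<String, String> index = new TreeMap<>();
        for (Map.Entry<String, List<String>> e : grouped.entrySet()) {
            StringBuilder list = new StringBuilder();
            for (String p : e.getValue()) {
                list.append(p).append(';');
            }
            index.put(e.getKey(), list.toString());
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, List<String>> input = new TreeMap<>();
        input.put("goods3", Arrays.asList("1024600", "1024601"));
        input.put("goods_visit3", Arrays.asList("1024600"));
        input.put("order_items3", Arrays.asList("1024600", "1024600", "1024601"));
        // prints:
        // 1024600	goods3:1;goods_visit3:1;order_items3:2;
        // 1024601	goods3:1;order_items3:1;
        run(input).forEach((id, list) -> System.out.println(id + "\t" + list));
    }
}
```

Each output line has the goods ID, a tab, and the list of files with their counts, which is the layout the job's reducer writes.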

-------------- end ----------------

WeChat official account : Below scan QR code or Search for Laugh at Fengyun Road Focus on

边栏推荐

- Mapreduce实例(八):Map端join

- Global and Chinese market of bank smart cards 2022-2028: Research Report on technology, participants, trends, market size and share

- QML control type: Popup

- [daily question] Porter (DFS / DP)

- Redis cluster

- Redis geospatial

- 运维,放过监控-也放过自己吧

- QML control type: menu

- Full stack development of quartz distributed timed task scheduling cluster

- Appears when importing MySQL

猜你喜欢

![[oc]- < getting started with UI> -- common controls uibutton](/img/4d/f5a62671068b26ef43f1101981c7bb.png)

[oc]- < getting started with UI> -- common controls uibutton

In depth analysis and encapsulation call of requests

Advanced Computer Network Review(4)——Congestion Control of MPTCP

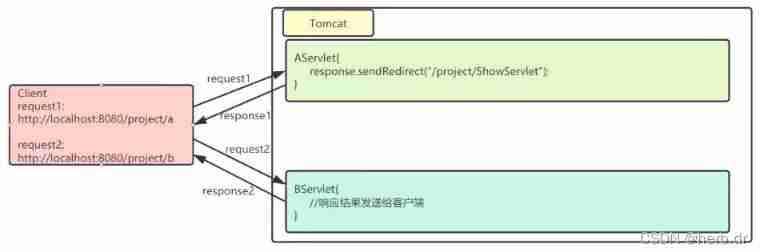

Servlet learning diary 7 -- servlet forwarding and redirection

为拿 Offer,“闭关修炼,相信努力必成大器

Mapreduce实例(九):Reduce端join

What is MySQL? What is the learning path of MySQL

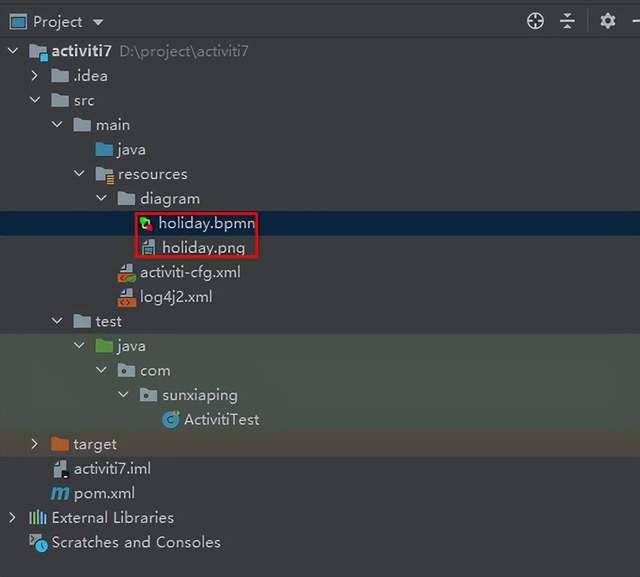

Use of activiti7 workflow

Solve the problem of inconsistency between database field name and entity class attribute name (resultmap result set mapping)

【深度学习】语义分割:论文阅读:(2021-12)Mask2Former

随机推荐

Redis之主从复制

Redis cluster

Mapreduce实例(九):Reduce端join

Redis connection redis service command

Kratos战神微服务框架(二)

小白带你重游Spark生态圈!

Detailed explanation of cookies and sessions

The five basic data structures of redis are in-depth and application scenarios

go-redis之初始化連接

Basic usage of xargs command

解决小文件处过多

Kratos战神微服务框架(一)

Connexion d'initialisation pour go redis

068.查找插入位置--二分查找

Webrtc blog reference:

五层网络体系结构

Global and Chinese market of AVR series microcontrollers 2022-2028: Research Report on technology, participants, trends, market size and share

Research and implementation of hospital management inpatient system based on b/s (attached: source code paper SQL file)

[shell script] use menu commands to build scripts for creating folders in the cluster

运维,放过监控-也放过自己吧