[Personnel density detection] MATLAB simulation of personnel density detection based on morphological processing and a GRNN network

2022-07-02 03:41:00 【FPGA and MATLAB】

1. Software version

MATLAB R2015b

2. Algorithm description

Crowd density is divided into three levels: (1) sparse, uncrowded scenes produce a green reminder; (2) crowded scenes produce a yellow warning; (3) very crowded scenes produce a red alarm. The interface displays the alarm level for the current crowd density in real time.
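The three levels map directly onto a text label and a display colour. The following is a minimal sketch, assuming the levels are encoded as the integers 1 to 3. It reuses the RGB colours from the source code in section 3. The names `labels`, `colors` and `level` are illustrative only.

```matlab
% Illustrative lookup tables (hypothetical names): row k describes level k
labels = {'Low density', 'Medium density', 'High density'};
colors = [0 1 0;    % level 1: green reminder
          1 1 0;    % level 2: yellow warning
          1 0 0];   % level 3: red alarm

level = 2;                              % e.g. a classifier output
level = min(max(round(level), 1), 3);   % clamp to a valid level
label = labels{level};
rgb   = colors(level, :);
fprintf('%s -> [%g %g %g]\n', label, rgb);
```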

There are two main approaches to classifying crowd density:

(1) Estimate the number of people in the scene and judge the crowd density from that count.

(2) Extract and analyze holistic features of the crowd, and train a classifier on labeled samples to learn the classification.
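Approach (1) reduces to counting followed by thresholding. The sketch below makes three assumptions. The head count is approximated by dividing the foreground area by an average number of pixels per person. That constant, `pixelsPerPerson`, is hypothetical. The thresholds `t1` and `t2` are also made-up values. All three would need calibration for a real camera.

```matlab
% Synthetic binary foreground mask (true = foreground pixel)
fg = false(200, 300);
fg(50:99, 100:179) = true;            % one 50 x 80 blob = 4000 pixels

pixelsPerPerson = 400;                % hypothetical calibration constant
t1 = 5;                               % hypothetical: fewer than t1 people -> sparse
t2 = 15;                              % hypothetical: t2 or more people -> very crowded

count = sum(fg(:)) / pixelsPerPerson; % estimated number of people
if count < t1
    level = 1;                        % green reminder
elseif count < t2
    level = 2;                        % yellow warning
else
    level = 3;                        % red alarm
end
fprintf('Estimated count %.1f -> level %d\n', count, level);
```

This article follows approach (2), which replaces the hand-set thresholds with a classifier trained on image features.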

First, texture features are extracted from the video frames using the gray-level co-occurrence matrix (GLCM), described here:

http://wenku.baidu.com/view/d60d9ff5ba0d4a7302763ae1.html?from=search
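A GLCM counts how often a pixel with gray level i has a neighbour with gray level j at a fixed offset. Statistics such as energy, entropy and contrast are then computed from the normalized matrix. The helper `func_wenli` used in section 3 is not shown in the article, so what follows is only a sketch of a GLCM computation. It assumes an 8-level quantization and a single horizontal offset (0, 1), and it uses no toolbox functions. The Image Processing Toolbox provides `graycomatrix` and `graycoprops` for the same task, although `graycoprops` reports energy and contrast but not entropy.

```matlab
% Small synthetic gray image (values 0..255)
I = uint8([  0   0  64  64;
             0   0  64  64;
           128 128 255 255;
           128 128 255 255]);

numLevels = 8;                                  % assumed quantization
Q = floor(double(I) * numLevels / 256) + 1;     % gray levels 1..numLevels

% Co-occurrence counts for the horizontal neighbour offset (0, 1)
left  = Q(:, 1:end-1);
right = Q(:, 2:end);
G = accumarray([left(:), right(:)], 1, [numLevels, numLevels]);
P = G / sum(G(:));                              % normalize to probabilities

% Common texture statistics
[jj, ii] = meshgrid(1:numLevels, 1:numLevels);  % ii = row level, jj = column level
energy   = sum(P(:).^2);
nz       = P(P > 0);
entropyV = -sum(nz .* log2(nz));
contrast = sum(sum((ii - jj).^2 .* P));
fprintf('energy %.4f, entropy %.4f, contrast %.4f\n', energy, entropyV, contrast);
```

The usual intuition is that dense crowds produce fine, irregular texture (lower energy, higher entropy), while sparse scenes look smoother.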

A GRNN is then trained to recognize the density level:

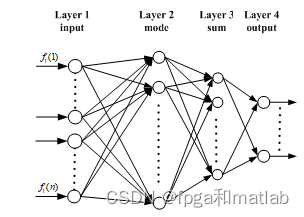

The generalized regression neural network (GRNN) is a neural network based on nonparametric kernel regression. It uses the observed samples to estimate the joint probability density function of the independent and dependent variables. The GRNN structure is shown in Figure 1. The network consists of four layers of neurons: the input layer, the pattern layer, the summation layer and the output layer.

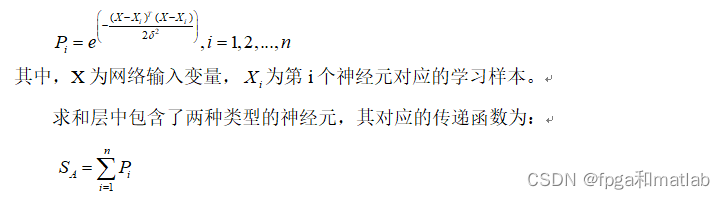
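The four layers translate into a few lines of array code. The following is a minimal sketch of a GRNN prediction. It assumes Gaussian kernels with a single smoothing factor `sigma` and uses synthetic training data. It is not the toolbox network that the article loads as `NET` from `train_model.mat`.

```matlab
% Training samples: one column per sample (input layer size m = 2)
Xtrain = [0.1 0.2 0.5 0.6 0.9 0.95;     % feature 1
          0.1 0.3 0.5 0.4 0.8 0.9];     % feature 2
Ytrain = [1   1   2   2   3   3];       % target (density level) per sample
sigma  = 0.1;                           % smoothing factor

x = [0.55; 0.45];                       % query vector

% Pattern layer: one Gaussian unit per training sample
d2 = sum(bsxfun(@minus, Xtrain, x).^2, 1);   % squared distances, 1 x n
p  = exp(-d2 / (2 * sigma^2));

% Summation layer: plain sum and target-weighted sum
sD = sum(p);
sN = sum(Ytrain .* p);

% Output layer
yhat = sN / sD;
fprintf('GRNN output %.4f\n', yhat);
```

Because "training" only stores `Xtrain` and `Ytrain`, no iterative weight fitting takes place; the only free parameter is `sigma`.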

The performance of a GRNN is governed mainly by the smoothing factor of the kernel function in its hidden (pattern-layer) units, and different smoothing factors give different network performance. The number of input-layer neurons equals the dimension m of the input vectors in the learning samples. Each pattern-layer neuron corresponds to a different learning sample. The transfer function of the i-th pattern-layer neuron is:

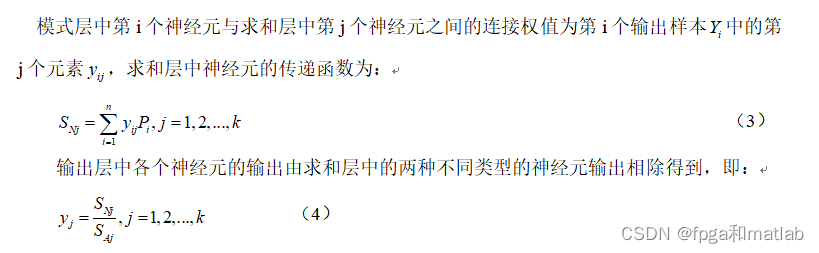

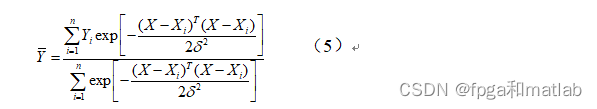
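In the standard GRNN formulation, which the two formula images above appear to show, an input vector $X$ is compared with each of the $n$ training inputs $X_i$ (with targets $Y_i$) through a Gaussian kernel with smoothing factor $\sigma$. The summation layer then forms a plain sum and a target-weighted sum of the pattern-layer outputs:

$$P_i = \exp\left[-\frac{(X - X_i)^{\mathrm T}(X - X_i)}{2\sigma^2}\right], \qquad i = 1, \dots, n$$

$$S_D = \sum_{i=1}^{n} P_i, \qquad S_N = \sum_{i=1}^{n} Y_i P_i$$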

It follows that once the learning samples are chosen, the structure and weights of the GRNN are completely determined. Training a GRNN is therefore much more convenient than training a BP or RBF network. Combining the output formulas of each layer above, the output of the whole GRNN can be expressed as:
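In the standard formulation, with $n$ training pairs $(X_i, Y_i)$ and smoothing factor $\sigma$, the output for an input $X$ is the kernel-weighted average of the training targets:

$$\hat{Y}(X) = \frac{\sum_{i=1}^{n} Y_i \exp\left(-\frac{D_i^2}{2\sigma^2}\right)}{\sum_{i=1}^{n} \exp\left(-\frac{D_i^2}{2\sigma^2}\right)}, \qquad D_i^2 = (X - X_i)^{\mathrm T}(X - X_i)$$

When $\sigma$ is very small, $\hat{Y}(X)$ approaches the target of the nearest training sample. When $\sigma$ is very large, it approaches the mean of all $Y_i$. This is the trade-off controlled by the smoothing factor.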

3. Partial source code

```matlab
function pushbutton2_Callback(hObject, eventdata, handles)
% hObject    handle to pushbutton2 (see GCBO)
% eventdata  reserved - to be defined in a future version of MATLAB
% handles    structure with handles and user data (see GUIDATA)

global frameNum_Original;    % frame count (set elsewhere in the GUI)
global frameNum_Originals;   % frame indices to process
global Obj;                  % video object; frames are read with read(Obj, k)

%% Parameter initialization (per-pixel Gaussian mixture background model)
RR = 200;                    % every frame is resized to RR x CC
CC = 300;
K            = 3;            % number of Gaussian components per pixel
Alpha        = 0.02;         % learning rate of the component weights
Rho          = 0.01;         % learning rate of the component means and variances
Deviation_sq = 49;           % threshold on the squared normalized distance for a match
Variance     = 2;            % initial variance of a newly placed component
Props        = 0.00001;      % initial weight of a newly placed component
Back_Thresh  = 0.8;          % cumulative weight the background components must cover
Comp_Thresh  = 10;           % connected components smaller than this (pixels) are dropped
SHADOWS      = [0.7,0.25,0.85,0.95];  % shadow thresholds: hue, saturation, min/max value ratio
CRGB = 3;                    % number of colour channels
D = RR * CC;                 % number of pixels per frame

% Initialize the mixture from the first frame
Temps = imresize(read(Obj,1),[RR,CC]);
Temps = reshape(Temps,D,CRGB);                 % one row per pixel
Mus = zeros(D,K,CRGB);
Mus(:,1,:) = double(Temps);                    % component 1 = first frame
Mus(:,2:K,:) = 255*rand([D,K-1,CRGB]);         % remaining components random
Sigmas  = Variance*ones(D,K,CRGB);
Weights = [ones(D,1),zeros(D,K-1)];
Squared = zeros(D,K);
bg_gauss = false(D,K);
Shadows = zeros(RR,CC);
background_Update = zeros(RR,CC,CRGB,'uint8');
[X,Y] = meshgrid(1:CC,1:RR);                   % pixel coordinates for centroids

% The trained classifier is stored in train_model.mat as the variable NET
model = load('train_model.mat');
NET = model.NET;

% Detection region (coordinates halved to match the resized frame)
X1 = round(168/2);
X2 = round(363/2);
Y1 = round(204/2);
Y2 = round(339/2);

indxx = 0;
for tt = frameNum_Originals
    fprintf('Current frame number: %d\n', tt);
    indxx = indxx + 1;
    pixel_original  = read(Obj,tt);
    pixel_original2 = imresize(pixel_original,[RR,CC]);
    image = reshape(pixel_original2,D,CRGB);   % one row per pixel

    % Match every pixel to its closest component, if it is close enough
    for kk = 1:K
        Datac = double(image)-reshape(Mus(:,kk,:),D,CRGB);
        Squared(:,kk) = sum((Datac.^2)./reshape(Sigmas(:,kk,:),D,CRGB),2);
    end
    [~,index] = min(Squared,[],2);
    Gaussian = zeros(size(Squared));
    Gaussian(sub2ind(size(Squared),1:D,index')) = 1;
    Gaussian = Gaussian & (Squared < Deviation_sq);

    % Update weights, means and variances of the matched components
    Weights = (1-Alpha).*Weights + Alpha.*Gaussian;
    for kk = 1:K
        pixel_matched   = repmat(Gaussian(:,kk),1,CRGB);
        pixel_unmatched = 1 - pixel_matched;
        Mu_kk    = reshape(Mus(:,kk,:),D,CRGB);
        Sigma_kk = reshape(Sigmas(:,kk,:),D,CRGB);
        Mus(:,kk,:) = pixel_unmatched.*Mu_kk + pixel_matched.*((1-Rho).*Mu_kk + Rho.*double(image));
        Mu_kk = reshape(Mus(:,kk,:),D,CRGB);
        Sigmas(:,kk,:) = pixel_unmatched.*Sigma_kk + pixel_matched.*((1-Rho).*Sigma_kk + ...
            repmat(Rho.*sum((double(image)-Mu_kk).^2,2),1,CRGB));
    end

    % Unmatched pixels: replace their weakest component with the current value
    replaced_gaussian = zeros(D,K);
    mismatched = find(sum(Gaussian,2)==0);
    for ii = 1:length(mismatched)
        m = mismatched(ii);
        [~,index] = min(Weights(m,:)./sqrt(Sigmas(m,:,1)));
        replaced_gaussian(m,index) = 1;
        Mus(m,index,:)    = image(m,:);
        Sigmas(m,index,:) = ones(1,CRGB)*Variance;
        Weights(m,index)  = Props;
    end
    Weights = Weights./repmat(sum(Weights,2),1,K);
    active_gaussian = Gaussian + replaced_gaussian;

    %% Background segmentation: rank components by weight / sigma
    [~,index] = sort(Weights./sqrt(Sigmas(:,:,1)),2,'descend');
    bg_gauss_good = index(:,1);
    linear_index = (index-1)*D + repmat((1:D)',1,K);
    weights_ordered = Weights(linear_index);
    Weight = cumsum(weights_ordered,2);        % cumulative weight in ranked order
    bg_gauss(:,2:K) = Weight(:,1:(K-1)) < Back_Thresh;
    bg_gauss(:,1) = 1;
    bg_gauss(linear_index) = bg_gauss;         % back to the original component order
    active_background_gaussian = active_gaussian & bg_gauss;
    foreground_pixels = abs(sum(active_background_gaussian,2)-1);
    foreground_map = reshape(foreground_pixels,RR,CC);
    Images1 = foreground_map;

    %% Keep connected regions of at least Comp_Thresh pixels
    objects_map = zeros(size(foreground_map),'int32');
    object_sizes = [];
    Obj_pos = [];
    new_label = 1;
    [label_map,num_labels] = bwlabel(foreground_map,8);
    for label = 1:num_labels
        object = (label_map == label);
        object_size = sum(object(:));
        if object_size >= Comp_Thresh
            objects_map = objects_map + int32(object * new_label);
            object_sizes(new_label) = object_size;
            Obj_pos(:,new_label) = [sum(X(object)) / object_size;   % centroid x
                                    sum(Y(object)) / object_size];  % centroid y
            new_label = new_label + 1;
        end
    end
    num_objects = new_label - 1;

    %% Remove shadows
    % Background image = mean of the best-ranked component of every pixel
    index = sub2ind(size(Mus),reshape(repmat(1:D,CRGB,1),D*CRGB,1), ...
        reshape(repmat(bg_gauss_good',CRGB,1),D*CRGB,1),repmat((1:CRGB)',D,1));
    background = reshape(Mus(index),CRGB,D);
    background = uint8(reshape(background',RR,CC,CRGB));
    if indxx <= 500
        background_Update = background;        % displayed background is frozen after 500 frames
    end
    background_hsv = rgb2hsv(background);
    image_hsv = rgb2hsv(pixel_original2);
    for i = 1:RR
        for j = 1:CC
            % Brightness ratio; a range test needs two comparisons, because
            % MATLAB evaluates a<=r<=b as (a<=r)<=b
            ratio = image_hsv(i,j,3)/background_hsv(i,j,3);
            if objects_map(i,j) && ...
               abs(image_hsv(i,j,1)-background_hsv(i,j,1)) < SHADOWS(1) && ...
               image_hsv(i,j,2)-background_hsv(i,j,2) < SHADOWS(2) && ...
               ratio >= SHADOWS(3) && ratio <= SHADOWS(4)
                Shadows(i,j) = 1;
            else
                Shadows(i,j) = 0;
            end
        end
    end
    Images0 = objects_map;
    % Objects with shadow pixels removed (the original assigned the shadow map
    % itself here; a model trained on that map may need retraining)
    objecs_adjust_map = (objects_map > 0) & ~Shadows;
    Images2 = double(objecs_adjust_map);

    %% Density from the foreground proportion and texture of the detection region
    % Grayscale dilation with a non-flat ball structuring element, then offset
    % and floor (the original comment said erosion, but imdilate dilates)
    se = strel('ball',6,6);
    Images2BW = floor(abs(imdilate(Images2,se)-5));
    Images3BW = zeros(size(Images2BW));
    if indxx > 80                              % skip the first 80 frames (presumably while the model settles)
        S1 = sum(sum(Images2BW(Y1:Y2,X1:X2)));
        S2(indxx-80) = S1/((X2-X1)*(Y2-Y1));   % mean value inside the region
    end
    Images3BW(Y1:Y2,X1:X2) = Images2BW(Y1:Y2,X1:X2);
    Images3Brgb = pixel_original2(Y1:Y2,X1:X2,:);

    % Texture features; func_wenli (not shown) is the author's helper,
    % presumably returning GLCM statistics in A
    [A,B] = func_wenli(rgb2gray(Images3Brgb));
    if indxx > 80
        F1(indxx-80) = A(1);
        F2(indxx-80) = A(2);
        F3(indxx-80) = A(3);
        P = [S2(indxx-80); F2(indxx-80)];      % classifier input: region value + texture
        y = min(max(round(NET(P)),1),3);       % clamp to a valid density level
        switch y
            case 1
                set(handles.text2,'String','Low density','ForegroundColor',[0 1 0]);
            case 2
                set(handles.text2,'String','Medium density','ForegroundColor',[1 1 0]);
            case 3
                set(handles.text2,'String','High density','ForegroundColor',[1 0 0]);
        end
    end

    %% Display
    axes(handles.axes1);
    imshow(pixel_original2);                   % frame with the detection region
    hold on
    line([X1,X2,X2,X1,X1],[Y1,Y1,Y2,Y2,Y1],'LineWidth',1,'Color',[0 1 0]);
    hold off                                   % avoid stacking images across frames
    axes(handles.axes2);
    imshow(uint8(background_Update));          % background estimate
    axes(handles.axes3);
    imshow(double(Images0),[]);                % dynamic foreground objects
    axes(handles.axes4);
    imshow(Images3BW,[]);                      % processed foreground inside the detection region
    drawnow;
end
```
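The nested loop in the shadow-removal step tests each foreground pixel against the HSV shadow conditions one at a time. A vectorized sketch of the same test follows. It assumes HSV images of size `RR x CC x 3` with values in [0, 1], as returned by `rgb2hsv`. It then removes shadow pixels from the object map. Tiny synthetic arrays stand in for real frames.

```matlab
SHADOWS = [0.7, 0.25, 0.85, 0.95];   % hue, saturation, min and max value ratio

% Synthetic 2 x 2 HSV images (planes H, S, V) and object map
background_hsv = cat(3, [0.5 0.5; 0.5 0.5], ...
                        [0.2 0.2; 0.2 0.2], ...
                        [0.8 0.8; 0.8 0.8]);
image_hsv      = cat(3, [0.5 0.5; 0.5 0.5], ...
                        [0.2 0.2; 0.2 0.2], ...
                        [0.72 0.4; 0.72 0.8]);   % value ratios 0.9, 0.5, 0.9, 1.0
objects_map    = [1 1; 0 1];

% Shadow test on all pixels at once; the ratio range uses two comparisons
ratio = image_hsv(:,:,3) ./ background_hsv(:,:,3);
Shadows = (objects_map ~= 0) & ...
          (abs(image_hsv(:,:,1) - background_hsv(:,:,1)) < SHADOWS(1)) & ...
          (image_hsv(:,:,2) - background_hsv(:,:,2) < SHADOWS(2)) & ...
          (ratio >= SHADOWS(3)) & (ratio <= SHADOWS(4));

% Objects with shadow pixels removed
adjusted = (objects_map ~= 0) & ~Shadows;
disp(double(Shadows));
disp(double(adjusted));
```

Consider the chained form `SHADOWS(3) <= ratio <= SHADOWS(4)`. Its first comparison yields 0 or 1, and both are at most 0.95. The whole expression is therefore true exactly when the ratio is below 0.85 (or is NaN). In this example it would flag pixel (1,2), whose ratio is 0.5, and miss pixel (1,1), whose ratio is 0.9.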

4. Simulation results

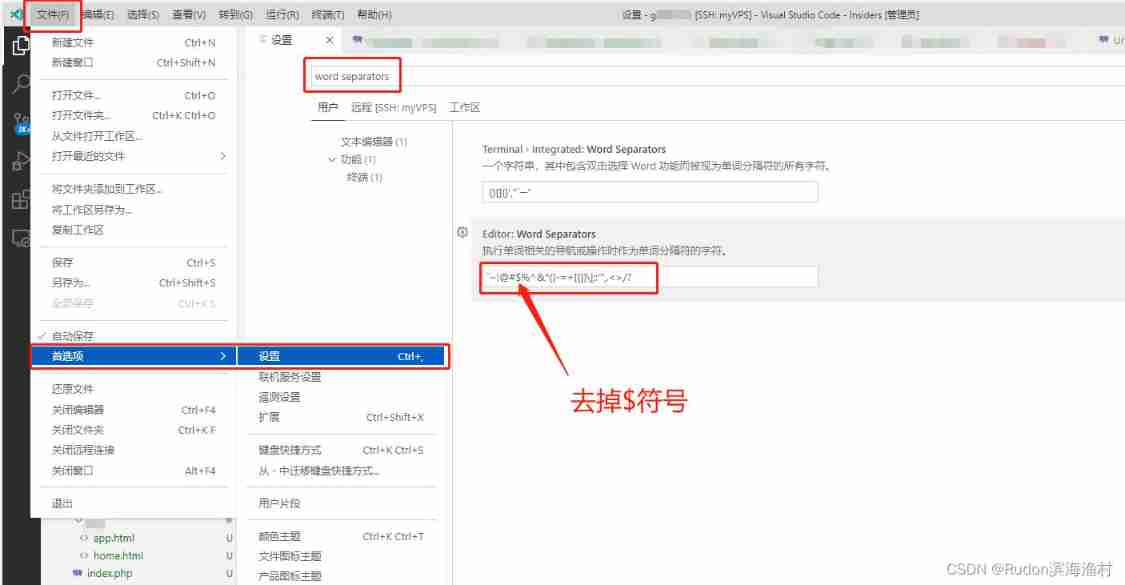

- Set vscode. When double clicking, the selected string includes the $symbol - convenient for PHP operation

- Custom classloader that breaks parental delegation

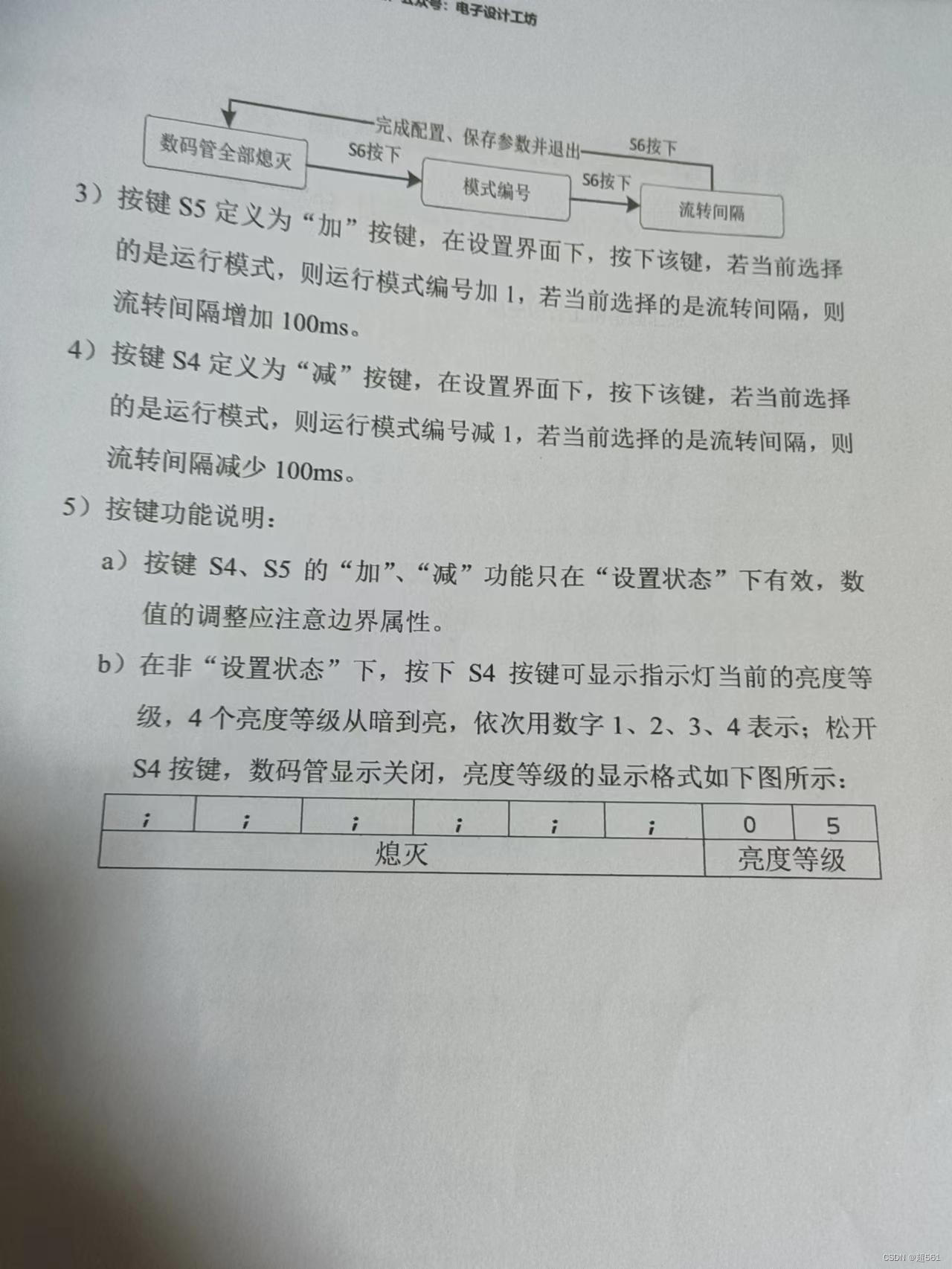

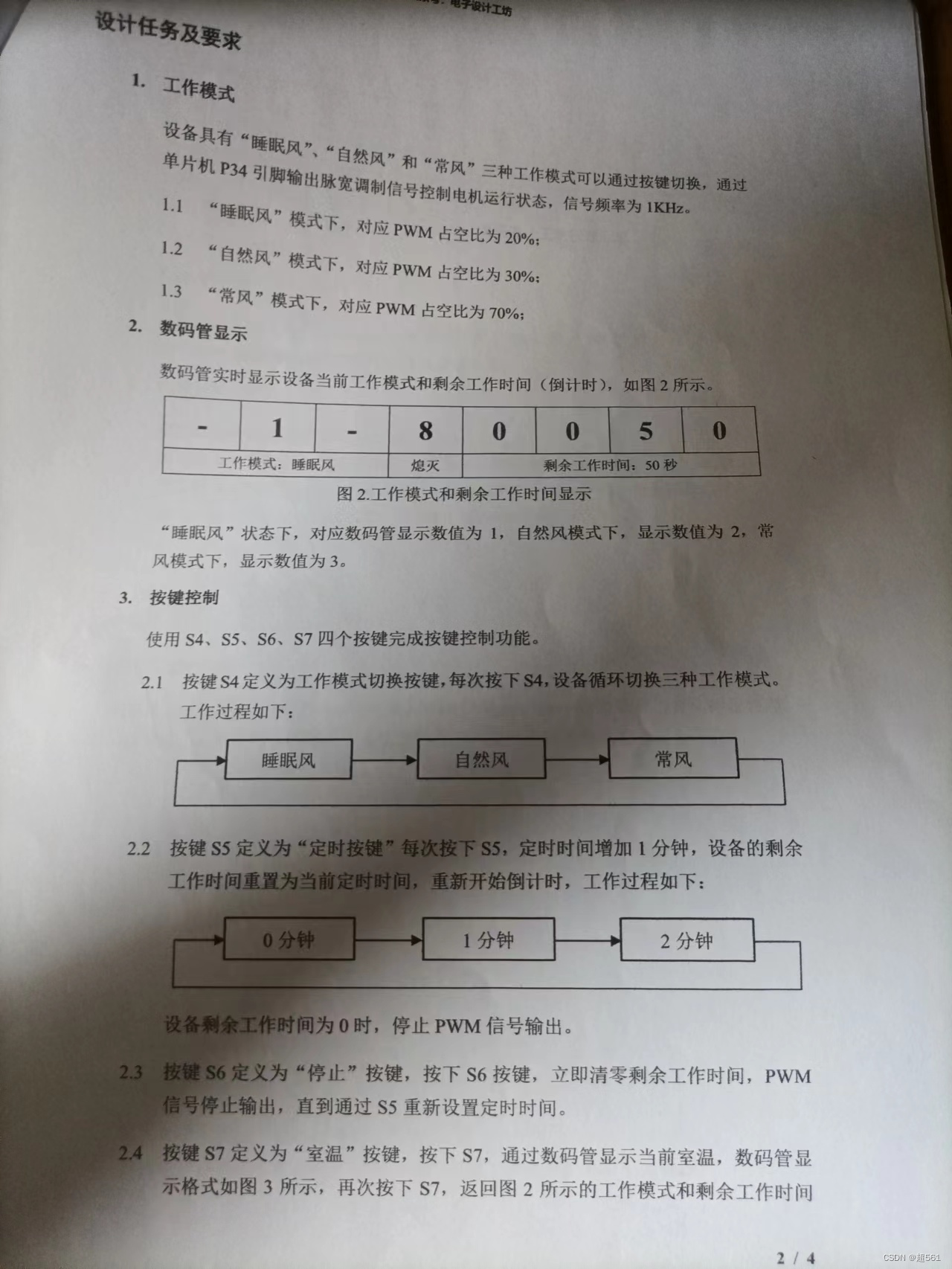

- The 9th Blue Bridge Cup single chip microcomputer provincial competition

- MySQL advanced (Advanced) SQL statement (II)

- 蓝桥杯单片机省赛第十二届第二场

- Unity脚本的基础语法(7)-成员变量和实例化

- 【直播回顾】战码先锋首期8节直播完美落幕,下期敬请期待!

- Generate random numbers that obey normal distribution

- Getting started with MQ

- VS2010 plug-in nuget

猜你喜欢

The 9th Blue Bridge Cup single chip microcomputer provincial competition

Unity脚本的基础语法(6)-特定文件夹

The second game of the 12th provincial single chip microcomputer competition of the Blue Bridge Cup

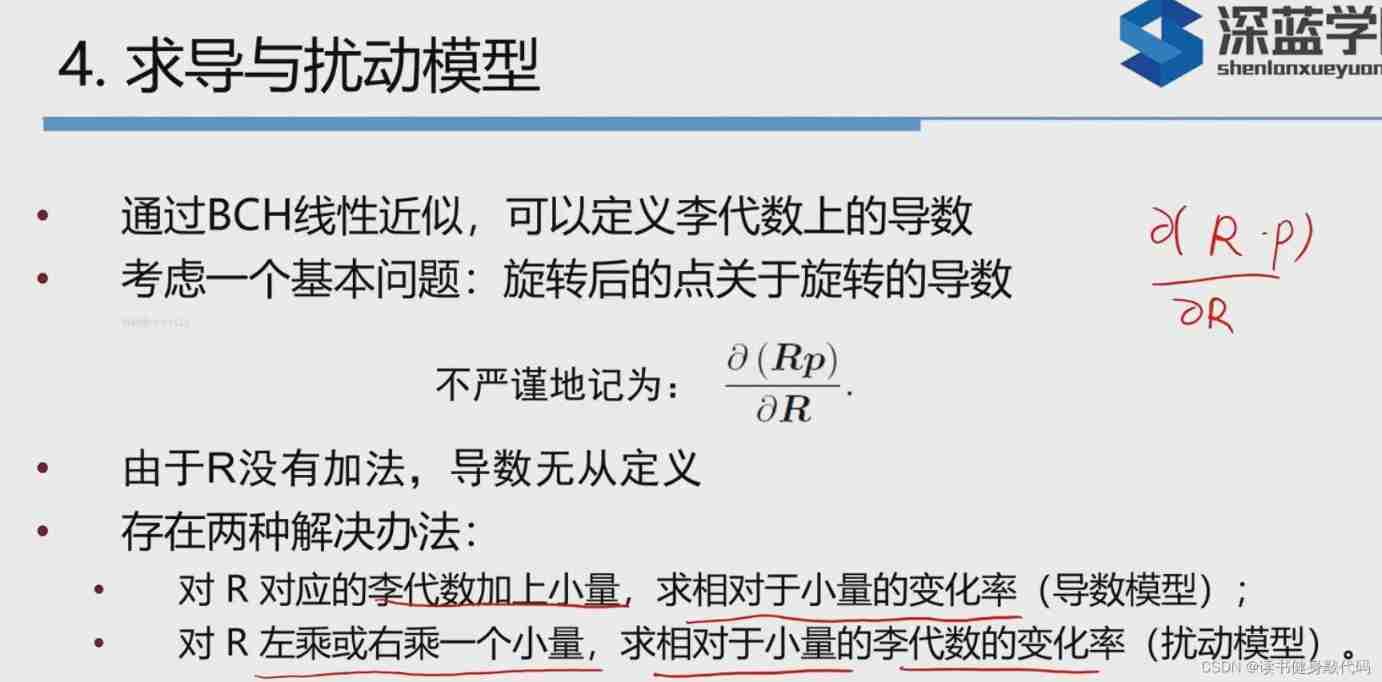

Visual slam Lecture 3 -- Lie groups and Lie Algebras

How to do medium and long-term stocks, and what are the medium and long-term stock trading skills?

u本位合约爆仓清算解决方案建议

一天上手Aurora 8B/10B IP核(5)----从Framing接口的官方例程学起

蓝桥杯单片机省赛第七届

Pycharm2021 delete the package warehouse list you added

Set vscode. When double clicking, the selected string includes the $symbol - convenient for PHP operation

随机推荐

[punch in] flip the string (simple)

知物由学 | 自监督学习助力内容风控效果提升

Basic syntax of unity script (8) - collaborative program and destruction method

js生成随机数

微信小程序中 在xwml 中使用外部引入的 js进行判断计算

Global and Chinese market of autotransfusion bags 2022-2028: Research Report on technology, participants, trends, market size and share

Kotlin basic learning 15

蓝桥杯单片机省赛第十届

Vite: scaffold assembly

滴滴开源DELTA:AI开发者可轻松训练自然语言模型

Visual slam Lecture 3 -- Lie groups and Lie Algebras

蓝桥杯单片机省赛第七届

近段时间天气暴热,所以采集北上广深去年天气数据,制作可视化图看下

The 9th Blue Bridge Cup single chip microcomputer provincial competition

MySQL之账号管理

蓝桥杯单片机省赛第十一届第二场

Lost a few hairs, and finally learned - graph traversal -dfs and BFS

Network connection mode of QT

接口调试工具模拟Post上传文件——ApiPost

Which of PMP and software has the highest gold content?