JIT deep analysis

2022-07-02 03:14:00 【xjk201】

Cross-language support (language independence): the JVM only recognizes bytecode, so it is in fact decoupled from any particular language. The JVM does not run by translating Java source files; it reads class files, commonly called bytecode. Languages such as Groovy, Kotlin, and Scala are also compiled to bytecode, so they can run on the JVM too. This is the JVM's cross-language capability, and to some extent it has laid a very strong foundation for the Java language ecosystem.

Interpreted execution and JIT

When a Java program runs, it mainly executes bytecode instructions. Normally these instructions are interpreted one by one in order; this is interpreted execution.

But for code that is invoked frequently, such as a method with a very high call count or the body of a for loop, interpretation is very inefficient. (This is why C and C++ developers used to mock Java for being slow.)

Such code is called hot code (hotspot code). To make hot code run faster, the virtual machine compiles it at run time into machine code for the local platform and applies various levels of optimization.

The compiler that does this is called a just-in-time compiler (Just In Time Compiler), or JIT compiler for short.

C1, C2, and the Graal compiler

The HotSpot virtual machine in JDK 1.8 has two built-in JIT compilers: the C1 compiler and the C2 compiler.

C1 compiler

The C1 compiler is simple and fast and focuses mainly on local optimizations. It suits programs that run briefly or that care about startup performance; GUI applications, for example, need their interface to start reasonably quickly. C1 is also known as the Client Compiler.

The C1 compiler performs comparatively few optimizations on the code.

C2 compiler

The C2 compiler tunes performance for long-running server-side applications. It suits programs that run for a long time or that need peak performance. Because of this, it is also known as the Server Compiler.

However, the C2 code base is extremely complex and hard to maintain. That is why the Graal compiler, written in Java, was developed as a replacement for C2; it has been available as an experimental JIT compiler since JDK 10.

Tiered compilation

Before Java 7, you had to choose the JIT compiler that matched the characteristics of your program; by default the virtual machine ran the interpreter together with one of the two compilers.

Java 7 introduced tiered compilation, which combines the startup advantage of C1 with the peak-performance advantage of C2. The JIT mode of the virtual machine can also be set explicitly through parameters.

In Java 8, tiered compilation is enabled by default.

Running java -version on the command line shows the execution mode currently in use (mixed mode with tiered compilation by default).

The -Xint parameter forces the virtual machine to run in interpreter-only mode.

The -Xcomp parameter forces the virtual machine to run in JIT-only (compiled) mode.
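To compare these modes in practice, a small program such as the following can be run once with no extra flags, once with -Xint, and once with -Xcomp. This is only a sketch: the class name HotLoopDemo, the method sumTo, and the loop counts are hypothetical, and the timings depend entirely on the machine and JDK.

```java
// Hypothetical demo: compile with javac, then compare
//   java HotLoopDemo, java -Xint HotLoopDemo, java -Xcomp HotLoopDemo
class HotLoopDemo {

    // A small method that becomes hot because it is called in a tight loop.
    static long sumTo(int n) {
        long total = 0;
        for (int i = 1; i <= n; i++) {
            total += i;
        }
        return total;
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long checksum = 0;
        for (int round = 0; round < 20_000; round++) {
            checksum += sumTo(10_000);
        }
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        // The checksum is printed so the loop cannot be discarded as dead code.
        System.out.println("checksum=" + checksum + ", elapsed=" + elapsedMs + " ms");
    }
}
```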

Hot code

Hot code is code that is invoked frequently, such as a method with a very high call count or the body of a for loop. The machine code produced by compilation is cached for later use; for code that runs only a few times, compiling it would be wasted effort.

JIT-compiled code is stored in the CodeCache, and the JVM provides the parameter -XX:ReservedCodeCacheSize to limit its size.

If this space runs out, the JIT compiler cannot continue compiling, execution falls back from compiled to interpreted, and performance can drop by an order of magnitude. At the same time, the JIT compiler keeps trying to optimize code, which drives CPU usage up.

The current value can be queried with java -XX:+PrintFlagsFinal -version.
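Code cache usage can also be inspected from inside a running program through the standard java.lang.management API. The sketch below is an illustration only: the class name CodeCacheUsage is hypothetical, and matching pools by the substring "Code" is an assumption (the pool is named "Code Cache" on JDK 8, while newer JDKs split it into several "CodeHeap" segments).

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that reports the memory pools holding JIT-compiled code.
class CodeCacheUsage {

    static List<String> describe() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            String name = pool.getName();
            // Assumption: code cache pools contain "Code" in their name.
            if (!name.contains("Code")) {
                continue;
            }
            MemoryUsage usage = pool.getUsage();
            if (usage == null) {
                continue; // the pool is no longer valid
            }
            // getMax() returns -1 when the maximum is undefined.
            lines.add(name + ": used=" + usage.getUsed() + " bytes, max=" + usage.getMax() + " bytes");
        }
        return lines;
    }

    public static void main(String[] args) {
        describe().forEach(System.out::println);
    }
}
```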

Hot spot detection

In the HotSpot virtual machine, hot spot detection is the precondition for JIT optimization. HotSpot uses counter-based detection: the virtual machine keeps counters for each method and counts how many times it executes; once the count exceeds a certain threshold, the method is considered a "hot method".

The virtual machine maintains two kinds of counters for each method: the method invocation counter (Invocation Counter) and the back-edge counter (Back Edge Counter). Once the virtual machine's run-time parameters are fixed, each counter has a definite threshold; when a counter overflows its threshold, JIT compilation is triggered.

Method call counter

This counter tracks how many times a method is called. Its default threshold is 1500 calls in client mode and 10000 calls in server mode (we are using server mode, which java -version confirms). It can be set with -XX:CompileThreshold.

The current value can be queried with java -XX:+PrintFlagsFinal -version.

Back-edge counter

This counter tracks how many times the loop body code in a method executes. A bytecode instruction that jumps backward in the control flow is called a "back edge" (Back Edge). The count is compared against a threshold to decide whether to trigger compilation; with tiered compilation disabled, the threshold in server mode is 10700.

How is it calculated? Refer to the following formula (for those interested):

Back-edge counter threshold = method invocation counter threshold (CompileThreshold) × (OSR ratio (OnStackReplacePercentage) - interpreter profiling ratio (InterpreterProfilePercentage)) / 100

The relevant parameters can be queried first with java -XX:+PrintFlagsFinal -version.

OnStackReplacePercentage defaults to 140 and InterpreterProfilePercentage defaults to 33. With these defaults, the back-edge counter threshold of a server-mode virtual machine is 10700:

Back-edge counter threshold = 10000 × (140 - 33) / 100 = 10700
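The same arithmetic can be written as a few lines of Java. This is just the formula above restated; the class name BackEdgeThreshold and the method name compute are hypothetical, and the inputs are the default values quoted in the text.

```java
// Hypothetical helper that applies the back-edge threshold formula from the text.
class BackEdgeThreshold {

    static long compute(long compileThreshold,
                        long onStackReplacePercentage,
                        long interpreterProfilePercentage) {
        // CompileThreshold x (OnStackReplacePercentage - InterpreterProfilePercentage) / 100
        return compileThreshold * (onStackReplacePercentage - interpreterProfilePercentage) / 100;
    }

    public static void main(String[] args) {
        // Server-mode defaults quoted in the text: 10000, 140, 33.
        System.out.println(compute(10_000, 140, 33)); // prints 10700
    }
}
```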

Compilation optimization techniques

JIT compilation applies a number of classic compiler optimizations; that is, through a set of routine checks and optimizations, it can intelligently produce the best-performing code at run time.

Method Inlining

Method inlining copies the code of the target method into the calling method, avoiding a real method call.

For example, when a hot method calls a small helper method, the helper's body ends up merged into the caller, so the optimized code contains no call at all.

The JVM identifies hot methods automatically and applies method inlining to them.
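As a hand-written illustration of the effect (not actual compiler output), the pair of methods below shows a caller before and after inlining. The class InlineSketch and the methods add, sumOfThree, and sumOfThreeInlined are hypothetical names chosen for this sketch.

```java
// Hypothetical sketch: what inlining does, written out by hand.
class InlineSketch {

    // Small target method, a typical inlining candidate.
    static int add(int a, int b) {
        return a + b;
    }

    // As written in the source: two real calls to add.
    static int sumOfThree(int x, int y, int z) {
        return add(add(x, y), z);
    }

    // Roughly what executes once add has been inlined: no calls remain.
    static int sumOfThreeInlined(int x, int y, int z) {
        return x + y + z;
    }

    public static void main(String[] args) {
        System.out.println(sumOfThree(1, 2, 3) == sumOfThreeInlined(1, 2, 3));
    }
}
```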

The threshold for hot methods can be set with -XX:CompileThreshold.

One point deserves emphasis: the JVM does not necessarily inline a hot method. If the method body is too large, the JVM will not inline it.

The size thresholds for the method body can also be tuned through parameters:

For frequently executed methods, a method body smaller than 325 bytes is inlined by default; the limit can be changed with -XX:FreqInlineSize=N.

For methods that are not executed often, a method smaller than 35 bytes is inlined by default; the limit can be changed with -XX:MaxInlineSize=N.

This is why coding conventions we are familiar with, such as the Alibaba Java guidelines, recommend keeping method bodies (and parameter lists) small: the limits tie in with the underlying compilation described here.

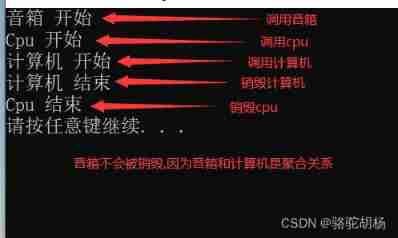

Code demonstration

Set the VM parameters: -XX:+PrintCompilation -XX:+UnlockDiagnosticVMOptions -XX:+PrintInlining

-XX:+PrintCompilation // print compilation information to the console

-XX:+UnlockDiagnosticVMOptions // unlock the JVM's diagnostic options; they are off by default, and once enabled certain diagnostic parameters become available

-XX:+PrintInlining // print the methods that are inlined

If the loop runs too few iterations, method inlining is not triggered.
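A demonstration along these lines might look like the following sketch, run with the three parameters above. The class InlineDemo, the method add1, and the default iteration count are assumptions made for illustration; passing a small count on the command line (for example 100) and then a large one lets the two cases be compared in the printed compilation log.

```java
// Hypothetical demo, to be run for example as:
//   java -XX:+PrintCompilation -XX:+UnlockDiagnosticVMOptions -XX:+PrintInlining InlineDemo 1000000
class InlineDemo {

    // Tiny method body, well under the inlining size limits mentioned in the text.
    static int add1(int x) {
        return x + 1;
    }

    // Calls add1 once per iteration so that it becomes hot when iterations is large.
    static long run(int iterations) {
        long total = 0;
        for (int i = 0; i < iterations; i++) {
            total += add1(i);
        }
        return total;
    }

    public static void main(String[] args) {
        int iterations = args.length > 0 ? Integer.parseInt(args[0]) : 1_000_000;
        System.out.println("total=" + run(iterations));
    }
}
```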

Optimizing hot methods can improve system performance effectively. In general, method inlining can be encouraged in the following ways:

- Lower the hot-method threshold or raise the method body size threshold through JVM parameters so that more methods can be inlined; this approach uses more memory, though.

- When programming, avoid putting a lot of code in one method and get used to writing small methods.

- Use the final, private, and static keywords on methods wherever possible; a method that can be overridden through inheritance requires additional type checks.

Lock elimination

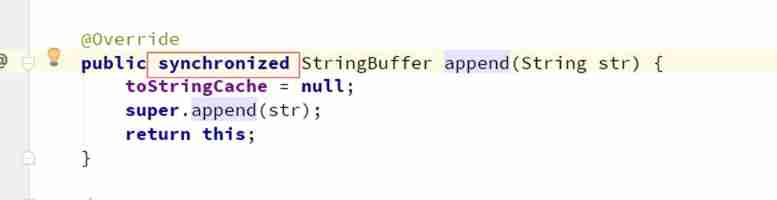

Where thread safety is not required, try not to use thread-safe containers such as StringBuffer. The append method of StringBuffer is declared with the synchronized keyword, so it takes a lock, which degrades performance.

In practice, however, a code test shows little difference in performance between StringBuffer and StringBuilder. An object created inside a method as a local variable can only be accessed by the current thread and not by any other thread, so there can be no contention when reading or writing it. In this situation the JIT compiler eliminates the lock on the object's methods.

With lock elimination turned off, the test shows a noticeably larger performance difference.

-XX:+EliminateLocks enables lock elimination (on by default in JDK 1.8; other versions were not tested)

-XX:-EliminateLocks disables lock elimination
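A test of this kind could be sketched as follows and run once with the default settings and once with -XX:-EliminateLocks. The class LockEliminationDemo, its method names, and the iteration count are hypothetical; the sketch makes no claim about the size of the difference, which depends on the JDK and hardware.

```java
// Hypothetical micro-benchmark comparing a local StringBuffer with a local StringBuilder.
class LockEliminationDemo {

    // The StringBuffer never leaves this method, so its synchronized append
    // calls are candidates for lock elimination.
    static int bufferAppend(String a, String b) {
        StringBuffer sb = new StringBuffer();
        sb.append(a);
        sb.append(b);
        return sb.length();
    }

    // Same work with the unsynchronized StringBuilder.
    static int builderAppend(String a, String b) {
        StringBuilder sb = new StringBuilder();
        sb.append(a);
        sb.append(b);
        return sb.length();
    }

    public static void main(String[] args) {
        int iterations = 50_000_000;
        long sink = 0;

        long start = System.nanoTime();
        for (int i = 0; i < iterations; i++) {
            sink += bufferAppend("abc", "def");
        }
        long bufferMs = (System.nanoTime() - start) / 1_000_000;

        start = System.nanoTime();
        for (int i = 0; i < iterations; i++) {
            sink += builderAppend("abc", "def");
        }
        long builderMs = (System.nanoTime() - start) / 1_000_000;

        // sink is printed so the loops are not removed as dead code.
        System.out.println("StringBuffer=" + bufferMs + " ms, StringBuilder=" + builderMs + " ms, sink=" + sink);
    }
}
```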

Scalar replacement

If escape analysis proves that an object is not accessed from outside and the object can be decomposed, the program may not create the object at all when it actually runs; instead it creates the object's member variables directly. Once the object is decomposed, its member variables can be allocated on the stack or in registers, and no memory needs to be allocated for the original object. This compiler optimization is called scalar replacement (it requires escape analysis to be enabled).

For example, if scalar replacement is applied to the foo method in the figure above, what finally executes has the effect of the foo1 method.

-XX:+DoEscapeAnalysis enables escape analysis (on by default in JDK 1.8)

-XX:-DoEscapeAnalysis disables escape analysis

-XX:+EliminateAllocations enables scalar replacement (on by default in JDK 1.8)

-XX:-EliminateAllocations disables scalar replacement
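A foo/foo1 pair of this kind might look like the sketch below. Only the names foo and foo1 come from the text; the Point class and both method bodies are assumptions, and foo1 is a hand-written equivalent rather than actual compiler output.

```java
// Hypothetical sketch of scalar replacement, written out by hand.
class ScalarReplacementSketch {

    static final class Point {
        final int x;
        final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    // As written: allocates a Point that never leaves the method.
    static int foo(int x, int y) {
        Point p = new Point(x, y);
        return p.x + p.y;
    }

    // Effect after scalar replacement: the fields become plain local variables
    // and no Point object is created.
    static int foo1(int x, int y) {
        int px = x;
        int py = y;
        return px + py;
    }

    public static void main(String[] args) {
        System.out.println(foo(3, 4) == foo1(3, 4));
    }
}
```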

Escape analysis technology

The principle of escape analysis is to analyze the dynamic scope of an object. After an object is defined in a method, it may be referenced by external methods.

For example, passing it as an argument to another method is called method escape. It may even be accessed by an external thread, for example by assigning it to a variable that other threads access; this is called thread escape.

No escape, method escape, and thread escape are the escape degrees of an object, from low to high.
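The three degrees can be illustrated with one small method each. This is a sketch with hypothetical names (EscapeDegrees, fill, shared); it shows where the object reference goes in each case, not how the JVM classifies it internally.

```java
// Hypothetical sketch of the three escape degrees described in the text.
class EscapeDegrees {

    // A static field can be read by any thread.
    static volatile StringBuilder shared;

    // Helper that receives an object created elsewhere.
    static void fill(StringBuilder target) {
        target.append("filled");
    }

    // No escape: the object is created, used, and dropped inside this method.
    static int noEscape() {
        StringBuilder sb = new StringBuilder("abc");
        return sb.length();
    }

    // Method escape: the object is passed as an argument to another method.
    static int methodEscape() {
        StringBuilder sb = new StringBuilder();
        fill(sb);
        return sb.length();
    }

    // Thread escape: the object is assigned to a variable other threads can access.
    static void threadEscape() {
        shared = new StringBuilder("abc");
    }

    public static void main(String[] args) {
        threadEscape();
        System.out.println(noEscape() + " " + methodEscape() + " " + shared);
    }
}
```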

If it is certain that an object does not escape from its thread, allocating the object's memory on the stack can improve JVM efficiency.

Escape analysis is a JIT optimization, so the code must qualify as hot code before the JIT compiler optimizes it. In addition, for an object to be allocated on the stack it must be decomposed; this compiler optimization is called scalar replacement.

Put plainly: hot spot detection enables escape analysis, escape analysis enables scalar replacement, and scalar replacement enables stack allocation.

Escape analysis code example

During the calls in this code, the MyObject object cannot escape, so the JVM can allocate it on the stack, and the code runs very fast.

By default the JVM performs escape analysis, applies scalar replacement, and allocates on the stack.
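A test of this kind could look like the following minimal sketch. The class MyObject, its fields, the method allocate, and the iteration count are all assumptions made for illustration; the measured time will vary with the JDK and the flags used.

```java
// Hypothetical escape analysis test: MyObject never leaves the allocate method.
class EscapeAnalysisDemo {

    static final class MyObject {
        final int id;
        final long stamp;

        MyObject(int id, long stamp) {
            this.id = id;
            this.stamp = stamp;
        }
    }

    // Creates a short-lived object and returns only a value derived from it.
    static long allocate(int i) {
        MyObject obj = new MyObject(i, i * 2L);
        return obj.id + obj.stamp;
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long sink = 0;
        for (int i = 0; i < 100_000_000; i++) {
            sink += allocate(i);
        }
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        // sink is printed so the allocations are not removed as dead code.
        System.out.println("sink=" + sink + ", elapsed=" + elapsedMs + " ms");
    }
}
```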

Then turn off escape analysis:

-XX:-DoEscapeAnalysis

Then turn off scalar replacement:

-XX:-EliminateAllocations

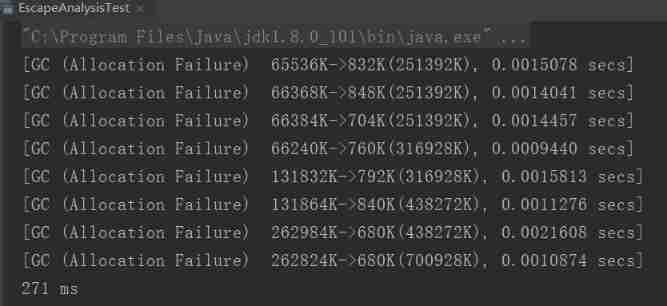

The test results show that turning escape analysis on or off has a large effect on how fast the code runs. Why does it have this effect?

Escape analysis

If escape analysis shows that an object can be allocated on the stack, the object's lifetime follows the thread and it never needs to be garbage collected. When such a method is called frequently, this yields a large performance gain.

With escape analysis enabled, objects that do not escape are allocated on the stack.

With escape analysis disabled, all objects are allocated on the heap, which triggers frequent garbage collection (garbage collection affects system performance) and makes the code run slowly.

Code validation

Enable GC logging:

-XX:+PrintGC

With escape analysis enabled

No GC log output appears (because of the stack allocation).

With escape analysis disabled

With escape analysis turned off, the JVM garbage-collects frequently, and it is this activity that causes the large difference in performance.
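Besides reading the GC log, the number of collections can be counted from inside the program with the standard GarbageCollectorMXBean API. The sketch below is one possible way to do this; the class GcCountProbe, the method names, and the allocation loop are hypothetical, and the reported count depends on heap size, collector, and flags.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical probe: counts garbage collections around an allocation-heavy loop.
// Run it with and without -XX:-DoEscapeAnalysis to compare the counts.
class GcCountProbe {

    // Sums the collection counts of all collectors known to the JVM.
    static long totalGcCount() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount(); // -1 when the count is undefined
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    // Allocates many short-lived arrays that never leave the loop body.
    static long allocateMany(int iterations) {
        long sum = 0;
        for (int i = 0; i < iterations; i++) {
            int[] pair = {i, 1};
            sum += pair[0] + pair[1];
        }
        return sum;
    }

    public static void main(String[] args) {
        long before = totalGcCount();
        long sum = allocateMany(100_000_000);
        long after = totalGcCount();
        System.out.println("sum=" + sum + ", collections=" + (after - before));
    }
}
```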

边栏推荐

- tarjan2

- [JVM] detailed description of the process of creating objects

- Design details of SAP e-commerce cloud footernavigationcomponent

- Xiaomi, a young engineer, was just going to make soy sauce

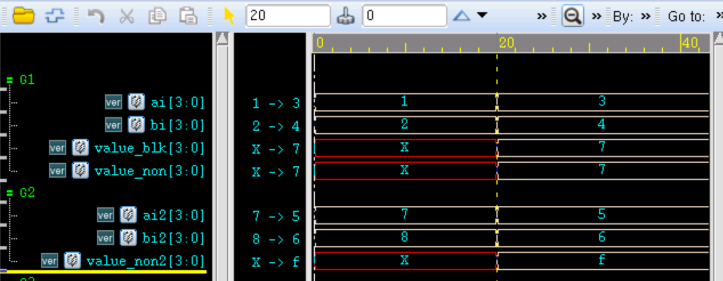

- 命名块 verilog

- 2022-2028 global manual dental cleaning equipment industry research and trend analysis report

- Verilog parallel block implementation

- Verilog reg register, vector, integer, real, time register

- buu_ re_ crackMe

- 小米青年工程师,本来只是去打个酱油

猜你喜欢

Après le mariage

Detailed explanation of the difference between Verilog process assignment

2022-2028 global encryption software industry research and trend analysis report

Just a few simple steps - start playing wechat applet

Verilog avoid latch

![Find duplicates [Abstract binary / fast and slow pointer / binary enumeration]](/img/9b/3c001c3b86ca3f8622daa7f7687cdb.png)

Find duplicates [Abstract binary / fast and slow pointer / binary enumeration]

Mmsegmentation series training and reasoning their own data set (3)

Common means of modeling: aggregation

浅谈线程池相关配置

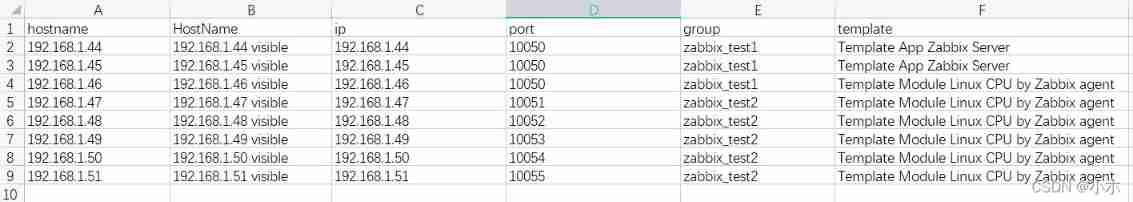

ZABBIX API creates hosts in batches according to the host information in Excel files

随机推荐

venn圖取交集

【JVM】创建对象的流程详解

After marriage

QT environment generates dump to solve abnormal crash

ZABBIX API creates hosts in batches according to the host information in Excel files

2022-2028 global manual dental cleaning equipment industry research and trend analysis report

4. Find the median of two positive arrays

West digital decided to raise the price of flash memory products immediately after the factory was polluted by materials

Intersection vengraph

[C Advanced] brother Peng takes you to play with strings and memory functions

OSPF LSA message parsing (under update)

C#聯合halcon脫離halcon環境以及各種報錯解决經曆

Xiaomi, a young engineer, was just going to make soy sauce

Common means of modeling: aggregation

On redis (II) -- cluster version

tarjan2

How to create an instance of the control defined in SAP ui5 XML view at runtime?

Special symbols in SAP ui5 data binding syntax, and detailed explanation of absolute binding and relative binding concepts

C reflection practice

Tupu software has passed CMMI5 certification| High authority and high-level certification in the international software field