
PyTorch Basics

2022-07-02 05:29:00 【Star soul is not a dream】

1. What is the difference between a dynamic graph and a static graph? What is automatic differentiation?

2. Tutorial

https://pytorch.apachecn.org/#/docs/1.7/043

Building a neural network

- Define the neural network to get a model

- Define the loss function: torch.nn.xxLoss

- Define the optimizer by constructing an optimizer object: torch.optim.xx

Training the neural network

- Forward pass

- Compute the loss with the loss function

- Backpropagation: loss.backward()

- Gradient descent: optimizer.step()

- Zero the gradients: optimizer.zero_grad()
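The build and train steps above can be sketched as one small loop. This is a minimal illustration, assuming a hypothetical toy problem (fitting y = 2x + 1 with a single nn.Linear layer, nn.MSELoss and SGD); the data, learning rate and step count are illustrative choices, not taken from the tutorial.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy data: 64 points on the line y = 2x + 1
x = torch.linspace(-1, 1, 64).unsqueeze(1)  # shape (64, 1)
y = 2 * x + 1

model = nn.Linear(1, 1)                                   # define the network -> model
loss_fn = nn.MSELoss()                                    # torch.nn.xxLoss
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)   # torch.optim.xx

for step in range(200):
    pred = model(x)            # forward pass
    loss = loss_fn(pred, y)    # compute the loss
    optimizer.zero_grad()      # clear gradients left over from the previous step
    loss.backward()            # backpropagation: fills .grad of every parameter
    optimizer.step()           # gradient descent update

print(model.weight.item(), model.bias.item())  # should end up close to 2 and 1
```

Here zero_grad() runs before backward(); calling it after step(), as in the list, works the same as long as it happens once per iteration, because .grad accumulates across backward() calls otherwise.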

Tensors

- Creating tensors

- torch.tensor(data)

- torch.from_numpy(np_array)

- torch.xx_like(data, dtype=torch.float)  # same shape as data, with the data type overridden

- torch.xx(shape), e.g. torch.rand(shape)

- Attributes

- tensor.shape, tensor.dtype, tensor.device

- Operations (e.g. move a tensor to the GPU: tensor = tensor.to('cuda'))

- Indexing and slicing

- Concatenation: torch.cat

- Element-wise multiplication: *, matrix multiplication: @

- In-place operations, e.g. tensor.add_ (they save memory but are error-prone, for example when computing derivatives)

- NumPy <--> torch (a CPU tensor and a NumPy array can share the same memory, so changing one changes the other)

- Tensor to NumPy array: tensor.numpy()

- NumPy array to tensor: torch.from_numpy(xx)
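The tensor operations listed above can be tried in one short script. This is a sketch using small illustrative values; the GPU move only runs when CUDA is available.

```python
import numpy as np
import torch

data = [[1, 2], [3, 4]]
t = torch.tensor(data)                          # from a Python list (int64)
t_rand = torch.rand_like(t, dtype=torch.float)  # same shape, dtype overridden to float32
zeros = torch.zeros((2, 3))                     # torch.xx(shape)

print(t.shape, t.dtype, t.device)               # torch.Size([2, 2]) torch.int64 cpu

if torch.cuda.is_available():
    t_gpu = t.to("cuda")                        # copy onto the GPU

first_col = t[:, 0]                             # indexing/slicing: tensor([1, 3])
joined = torch.cat([t, t], dim=1)               # concatenation along columns: shape (2, 4)
elementwise = t * t                             # tensor([[1, 4], [9, 16]])
matmul = t @ t                                  # tensor([[7, 10], [15, 22]])

t_copy = t.clone()
t_copy.add_(1)                                  # in-place: t_copy is now [[2, 3], [4, 5]]

# NumPy and torch share memory on the CPU
n = np.ones(3)
from_np = torch.from_numpy(n)
np.add(n, 1, out=n)                             # change the NumPy array in place
print(from_np)                                  # tensor([2., 2., 2.], dtype=torch.float64)

back = from_np.numpy()
from_np.mul_(2)                                 # change the tensor in place
print(back)                                     # [4. 4. 4.]
```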

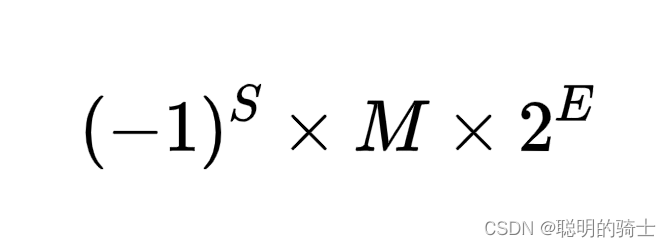

Automatic differentiation (Autograd): PyTorch's automatic differentiation engine

loss = (prediction - labels).sum()
loss.backward()  # backward pass

When we call .backward() on the error tensor, backpropagation starts. Autograd then computes the gradient for each model parameter and stores it in that parameter's .grad attribute.

optim.step()  # gradient descent

Calling .step() starts gradient descent: the optimizer adjusts each parameter using the gradient stored in its .grad.

To create a tensor whose gradient should be computed:

a = torch.tensor([2., 3.], requires_grad=True)

There are two cases for loss.backward():

- If loss is a vector, pass an explicit gradient:

external_grad = torch.tensor([1., 1.])
loss.backward(gradient=external_grad)
# gradient is a tensor with the same shape as loss; it is the gradient of loss with respect to itself, i.e. all ones.

- If loss is a scalar (for example, after aggregating a vector loss with .sum()), call backward() with no argument:

loss.sum().backward()

Computational graph:

Concept: a directed acyclic graph (DAG), like an upside-down tree. The leaves are the input tensors and the root is the output tensor.

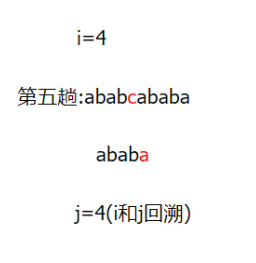
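Both backward() cases can be checked on a small vector function. This sketch assumes Q = 3a³ − b², whose gradients are known in closed form (dQ/da = 9a², dQ/db = −2b), so autograd's results can be compared against hand-computed values.

```python
import torch

a = torch.tensor([2., 3.], requires_grad=True)   # leaves of the graph
b = torch.tensor([6., 4.], requires_grad=True)
Q = 3 * a**3 - b**2                               # vector output, the root of the graph

# Case 1: vector output -> pass a gradient of the same shape (all ones)
Q.backward(gradient=torch.tensor([1., 1.]))
print(a.grad)   # 9 * a**2 -> tensor([36., 81.])
print(b.grad)   # -2 * b   -> tensor([-12., -8.])

# Case 2: aggregate to a scalar first. Gradients accumulate in .grad,
# so reset them, and rebuild Q because the old graph was freed by backward().
a.grad = None
b.grad = None
Q = 3 * a**3 - b**2
Q.sum().backward()
print(a.grad)   # same result: tensor([36., 81.])
```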

Question 1: What is a dynamic graph?

The graph is rebuilt from scratch: after each .backward() call, autograd starts populating a new graph. This is what lets a model use ordinary Python control flow and be debugged with breakpoints like any other Python code.

Even if only one input tensor has requires_grad=True, the output tensor of the operation will also require gradients.

Setting .requires_grad = False freezes parameters, which is used when fine-tuning a model.
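Freezing for fine-tuning can be sketched as follows. The model here is a hypothetical stand-in for a pretrained network (in practice it would be loaded, for example from torchvision); only the newly created last layer stays trainable, and only its parameters are handed to the optimizer.

```python
import torch
from torch import nn

# Hypothetical stand-in for a pretrained model
model = nn.Sequential(nn.Linear(10, 32), nn.ReLU(), nn.Linear(32, 5))

# Freeze every existing parameter
for param in model.parameters():
    param.requires_grad = False

# Replace the head; a freshly created layer requires gradients by default
model[2] = nn.Linear(32, 3)

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)   # ['2.weight', '2.bias']

# Optimize only the parameters that are still trainable
optimizer = torch.optim.SGD((p for p in model.parameters() if p.requires_grad), lr=0.01)
```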

The torch.no_grad() context manager (also usable as a decorator) likewise excludes the operations inside it from gradient tracking:

import torch

x = torch.tensor([1.], requires_grad=True)  # only floating-point tensors can require gradients

with torch.no_grad():
    y = x * 2
y.requires_grad  # False

@torch.no_grad()
def doubler(x):
    return x * 2

z = doubler(x)
z.requires_grad  # False

Neural networks: torch.nn

Defining a network:

import torch
from torch import nn

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Custom layers
        # self.layer_name = ...

    def forward(self, x):
        # Operations
        return x

net = Net()
print(net)

You only need to define the forward function; autograd automatically defines the backward function (which computes the gradients) for you.

The learnable parameters of the model are returned by net.parameters().

torch.nn only supports mini-batches; it does not accept a single sample as input.

For example, nn.Conv2d takes a 4D tensor of shape (b, c, h, w).

If you have only one sample, use input.unsqueeze(0) to add a fake batch dimension, giving shape (1, c, h, w).
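A filled-in version of the Net skeleton shows forward(), net.parameters() and the fake batch dimension together. The layer sizes (one conv layer, one linear layer, 32×32 single-channel input) are illustrative assumptions. Recent PyTorch releases may also accept unbatched input for some layers such as nn.Conv2d, but adding the batch dimension explicitly works regardless of version and keeps torch.flatten(x, 1) correct.

```python
import torch
from torch import nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Illustrative layer sizes
        self.conv1 = nn.Conv2d(1, 6, kernel_size=3)   # 1 input channel -> 6 output channels
        self.fc1 = nn.Linear(6 * 30 * 30, 10)         # 32x32 input shrinks to 30x30 after a 3x3 conv

    def forward(self, x):
        x = F.relu(self.conv1(x))   # (b, 6, 30, 30)
        x = torch.flatten(x, 1)     # flatten everything except the batch dimension
        return self.fc1(x)          # backward() is derived automatically by autograd

net = Net()
params = list(net.parameters())
print(len(params))   # 4: conv1.weight, conv1.bias, fc1.weight, fc1.bias

sample = torch.randn(1, 32, 32)   # a single sample (c, h, w)
batch = sample.unsqueeze(0)       # fake batch dimension -> (1, 1, 32, 32)
out = net(batch)
print(out.shape)                  # torch.Size([1, 10])
```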

Loss function: nn.MSELoss

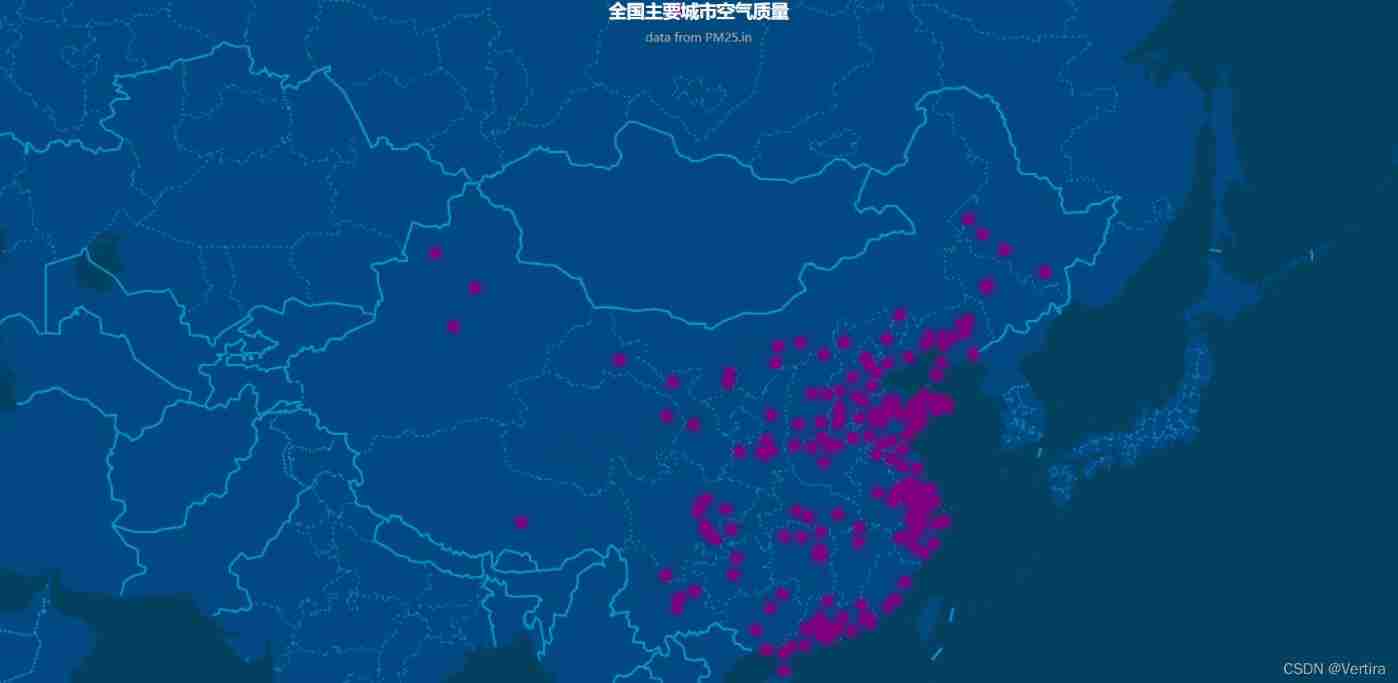
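nn.MSELoss averages the squared element-wise differences by default (reduction='mean'). A quick hand-checkable example with illustrative values:

```python
import torch
from torch import nn

pred = torch.tensor([1., 2., 3.])
target = torch.tensor([1., 0., 0.])

loss = nn.MSELoss()(pred, target)                       # (0 + 4 + 9) / 3
loss_sum = nn.MSELoss(reduction="sum")(pred, target)    # 0 + 4 + 9
print(loss.item(), loss_sum.item())                     # about 4.3333 and 13.0
```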

CV: the torchvision package provides data loaders for common datasets (torchvision.datasets), used together with torch.utils.data.DataLoader.

GPU Training

- net.to(device)

- inputs, labels = data[0].to(device), data[1].to(device)
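Both lines above assume a device object has already been chosen. A common pattern, sketched here with a hypothetical one-layer model and a random batch, falls back to the CPU when no GPU is present, so the same script runs either way.

```python
import torch
from torch import nn

# Use the first GPU if there is one, otherwise the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

net = nn.Linear(4, 2)
net.to(device)    # moves the module's parameters onto the device

# Hypothetical batch in the (inputs, labels) form a DataLoader yields
data = (torch.randn(8, 4), torch.randint(0, 2, (8,)))
inputs, labels = data[0].to(device), data[1].to(device)

outputs = net(inputs)   # model and inputs must live on the same device
print(outputs.device)
```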

Define a new Autograd function

You can define your own autograd operator by subclassing torch.autograd.Function and implementing the forward and backward functions. The new operator is then called like a function through its apply method, passing the tensors that contain the input data.

import torch

class LegendrePolynomial3(torch.autograd.Function):
    """
    We can implement our own custom autograd Functions by subclassing
    torch.autograd.Function and implementing the forward and backward passes
    which operate on Tensors.
    """

    @staticmethod
    def forward(ctx, input):
        """
        In the forward pass we receive a Tensor containing the input and return
        a Tensor containing the output. ctx is a context object that can be used
        to stash information for backward computation. You can cache arbitrary
        objects for use in the backward pass using the ctx.save_for_backward method.
        """
        ctx.save_for_backward(input)
        return 0.5 * (5 * input ** 3 - 3 * input)

    @staticmethod
    def backward(ctx, grad_output):
        """
        In the backward pass we receive a Tensor containing the gradient of the loss
        with respect to the output, and we need to compute the gradient of the loss
        with respect to the input.
        """
        input, = ctx.saved_tensors
        return grad_output * 1.5 * (5 * input ** 2 - 1)

Usage:

P3 = LegendrePolynomial3.apply
y_pred = a + b * P3(c + d * x)  # a, b, c, d: learnable weight tensors; x: input (defined elsewhere)

You can use ordinary Python control flow (such as a for loop) to implement loops, and you can implement weight sharing by simply reusing the same parameters several times when defining forward.
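A hand-written backward() is easy to get wrong, so it is worth checking against numerical differentiation. This sketch repeats the LegendrePolynomial3 class so it runs on its own, verifies it with torch.autograd.gradcheck (which expects double-precision inputs that require gradients), and then reuses one hypothetical parameter d inside a Python loop to illustrate weight sharing.

```python
import torch

class LegendrePolynomial3(torch.autograd.Function):
    @staticmethod
    def forward(ctx, input):
        ctx.save_for_backward(input)
        return 0.5 * (5 * input ** 3 - 3 * input)

    @staticmethod
    def backward(ctx, grad_output):
        input, = ctx.saved_tensors
        # d/dx [0.5 * (5x^3 - 3x)] = 1.5 * (5x^2 - 1)
        return grad_output * 1.5 * (5 * input ** 2 - 1)

P3 = LegendrePolynomial3.apply

# Compare the analytical backward with finite differences
x = torch.randn(5, dtype=torch.double, requires_grad=True)
ok = torch.autograd.gradcheck(P3, (x,))
print(ok)   # True

# Weight sharing via ordinary control flow: the same d is used in every iteration
d = torch.tensor(0.5, requires_grad=True)
h = torch.tensor(1.0)
for _ in range(3):
    h = P3(d * h)
h.backward()
print(d.grad)   # gradient accumulated over all three uses of d
```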

Using Dataset

TensorDataset is a dataset that wraps tensors. By defining a length and a way of indexing, it also gives us a way to iterate over, index, and slice along the first dimension of the tensors.

from torch.utils.data import TensorDataset

train_ds = TensorDataset(x_train, y_train)

xb,yb = train_ds[i*bs : i*bs+bs]
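Indexing a TensorDataset returns one entry per wrapped tensor, taken along the first dimension, so the slice above yields a whole mini-batch of inputs and labels. A small sketch with hypothetical data (10 samples, 3 features each):

```python
import torch
from torch.utils.data import TensorDataset

# Hypothetical data: 10 samples with 3 features, one label per sample
x_train = torch.arange(30, dtype=torch.float32).reshape(10, 3)
y_train = torch.arange(10)

train_ds = TensorDataset(x_train, y_train)
print(len(train_ds))                     # 10: size of the first dimension

x0, y0 = train_ds[0]                     # a single sample: (features, label)

bs, i = 4, 1
xb, yb = train_ds[i * bs : i * bs + bs]  # slicing gives samples 4..7
print(xb.shape, yb)                      # torch.Size([4, 3]) tensor([4, 5, 6, 7])
```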

Using DataLoader

DataLoader is responsible for managing batches, which makes iteration easier.

from torch.utils.data import DataLoader

train_dl = DataLoader(train_ds, batch_size=bs)
for xb, yb in train_dl:
    pred = model(xb)

# Validation set
valid_ds = TensorDataset(x_valid, y_valid)
valid_dl = DataLoader(valid_ds, batch_size=bs * 2)

Always call model.train() before training and model.eval() before inference, because layers such as nn.BatchNorm2d and nn.Dropout use these modes to make sure they behave correctly in each phase.
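The effect of the two modes is easy to see on a single nn.Dropout layer (p=0.5 and the all-ones input are illustrative choices). In training mode some entries are zeroed and the survivors are scaled by 1/(1 − p); in evaluation mode the layer passes its input through unchanged.

```python
import torch
from torch import nn

torch.manual_seed(0)
dropout = nn.Dropout(p=0.5)
x = torch.ones(8)

dropout.train()           # training mode (the default for a new module)
print(dropout(x))         # a mix of 0s and 2s: survivors are scaled by 1 / (1 - 0.5)

dropout.eval()            # evaluation mode: dropout does nothing
print(dropout(x))         # all ones
print(dropout.training)   # False
```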

model.eval()
with torch.no_grad():
    valid_loss = sum(loss_func(model(xb), yb) for xb, yb in valid_dl)

Creating fit() and get_data()

import numpy as np
import torch
from torch.utils.data import DataLoader

def loss_batch(model, loss_func, xb, yb, opt=None):
    loss = loss_func(model(xb), yb)
    if opt is not None:  # only update the weights when an optimizer is given (training)
        loss.backward()
        opt.step()
        opt.zero_grad()
    return loss.item(), len(xb)

def fit(epochs, model, loss_func, opt, train_dl, valid_dl):
    for epoch in range(epochs):
        model.train()
        for xb, yb in train_dl:
            loss_batch(model, loss_func, xb, yb, opt)

        model.eval()
        with torch.no_grad():
            losses, nums = zip(
                *[loss_batch(model, loss_func, xb, yb) for xb, yb in valid_dl]
            )
        # validation loss averaged over samples (each batch weighted by its size)
        val_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)
        print(epoch, val_loss)

def get_data(train_ds, valid_ds, bs):
    return (
        DataLoader(train_ds, batch_size=bs, shuffle=True),  # reshuffle training data every epoch
        DataLoader(valid_ds, batch_size=bs * 2),
    )

# get_model(), loss_func, epochs and bs are assumed to be defined beforehand
train_dl, valid_dl = get_data(train_ds, valid_ds, bs)
model, opt = get_model()
fit(epochs, model, loss_func, opt, train_dl, valid_dl)

nn.Sequential

A Sequential object runs each of the modules it contains in order.

You can define a custom layer from a given function and then use that layer when defining the network with Sequential.
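Turning a plain function into a layer needs a small nn.Module wrapper. In this sketch, Lambda and preprocess are illustrative names (the official PyTorch tutorials use the same idea), and the layer sizes assume flattened 28×28 single-channel images.

```python
import torch
from torch import nn

class Lambda(nn.Module):
    """Wraps an ordinary function so it can be used as a layer in nn.Sequential."""
    def __init__(self, func):
        super().__init__()
        self.func = func

    def forward(self, x):
        return self.func(x)

def preprocess(x):
    # flat 784-pixel rows -> (batch, channel, height, width)
    return x.view(-1, 1, 28, 28)

model = nn.Sequential(
    Lambda(preprocess),
    nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),  # 28x28 -> 14x14
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),                               # -> (batch, 16, 1, 1)
    Lambda(lambda x: x.view(x.size(0), -1)),               # -> (batch, 16)
)

out = model(torch.randn(4, 784))
print(out.shape)   # torch.Size([4, 16])
```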

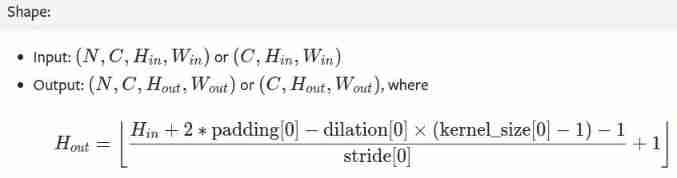

1. Conv2d

CLASS torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

dilation: spacing between kernel elements. Default: 1
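The spatial output size of nn.Conv2d follows the formula in its documentation, floor((size + 2·padding − dilation·(kernel_size − 1) − 1) / stride) + 1, applied per dimension. This sketch computes it by hand (conv2d_out is a hypothetical helper) and compares it with a real layer; with dilation=2 a 3×3 kernel covers a 5×5 area.

```python
import torch
from torch import nn

def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    # per-dimension output size from the nn.Conv2d documentation
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

conv = nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3, stride=2, padding=1, dilation=2)
y = conv(torch.randn(1, 3, 32, 32))

print(y.shape)                                             # torch.Size([1, 8, 15, 15])
print(conv2d_out(32, 3, stride=2, padding=1, dilation=2))  # 15
```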

2. MaxPool2d

CLASS torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)
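With stride=None, nn.MaxPool2d uses stride = kernel_size, so a 2×2 pool halves each spatial dimension. ceil_mode=True rounds the output size up instead of down. A small sketch on a 4×4 input with known values:

```python
import torch
from torch import nn

pool = nn.MaxPool2d(kernel_size=2)   # stride defaults to kernel_size
x = torch.arange(16, dtype=torch.float32).reshape(1, 1, 4, 4)
y = pool(x)
print(y)   # maxima of the four 2x2 windows: 5, 7, 13, 15

odd = torch.randn(1, 1, 5, 5)
print(pool(odd).shape)                                         # torch.Size([1, 1, 2, 2])
print(nn.MaxPool2d(kernel_size=2, ceil_mode=True)(odd).shape)  # torch.Size([1, 1, 3, 3])
```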
