
Overview of Spark RDD

2022-07-06 02:04:00 【Diligent ls】

I. What is an RDD?

An RDD (Resilient Distributed Dataset) is the most basic data abstraction in Spark.

In code it is an abstract class. It represents a resilient, immutable, partitionable collection of elements that can be computed in parallel.

1. Resilient

Storage resilience: data switches automatically between memory and disk

Fault-tolerance resilience: lost data can be recovered automatically

Computation resilience: a failed computation is retried

Partitioning resilience: data can be repartitioned as needed

2. Distributed

The data is stored on different nodes of the big data cluster

3. A dataset that does not store data

An RDD encapsulates computation logic; it does not hold the data itself

4. Data abstraction

RDD is an abstract class; concrete subclasses provide the implementation

5. Immutable

An RDD encapsulates computation logic and cannot be changed. To change anything you must produce a new RDD and encapsulate the new computation logic in it

6. Partitionable, computed in parallel

Note: the logic inside RDD operators is executed on the Executor side; everything outside the operators is executed on the Driver side.

In Spark, the computation in an RDD is executed only when an action operator is encountered. This is lazy (deferred) evaluation.
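Points 3, 5 and the lazy evaluation described above can be illustrated with a minimal sketch in plain Python. `ToyRDD` and its methods are hypothetical names invented for this illustration; they only mimic the idea and are not Spark's API.

```python
from typing import Callable, Iterable, List


class ToyRDD:
    """Toy stand-in for an RDD: holds computation logic, not computed results."""

    def __init__(self, source: Callable[[], Iterable], log: List[str]):
        self._source = source  # how to produce the elements, not the elements
        self._log = log        # records when work really happens

    def map(self, f: Callable) -> "ToyRDD":
        # Transformation: returns a NEW ToyRDD and computes nothing yet.
        def compute():
            for x in self._source():
                self._log.append(f"map({x})")
                yield f(x)
        return ToyRDD(compute, self._log)

    def filter(self, pred: Callable) -> "ToyRDD":
        # Transformation: also lazy, also returns a new object.
        def compute():
            return (x for x in self._source() if pred(x))
        return ToyRDD(compute, self._log)

    def collect(self) -> list:
        # Action: only now is the chain of transformations evaluated.
        return list(self._source())


log: List[str] = []
base = ToyRDD(lambda: iter([1, 2, 3, 4]), log)
doubled = base.map(lambda x: x * 2)
big = doubled.filter(lambda x: x > 4)
work_before_action = list(log)   # snapshot taken before any action runs
result = big.collect()           # the action triggers the computation
```

In this sketch `work_before_action` stays empty because `map` and `filter` only wrap the computation, and `base` is left untouched by the transformations applied to it.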

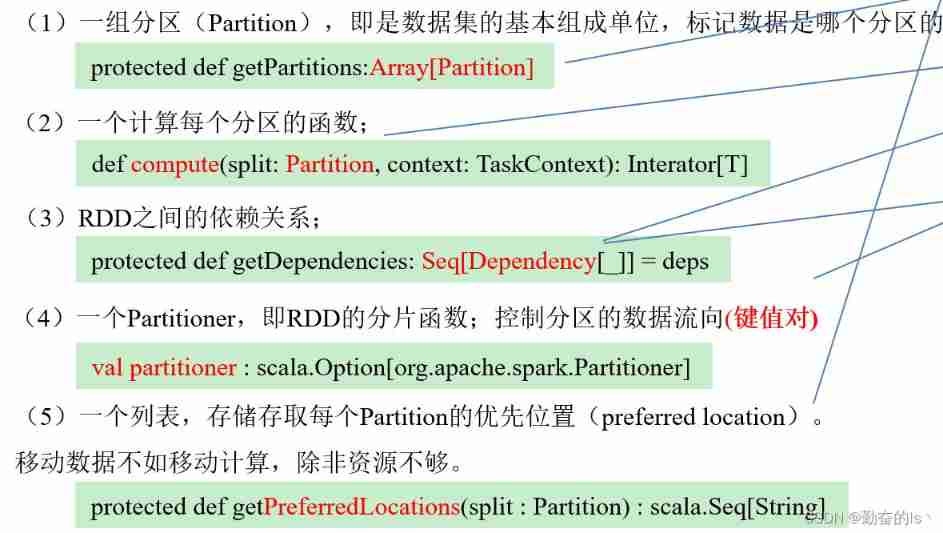

II. The five properties of an RDD

1)A list of partitions

An RDD consists of many partitions. When Spark runs a computation, one task is executed per partition
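The one-task-per-partition relationship can be sketched in plain Python. The helper names `split_into_partitions` and `run_task` are hypothetical, and a thread pool stands in for the cluster; this is an illustration of the idea, not how Spark schedules work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_partitions(data: list, num_partitions: int) -> List[list]:
    """Cut `data` into `num_partitions` contiguous slices of near-equal size."""
    n = len(data)
    return [data[i * n // num_partitions:(i + 1) * n // num_partitions]
            for i in range(num_partitions)]


def run_task(partition: list) -> int:
    """One 'task': processes exactly one partition."""
    return sum(x * x for x in partition)


data = list(range(10))
partitions = split_into_partitions(data, 3)

# One task per partition; the tasks are independent and can run in parallel.
with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
    partial_sums = list(pool.map(run_task, partitions))

total = sum(partial_sums)
```

Three partitions yield three tasks, and combining the per-partition results gives the same answer as processing the whole list at once.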

2)A function for computing each split

Computing an RDD amounts to computing each of its splits (partitions)

3)A list of dependencies on other RDDs

RDDs depend on one another, so the lineage of an RDD can be traced back
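A minimal sketch of such a dependency chain, in plain Python: each node remembers its parent and the function that derives it, so its lineage can be walked back and its data rebuilt from the root. `LineageNode` is a hypothetical class for illustration only and handles just a single-parent chain.

```python
from typing import Callable, List, Optional


class LineageNode:
    """Toy RDD node: a name, an optional parent, and a per-element function."""

    def __init__(self, name: str, parent: Optional["LineageNode"] = None,
                 fn: Optional[Callable] = None, source: Optional[list] = None):
        self.name = name
        self.parent = parent   # dependency on another node
        self.fn = fn           # how to derive an element from the parent
        self.source = source   # only the root node has source data

    def lineage(self) -> List[str]:
        """Walk the dependencies back to the root."""
        node, chain = self, []
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return chain

    def compute(self) -> list:
        """Rebuild this node's data from its ancestors, e.g. after a loss."""
        if self.parent is None:
            return list(self.source)
        return [self.fn(x) for x in self.parent.compute()]


root = LineageNode("source", source=[1, 2, 3])
plus_one = LineageNode("plus_one", parent=root, fn=lambda x: x + 1)
squared = LineageNode("squared", parent=plus_one, fn=lambda x: x * x)
```

Because `squared` stores only its parent and its function, calling `squared.compute()` can regenerate its contents at any time, which is the idea behind the fault-tolerance resilience mentioned earlier.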

4)Optionally, a Partitioner for key-value RDDs (e.g. to say that the RDD is hash-partitioned)

If the data in an RDD is in key-value form, you can supply a custom Partitioner to repartition it, for example by the hash value of the key
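Partitioning by the hash of the key can be sketched as follows in plain Python. The function names are hypothetical, and `zlib.crc32` is used only as a hash that is stable across runs; it is an assumption of this sketch, not the hash Spark uses.

```python
import zlib
from typing import Dict, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index in [0, num_partitions)."""
    # crc32 is a stand-in hash, chosen because it is deterministic.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_by_key(pairs: List[Tuple[str, int]],
                     num_partitions: int) -> Dict[int, List[Tuple[str, int]]]:
    """Group key-value pairs into buckets by the hash of their key."""
    buckets: Dict[int, List[Tuple[str, int]]] = {
        i: [] for i in range(num_partitions)
    }
    for key, value in pairs:
        buckets[partition_for(key, num_partitions)].append((key, value))
    return buckets


pairs = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
buckets = partition_by_key(pairs, 2)
```

The useful property is that all pairs sharing a key land in the same partition, so per-key operations can run inside one partition without looking at the others.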

5)Optionally, a list of preferred locations to compute each split on (e.g. block locations for an HDFS file)

The preferred locations for the computation, that is, data locality

When computing a split, it is best to run the task on the machine where that split is stored, which avoids moving data. A split has multiple replicas, so there can be more than one preferred location

Jobs should preferentially be scheduled on the machines where the data resides. This reduces data I/O and network transfer and so shortens the job's running time (the barrel principle: a job's running time is determined by its slowest task), which improves performance
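The locality preference and the barrel principle can be sketched in plain Python. Node names, split names, task times and the `choose_node` helper are all hypothetical; the sketch ignores slot counts and assumes every task runs fully in parallel.

```python
from typing import Dict, List, Set


def choose_node(preferred: List[str], free_nodes: Set[str]) -> str:
    """Pick a free node holding a replica if possible, else any free node."""
    for node in preferred:
        if node in free_nodes:
            return node            # data-local: no data movement needed
    return sorted(free_nodes)[0]   # fallback: data must cross the network


# Hypothetical replica placement: split name -> nodes holding a copy.
replicas: Dict[str, List[str]] = {
    "split-0": ["node-a", "node-d"],
    "split-1": ["node-c"],
}
free = {"node-b", "node-d"}

placement = {split: choose_node(nodes, free)
             for split, nodes in replicas.items()}

# Barrel principle: with all tasks in parallel, the slowest one sets the pace.
task_seconds = [3.0, 5.0, 4.0]
job_seconds = max(task_seconds)
```

Here `split-0` runs on `node-d`, which holds one of its replicas, while `split-1` has no free replica holder and falls back to another node, which is exactly the case locality-aware scheduling tries to avoid.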

The description of the five properties is reproduced from (https://www.jianshu.com/p/650d6e33914b)

边栏推荐

- 【Flask】响应、session与Message Flashing

- 竞价推广流程

- How to use C to copy files on UNIX- How can I copy a file on Unix using C?

- [Jiudu OJ 09] two points to find student information

- Sword finger offer 12 Path in matrix

- [flask] official tutorial -part2: Blueprint - view, template, static file

- 【网络攻防实训习题】

- Blue Bridge Cup embedded_ STM32_ New project file_ Explain in detail

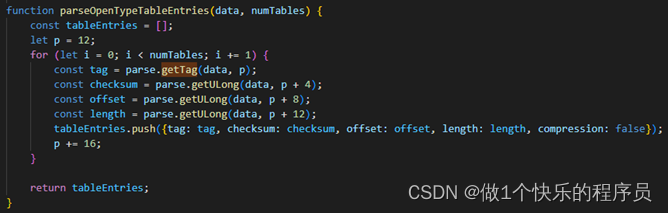

- Extracting key information from TrueType font files

- 同一个 SqlSession 中执行两条一模一样的SQL语句查询得到的 total 数量不一样

猜你喜欢

![NLP fourth paradigm: overview of prompt [pre train, prompt, predict] [Liu Pengfei]](/img/11/a01348dbfcae2042ec9f3e40065f3a.png)

NLP fourth paradigm: overview of prompt [pre train, prompt, predict] [Liu Pengfei]

![[solution] every time idea starts, it will build project](/img/fc/e68f3e459768abb559f787314c2124.jpg)

[solution] every time idea starts, it will build project

![[understanding of opportunity-39]: Guiguzi - Chapter 5 flying clamp - warning 2: there are six types of praise. Be careful to enjoy praise as fish enjoy bait.](/img/3c/ec97abfabecb3f0c821beb6cfe2983.jpg)

[understanding of opportunity-39]: Guiguzi - Chapter 5 flying clamp - warning 2: there are six types of praise. Be careful to enjoy praise as fish enjoy bait.

PHP campus movie website system for computer graduation design

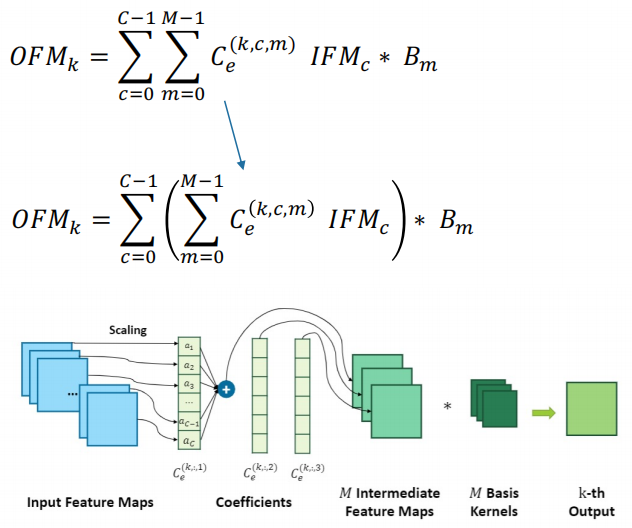

干货!通过软硬件协同设计加速稀疏神经网络

Extracting key information from TrueType font files

![Grabbing and sorting out external articles -- status bar [4]](/img/1e/2d44f36339ac796618cd571aca5556.png)

Grabbing and sorting out external articles -- status bar [4]

02.Go语言开发环境配置

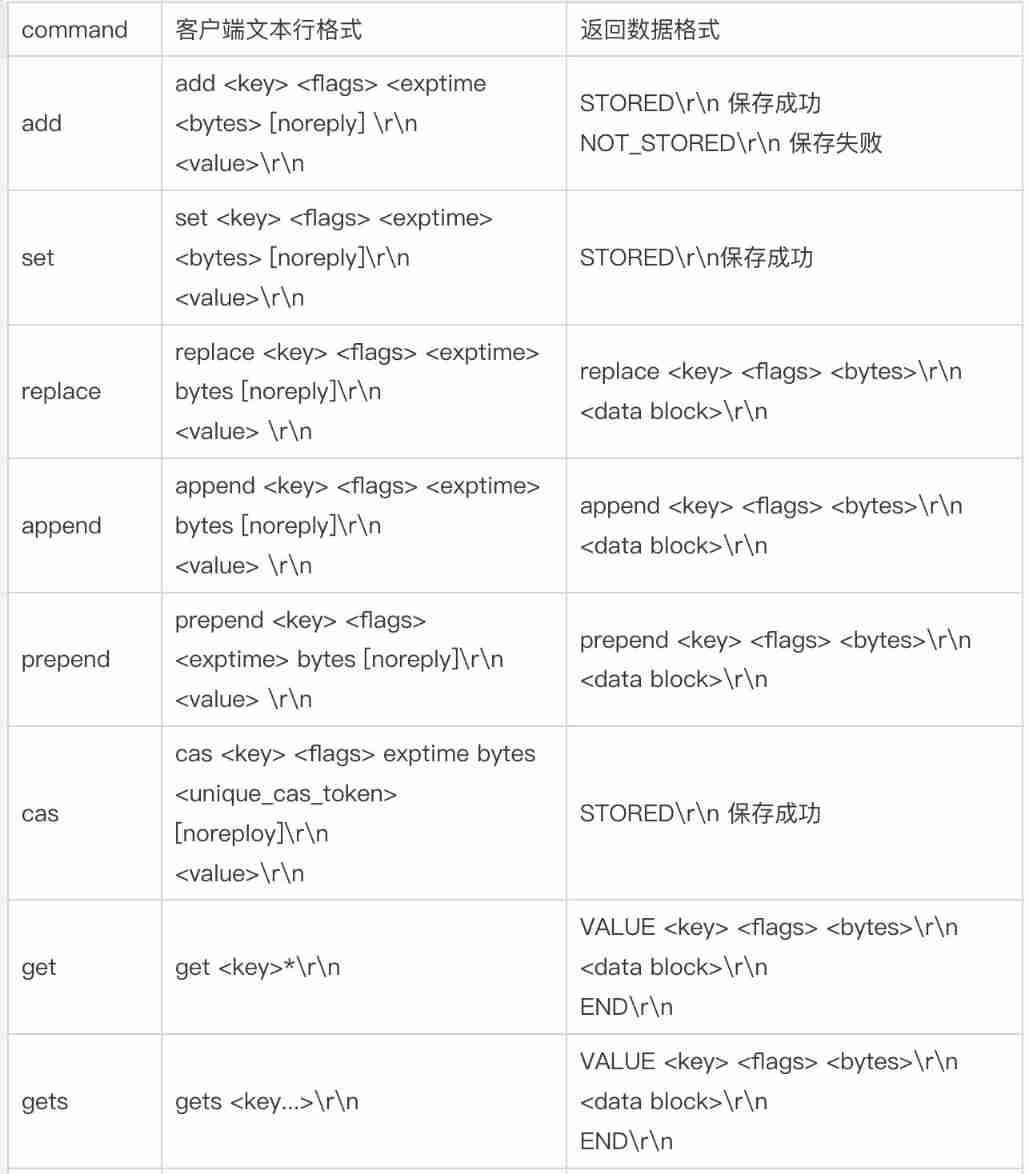

500 lines of code to understand the principle of mecached cache client driver

SQL statement

随机推荐

[Jiudu OJ 09] two points to find student information

Cadre du Paddle: aperçu du paddlelnp [bibliothèque de développement pour le traitement du langage naturel des rames volantes]

Basic operations of database and table ----- set the fields of the table to be automatically added

阿里测开面试题

leetcode-2.回文判断

Leetcode skimming questions_ Sum of squares

Redis-字符串类型

Basic operations of databases and tables ----- primary key constraints

Competition question 2022-6-26

1. Introduction to basic functions of power query

NLP fourth paradigm: overview of prompt [pre train, prompt, predict] [Liu Pengfei]

Leetcode3, implémenter strstr ()

干货!通过软硬件协同设计加速稀疏神经网络

[network attack and defense training exercises]

dried food! Accelerating sparse neural network through hardware and software co design

SPI communication protocol

TrueType字体文件提取关键信息

Computer graduation design PHP part-time recruitment management system for College Students

ClickOnce does not support request execution level 'requireAdministrator'

How to set an alias inside a bash shell script so that is it visible from the outside?