
Let's see how the MATLAB toolbox implements a BP neural network internally

2022-07-07 01:30:00 【Lao Bing Explains: BP Neural Networks】

Contents

1、 Reproducing the toolbox's result from its source code

2、 The main training flow

3、 Where the difference in results comes from

4、 Comparing different training methods

5、 Related articles

Original article. If you repost it, please credit 《Lao Bing Explains: BP Neural Networks》 bp.bbbdata.com

If we train a BP neural network directly with a gradient descent method of our own, the result is often not as good as what the MATLAB toolbox produces.

This puzzled me for a long time, so let's dig into the source code and see how the MATLAB toolbox implements a BP neural network, and why our own training is less effective than the toolbox's.

1、 Reproducing the toolbox's result from its source code

I extracted the source code of the toolbox's gradient descent algorithm, traingd, worked out its algorithm flow and reimplemented it.

The weights of a BP neural network with 2 hidden layers, obtained by running my own code:

The weights obtained by calling the toolbox's newff:

As you can see, the two results are identical, which shows that the toolbox's BP training logic has been fully understood and reproduced.

2、 The main training flow

The main flow of training a BP neural network with gradient descent is:

1. Initialize the weights and thresholds (biases).
2. Update the weights and thresholds iteratively using the gradient.
3. Stop training once a termination condition is met.

Training terminates when the error reaches the goal, the gradient becomes too small, or the maximum number of iterations is reached.

The code is as follows:

```matlab
function [W,B] = traingdBPNet(X,y,hnn,goal,maxStep)
% Gradient descent training of a BP network, following the traingd logic.
% initWB, predictBpNet, calMSE, calGrad and calGradLen are the author's
% helper functions; they are not listed in this article.

%------ Pre-computed variables and parameter settings ------
lr       = 0.01;     % learning rate
min_grad = 1.0e-5;   % minimum gradient

%------ Initialize W and B ------
[W,B] = initWB(X,y,hnn);                   % initialize weights W and thresholds B

%------ Start training ------
for t = 1:maxStep
    % Compute the current gradient
    [py,layerVal] = predictBpNet(W,B,X);   % network predictions and layer outputs
    [E2,E]        = calMSE(y,py);          % error: E2 is checked against goal, E goes to calGrad
    [gW,gB]       = calGrad(W,layerVal,E); % gradient for every layer's W and B

    %------ Check whether an exit condition is met ------
    gradLen = calGradLen(gW,gB);           % length (norm) of the gradient
    % Stop if the error has reached the goal or the gradient is too small
    if E2 < goal || gradLen <= min_grad
        break;
    end

    %------ Update weights and thresholds ------
    % gW and gB are added, so calGrad is expected to return the
    % descent direction (the negative gradient of the error)
    for i = 1:size(W,2)-1
        W{i,i+1} = W{i,i+1} + lr * gW{i,i+1};  % update weights
        B{i+1}   = B{i+1}   + lr * gB{i+1};    % update thresholds
    end
end
end
```

(In this reproduction we leave out normalization and generalization validation, since these two operations are general to all the training algorithms.)
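Because the helper functions above are not listed, here is a minimal self-contained sketch of the same loop, assuming a single input, one tansig (tanh) hidden layer and a purelin output. The toy data, sizes, learning rate and variable names are all hypothetical, and it uses plain uniform random initialization rather than the toolbox's, so it will not reproduce the toolbox's weights. Unlike the loop above, it computes the actual gradient of the MSE and subtracts it.

```matlab
% Hypothetical toy data: 1 input, 1 output, 21 samples (one sample per column)
X = linspace(-1, 1, 21);
y = sin(2 * X);

H = 5;            % hidden neurons (assumed)
lr = 0.05;        % fixed learning rate, traingd-style (value assumed)
goal = 1e-4;      % target MSE
min_grad = 1e-5;  % stop when the gradient norm falls below this
maxStep = 2000;

% Plain uniform random initialization in [-1, 1] (NOT Nguyen-Widrow)
W1 = 2*rand(H, 1) - 1;  b1 = 2*rand(H, 1) - 1;   % input -> hidden
W2 = 2*rand(1, H) - 1;  b2 = 2*rand - 1;         % hidden -> output

N = numel(X);
E2_hist = zeros(1, maxStep);
for t = 1:maxStep
    % Forward pass: tanh hidden layer, linear output
    A1 = tanh(W1*X + b1*ones(1, N));   % H x N
    py = W2*A1 + b2;                   % 1 x N
    E = py - y;
    E2 = mean(E.^2);                   % MSE
    E2_hist(t) = E2;

    % Backward pass: gradients of the MSE
    dpy = 2*E/N;                       % dMSE/dpy, 1 x N
    gW2 = dpy*A1';                     % 1 x H
    gb2 = sum(dpy);
    dA1 = (W2'*dpy) .* (1 - A1.^2);    % H x N, since tanh' = 1 - tanh^2
    gW1 = dA1*X';                      % H x 1
    gb1 = sum(dA1, 2);                 % H x 1

    % Exit conditions: error goal reached or gradient too small
    gradLen = sqrt(sum(gW1.^2) + sum(gb1.^2) + sum(gW2.^2) + gb2^2);
    if E2 < goal || gradLen <= min_grad
        break;
    end

    % Gradient descent step: move against the gradient
    W1 = W1 - lr*gW1;  b1 = b1 - lr*gb1;
    W2 = W2 - lr*gW2;  b2 = b2 - lr*gb2;
end
E2_hist = E2_hist(1:t);
fprintf('stopped at step %d, MSE = %.6f\n', t, E2);
```

With a fixed, small learning rate this loop typically lowers the error slowly, which is the behaviour the article attributes to hand-written gradient descent.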

3、 Where the difference in results comes from

Source of the difference

The main flow is no different from the standard algorithm found in tutorials, so why does MATLAB get better results?

The main reason is initialization.

Many tutorials recommend random initialization, but the MATLAB toolbox actually initializes the network with the Nguyen-Widrow method.

The Nguyen-Widrow method

The idea behind Nguyen-Widrow initialization is as follows:

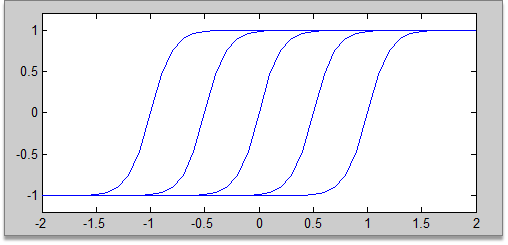

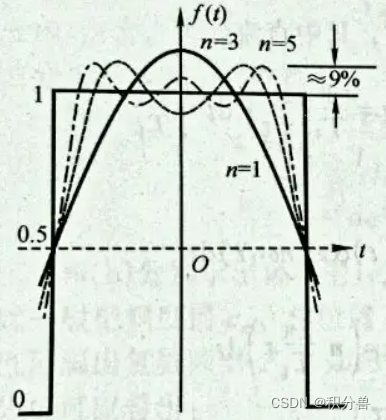
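As the rule is commonly summarized from the original paper (the notation here is ours, and MATLAB's own implementation may differ in details), for a network with $n$ inputs scaled to $[-1, 1]$ and $H$ hidden neurons, the weight vector $w_j$ and bias $b_j$ of hidden neuron $j$ are initialized as:

$$
\begin{aligned}
\beta &= 0.7\,H^{1/n},\\
w_j &\leftarrow \beta\,\frac{w_j}{\lVert w_j \rVert}, \qquad w_j \text{ first drawn uniformly from } [-0.5,\,0.5]^n,\\
b_j &\sim \mathcal{U}(-\beta,\,\beta).
\end{aligned}
$$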

Taking a single-input network as an example, it initializes the network in the following form:

Its purpose is to spread the hidden nodes evenly over the range of the input data.

The reasoning is this: if every neuron of the trained BP network ends up being fully used, the final network should look much like the distribution above (the input range is fully covered and every neuron is put to use).

So rather than initializing randomly and adjusting slowly, it is better to start from such an initialization in the first place.
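For a single input, the idea can be sketched directly: give every hidden tansig neuron the same slope magnitude and place the point where its net input is zero (its "centre") evenly across the input range. The input range, the number of hidden neurons and the evenly spaced centres are assumptions of this sketch, which is not a copy of MATLAB's initnw; the magnitude 0.7*H^(1/n) with n = 1 follows the paper's rule.

```matlab
% Hypothetical example: single-input network with H tansig hidden neurons
xmin = -3; xmax = 5;          % assumed input range
H = 6;                        % assumed number of hidden neurons
beta = 0.7 * H;               % Nguyen-Widrow magnitude 0.7*H^(1/n) with n = 1

% Work in the normalized input u in [-1, 1]:
% neuron j computes tanh(wu(j)*u + bu(j)); its centre is u = -bu(j)/wu(j)
s = sign(rand(H, 1) - 0.5); s(s == 0) = 1;   % random slope directions
wu = beta * s;                               % every slope has magnitude beta
centres_u = linspace(-1, 1, H)';             % evenly spaced centres in [-1, 1]
bu = -wu .* centres_u;

% Map back to the raw input x using u = a*x + c
a = 2 / (xmax - xmin);
c = -1 - a * xmin;
w = wu * a;                   % weights acting on x
b = wu * c + bu;              % biases acting on x

centres_x = -b ./ w;          % centres in original input units
disp(centres_x')
```

The printed centres are evenly spaced from xmin to xmax, so each hidden neuron starts out covering its own slice of the input range.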

The method comes from the original paper:

Derrick Nguyen and Bernard Widrow, 《Improving the Learning Speed of 2-Layer Neural Networks by Choosing Initial Values of the Adaptive Weights》

4、 Comparing different training methods

Comparison of results

I also compared traingd, traingda and trainlm on the same problem and found that where traingd fails to train the network, traingda succeeds, and where traingda fails, trainlm succeeds in turn.

In terms of training effectiveness, then:

traingd < traingda < trainlm

So a gradient descent method we write ourselves still falls far short of the MATLAB toolbox.

The MATLAB BP neural network uses the trainlm algorithm by default.
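Since trainlm is the default, here is a minimal sketch of the Levenberg-Marquardt update it is named after, applied to a hypothetical two-parameter curve fit rather than to a network. Each step solves (J'J + mu*I) dp = J'e, where J'J stands in for the second derivative; mu is decreased after a successful step and increased after a failed one. The model, data and starting point are assumptions, and although the mu values mirror trainlm's documented defaults (mu, mu_dec, mu_inc, mu_max), this is not the toolbox's code.

```matlab
% Hypothetical noiseless data from y = a*exp(b*x) with a = 2, b = -3
x = linspace(0, 1, 20)';
y = 2 * exp(-3 * x);
model = @(p) p(1) * exp(p(2) * x);   % p = [a; b]

p = [1; -1];                         % starting guess
mu = 0.001; mu_dec = 0.1; mu_inc = 10; mu_max = 1e10;

for k = 1:50
    e = y - model(p);                               % residuals
    J = [exp(p(2)*x), p(1) * x .* exp(p(2)*x)];     % Jacobian of the model w.r.t. [a; b]
    sse = e' * e;
    while mu <= mu_max
        dp = (J'*J + mu*eye(2)) \ (J'*e);           % damped Gauss-Newton (LM) step
        eNew = y - model(p + dp);
        if eNew' * eNew < sse
            p = p + dp;                             % accept; smaller mu -> closer to Gauss-Newton
            mu = mu * mu_dec;
            break;
        end
        mu = mu * mu_inc;                           % reject; larger mu -> closer to gradient descent
    end
    if mu > mu_max, break; end                      % no further improvement possible
end
fprintf('a = %.6f, b = %.6f\n', p(1), p(2));
```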

A brief explanation

So why is traingda stronger than traingd, and why is trainlm stronger than traingda?

The source code shows that the main difference is that traingda adds an adaptive learning rate,

while trainlm uses second-derivative (curvature) information, approximated from the Jacobian, which makes learning faster.
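The adaptive learning-rate rule can be sketched as below, following the behaviour described in MATLAB's traingda documentation: a step that raises the error by more than the ratio max_perf_inc is discarded and the learning rate is multiplied by lr_dec; otherwise the step is kept, and if the error fell the learning rate is multiplied by lr_inc. The values 0.01, 1.05, 0.7 and 1.04 are meant to mirror the toolbox defaults, and the ill-conditioned quadratic error surface is a hypothetical stand-in for a network's error.

```matlab
% Hypothetical ill-conditioned error surface E(w) = 0.5*w'*A*w
A = diag([1, 50]);
Efun = @(w) 0.5 * w' * A * w;
gradE = @(w) A * w;

lr = 0.01; lr_inc = 1.05; lr_dec = 0.7; max_perf_inc = 1.04;  % traingda-style settings
w = [1; 1];
perf = Efun(w);
for t = 1:300
    wNew = w - lr * gradE(w);          % ordinary gradient descent trial step
    perfNew = Efun(wNew);
    if perfNew > perf * max_perf_inc
        lr = lr * lr_dec;              % error grew too much: discard the step, shrink lr
    else
        if perfNew < perf
            lr = lr * lr_inc;          % error fell: grow lr for the next step
        end
        w = wNew; perf = perfNew;      % keep the step
    end
end
fprintf('final error %.3e, final lr %.4f\n', perf, lr);
```

The learning rate grows while steps succeed and is cut back as soon as a step overshoots, so the method keeps probing for the largest step size the error surface tolerates instead of using one fixed value.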

5、 Related articles

See: 《Rewriting the traingd code (gradient descent method)》

See: This is the algorithm logic of gradient descent in the MATLAB neural network toolbox. It's that simple~!

边栏推荐

猜你喜欢

子网划分、构造超网 典型题

对C语言数组的再认识

【信号与系统】

Body mass index program, entry to write dead applet project

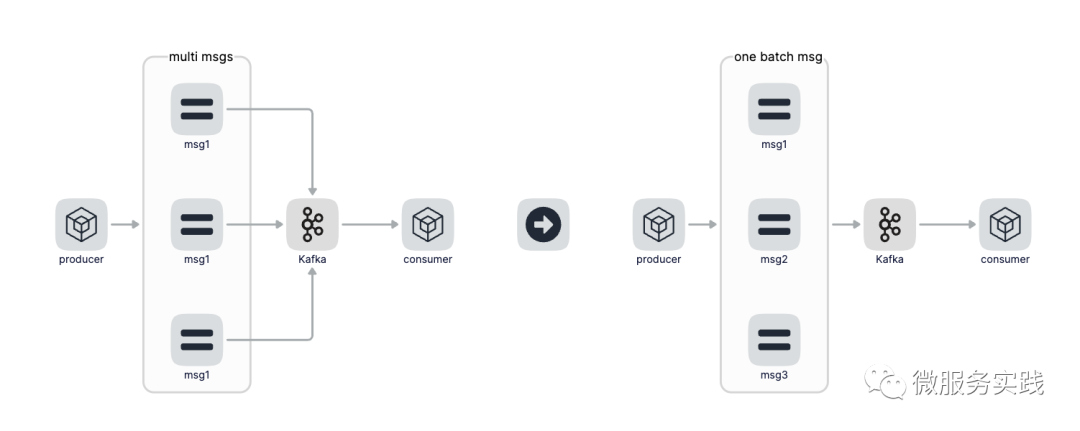

Go zero micro service practical series (IX. ultimate optimization of seckill performance)

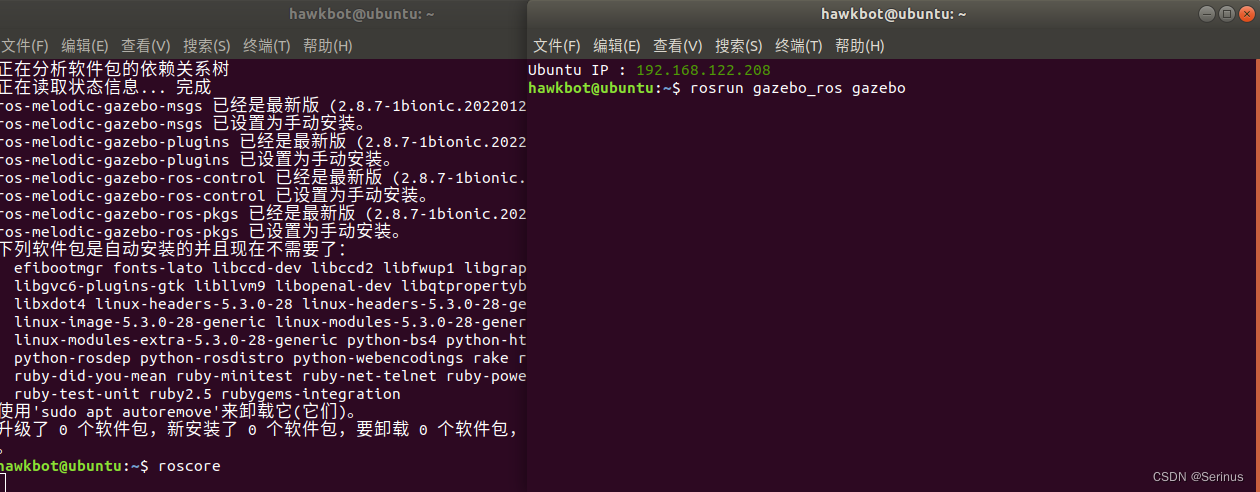

Gazebo的安装&与ROS的连接

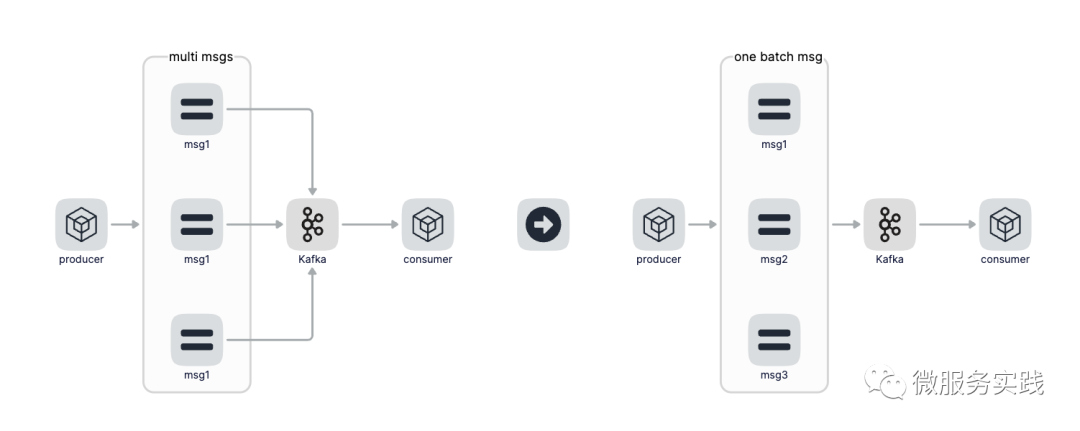

go-zero微服务实战系列(九、极致优化秒杀性能)

Lldp compatible CDP function configuration

Yunna - work order management system and process, work order management specification

![JS reverse -- ob confusion and accelerated music that poked the [hornet's nest]](/img/40/da56fe6468da64dd37d6b5b0082206.png)

JS reverse -- ob confusion and accelerated music that poked the [hornet's nest]

随机推荐

The cost of returning tables in MySQL

Machine learning: the difference between random gradient descent (SGD) and gradient descent (GD) and code implementation.

一起看看matlab工具箱内部是如何实现BP神经网络的

云呐-工单管理制度及流程,工单管理规范

Taro 小程序开启wxml代码压缩

json学习初体验–第三者jar包实现bean、List、map创json格式

C language instance_ four

taro3.*中使用 dva 入门级别的哦

Force buckle 1037 Effective boomerang

从零开始匹配vim(0)——vimscript 简介

Send template message via wechat official account

Metauniverse urban legend 02: metaphor of the number one player

Make Jar, Not War

域分析工具BloodHound的使用说明

Dark horse notes - exception handling

NEON优化:性能优化常见问题QA

分享一个通用的so动态库的编译方法

Let's see through the network i/o model from beginning to end

Table table setting fillet

What are the differences between Oracle Linux and CentOS?