
Implementation of crawling web pages and saving them to MySQL using the Scrapy framework

2022-07-07 15:17:00 【1024 questions】

Hello everyone! In this post, Abin shares how to use the Scrapy crawler framework together with a local MySQL database. The site crawled today is Hupu Sports, specifically its NBA player statistics page.

(1) Open the Hupu Sports website, analyze how the page's data is structured, and use XPath to locate the elements to extract.

(2) Once the page has been analyzed, create a Scrapy project by running the following command in a terminal:

`scrapy startproject hpty` (note: `hpty` is the name of the crawler project). This generates a `hpty/` directory containing `scrapy.cfg` and the `hpty` package with `items.py`, `pipelines.py`, `settings.py` and a `spiders/` folder.

(3) Change into `hpty/hpty/spiders` and create a spider named `sww` by running the following command in the terminal: `scrapy genspider sww nba.hupu.com` (the second argument is the domain to crawl).

(4) With the first two steps done, edit the files that make up the crawler project.

1. Edit `settings.py`:

The robots.txt rule (often called the "gentleman's agreement") is enabled by default: change `ROBOTSTXT_OBEY = True` to `ROBOTSTXT_OBEY = False`.

Then uncomment the `ITEM_PIPELINES` setting, which is commented out in the generated file, so that the pipeline is enabled.
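For reference, after these two changes the relevant lines of `hpty/settings.py` would look roughly like the excerpt below. The priority value 300 is the one Scrapy's project template uses; pipelines with lower numbers run first, and values are conventionally kept in the 0-1000 range.

```python
# hpty/settings.py (excerpt)

# Do not fetch or obey robots.txt rules (the generated default is True)
ROBOTSTXT_OBEY = False

# Enable the MySQL pipeline; the number sets its order among pipelines
ITEM_PIPELINES = {
    "hpty.pipelines.HptyPipeline": 300,
}
```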

2. Edit `items.py`, which defines the fields of the scraped data. The code is as follows:

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class HptyItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    players = scrapy.Field()                 # player name
    team = scrapy.Field()                    # team
    ranking = scrapy.Field()                 # ranking
    average_score = scrapy.Field()           # points per game
    shooting = scrapy.Field()                # field-goal percentage
    three_point_percentage = scrapy.Field()  # three-point percentage
    free_throw_percentage = scrapy.Field()   # free-throw percentage
```

3. Edit the most important file, the spider itself (`spiders/sww.py`). The code is as follows:

```python
import scrapy

from ..items import HptyItem


class SwwSpider(scrapy.Spider):
    name = 'sww'
    # allowed_domains takes bare domain names, not full URLs
    allowed_domains = ['nba.hupu.com']
    start_urls = ['https://nba.hupu.com/stats/players']

    def parse(self, response):
        # Select the table rows that have no class attribute
        whh = response.xpath('//tbody/tr[not(@class)]')
        for i in whh:
            ranking = i.xpath('./td[1]/text()').extract()  # ranking
            players = i.xpath('./td[2]/a/text()').extract()  # player name
            team = i.xpath('./td[3]/a/text()').extract()  # team
            average_score = i.xpath('./td[4]/text()').extract()  # points per game
            shooting = i.xpath('./td[6]/text()').extract()  # field-goal percentage
            three_point_percentage = i.xpath('./td[8]/text()').extract()  # three-point percentage
            free_throw_percentage = i.xpath('./td[10]/text()').extract()  # free-throw percentage
            data = HptyItem(
                players=players,
                team=team,
                ranking=ranking,
                average_score=average_score,
                shooting=shooting,
                three_point_percentage=three_point_percentage,
                free_throw_percentage=free_throw_percentage,
            )
            yield data
```

4. Edit `pipelines.py`. The code is as follows:

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


# useful for handling different item types with a single interface
from itemadapter import ItemAdapter
import pymysql


class HptyPipeline:
    def process_item(self, item, spider):
        # Connect to the local MySQL database "sww"
        db = pymysql.connect(host="localhost", user="root", password="root",
                             database="sww", charset="utf8mb4")
        cursor = db.cursor()
        # Each field is the list returned by extract(); take its first element
        players = item["players"][0]
        team = item["team"][0]
        ranking = item["ranking"][0]
        average_score = item["average_score"][0]
        shooting = item["shooting"][0]
        three_point_percentage = item["three_point_percentage"][0]
        free_throw_percentage = item["free_throw_percentage"][0]
        # three_point_percentage = item["three_point_percentage"][0].strip('%')
        # free_throw_percentage = item["free_throw_percentage"][0].strip('%')
        cursor.execute(
            'INSERT INTO nba (players, team, ranking, average_score, shooting, '
            'three_point_percentage, free_throw_percentage) '
            'VALUES (%s, %s, %s, %s, %s, %s, %s)',
            (players, team, ranking, average_score, shooting,
             three_point_percentage, free_throw_percentage),
        )
        # Commit the transaction
        db.commit()
        # Close the cursor and the connection
        cursor.close()
        db.close()
        return item
```

(5) Once the Scrapy side is finished, create a database named `sww` in MySQL and, inside it, a table named `nba`:

1. Create the database:

```sql
CREATE DATABASE sww DEFAULT CHARACTER SET utf8mb4;
```

2. Create the data table:

```sql
USE sww;

CREATE TABLE nba (
    players                char(20),
    team                   char(10),
    ranking                char(10),
    average_score          char(25),
    shooting               char(20),
    three_point_percentage char(20),
    free_throw_percentage  char(20)
);
```

3. Once the database and table have been created, you can inspect the table structure with `DESC nba;`.
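To check the table layout and the parameterized INSERT without a running MySQL server, one option is a throwaway test that uses Python's built-in `sqlite3` module as a stand-in. This is a sketch under clear assumptions: SQLite is not MySQL, its placeholder is `?` rather than PyMySQL's `%s`, and the helpers `strip_percent` and `insert_item` plus the sample values are hypothetical. `strip_percent` follows the idea of the commented-out `.strip('%')` lines in the pipeline and is applied here to all three percentage columns.

```python
import sqlite3

# Same columns as the MySQL "nba" table (SQLite accepts char(n) as TEXT)
CREATE_SQL = """
CREATE TABLE nba (
    players char(20),
    team char(10),
    ranking char(10),
    average_score char(25),
    shooting char(20),
    three_point_percentage char(20),
    free_throw_percentage char(20)
)
"""

INSERT_SQL = (
    "INSERT INTO nba (players, team, ranking, average_score, shooting, "
    "three_point_percentage, free_throw_percentage) "
    "VALUES (?, ?, ?, ?, ?, ?, ?)"  # sqlite3 uses ?, PyMySQL uses %s
)


def strip_percent(value):
    """Hypothetical helper: turn '45.3%' into '45.3'; other text passes through."""
    return value.strip().rstrip('%')


def insert_item(conn, item):
    """Insert one item shaped like the spider's output (each field is a list)."""
    row = (
        item["players"][0],
        item["team"][0],
        item["ranking"][0],
        item["average_score"][0],
        strip_percent(item["shooting"][0]),
        strip_percent(item["three_point_percentage"][0]),
        strip_percent(item["free_throw_percentage"][0]),
    )
    conn.execute(INSERT_SQL, row)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(CREATE_SQL)
    sample = {  # hypothetical values, not real Hupu data
        "players": ["Player A"], "team": ["Team X"], "ranking": ["1"],
        "average_score": ["30.1"], "shooting": ["50.2%"],
        "three_point_percentage": ["40.0%"], "free_throw_percentage": ["88.5%"],
    }
    insert_item(conn, sample)
    print(conn.execute("SELECT * FROM nba").fetchall())
```

Whether to store percentages as stripped text or convert them to a numeric column type is a design choice; keeping `char` columns matches the table above.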

(6) After creating the table in MySQL, return to the terminal and run `scrapy crawl sww` from the project directory. The pipeline then inserts the scraped rows into the `nba` table.


边栏推荐

- asp. Netnba information management system VS development SQLSERVER database web structure c programming computer web page source code project detailed design

- Bye, Dachang! I'm going to the factory today

- CTFshow,信息搜集:web5

- CTFshow,信息搜集:web2

- With 8 modules and 40 thinking models, you can break the shackles of thinking and meet the thinking needs of different stages and scenes of your work. Collect it quickly and learn it slowly

- Infinite innovation in cloud "vision" | the 2022 Alibaba cloud live summit was officially launched

- Notes HCIA

- Guangzhou Development Zone enables geographical indication products to help rural revitalization

- 15、文本编辑工具VIM使用

- 【深度学习】语义分割实验:Unet网络/MSRC2数据集

猜你喜欢

随机推荐

微信小程序 01

buffer overflow protection

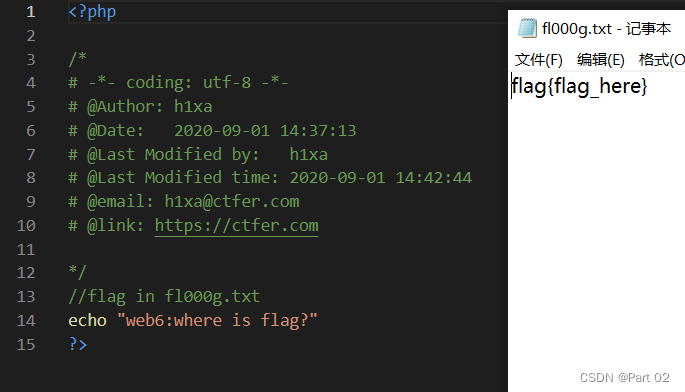

CTFshow,信息搜集:web6

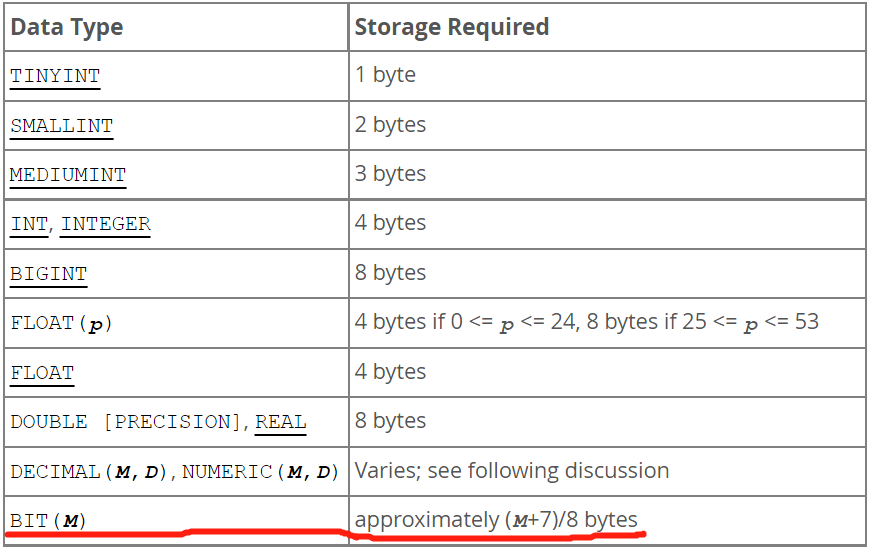

Bits and Information & integer notes

CTFshow,信息搜集:web10

Why do we use UTF-8 encoding?

数学建模——什么是数学建模

What are the safest securities trading apps

如何在opensea批量发布NFT(Rinkeby测试网)

[机缘参悟-40]:方向、规则、选择、努力、公平、认知、能力、行动,读3GPP 6G白皮书的五层感悟

CTFshow,信息搜集:web4

Cocoscreator resource encryption and decryption

@ComponentScan

【服务器数据恢复】戴尔某型号服务器raid故障的数据恢复案例

Niuke real problem programming - day15

Apache multiple component vulnerability disclosure (cve-2022-32533/cve-2022-33980/cve-2021-37839)

Compile advanced notes

【搞船日记】【Shapr3D的STL格式转Gcode】

Delete a whole page in word

Notes HCIA

![[机缘参悟-40]:方向、规则、选择、努力、公平、认知、能力、行动,读3GPP 6G白皮书的五层感悟](/img/38/cc5bb5eaa3dcee5ae2d51a904cf26a.png)