Dive into Deep Learning (PyTorch version) exercise solutions: 3.3 Concise Implementation of Linear Regression

2022-07-03 10:20:00 【Innocent^_^】

- If the total loss over the minibatch is replaced by the average loss over the minibatch, how do you need to change the learning rate?

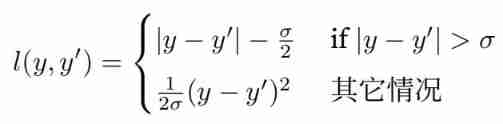
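The relationship between the loss reduction and the learning rate asked about in this exercise can be checked numerically. The following is a minimal sketch, not the author's code: the data, the fixed initialization, and the helper `one_sgd_step` are hypothetical and exist only for this illustration. It runs one SGD step with the mean loss and one with the summed loss at a learning rate divided by the batch size, then compares the resulting weights.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size = 10
X = torch.randn(batch_size, 2)  # hypothetical minibatch of features
y = torch.randn(batch_size, 1)  # hypothetical minibatch of labels


def one_sgd_step(reduction, lr):
    """Run one SGD step from a fixed initialization and return the new weight."""
    net = nn.Linear(2, 1)
    with torch.no_grad():
        net.weight.fill_(0.5)  # same starting point for every call
        net.bias.zero_()
    loss = nn.MSELoss(reduction=reduction)
    trainer = torch.optim.SGD(net.parameters(), lr=lr)
    trainer.zero_grad()
    loss(net(X), y).backward()
    trainer.step()
    return net.weight.detach().clone()


lr = 0.03
w_mean = one_sgd_step("mean", lr)              # average loss, original learning rate
w_sum = one_sgd_step("sum", lr / batch_size)   # summed loss, learning rate / batch_size
print(torch.allclose(w_mean, w_sum, atol=1e-6))
```

Because the summed loss is `batch_size` times the mean loss, its gradient is larger by the same factor, and dividing the learning rate by `batch_size` cancels it.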

Explanation: The default is actually the mean (mean squared error), so the question is stated the wrong way round; do the opposite. When replacing the mean with the sum, dividing the learning rate by batch_size is enough.

- Review the deep learning framework documentation. What loss functions and initialization methods does it provide? Replace the original loss with the Huber loss, namely

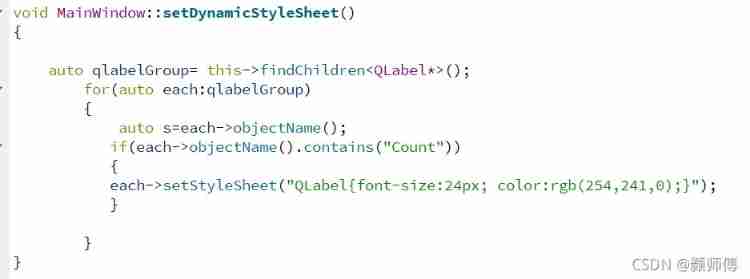

The losses provided are as shown in the figure below. For details on a specific one, use help(torch.nn.xxxLoss) or search online (for example, on Baidu).
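The loss functions and initialization methods can also be listed directly from the installed PyTorch. This is a minimal sketch that filters names by convention only (loss classes end in `Loss`, in-place initializers in `torch.nn.init` end in `_`); that naming pattern is an assumption of the sketch, and the result depends on the installed version.

```python
from torch import nn

# Loss classes exposed by torch.nn (selected by the "Loss" suffix).
loss_names = sorted(name for name in dir(nn) if name.endswith("Loss"))

# In-place initializers in torch.nn.init (public names ending in "_").
init_names = sorted(
    name for name in dir(nn.init)
    if name.endswith("_") and not name.startswith("_")
)

print(loss_names)
print(init_names)
```

Any name from the first list can then be passed to `help`, for example `help(nn.SmoothL1Loss)`.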

    # The Huber loss corresponds to PyTorch's SmoothL1Loss
    from torch import nn

    # net, data_iter and trainer are assumed to be defined as in Section 3.3
    loss = nn.SmoothL1Loss(beta=0.5)
    num_epochs = 3
    for epoch in range(num_epochs):
        for X, y in data_iter:
            l = loss(net(X), y)
            # Compute the gradient
            trainer.zero_grad()
            l.backward()
            trainer.step()  # Update all parameters
        print("epoch: {}, loss:{}".format(epoch + 1, l))

    # With beta=1 the loss was larger at first; after changing it to 0.5 and
    # running again it decreased, which may be related to the number of iterations
    '''
    epoch: 1, loss:0.00011211777746211737
    epoch: 2, loss:0.00013505184324458241
    epoch: 3, loss:4.4465217797551304e-05
    '''
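The correspondence between the Huber loss and `nn.SmoothL1Loss` mentioned in the comment above can be checked against a hand-written version. This is a sketch under an assumption: the formula in the image is taken to be the piecewise form `|d| - sigma/2` for `|d| > sigma` and `d^2 / (2*sigma)` otherwise, with `d = y_hat - y`. The function `huber_manual` and the test tensors are hypothetical.

```python
import torch
from torch import nn


def huber_manual(y_hat, y, sigma):
    """Assumed piecewise Huber loss, averaged over all elements."""
    d = (y_hat - y).abs()
    per_element = torch.where(d > sigma, d - sigma / 2, d ** 2 / (2 * sigma))
    return per_element.mean()


torch.manual_seed(0)
y_hat = torch.randn(8, 1) * 2  # hypothetical predictions
y = torch.randn(8, 1)          # hypothetical labels
sigma = 0.5

builtin = nn.SmoothL1Loss(beta=sigma)(y_hat, y)
manual = huber_manual(y_hat, y, sigma)
print(torch.allclose(builtin, manual))
```

Note that `nn.HuberLoss(delta=sigma)` uses a different scaling: it equals `sigma` times the value of `nn.SmoothL1Loss(beta=sigma)`, so the two agree only when `sigma` is 1.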

- How do you access the gradients of the linear regression model?

net[0].weight.grad, net[0].bias.grad

''' (tensor([[-0.0040, 0.0027]]), tensor([0.0015])) '''
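For a self-contained view of the gradient access shown above, here is a minimal sketch with a freshly built model. The random data and the variable names are hypothetical; only the `net[0].weight.grad` and `net[0].bias.grad` accesses come from the answer itself.

```python
import torch
from torch import nn

torch.manual_seed(0)
net = nn.Sequential(nn.Linear(2, 1))  # same layout as the model in Section 3.3
X = torch.randn(4, 2)  # hypothetical features
y = torch.randn(4, 1)  # hypothetical labels

grad_before = net[0].weight.grad  # None until backward() has been called

loss = nn.MSELoss()
loss(net(X), y).backward()  # populates .grad on every parameter

weight_grad = net[0].weight.grad  # same shape as net[0].weight
bias_grad = net[0].bias.grad      # same shape as net[0].bias
print(weight_grad.shape, bias_grad.shape)
```

The `.grad` attributes hold the gradients from the most recent `backward()` call, accumulated since the last `zero_grad()`.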

For readability, apart from the highlighted loss output, the code output for the other questions is placed in code blocks.

边栏推荐

- 20220609其他:多数元素

- [combinatorics] combinatorial existence theorem (three combinatorial existence theorems | finite poset decomposition theorem | Ramsey theorem | existence theorem of different representative systems |

- CV learning notes - deep learning

- [LZY learning notes dive into deep learning] 3.4 3.6 3.7 softmax principle and Implementation

- openCV+dlib实现给蒙娜丽莎换脸

- 20220607其他:两整数之和

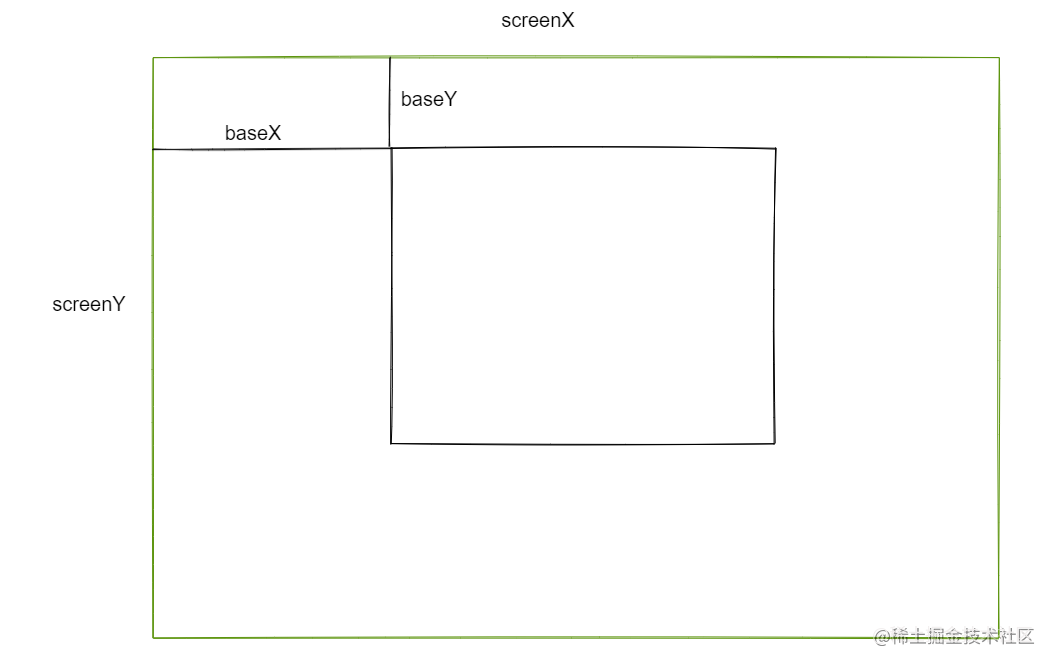

- Implementation of "quick start electronic" window dragging

- MySQL root user needs sudo login

- LeetCode - 933 最近的请求次数

- Rewrite Boston house price forecast task (using paddlepaddlepaddle)

猜你喜欢

Rewrite Boston house price forecast task (using paddlepaddlepaddle)

Secure in mysql8.0 under Windows_ file_ Priv is null solution

『快速入门electron』之实现窗口拖拽

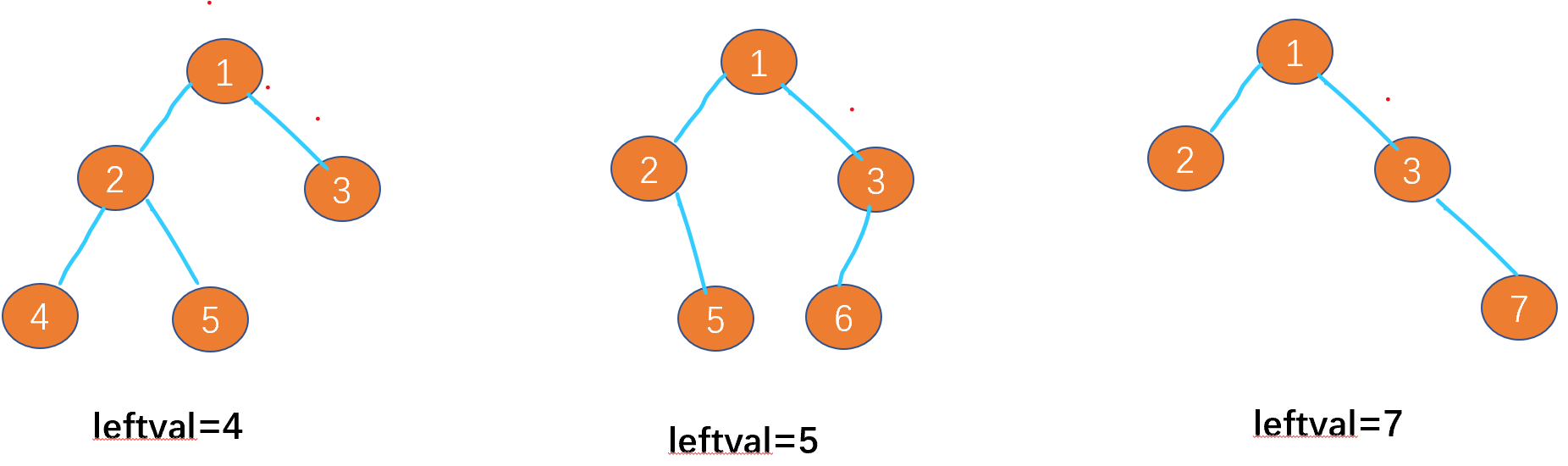

Leetcode-513: find the lower left corner value of the tree

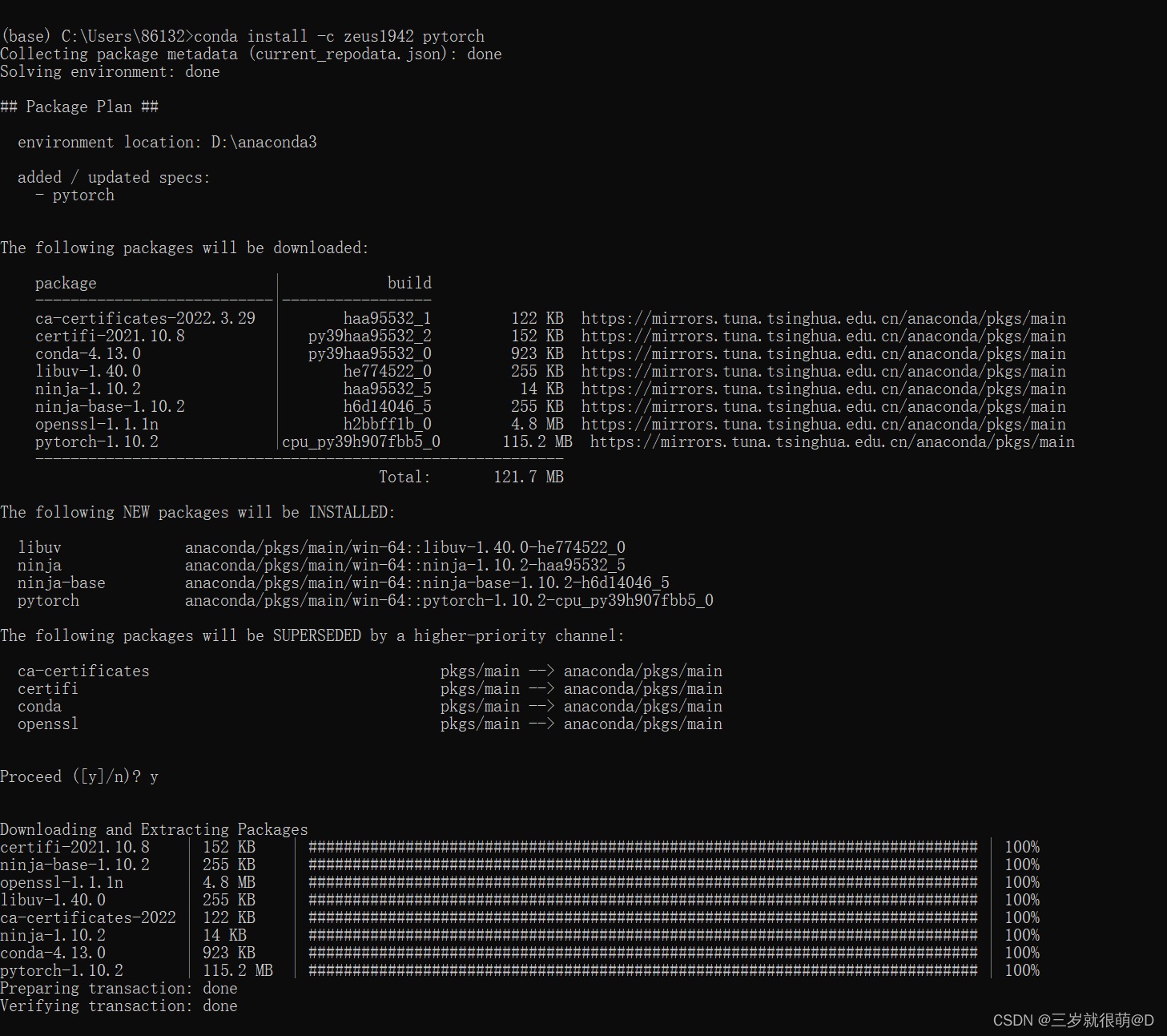

Anaconda installation package reported an error packagesnotfounderror: the following packages are not available from current channels:

Configure opencv in QT Creator

![[LZY learning notes -dive into deep learning] math preparation 2.5-2.7](/img/57/579357f1a07dbe179f355c4a80ae27.jpg)

[LZY learning notes -dive into deep learning] math preparation 2.5-2.7

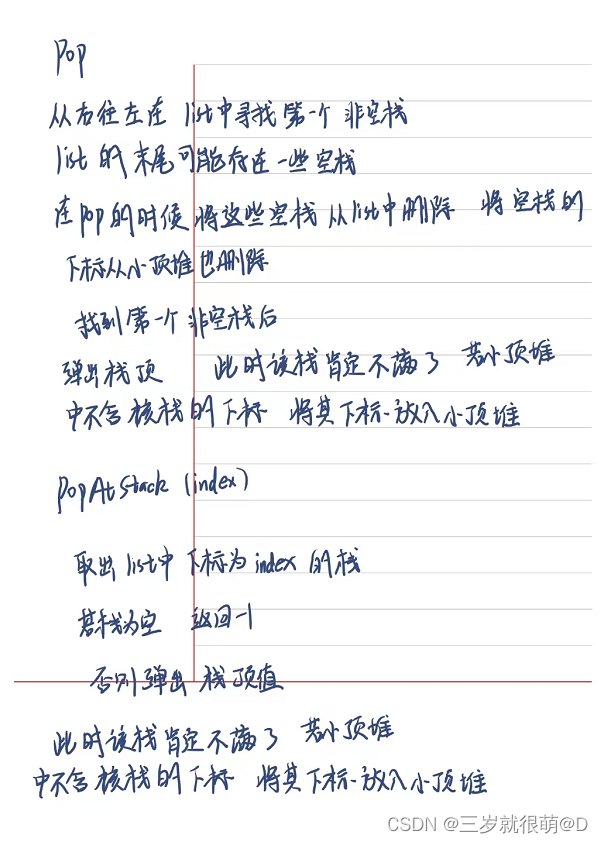

QT is a method of batch modifying the style of a certain type of control after naming the control

Leetcode - 1172 plate stack (Design - list + small top pile + stack))

LeetCode - 508. 出现次数最多的子树元素和 (二叉树的遍历)

随机推荐

20220604 Mathematics: square root of X

Retinaface: single stage dense face localization in the wild

What did I read in order to understand the to do list

Leetcode-112: path sum

20220610 other: Task Scheduler

20220602 Mathematics: Excel table column serial number

Wireshark use

OpenCV Error: Assertion failed (size.width>0 && size.height>0) in imshow

CV learning notes - clustering

After clicking the Save button, you can only click it once

Leetcode 300 longest ascending subsequence

Label Semantic Aware Pre-training for Few-shot Text Classification

LeetCode - 5 最长回文子串

Opencv feature extraction - hog

Leetcode-404: sum of left leaves

LeetCode - 1670 设计前中后队列(设计 - 两个双端队列)

Standard library header file

Discrete-event system

Leetcode - 705 design hash set (Design)

3.2 Off-Policy Monte Carlo Methods & case study: Blackjack of off-Policy Evaluation