
Naive Bayes in machine learning

2022-07-03 06:10:00 【Master core technology】

1. Basic concepts and ideas

Naive Bayes is a generative classification method based on Bayes' theorem and the assumption that the features are conditionally independent given the class.

Building the model: given a training dataset, naive Bayes first learns the joint probability distribution P(X,Y) of input and output under the conditional independence assumption. The joint probability factors into the prior probability times the conditional probability, so the training data is used to obtain maximum likelihood estimates of P(Y) and P(X|Y). Without the independence assumption, the number of parameters of P(X|Y) grows exponentially with the number of features and the number of values each feature can take, and in practice it often exceeds the number of training samples. Some value combinations then never appear in training and receive an estimated probability of 0, yet "not observed" and "probability of occurrence is 0" are different things. The conditional independence assumption reduces the parameter count to linear growth, which makes it possible to learn a classification model.

Predicting the class: based on this model, for a given input x, Bayes' theorem is used to find the output y with the maximum posterior probability P(Y|X). This is equivalent to minimizing the expected risk under the 0-1 loss function.

2. Principles and methods

The training dataset, with $N$ samples, is

$$T=\{(x_1,y_1),(x_2,y_2),\ldots,(x_N,y_N)\}$$

Naive Bayes learns the joint probability distribution $P(X,Y)$ from the training dataset.

By the multiplication rule of probability theory: $P(X,Y)=P(Y)P(X|Y)$

Learning the joint probability therefore reduces to learning the prior probability distribution and the conditional probability distribution.

Prior probability distribution:

$$P(Y=c_k),\quad k=1,2,\ldots,K$$

By the law of large numbers, when there is enough data the observed frequency can be used directly as an estimate of the probability. So with sufficient data the prior probability can be computed from class frequencies.
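The frequency-based estimate of the prior can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `estimate_prior` and the toy labels are assumptions made for the example, not something from the original article.

```python
from collections import Counter


def estimate_prior(labels):
    """Estimate P(Y = c_k) as the relative frequency of each class label."""
    counts = Counter(labels)
    total = len(labels)
    return {c: n / total for c, n in counts.items()}


# Hypothetical toy labels, used only for illustration.
labels = ["spam", "ham", "ham", "spam", "ham"]
prior = estimate_prior(labels)
print(prior)
```

With only five labels the estimate is rough; the law of large numbers argument above is what justifies trusting it as the sample grows.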

Conditional probability distribution:

$$P(X=x|Y=c_k)=P(X^{(1)}=x^{(1)},\ldots,X^{(n)}=x^{(n)}|Y=c_k),\quad k=1,2,\ldots,K$$

Note: the superscript $(j)$ denotes the $j$-th of the $n$ attributes (features) of a sample.

However, the conditional probability distribution $P(X=x|Y=c_k)$ has exponentially many parameters, so estimating it directly is infeasible in practice. Suppose $x^{(j)}$ can take $S_j$ values, $j=1,2,\ldots,n$, and $Y$ can take $K$ values. Then the number of parameters is $K\prod_{j=1}^{n}S_j$. In applications this number is far larger than the number of training samples, which means some value combinations never appear in the training set. Estimating their probability by frequency is clearly not workable, because "not observed" and "probability of occurrence is zero" are different concepts.

Naive Bayes assumes that the features are conditionally independent given the class. This is a strong assumption, hence the name "naive" Bayes. Formally:

$$P(X=x|Y=c_k)=P(X^{(1)}=x^{(1)},\ldots,X^{(n)}=x^{(n)}|Y=c_k)=\prod_{j=1}^{n}P(X^{(j)}=x^{(j)}|Y=c_k)$$

Under this assumption the number of parameters is reduced to $K\sum_{j=1}^{n}S_j$.

An example: suppose attribute 1 takes the values 0 and 1, attribute 2 takes the values A, B and C, and the label Y takes only one value. Without the conditional independence assumption there are 6 parameters to estimate: P(0,A), P(0,B), P(0,C), P(1,A), P(1,B), P(1,C). With the assumption there are only 5: P(0), P(1), P(A), P(B), P(C). The more values each attribute has and the more attributes there are, the larger this gap becomes.
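The two parameter counts, $K\prod_j S_j$ and $K\sum_j S_j$, are easy to compare numerically. The sketch below reproduces the 6 versus 5 count from the example and then tries a larger, made-up configuration (2 classes, 10 features with 5 values each); the function names are chosen here for illustration.

```python
from math import prod


def joint_param_count(num_classes, values_per_feature):
    """K * prod(S_j): parameters without the independence assumption."""
    return num_classes * prod(values_per_feature)


def naive_param_count(num_classes, values_per_feature):
    """K * sum(S_j): parameters with the conditional independence assumption."""
    return num_classes * sum(values_per_feature)


# The example from the text: one class, S_1 = 2 (0, 1), S_2 = 3 (A, B, C).
small_joint = joint_param_count(1, [2, 3])
small_naive = naive_param_count(1, [2, 3])
print(small_joint, small_naive)  # 6 5

# A hypothetical larger case: 2 classes, 10 features with 5 values each.
large_joint = joint_param_count(2, [5] * 10)
large_naive = naive_param_count(2, [5] * 10)
print(large_joint, large_naive)  # 19531250 100
```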

Naive Bayes first models the joint probability and then derives the maximum posterior probability from it, so it is a typical generative model.

The posterior probability follows from Bayes' theorem:

$$P(Y=c_k|X=x)=\frac{P(X=x|Y=c_k)P(Y=c_k)}{\sum_{k=1}^{K}P(X=x|Y=c_k)P(Y=c_k)}$$

Substituting the conditional independence assumption gives:

$$P(Y=c_k|X=x)=\frac{P(Y=c_k)\prod_{j=1}^{n}P(X^{(j)}=x^{(j)}|Y=c_k)}{\sum_{k=1}^{K}P(Y=c_k)\prod_{j=1}^{n}P(X^{(j)}=x^{(j)}|Y=c_k)}$$

The naive Bayes classifier can then be expressed as:

$$y=f(x)=\arg\max_{c_k}\frac{P(Y=c_k)\prod_{j=1}^{n}P(X^{(j)}=x^{(j)}|Y=c_k)}{\sum_{k=1}^{K}P(Y=c_k)\prod_{j=1}^{n}P(X^{(j)}=x^{(j)}|Y=c_k)}$$

The denominator is the same for every $c_k$, and we only care about which class scores highest, not about the exact value. The denominator can therefore be dropped:

$$y=f(x)=\arg\max_{c_k}P(Y=c_k)\prod_{j=1}^{n}P(X^{(j)}=x^{(j)}|Y=c_k)$$
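To make the decision rule concrete, here is a minimal sketch of a categorical naive Bayes classifier that estimates the prior and the per-feature conditionals by relative frequency (maximum likelihood) and then takes the arg max of the unnormalized score. The function names `fit` and `predict` and the toy dataset are assumptions made for this illustration; they do not come from the original article.

```python
from collections import Counter, defaultdict


def fit(samples, labels):
    """Estimate P(Y=c_k) and P(X^(j)=v | Y=c_k) by relative frequency (MLE)."""
    class_counts = Counter(labels)
    prior = {c: cnt / len(labels) for c, cnt in class_counts.items()}
    # cond_counts[(j, c)][v]: number of class-c samples whose j-th feature is v
    cond_counts = defaultdict(Counter)
    for x, y in zip(samples, labels):
        for j, v in enumerate(x):
            cond_counts[(j, y)][v] += 1
    cond = {
        (j, c): {v: cnt / class_counts[c] for v, cnt in counter.items()}
        for (j, c), counter in cond_counts.items()
    }
    return prior, cond


def predict(x, prior, cond):
    """Return the class maximizing P(Y=c_k) * prod_j P(X^(j)=x^(j) | Y=c_k)."""
    scores = {}
    for c, p in prior.items():
        score = p
        for j, v in enumerate(x):
            # A value never seen with class c gets probability 0 under MLE.
            score *= cond[(j, c)].get(v, 0.0)
        scores[c] = score
    return max(scores, key=scores.get), scores


# Hypothetical toy data: feature 1 in {0, 1}, feature 2 in {"A", "B", "C"}.
samples = [(0, "A"), (0, "B"), (1, "A"), (1, "B"),
           (1, "C"), (0, "C"), (1, "C"), (0, "A")]
labels = ["no", "no", "no", "yes", "yes", "yes", "yes", "no"]

prior, cond = fit(samples, labels)
label, scores = predict((1, "B"), prior, cond)
print(label, scores)  # yes {'no': 0.03125, 'yes': 0.09375}
```

The scores are not probabilities because the denominator was dropped; dividing each by their sum would recover the posterior, but the predicted class would not change.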

3. The meaning of maximizing the posterior probability

To be updated

4. Algorithm steps

To be updated

5. Extensions and improvements of naive Bayes

Bayesian estimation: with maximum likelihood estimation, a probability to be estimated may turn out to be 0. This distorts the computed posterior probability and biases the classification. Bayesian estimation adds a positive number λ to the frequency count of each value of the random variable; with λ = 1 this is called Laplace smoothing.

Semi-naive Bayes: the attribute conditional independence assumption can be relaxed to some degree, which leads to a family of methods called "semi-naive Bayes classifiers". They take into account the dependencies among some of the attributes, so that the full joint probability does not have to be computed, while relatively strong attribute dependencies are not ignored entirely.

Bayesian networks: a directed acyclic graph describes the dependencies between attributes, and conditional probability tables describe the joint probability distribution of the attributes.
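Going back to the Bayesian estimation item above, the smoothed estimate of one conditional distribution can be sketched as follows. It assumes the usual form in which λ is added to each count and $S_j\lambda$ to the denominator so the estimates still sum to 1; the function name `smoothed_conditional`, the parameter name `lam`, and the toy values are hypothetical.

```python
from collections import Counter


def smoothed_conditional(feature_values, possible_values, lam=1.0):
    """Estimate P(X^(j)=v | Y=c_k) from the j-th feature values of the
    class-c_k samples, adding lam to every count (lam=0 gives plain MLE)."""
    counts = Counter(feature_values)
    total = len(feature_values) + lam * len(possible_values)
    return {v: (counts[v] + lam) / total for v in possible_values}


# Hypothetical data: value "C" never occurs among the samples of this class.
values_in_class = ["A", "A", "B", "A"]
possible = ["A", "B", "C"]

mle = smoothed_conditional(values_in_class, possible, lam=0.0)
laplace = smoothed_conditional(values_in_class, possible, lam=1.0)
print(mle)      # "C" gets probability 0.0
print(laplace)  # "C" gets probability 1/7
```

With λ = 0 the unseen value "C" would zero out the whole product in the classifier score; with λ = 1 it keeps a small positive probability.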

Explanations for why naive Bayes often performs well:

(1) For classification tasks, it is enough that the conditional probabilities of the classes are ranked correctly; exact probability values are not needed to get the correct classification result.

(2) If the dependencies between attributes affect all classes equally, or if the effects of the dependencies cancel each other out, then the attribute conditional independence assumption reduces the computational cost without hurting performance.

边栏推荐

- Solve the problem of automatic disconnection of SecureCRT timeout connection

- Kubesphere - build MySQL master-slave replication structure

- ODL framework project construction trial -demo

- Detailed explanation of findloadedclass

- Loss function in pytorch multi classification

- [system design] proximity service

- Zhiniu stock -- 03

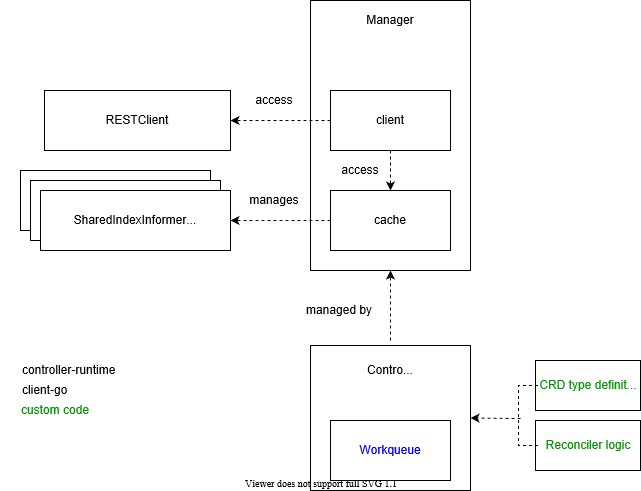

- 深入解析kubernetes controller-runtime

- Kubernetes notes (VI) kubernetes storage

- Fluentd facile à utiliser avec le marché des plug - ins rainbond pour une collecte de journaux plus rapide

猜你喜欢

Project summary --01 (addition, deletion, modification and query of interfaces; use of multithreading)

Project summary --2 (basic use of jsup)

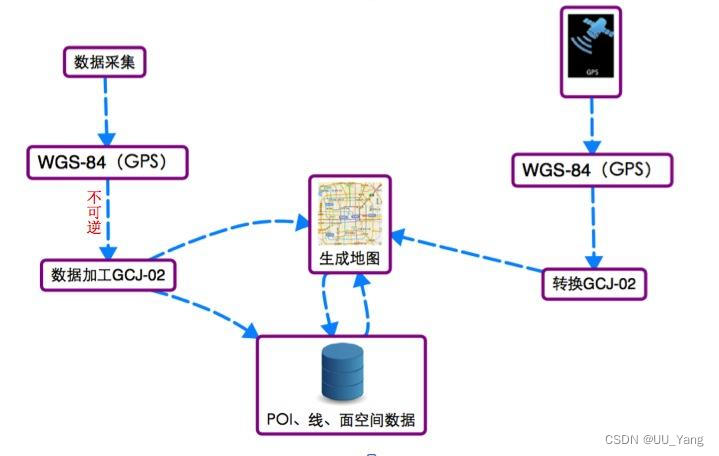

GPS坐标转百度地图坐标的方法

Svn branch management

Kubernetes notes (IX) kubernetes application encapsulation and expansion

The most responsible command line beautification tutorial

Understand the first prediction stage of yolov1

Kubernetes notes (IV) kubernetes network

In depth analysis of kubernetes controller runtime

【系统设计】邻近服务

随机推荐

Apifix installation

智牛股项目--04

Kubernetes notes (10) kubernetes Monitoring & debugging

Method of converting GPS coordinates to Baidu map coordinates

伯努利分布,二项分布和泊松分布以及最大似然之间的关系(未完成)

[teacher Zhao Yuqiang] redis's slow query log

Installation du plug - in CAD et chargement automatique DLL, Arx

[teacher Zhao Yuqiang] Flink's dataset operator

[teacher Zhao Yuqiang] calculate aggregation using MapReduce in mongodb

Oauth2.0 - user defined mode authorization - SMS verification code login

Leetcode solution - 01 Two Sum

项目总结--04

[teacher Zhao Yuqiang] RDB persistence of redis

Cesium entity (entities) entity deletion method

Bio, NiO, AIO details

[teacher Zhao Yuqiang] MySQL flashback

Pytorch builds the simplest version of neural network

pytorch 搭建神经网络最简版

卷积神经网络CNN中的卷积操作详解

Skywalking8.7 source code analysis (II): Custom agent, service loading, witness component version identification, transform workflow