当前位置:网站首页>Unified Focal loss: Generalising Dice and cross entropy-based losses to handle class imbalanced medi

Unified Focal loss: Generalising Dice and cross entropy-based losses to handle class imbalanced medi

2022-07-06 22:47:00 【Never_ Jiao】

Unified Focal loss: Generalising Dice and cross entropy-based losses to

handle class imbalanced medical image segmentation

Journal Publishing :Computerized Medical Imaging and Graphics’

Time of publication :2022 year

Abstract

Automatic segmentation method is an important progress in medical image analysis . Machine learning technology , Especially deep neural networks , It is the latest technology in most medical image segmentation tasks . The problem of category imbalance poses a major challenge to medical data sets , Relative to background , Lesions usually occupy a relatively small volume . The loss function used in the training of deep learning algorithm is different in robustness to class imbalance , It has a direct impact on the convergence of the model . The most commonly used segmentation loss function is based on cross entropy loss 、Dice Loss or a combination of the two . We put forward a unified Focal Loss , This is a new hierarchical framework , It sums up Dice And dealing with class imbalance based on the loss of cross entropy . We are in five publicly available 、 Evaluate our proposed loss function on class unbalanced medical imaging data sets :CVC-ClinicDB、 Digital retinal image for blood vessel extraction (DRIVE)、2017 Breast ultrasound (BUS2017)、2020 Brain tumor segmentation in (BraTS20) and 2019 Kidney tumor segmentation (KiTs 19). We compare our loss function performance with six Dice Or the loss function based on cross entropy , stride across 2D Two classification 、3D II. Classification and 3D Multi class segmentation tasks , It is proved that the loss function proposed by us is robust to class imbalances , And it is always better than other loss functions . The source code is located in :https://github.com/mlyg/unified-focal-loss.

Keywords: Loss function, Class imbalance, Machine learning, Convolutional neural networks, Medical image segmentation

Introduction

Image segmentation involves dividing the image into meaningful regions according to the characteristics of regional pixels , Identify the objects of interest (Pal and Pal,1993). This is a basic task in computer vision , It has been widely used in face recognition 、 Automatic driving and medical image processing . especially , Automatic segmentation method is an important progress in medical image analysis , Can include ultrasound (US)、 Computed tomography (CT) And MRI (MRI) Divide the structure in a series of imaging modes .

Classical image segmentation methods include direct region detection ( For example, segmentation and merging and region growth algorithms (Rundo et al., 2016))、 Graph based approach (Chen and Pan, 2018)、 Active contour and horizontal setting model (Khadidos wait forsomeone ,2017 year ). Later methods focus on the application and adjustment of traditional machine learning technology (Rundo wait forsomeone ,2020b), For example, support vector machines (SVM)(Wang and Summers,2012 year )、 Unsupervised clustering (Ren wait forsomeone ,2019 year ) And atlas are based on segmentation (Wachinger and Gol Land,2014 year ). However , In recent years , Great progress has been made in deep learning (Ker wait forsomeone ,2018 year ;Rueckert and Schnabel,2019 year ;Castiglioni wait forsomeone ,2021 year ).

The most famous architecture in image segmentation U-Net (Ronneberger et al., 2015) Convolution neural network (CNN) The architecture is changed to encoder - Decoder network , Be similar to SegNet (Badrinarayanan et al., 2015). , 2017), It supports end-to-end feature extraction and pixel classification . Since its establishment , Many have been proposed based on U-Net Variants of Architecture (Y. Liu et al., 2020; L. Liu et al., 2020; Rundo et al., 2019a)—— Include 3D U-Net( Cicek et al., 2016)、Attention U-Net (Schlemper et al., 2019) and V-Net (Mil letari et al., 2016)—— And integrated into the condition generation countermeasure network (Kessler et al., 2020) ;Armanious wait forsomeone ,2020 year ).

In order to train deep neural network , Back propagation updates the model parameters according to the optimization objectives defined by the loss function . Cross entropy loss is usually the most widely used loss function in classification problems (L. Liu et al., 2020; Y. Liu et al., 2020), And applied to U-Net(Ronneberger et al., 2015) , 3D U-Net (Cicek et al., 2016) and SegNet (Badrinarayanan et al., 2017). by comparison ,Attention U-Net (Schlemper et al., 2019) and V-Net (Milletari et al., 2016) utilize Dice loss, It is based on the most commonly used metrics for evaluating segmentation performance , Therefore, it means that the direct loss of sending a form is minimized . In a broad sense , The loss function used in image segmentation can be divided into distribution based loss ( For example, cross entropy loss )、 Area based losses ( for example Dice Loss )、 Boundary based losses ( For example, boundary loss )(Kervadec etc. al., 2019), And recent compound losses . Composite loss combines multiple independent loss functions , for example Combo Loss , It is Dice And cross entropy loss (Taghanaki wait forsomeone ,2019).

One of the main problems in medical image segmentation is to deal with class imbalance , This refers to the uneven distribution of foreground and background elements . for example , Automatic organ segmentation usually involves organ sizes that are an order of magnitude smaller than the scan itself , This leads to a biased distribution in favor of the background elements (Roth et al., 2015). This problem is more common in oncology , The size of the tumor itself is usually significantly smaller than the relevant organ of origin .

Tahanaki et al (2019) Distinguish between input and output imbalances , The former is as mentioned above , The latter refers to the classification deviation produced in the reasoning process . These include false positives and false negatives , They describe the background pixels incorrectly classified as foreground objects and foreground objects incorrectly classified as backgrounds . Both are particularly important in the context of medical image segmentation ; In the case of image guided intervention , False positives may lead to larger radiation fields or excessive surgical margins , conversely , False negatives may lead to insufficient radiation delivery or incomplete surgical resection . therefore , It is very important to design a loss function that can be optimized to deal with input and output imbalance .

Even though it's important , But careful selection of loss function is not a common practice , And usually choosing a suboptimal loss function will have a performance impact . In order to inform the choice of loss function , It is very important to compare large-scale loss functions . stay CVC-EndoSceneStill( Gastrointestinal polyp segmentation ) Seven loss functions are compared on the data set , Among them, the loss based on region is the best , conversely , Cross entropy loss is the worst (S´anchez-Peralta wait forsomeone ,2020). Again , Use NBFS Skull-stripped Data sets (Jadon, 2020)( brain CT Division ) Yes 15 Compare loss functions , The dataset also introduces log-cosh Dice Loss , The conclusion is that Focal Tversky Loss and Tversky Loss , Are based on regional losses , Usually the best (Jadon,2020). The most comprehensive loss function comparison to date further supports this , In four data sets ( The liver 、 Liver tumor 、 Pancreas and multiple organ segmentation ) Compared with 20 Loss functions , The best performance based on composite loss is observed , It uses DiceTopK and DiceFocal The loss observed is the most consistent performance (Ma et al., 2021). It is obvious from these studies , Compared with distribution based losses , Performance based on regional or composite losses is always better . However , It is not clear which of the area based or composite losses to choose , There is no agreement between the above . A major confounding factor is the degree of class imbalance in the data set ,NBFS Skull-stripping The category imbalance in the data set is low , And in the ( Ma et al ,2021)CVC-EndoSceneStill The category imbalance in the data set is moderate .

In medical image data sets , Those datasets involving tumor segmentation are related to a high degree of class imbalance . Manually depicting tumors is time-consuming and operator dependent . The automatic method of tumor delineation aims to solve these problems , And public data sets , For example, for breast tumors Breast Ultrasound 2017 (BUS2017) Data sets (Yap et al., 2017)、 Kidney tumor segmentation for kidney tumor 19 (KiTS19) Data sets ( Heller wait forsomeone ,2019 year ) And brain tumor segmentation for brain tumors 2020(BraTS20)(Menze wait forsomeone ,2014 year ) It has accelerated the process of achieving this goal . in fact , Recently, in the BraTS20 Progress has been made in translating datasets into clinical and scientific practice (Kofler wait forsomeone ,2020 year ).

BUS2017 The current state-of-the-art model of the dataset includes attention gates , Refine the skip connection by using the context information from the gating signal , Highlight areas of interest , This may provide benefits in case of category imbalance (Abraham and Khan,2019 ). In addition to the attention gate ,RDAU-NET It also combines residual element and extended convolution to enhance information transmission and increase receptive field respectively , And use Dice I'm going to train (Zhuang et al., 2019). Input more attention U-Net Combine attention gate with in-depth supervision , And introduced Focal Tversky Loss , This is a region based loss function , Designed to deal with class imbalance (Abraham and Khan,2019).

about BraTS20 Data sets , A popular method is to use multi-scale architecture , Different receptive field sizes allow independent processing of local and global context information (Kamnitsas wait forsomeone ,2017;Havaei wait forsomeone ,2017). Kamnitsas wait forsomeone . (2017) A two-stage training process is used , This includes initial upsampling of classes that are not sufficiently representative , Then there is the second stage , The output layer is retrained on more representative samples . Again ,Havaei wait forsomeone . (2017) Apply equal probability foreground or background pixels at the center of the patch using sampling rules , The cross entropy loss is used to optimize .

about KiTS19 Data sets , At present, the most advanced is “no-newNet”(nnU-Net)(Isensee wait forsomeone ,2021,2018), This is an automatically configured segmentation method based on deep learning , Involving integration 2D、3D And cascade 3D U-Nets. The framework uses Dice And cross entropy loss . lately , A method based on integration has obtained nnU-Net Quite a result , And involves using Dice Loss of training 2D U-Nets The segmentation of renal organs and renal tumors was initially treated independently , Then suppress false positive prediction of kidney tumor segmentation using trained kidney organ segmentation network for psychological analysis (Fatemeh wait forsomeone ,2020 year ). When the data set size is small , Use CNN An active learning based method for correcting labels ( Also used Dice I'm going to train ) The results show that nnU-Net Higher segmentation accuracy (Kim wait forsomeone ,2020).

Obviously , For all three data sets , Class imbalance is mainly handled by changing the training or input data sampling process , Rarely solved by adjusting the loss function . However , Popular methods —— For example, upsampling classes that are not sufficiently representative —— Essentially, it is related to the increase of false positive prediction , And more complicated 、 Usually, the multi-stage training process requires more computing resources .

The most advanced solutions usually use the unmodified version Dice Loss 、 Cross entropy loss or a combination of the two , Even if the available loss function is used to deal with class imbalance , for example Focal Tversky Loss , It can also continuously improve the performance that has not been observed (Ma et al., 2021). It is difficult to decide which loss function to use , Because there are not only a large number of loss functions to choose from , And it's not clear how each loss function correlates . Understanding the relationship between loss functions is the key to providing heuristic methods to inform the choice of loss functions in the case of class imbalance .

In this paper , We make the following contributions :

1、 We summarize and expand the knowledge provided by previous studies , These studies compare loss functions to solve the context of class imbalance , By using five class imbalance datasets with different degrees of class imbalance , Include 2D Binary system 、3D Binary sum 3D Multi class segmentation , Across multiple imaging modes .

2、 We defined Dice And the hierarchical classification of loss function based on cross entropy , And use it to derive a unified Focal Loss , It generalizes based on Dice And the loss function based on cross entropy to deal with class unbalanced data sets .

3、 Our proposed loss function consistently improves the segmentation quality of the other six related loss functions , Related to better recall accuracy balance , And it is robust to class imbalance .

The paper is organized as follows . The first 2 Section summarizes the loss functions used , Including proposed unification Focal Loss . The first 3 Section describes the selected medical imaging data set and defines the segmentation evaluation indicators used . The first 4 The experimental results are introduced and discussed in section . Last , The first 5 Section provides concluding comments and future directions .

Background

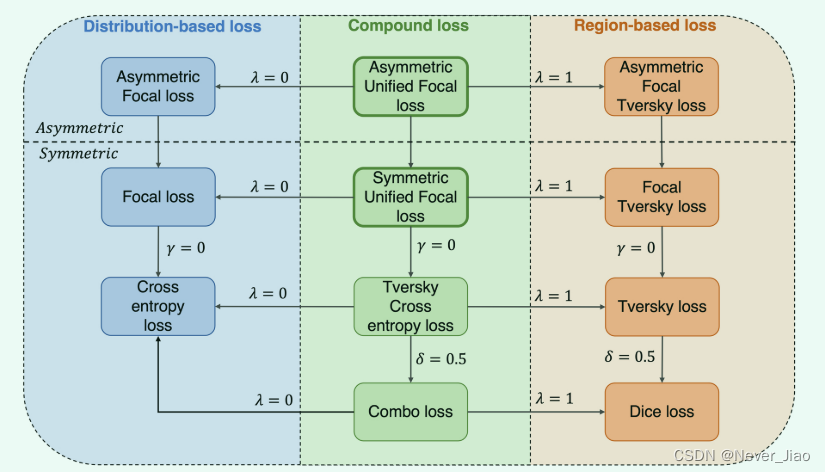

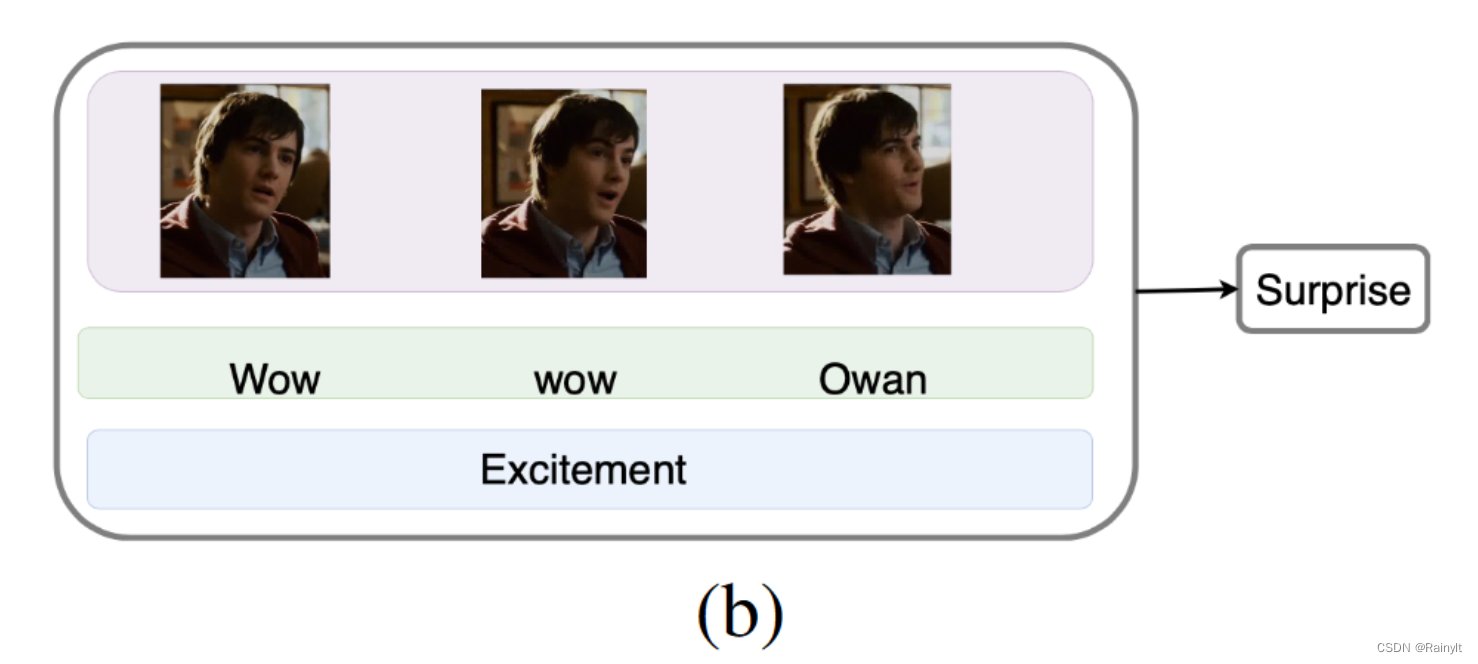

The loss function defines the optimization problem , It directly affects the model convergence in the training process . This article focuses on semantic segmentation , This is a sub field of image segmentation , Among them, pixel level classification is directly performed , In contrast to instance segmentation that requires additional object detection stages . We describe seven loss functions , They are based on distribution 、 Compound losses based on area or a combination of the two . chart 1 Provides a graphical overview of loss functions in these categories , And how to unify Focal Derived from the loss . First , Introduced the function based on distribution , Then there is the loss function based on region , Finally, it is concluded that there is a compound loss function .

Fig.1 Our proposed framework unifies all kinds of distribution based 、 Based on region and composite loss function . The arrows and the associated hyperparametric values represent the hyperparametric values required for the previous loss function settings , In order to recover the loss function .

Cross entropy loss

Cross entropy loss is one of the most widely used loss functions in deep learning . Originated from information theory , Cross entropy measures the difference between two probability distributions of a given random variable or event set . As a loss function , It is ostensibly equivalent to negative log likelihood loss , For binary classification , Binary cross entropy loss (LBCE) The definition is as follows :

here ,y, ^y ∈ {0, 1} N, among ^y It refers to the predicted value ,y refer to ground truth label . This can be extended to multi class problems , Classification cross entropy loss (LCCE) The calculation for the :

among yi,c Use ground truth Labeled one-hot coding scheme ,pi,c Is the predictive value matrix of each class , The index c and i Iterate over all classes and pixels separately . Cross entropy loss is based on minimizing pixel level errors , In the case of class imbalance , It will lead to the over representation of larger objects in the loss , As a result, the segmentation quality of smaller objects is poor .

Focal loss

The standard cross entropy loss is used to solve the class imbalance problem , The way is to reduce the contribution of simple examples , So that we can learn more difficult examples (Lin et al., 2017). In order to derive Focal Loss function , Let's first simplify the equation 1 The loss in is :

Next , We will predict ground truth class pt The probability of is defined as :

therefore , Binary cross entropy loss (LBCE) It can be rewritten as :

Focal loss (LF) A modulation factor is added to the binary cross entropy loss :

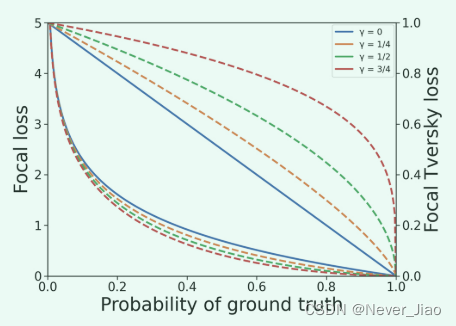

Focal loss from α and γ A parameterized , They respectively control the class weight of easy to classify pixels and reduce the degree of weighting ( chart 2). When γ = 0 when ,Focal loss Reduced to binary cross entropy loss .

Fig.2 Use unity Focal Loss changes γ Influence . The top and bottom curve groups are connected with Focal Tversky loss and Focal loss of . Dashed lines indicate with modifications Focal Tversky Lost and modified Focal Different loss components γ value .

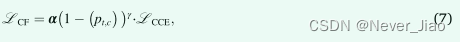

For multi class segmentation , We define classification Focal loss(LCF):

among α Now it's the vector of class weights ,pt,c Is the real probability matrix of each class ,LCCE It's the equation 2 Classification cross entropy loss defined in .

Dice loss

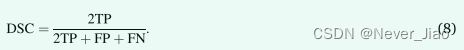

Sørensen-Dice When applied to Boolean data, an index is called Dice Similarity coefficient (DSC), It is the most commonly used index to evaluate the accuracy of segmentation . We can base on the true positive (TP)、 False positive (FP) And false negatives (FN) For each voxel classification DSC:

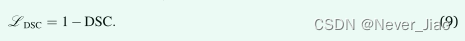

therefore ,Dice Loss (LDSC) Can be defined as :

Dice Other variants of loss include generalized Dice Loss (Crum wait forsomeone ,2006;Sudre wait forsomeone ,2017), The class weight is corrected by the reciprocal of its volume , And in a broad sense Wasser stein Dice Loss (Fidon wait forsomeone ., 2017), It will Wasserstein Measurement and Dice Combined with loss , Suitable for processing hierarchical data , for example BraTS20 Data sets (Menze et al., 2014).

Even in the simplest formula ,Dice Losses also apply to deal with class imbalances to some extent . However ,Dice The loss gradient is inherently unstable , The most obvious is the highly unbalanced data , The gradient calculation involves the small denominator (Wong wait forsomeone ,2018;Bertels wait forsomeone ,2019).

Tversky loss

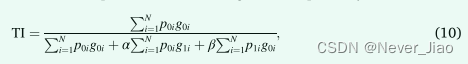

Tversky Index (Salehi et al., 2017) And DSC Is closely related to the , But you can use the weight α and β Assign false positives and false negatives respectively to optimize output imbalance :

among p0i It's pixels i Probability of belonging to foreground class ,p1i Is the probability that the pixel belongs to the background class . g0i by 1 Show the future ,0 Background representation , contrary g1i The value is 1 Background representation ,0 Show the future .

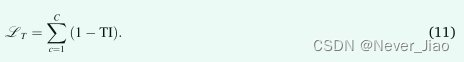

Use Tversky Indexes , We will C Class Tversky Loss (LT) Defined as :

When Dice When the loss function is applied to the class imbalance problem , The generated segmentation usually shows high accuracy but low recall score (Salehi wait forsomeone ,2017). Assigning greater weight to false negatives can improve recall , And better balance accuracy and recall . therefore ,β Usually set higher than α, The most common is β = 0.7 and α = 0.3.

Asymmetric similarity loss comes from Tversky Loss , But use Fβ Score and will α Replace with 1/1+β2 and β Replace β2 /1+β2, Added α and β Must sum to 1 Constraints (Hashemi et al., 2018 ). In practice , choice Tversky Lost α and β value , Make them sum to 1, Make the two loss functions functionally equivalent .

Focal Tversky loss

Subject to cross entropy loss Focal loss Inspiration of adaptation ,Focal Tversky loss (Abraham and Khan, 2019) By applying a focal Parameters to adapt Tversky Loss .

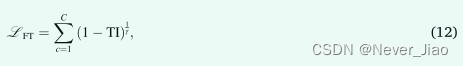

Use the equation 10 Medium TI Definition , Pictured 10 Shown ,Focal Tversky Loss definition (LFT) by :

among γ < 1 Increased attention to more difficult examples . When γ = 1 when ,Focal Tversky The loss is simplified to Tversky Loss . However , And Focal Losses are the opposite , The best value reported is γ = 4∕3, It enhances rather than suppresses the loss of simple examples . actually , At the end of training , Most examples are classified more confidently and Tversky The index is close to 1, Increasing the loss in this area will maintain a higher loss , This may prevent premature convergence to a suboptimal solution .

Combo loss

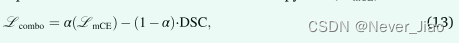

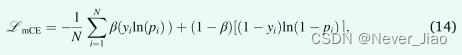

Combo Loss (Taghanaki et al., 2019) It belongs to compound loss , Among them, multiple loss functions are uniformly minimized . Portfolio loss (Lcombo) Defined as equation 8 in DSC Weighted sum of And cross entropy loss (LmCE) The form of modification :

among ,

α ∈ [0,1] control Dice And the relative contribution of the cross entropy term to the loss ,β Controls the relative weights assigned to false positives and negatives . β > 0.5 The penalty of false negative prediction is greater than that of false positive .

What is puzzling is ,“Dice And cross entropy loss ” The term has been used to refer to cross entropy loss and DSC The sum of (Taghanaki wait forsomeone ,2019;Isensee wait forsomeone ,2018), And the sum of cross entropy loss and entropy loss Dice Loss , for example DiceFocal Loss and Dice And weighted cross entropy loss (Zhu et al., 2019b; Chen et al., 2019). ad locum , We decided to use the previous definition , This is related to the combination loss and KiTS19 The loss function used in the latest technology of data sets is consistent (Isensee wait forsomeone ,2018 year ).

Hybrid Focal loss

Combo Loss (Taghanaki et al., 2019) and DiceFocal Loss (Zhu et al., 2019b) Is two composite loss functions , They inherit Dice And the benefits of loss function based on cross entropy . However , In case of category imbalance , Neither takes full advantage . Combo Loss and DiceFocal The losses in the cross entropy component losses are respectively adjustable β and α Parameters , It is partially robust to output imbalance . however , Both are lacking Dice Equivalent term of component loss , The weights of positive samples and negative samples are the same . Similarly , Two kinds of losses Dice Components are not suitable for handling input imbalance , Even though DiceFocal Loss better adapts to its in Focal Loss of focal Parameters .

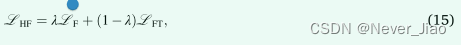

To overcome this problem , We previously proposed mixing focal Loss function , It combines adjustable parameters to deal with output imbalance , And for dealing with input imbalance focal Parameters , be used for Dice And component loss based on cross entropy (Yeung wait forsomeone ,2021 ) By way of Dice Replace the loss with Focal Tversky Loss , And the cross entropy loss is replaced by Focal Loss , blend focal Loss (LHF) Defined as :

among λ ∈ [0,1] And determine the relative weight of the two component loss functions .

Unified Focal loss

Hybrid Focal loss At the same time, I adapted Dice And dealing with class imbalance based on the loss of cross entropy . However , Use mixing in practice Focal There are two main problems with losses . First , There are six Super parameters that need to be adjusted : come from Focal Lost α and γ, come from Focal Tversky Lost α / β and γ, And for controlling the relative weight of the two component losses λ. Although this allows a greater degree of flexibility , But this is at the cost of significantly larger hyperparametric search space . The second problem is all Focal Loss functions are common , among Focal The enhancement or suppression effect introduced by the parameter applies to all classes , This may affect the convergence at the end of the training .

Unified Focal loss These two problems have been solved , By combining the functionally equivalent superparameters together and using asymmetry to focus on the modified Focal loss and Focal Tversky In the loss component focal Suppression and enhancement effect of parameters .

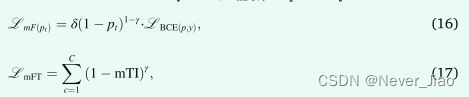

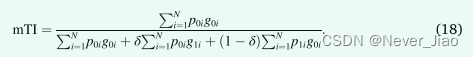

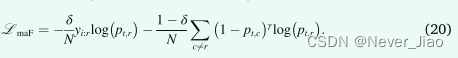

First , We will Focal loss Medium α and Tversky Index Medium α and β Replace with common δ Parameters to control output imbalance , And again Formulaize γ To achieve Focal loss Inhibition and Focal Tversky loss enhance , Name these as modified Focal loss ( LmF) And revised Focal Tversky Loss (LmFT), Respectively :

among ,

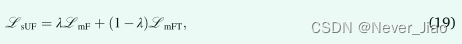

therefore ,Unified Focal loss (LsUF) The symmetric variant of is defined as :

among λ ∈ [0,1] And determine the relative weight of the two losses . By grouping the functionally equivalent superparameters , And Hybrid Focal loss The relevant six Super parameters are reduced to three , among δ Control the relative weight of positive and negative cases ,γ Control the suppression of background classes and the enhancement of rare classes , And finally λ Determine the weight of the two component losses .

although Focal loss Realize the suppression of background classes , But because will focal Parameters apply to all classes , Therefore, the loss of rare species contribution is also suppressed . Asymmetry is used by assigning different losses to each class focal Parameters are selectively enhanced or suppressed , This overcomes the harmful suppression of rare classes and the enhancement of background classes . Modified asymmetric focus loss (LmaF) Removed and rare classes r The focus parameter of the relevant loss component , At the same time, it retains the suppression of background elements (Li et al., 2019):

by comparison , For the modified Focal Tversky Loss , We delete the focus parameter of the loss component related to the background , Rare species are preserved r The enhancement of , And the modified asymmetry Focal Tversky Loss (LmaFT) Defined as :

therefore ,Unified Focal loss (LaUF) The asymmetric variant of is defined as :

And Focal The loss related loss suppression problem is related to Focal Tversky The complementary pairing of losses is alleviated , Asymmetry can suppress background loss and enhance foreground loss at the same time , Similar to increasing signal-to-noise ratio ( chart 2).

By combining the idea of the previous loss function ,Unified Focal loss Will be based on Dice And the loss function based on cross entropy is extended to a single frame . in fact , It can be proved that all the descriptions so far are based on Dice And the loss function of cross entropy is Unified Focal loss The special case of ( chart 1). for example , By setting γ = 0 and δ = 0.5, Respectively in λ Set to 0 and 1 When it's time to recover Dice Loss and cross entropy loss . By clarifying the relationship between loss functions ,Unified Focal loss It is easier to optimize than trying different loss functions alone , And it's also more powerful , Because it is robust to input and output imbalance . It is important to , Whereas Dice Loss and cross entropy loss are both effective operations , And apply focal The time complexity of parameter increase can be ignored ,Unified Focal loss It is not expected to significantly increase the training time of its component loss function .

In practice ,Unified Focal loss The optimization of can be further simplified to a single superparameter . Whereas focal Different effects of parameters on the loss of each component ,λ The function of is partially redundant , Therefore, we suggest setting λ = 0.5, It assigns equal weight to each component loss , And supported by empirical evidence (Taghanaki wait forsomeone , 2019). Besides , We suggest that δ = 0.6, To correct Dice Loss trend , To produce high accuracy 、 Segmentation with low recall and unbalanced categories . This is in Tversky The loss is less than δ = 0.7, To explain the influence of components based on cross entropy . This heuristic reduces the hyperparametric search space to a single γ The practice of parameters makes Unified Focal loss Powerful and easy to optimize . We are in the supplementary materials Unified Focal loss Provides further empirical evidence behind these heuristic methods .

Materials and methods

Dataset descriptions and evaluation metrics

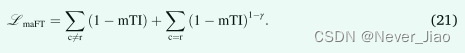

We selected five kinds of unbalanced medical imaging data sets for our experiment :CVC-ClinicDB、DRIVE、BUS2017、KiTS19 and BraTS20. To assess the degree of class imbalance , Calculate foreground pixels per image / Percentage of voxels , And take the average value on the whole data set ( surface 1).

CVC-ClinicDB dataset

Colonoscopy is the gold standard screening tool for colorectal cancer , But it is related to the significant missed diagnosis rate of polyps , This provides an opportunity to use computer-aided systems to support clinicians in reducing the number of missed polyps (Kim etc. ,2017). We use CVC-ClinicDB Data sets , The data set is composed of 612 The frame of , Including image resolution of 288 × 384 Polyps of pixels , From 13 Of different patients 23 Video sequence generation , Use standard colonoscopy and white light intervention (Bernal etc. ,2015).

DRIVE dataset

Degenerative retinal disease shows features that can be used to help diagnose in ophthalmoscopy . especially , Retinal vascular abnormalities , Changes such as tortuosity or neovascularization provide important clues for staging and treatment planning . We chose DRIVE Data sets (Staal wait forsomeone ,2004), It consists of 40 Composed of color fundus photos obtained from diabetes retinopathy screening in the Netherlands , The resolution used is 768 × 584 Each color plane of 8 Bit shooting .33 Photo shows no signs of diabetes retinopathy , and 7 This photo shows signs of mild diabetes retinopathy .

BUS2017 dataset

The most commonly used breast cancer assessment and screening tool is digital breast X Line radiography . However , Dense breast tissue is usually seen in young patients , In the breast X It is difficult to see in line photography . An important alternative is ultrasound imaging , This is an operator dependent program , Skilled radiologists are needed , But with breasts X Line photography is different , It has the advantage of no radiation exposure . BUS2017 Data sets B from 163 individual ul Ultrasonic images and related ground truth It's made up of , The average image size is 760 × 570 Pixels , From Spain, savadel Parc Taulí The company's UDIAT The diagnostic center collects . 110 One image is benign , Include 65 An unspecified cyst 、39 Fibroadenoma and 6 From other benign types of lesions . other 53 This image depicts a cancerous mass , Most of them are invasive ductal carcinoma .

BraTS20 dataset

BraTS20 Data set is currently the largest used in medical image segmentation 、 Publicly available and fully annotated data sets (Nazir wait forsomeone ,2021 year ), Include 494 Multimodal scans of patients with low-grade gliomas or high-grade glioblastomas (Menze wait forsomeone , 2014; Bacas et al ,2017、2018). BraTS20 The data set is as follows MRI Sequences provide images :T1 weighting (T1)、 Use gadolinium contrast agent (T1-CE) Enhanced T1 Weighted comparison (T1-CE)、T2 weighting (T2) Reverse recovery with fluid attenuation (FLAIR) Sequence . The image is annotated manually , Tumor related areas are marked : Necrotic and non enhanced tumor core 、 Peritumoral edema or gadolinium enhanced tumor . In providing the 494 In scanning , Yes 125 This scan is used to verify , The reference segmentation mask prohibits public access , So it's excluded . To define the binary segmentation task , We further excluded T1、T2 and FLAIR Sequence , To focus on using T1-CE Sequence (Rundo wait forsomeone ,2019b;Han wait forsomeone ,2019) Gadolinium enhances tumor segmentation , This is not only the category that seems to be the most difficult to segment (Henry et al., 2020), But it is also the clinical category most related to radiotherapy (Rundo et al., 2017, 2018). We further exclude the other 27 Second scan without enhancing the tumor area , leave 342 Scans , The image resolution is 240 × 240 × 155 Voxel .

KiTs19 dataset

Due to the widespread existence of low-density tissue and CT The highly heterogeneous appearance of the upper tumor , Segmentation of renal tumors is a challenging task (Linguraru wait forsomeone ,2009;Rundo wait forsomeone ,2020a). To evaluate our loss function , We chose KiTS19 Data sets (Heller et al., 2019), This is a highly unbalanced multi class classification problem . In short , The data set was collected by the University of Minnesota Medical Center in the United States from patients who received partial resection of the tumor and the surrounding kidney or complete resection of the kidney including the tumor 300 Arterial phase abdomen CT Scan composition . Image size is axial plane 512×512 Pixels , Coronal plane average 216 A slice . The kidney and tumor boundaries were manually divided by two students , Kidneys 、 Tumor or background class tags are assigned to each voxel , Thus, semantic segmentation tasks are generated (Heller wait forsomeone ,2019). Provides 210 Sub scans and their associated segmentation are used for training , other 90 The split mask of this scan prohibits public access for testing . therefore , We exclude those without segmentation mask 90 Scans , And further exclude the other 6 Scans ( Case study 15、23、37、68、125 and 133), Because I'm worried about the real quality of the ground (Heller wait forsomeone ,2021), be left over 204 This scan uses .

Evaluation metrics

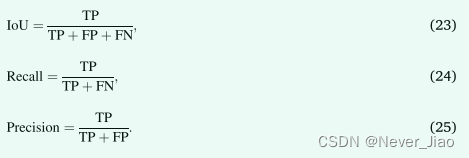

To evaluate segmentation accuracy , We use four commonly used indicators (Wang et al., 2020):DSC、Intersection over Union (IoU)、 Recall and precision . DSC In the equation 8 In the definition of ,IoU、 Recall and accuracy are also based on each pixel / Voxels and according to the equation 23、24 and 25 Definition , Respectively :

Implementation details

All experiments were carried out with TensorFlow Back end Keras Programming , And in NVIDIA P100 GPU Up operation . We use medical image segmentation with convolutional neural network (MIScnn) Open source Python library (Müller and Kramer,2019 year ).

come from CVC-ClinicDB、DRIVE and BUS2017 The images of the dataset are anonymous tiff、jpeg and png File format provides . about KiTS19 and BraTS20 Data sets , Images and ground truth Split masks are anonymous NIfTI File format provides . For all data sets , Except that it was initially divided into 20 A training image and 20 Test image DRIVE Data sets , We randomly divide each data set into 80% Development set and 20% Test set of , Further divide the development set into 80% Training set and 20% The verification set of . Use z-Score Standardize all images to [0,1]. We make use of “batchgenerators” Kuyi 0.15 The probability of using real-time data to enhance , Include : The zoom (0.85 − 1.25 × )、 rotate (-15∘ To +15∘)、 Mirror image ( Vertical and horizontal axes ) , Elastic deformation (α ∈ [0,900] and σ ∈ [9.0, 13.0]) And brightness (0.5 − 2 × )

about 2D Binary partition , We use CVC-ClinicDB、DRIVE and BUS2017 Data set and perform full image analysis , The image size is shown in the table 1 Described . about 3D Binary partition , We used BraTS20 Data sets . ad locum , The image is preprocessed , The skull was stripped , The image is interpolated to the same isotropic resolution 1 mm3, We use a size of 96 × 96×96 The randomness of voxels patch Conduct patch analysis , To train , And 48 × 48×48 Voxel's patch Overlap to infer . about 3D Multi class segmentation , We used KiTS19 Data sets . take Hounsfield Company (HU) Cut to [ − 79, …, 304] HU, The voxel spacing is resampled as 3.22 × 1.62 × 1.62 mm3 (Müller and Kramer, 2019). We use a size of 80 × 160 × 160 Patch analysis of random patches of voxels , And use 40 × 80 × 80 The patches of voxels overlap for reasoning .

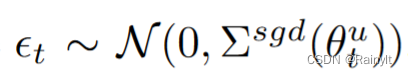

about 2D Split task , We use the original 2D U-Net framework (Ronneberger et al., 2015), about 3D Split task , We use 3D U-Net (Cicek et al., 2016). Model parameters use Xavier initialization (Glorot and Bengio,2010) To initialize , We added instance normalization and final softmax Activation layer (Zhou and Yang,2019). We use the random gradient descent optimizer for training , Batch size is 2, The initial learning rate is 0.1. For convergence criteria , If the verification loss is 10 individual epoch There was no improvement after , We use ReduceLROnPlateau Reduce the learning rate 0.1, If the verification loss is 20 individual epoch There was no improvement after , We use EarlyStopping Callback to terminate training . At every epoch Then evaluate the verification loss , Choose the model with the lowest verification loss as the final model .

We evaluate the following loss function : Cross entropy loss 、focal Loss 、dice Loss 、Tversky Loss 、Focal Tversky Loss 、Comba Loss and Unified Focal Symmetric and asymmetric variants of loss . We use the optimal hyperparameter of each loss function reported in the original study . say concretely , We are Focal Loss setting α = 0.25 and γ = 2(Lin wait forsomeone ,2017), by Tversky Loss setting α = 0.3,β = 0.7(Salehi wait forsomeone ,2017),α = 0.3,β = 0.7 Of Focal Tversky Loss and γ = 4∕3(Abraham and Khan,2019),Combo The loss is α = β = 0.5. about Unified Focal Loss, We set up λ = 0.5, δ = 0.6, Also on 2D Split tasks using γ ∈ [0.1, 0.9] Make a super parameter adjustment , about 3D Split task settings γ = 0.5.

To test statistical significance , We used Wilcoxon Rank sum test . Statistically significant differences are defined as p < 0.05.

Experimental results

In this section , We first describe the use CVC-ClinicDB、DRIVE and BUS2017 Data sets 2D The result of binary segmentation , And then use BraTS20 The dataset goes on 3D Binary partition , Finally using KiTS19 The dataset goes on 3D Multi class segmentation .

2D binary segmentation

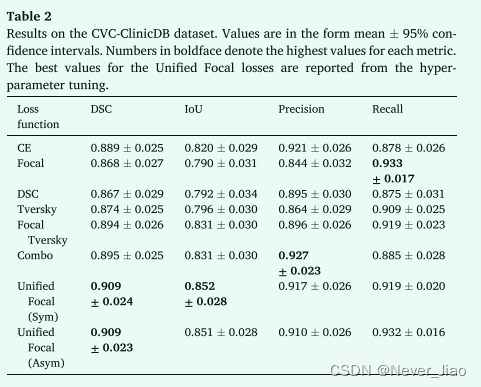

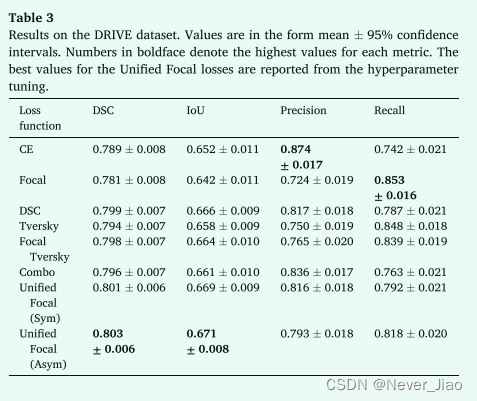

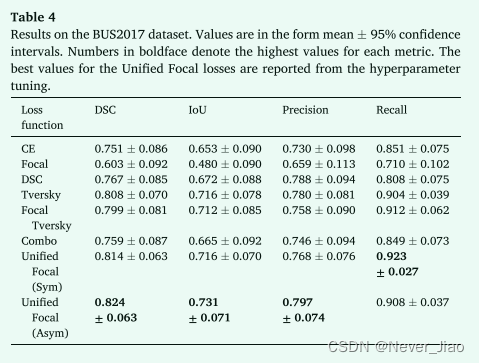

2D The results of binary segmentation experiment are shown in table 2、3 and 4 Shown .

In all three data sets ,Unified Focal loss The asymmetric variant of always observed the best performance , stay CVC-ClinicDB、DRIVE and BUS2017 Data sets are implemented separately 0.909 ± 0.023、0.803 ± 0.006 and 0.824 ± 0.063 Of DSC. The second is Unified Focal loss Symmetric variant of , It's in CVC-ClinicDB Obtained on dataset 0.852 ± 0.028 The best IoU score , also DSC Score and DSC by 0.909 ± 0.024、0.801 ± 0.006 And in CVCClinicDB、DRIVE and BUS2017 The data set is 0.814 ± 0.063. On these datasets ,Unified Focal loss No significant differences in performance were observed between the two variants of . Generally speaking , It is observed that the performance of the loss function based on cross entropy is the worst ,Focal loss stay CVCClinicDB (p = 0.04) and BUS2017 (p = 0.004) The performance on the dataset is significantly worse than the cross entropy loss , And significantly better than the three data sets Unified Focal loss Asymmetric variant of (CVC-ClinicDB:p = 2×10-6,DRIVE:p = 110-4 and BUS2017:p = 5×10-5). Based on Dice No significant difference was observed between the losses .

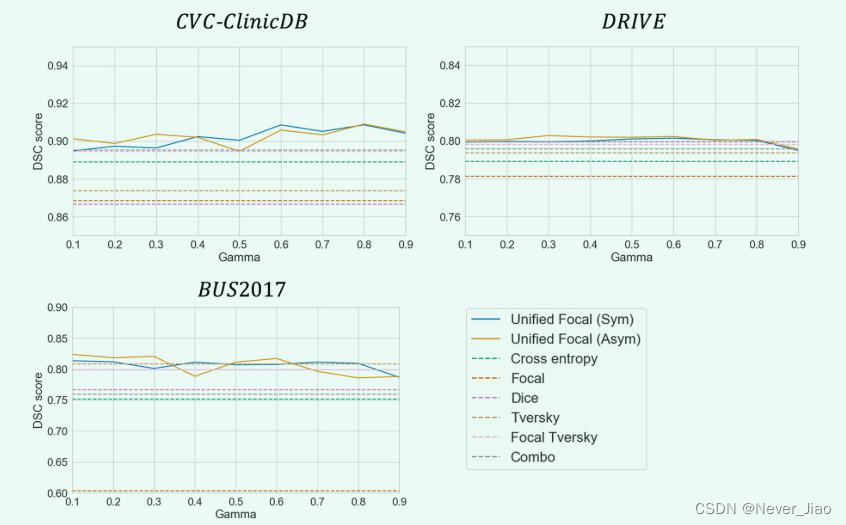

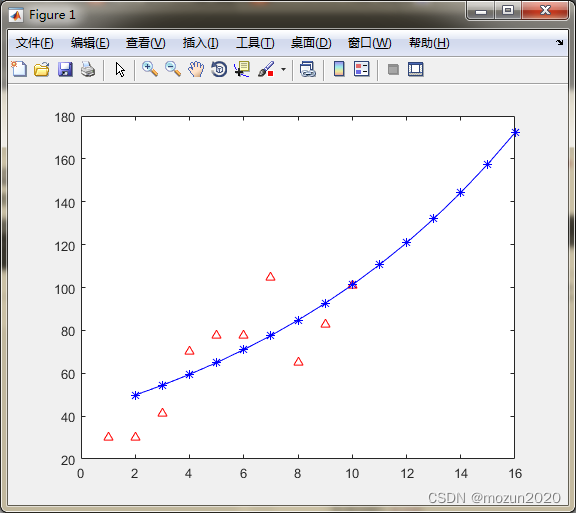

To evaluate γ Performance stability of hyperparameters , We are in the picture 3 Shows γ ∈ [0.1, 0.9] Of each value of DSC performance .

Fig.3 In each data set DSC Performance use Unified Focal loss assessment γ The stability of . A solid line represents Unified Focal loss Symmetric and asymmetric variants of , As a reference , The dotted line represents... Of other loss functions DSC performance .

For symmetric and asymmetric variants ,Unified Focal loss stay γ ∈ [0.1, 0.9] Consistently strong performance in the range . This is in CVC-ClinicDB The data set is the most obvious , The performance improvement compared with other loss functions is observed in the whole range of hyperparametric values . The worst performance occurs in γ = 0.9 At the same high value , and γ = 0.5 Equivalency provides a powerful performance advantage across datasets .

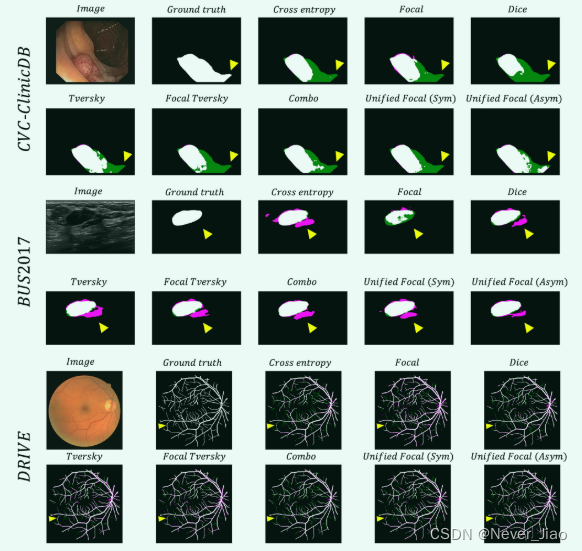

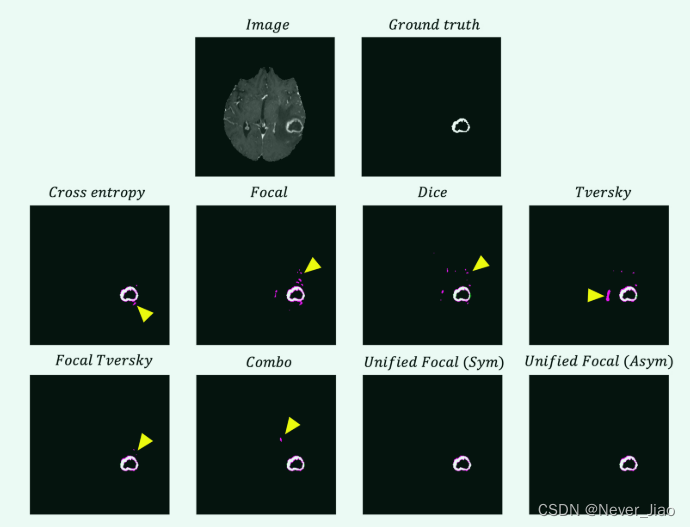

For qualitative comparison , The example segmentation is shown in the figure 4 Shown .

There are obvious visual differences between the segmentation generated by different loss functions . And based on Dice Compared with the loss function , The segmentation of loss function based on cross entropy is related to a larger proportion of false negative prediction . The highest quality segmentation is produced by the composite loss function , The best segmentation is generated by using the unified focus loss . This is in CVC-ClinicDB The loss of uniform focus in the example is particularly evident in the asymmetric variant .

Fig.4 Example segmentation , Each loss function for each of the three data sets . Provide images and ground truth For reference . False positives are highlighted in magenta , False negatives are highlighted in green . The yellow arrows highlight sample areas with different segmentation quality .

3D binary segmentation

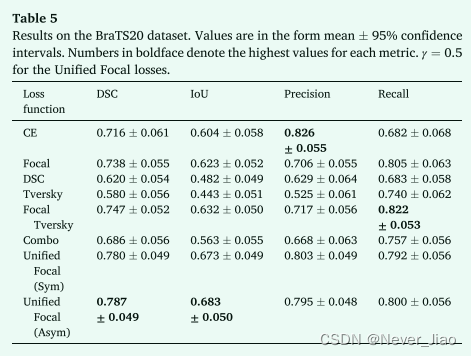

3D The results of binary segmentation experiment are shown in table 5 Shown .

Best performance is observed using uniform focus loss , In particular, asymmetric variants ,DSC by 0.787 ± 0.049,IoU by 0.683 ± 0.050, Accuracy of 0.795 ± 0.048, The recall rate is 0.800 ± 0.056. Next is the symmetrical variant of unified focus loss , There is no significant difference between the two loss functions . by comparison , Compared with all other loss functions , Asymmetric unified focus loss shows significantly improved performance ( Cross entropy loss :p = 0.02, Loss of focus :p = 0.03, Dice loss :p = 6×10− 10,Tversky Loss :p = 5×10− 11,Focal Tversky loss: p = 0.02 and Combo loss: p = 1×10− 4).

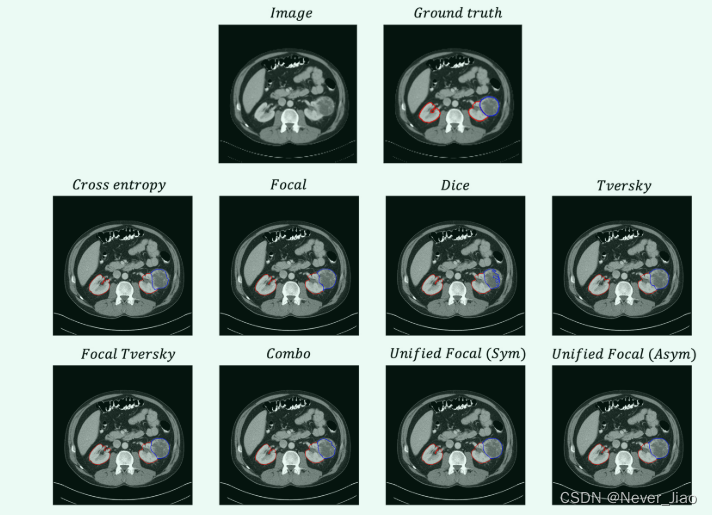

The axial slice obtained from the example segmentation is shown in the figure 5 Shown .

From the results , The data set has obvious recall deviation , This is reflected in the false positive prediction ratio of each segmentation prediction . The composite loss function shows the best recall accuracy balance , This can be clearly seen in the significantly reduced false positive predictions in the segmentation produced by using these loss functions .

3D multiclass segmentation

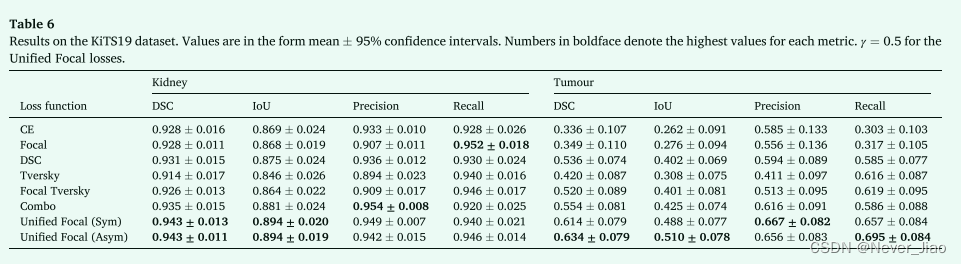

3D The results of multi class segmentation experiments are shown in the table 6 Shown .

Unified Focal loss To achieve the best performance , For kidney and kidney tumor segmentation , Asymmetrical variant DSC Respectively 0.943 ± 0.011 and 0.634 ± 0.079, And symmetrical variants DSC Respectively 0.943 ± 0.013 and 0.614 ± 0.079. For kidney segmentation , And cross entropy loss (p = 0.03)、Focal Loss (p = 0.004)、Tversky Loss (p = 0.001) and Focal Tversky Loss compared with ,Unified Focal loss The asymmetric variant of achieves significantly improved performance . p = 0.03). Using a Dice The worst performance of kidney segmentation was observed ,Tversky Losses are followed by Focal Tversky Loss . by comparison , Using the loss based on cross entropy, the worst performance of kidney tumor segmentation is observed , Compared to the cross entropy loss , Use Dice Lost DSC Obviously better performance (p = 0.01). For kidney tumor segmentation , And cross entropy loss (p = 6×10 - 5)、 Loss of focus (p = 1×10 - 4)、 Dice loss (p < 0.05) and Tversky Loss (p = 4×10 - 4).

The axial slice obtained from the example segmentation is shown in the figure 5 Shown .

Although the kidneys are usually well segmented , There are only slight differences between loss functions , But the quality of tumor segmentation varies greatly . A low tumor recall score with a loss function based on cross entropy is reflected in segmentation , Metastasis occurred at the boundary between the tumor and the kidney , Conducive to kidney prediction . The highest quality segmentation is observed by unifying the focus loss , It has the most accurate tumor contour .

Discussion and conclusions

In this study , We propose a new layered framework to include various Dice And loss function based on cross entropy , And use it to deduce Unified Focal loss, It sums up Dice And the loss function based on cross entropy to deal with class imbalance . We are dealing with 2D Binary system 、3D Binary sum 3D Five category unbalanced data sets with multi class segmentation (CVC ClinicDB、DRIVE、BUS2017、BraTS20 and KiTS19) Admiral Unified Focal loss Compared with the other six loss functions .Unified Focal loss Always get the highest in five data sets DSC and IoU fraction , The performance observed using the asymmetric metric variant is slightly better than the symmetric variant . We proved that Unified Focal loss The optimization of can be simplified as adjusting a single γ Hyperparameters , We observe that it is stable , Therefore, it is easy to optimize ( chart 3).

The significant differences in the performance of models using different loss functions highlight the importance of loss function selection in the task of class unbalanced image segmentation . The most striking is the use of distribution based loss functions in highly unbalanced KiTS19 The performance of segmenting renal tumor categories on the dataset is poor ( surface 6). In view of the greater representation of classes occupying a larger region in the loss based on cross entropy , This sensitivity to class imbalances is expected . Generally speaking , be based on Dice The loss function and composite loss function of perform better on class unbalanced data , But a notable exception is BraTS20 Data sets , among Dice Loss and Tversky The performance of loss is significantly lower than other loss functions . This may reflect the relationship with Dice Loss related instability gradient problem , This leads to suboptimal convergence and poor performance . Composite loss function ( for example Combo loss and Unified Focal loss) Always perform well on the dataset , This is due to the increased gradient stability of components based on cross entropy , And based on Dice The robustness of components to class imbalance . Qualitative evaluation is related to performance indicators , Use the highest quality segmentation observed by the unified focus loss ( chart 4-6). As expected , No difference in training time was observed between any of the loss functions used in these experiments .

Fig.5 BraTS20 An example of each loss function of the data set is the segmented axial slice . Provide images and ground truth For reference . False positives are highlighted in magenta , False negatives are highlighted in green . The yellow arrows highlight sample areas with different segmentation quality .

Fig.6 KiTS19 An example of each loss function of the data set is the segmented axial slice . Provide images and ground truth For reference . The red outline corresponds to the kidney , The blue outline corresponds to the tumor .

Our research has some limitations . First , We limit our framework and comparison to include only those based on Dice And a subset of the most popular variants of the loss function based on cross entropy . However , It should be noted that ,Unified Focal loss It also generalizes other loss functions that are not included , for example DiceFocal loss (Zhu et al., 2019b) and Asymmetricsimilarity loss (Hashemi et al., 2018). The main loss function not included is the boundary based loss function (Kervadec wait forsomeone ,2019;Zhu wait forsomeone ,2019a), This is another kind of loss function , Instead of using cross entropy and based on Dice Loss of use distribution or area . secondly , It is not clear how to optimize in multi class segmentation tasks γ Hyperparameters . In our experiment , We regard both kidney and kidney tumors as rare , And designate γ = 0.5. By assigning different γ Values can be observed for better performance , for example ,KiTS19 The kidney category in the data set is four times the tumor category . however , Even with this simplification , We still use the unified focus loss function to obtain improved performance than other loss functions .

Last , We highlighted several areas for future research . In order to inform the loss function selection of class imbalance segmentation , It is important to compare the loss functions of more quantities and kinds , In particular, loss functions from other loss function categories and different categories of unbalanced data sets . We use the original U-Net Architecture to simplify and emphasize the importance of loss function to performance , But evaluate whether the performance gain can be extended to the most advanced deep learning methods ( for example nnU- Net(Isensee wait forsomeone ,2021 year )—— And whether this can complement or even replace alternatives , For example, training to deal with class imbalance or sampling based methods .

边栏推荐

- 2014 Alibaba web pre intern project analysis (1)

- Motion capture for snake motion analysis and snake robot development

- Aardio - does not declare the method of directly passing float values

- How to use flexible arrays?

- Method of canceling automatic watermarking of uploaded pictures by CSDN

- UVa 11732 – strcmp() Anyone?

- Export MySQL table data in pure mode

- Mysql 身份认证绕过漏洞(CVE-2012-2122)

- DR-Net: dual-rotation network with feature map enhancement for medical image segmentation

- Clip +json parsing converts the sound in the video into text

猜你喜欢

Export MySQL table data in pure mode

Web APIs DOM time object

Should novice programmers memorize code?

Improving Multimodal Accuracy Through Modality Pre-training and Attention

Aardio - does not declare the method of directly passing float values

MATLAB小技巧(27)灰色预测

CUDA exploration

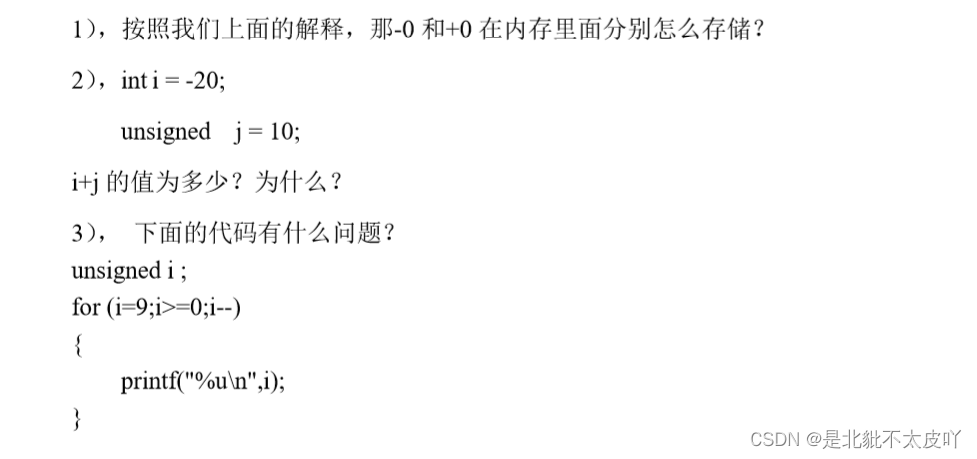

Signed and unsigned keywords

![pytorch_ Yolox pruning [with code]](/img/98/31d6258635ce48ac53819d0ca12d1d.jpg)

pytorch_ Yolox pruning [with code]

Balanced Multimodal Learning via On-the-fly Gradient Modulation(CVPR2022 oral)

随机推荐

新手程序员该不该背代码?

第十九章 使用工作队列管理器(二)

Signed and unsigned keywords

Designed for decision tree, the National University of Singapore and Tsinghua University jointly proposed a fast and safe federal learning system

如何实现文字动画效果

Web APIs DOM 时间对象

QT信号和槽

Config:invalid signature solution and troubleshooting details

枚举与#define 宏的区别

Some suggestions for foreign lead2022 in the second half of the year

Windows Auzre 微软的云计算产品的后台操作界面

[IELTS speaking] Anna's oral learning record part1

MySQL教程的天花板,收藏好,慢慢看

UVa 11732 – strcmp() Anyone?

Uniapp setting background image effect demo (sorting)

BasicVSR_PlusPlus-master测试视频、图片

That's why you can't understand recursion

const关键字

云原生技术--- 容器知识点

Detailed explanation of ThreadLocal