ICLR 2022 | Pre-trained language models based on an adversarial self-attention mechanism

2022-07-06 22:45:00 【Zhiyuan community】

Paper title:

Adversarial Self-Attention For Language Understanding

ICLR 2022

https://arxiv.org/pdf/2206.12608.pdf

There is a great deal of evidence that self-attention can benefit from allowing bias, that is, from adding a certain amount of prior knowledge (such as masking or smoothing of the attention distribution) to the original attention structure. This prior knowledge lets the model learn useful knowledge from a smaller corpus. However, such priors are generally task-specific, which makes it difficult to extend the model to a wide range of tasks. Adversarial training, in turn, improves the robustness of a model by adding perturbations to the input. The authors found that adding perturbations only to the input embeddings rarely confuses the attention maps: the model's attention barely changes before and after the perturbation.
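To make the two hand-written priors named above concrete, here is a minimal pure-Python sketch of attention weights for a single query, with a mask and a smoothing temperature applied to the raw scores. The function names (`softmax`, `biased_attention`), the `NEG_INF` constant, and the example scores are illustrative assumptions; they are not taken from the paper.

```python
import math

NEG_INF = -1e9  # assumed large negative score used to switch a position off


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def biased_attention(scores, mask=None, temperature=1.0):
    """Attention weights for one query with two hand-written priors.

    mask: list of 0/1 flags, where 1 means the key position is masked out.
    temperature: values above 1 smooth (flatten) the distribution.
    """
    if mask is None:
        mask = [0] * len(scores)
    biased = [s / temperature + (NEG_INF if m else 0.0)
              for s, m in zip(scores, mask)]
    return softmax(biased)


raw_scores = [2.0, 1.0, 0.5]  # hypothetical query-key scores
plain = biased_attention(raw_scores)
masked = biased_attention(raw_scores, mask=[0, 1, 0])
smoothed = biased_attention(raw_scores, temperature=10.0)
```

Both priors here are fixed by hand before training, which is the kind of task-specific choice the paragraph above describes as hard to transfer across tasks.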

ASA (Adversarial Self-Attention) maximizes the empirical training risk and learns a biased (or adversarial) structure by automating the process of building prior knowledge. The adversarial structure is learned from the input data, which distinguishes ASA from traditional adversarial training and from other variants of self-attention. A gradient reversal layer is used to combine the model and the adversary into a single whole. ASA is inherently interpretable.
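The idea behind a gradient reversal (inversion) layer can be illustrated with a toy scalar example: the layer is the identity in the forward pass and flips the sign of the gradient in the backward pass, so one ordinary gradient-descent step lowers the loss for the model parameter and raises it for the adversary parameter. This is only a sketch under stated assumptions; the squared-error loss, the parameters `w` and `a`, and all function names are hypothetical and do not reproduce the ASA objective.

```python
def grad_reverse_forward(value):
    """Forward pass of a gradient reversal layer: the identity."""
    return value


def grad_reverse_backward(upstream_grad, scale=1.0):
    """Backward pass: negate (and optionally scale) the incoming gradient."""
    return -scale * upstream_grad


def loss(w, a, x=1.0, y=0.0):
    """Toy loss: model weight w fits y, adversary offset a perturbs the fit."""
    return (w * x + a - y) ** 2


def joint_step(w, a, x=1.0, y=0.0, lr=0.1):
    """One plain gradient-descent step on both parameters.

    The adversary offset passes through the reversal layer, so the same
    descent update moves w downhill and a uphill on the loss.
    """
    residual = w * x + grad_reverse_forward(a) - y
    grad_w = 2.0 * residual * x                      # dL/dw
    grad_a = grad_reverse_backward(2.0 * residual)   # -dL/da after reversal
    return w - lr * grad_w, a - lr * grad_a


w0, a0 = 1.0, 0.0
w1, a1 = joint_step(w0, a0)
```

With these starting values, the updated `w1` reduces the loss when `a` is held fixed, while the updated `a1` increases it when `w` is held fixed, so a single optimizer can train both sides of the min-max game.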

边栏推荐

- NPDP certification | how do product managers communicate across functions / teams?

- cuda 探索

- NPM cannot install sharp

- Void keyword

- Leetcode exercise - Sword finger offer 26 Substructure of tree

- Aardio - 通过变量名将变量值整合到一串文本中

- MySQL教程的天花板,收藏好,慢慢看

- Slide the uniapp to a certain height and fix an element to the top effect demo (organize)

- Detailed explanation of ThreadLocal

- Rust knowledge mind map XMIND

猜你喜欢

Pit encountered by handwritten ABA

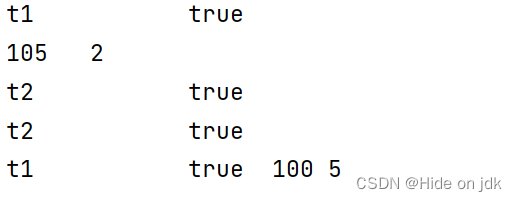

机试刷题1

MySQL authentication bypass vulnerability (cve-2012-2122)

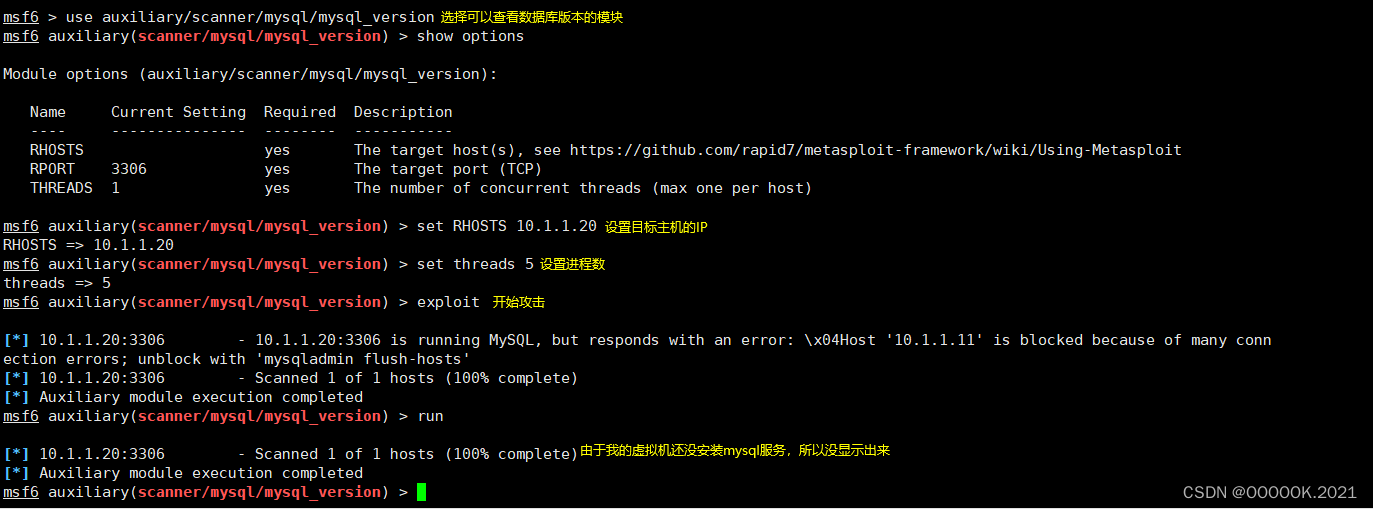

Aardio - integrate variable values into a string of text through variable names

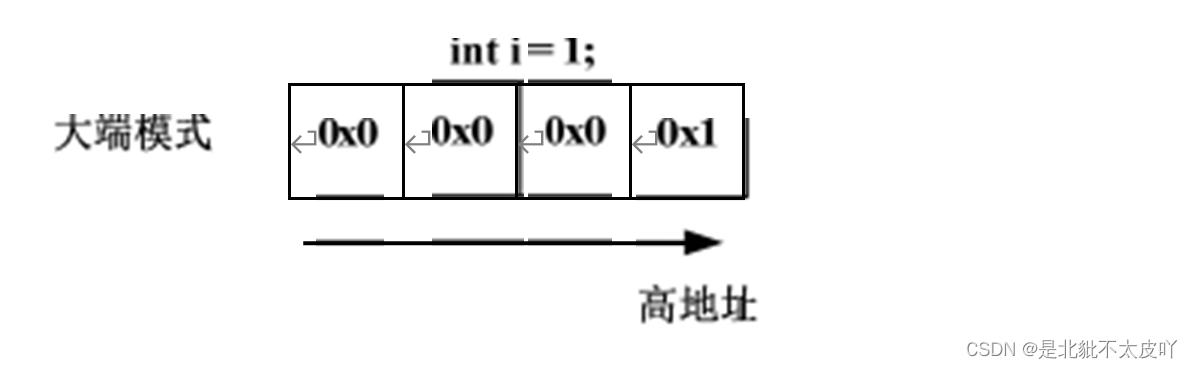

如何用程序确认当前系统的存储模式?

![pytorch_ Yolox pruning [with code]](/img/98/31d6258635ce48ac53819d0ca12d1d.jpg)

pytorch_ Yolox pruning [with code]

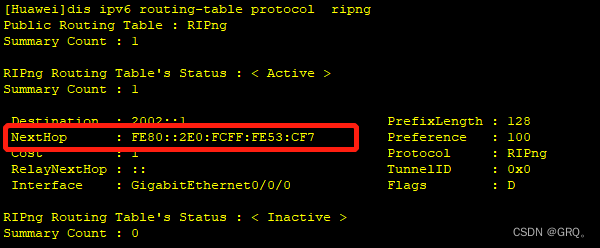

Advantages of link local address in IPv6

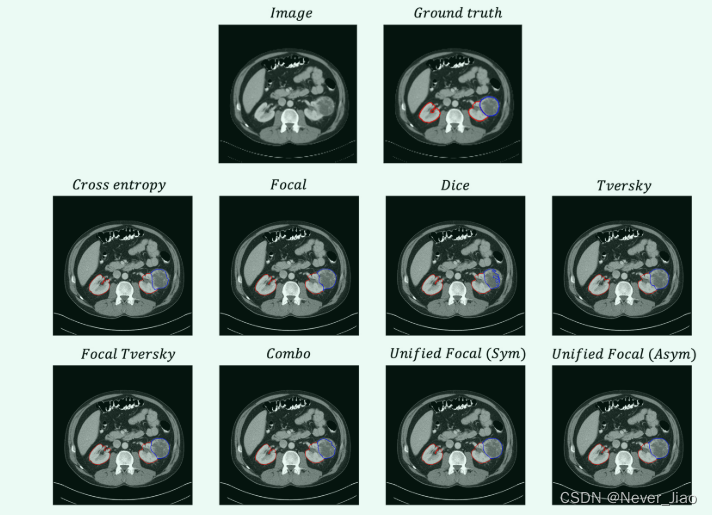

Unified Focal loss: Generalising Dice and cross entropy-based losses to handle class imbalanced medi

Financial professionals must read book series 6: equity investment (based on the outline and framework of the CFA exam)

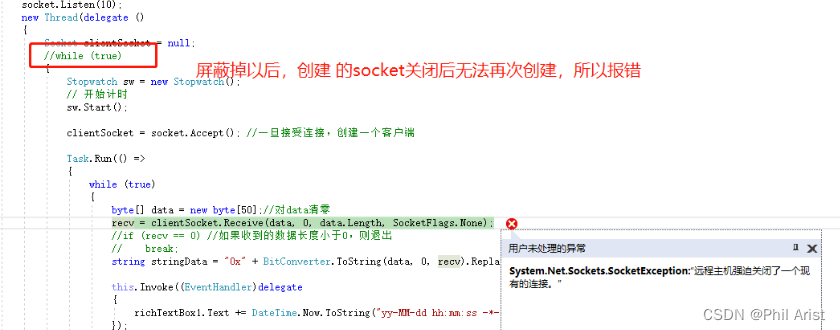

C# 三种方式实现Socket数据接收

随机推荐

Aardio - Method of batch processing attributes and callback functions when encapsulating Libraries

Senior soft test (Information System Project Manager) high frequency test site: project quality management

柔性数组到底如何使用呢?

leetcode:面试题 17.24. 子矩阵最大累加和(待研究)

MATLAB小技巧(27)灰色预测

手写ABA遇到的坑

自制J-Flash烧录工具——Qt调用jlinkARM.dll方式

MySQL教程的天花板,收藏好,慢慢看

关于声子和热输运计算中BORN电荷和non-analytic修正的问题

MySQL----初识MySQL

UVa 11732 – strcmp() Anyone?

Unity3d minigame unity webgl transform plug-in converts wechat games to use dlopen, you need to use embedded 's problem

Self made j-flash burning tool -- QT calls jlinkarm DLL mode

Uniapp setting background image effect demo (sorting)

The ceiling of MySQL tutorial. Collect it and take your time

Rust knowledge mind map XMIND

【雅思口语】安娜口语学习记录part1

雅思口语的具体步骤和时间安排是什么样的?

signed、unsigned关键字

Aardio - 利用customPlus库+plus构造一个多按钮组件