Entrepreneurship is a little risky. Read the data and do a business analysis

2022-07-02 00:52:00 【Coriander Chat Game】

1、 Cause

After the new year, being a programmer who is getting on in years, I wanted to start a side business. But blind entrepreneurship won't do. As Mr. Lu Xun said: direction matters more than effort. Choosing the right direction is very important, so the first step is data research. Acting blindly wastes time, wastes energy, and above all wastes my hard-earned money.

I usually order takeout during working hours, so I thought about going into takeout. Because I am a beginner, I wanted to join a branded takeout franchise. But most people know that franchising is full of pitfalls, so I am being cautious and doing research first.

2、 Data crawling

There are two big takeout platforms to crawl; here I choose one of them for analysis. I want to survey the takeout order volume of a business district. The data crawled covers the basics: the takeout category, the order count, the store name, and the location. I then summarize it and analyze the data for the business district.

3、 Crawling steps

1、 Confirm the URL to crawl

The data source is an app. The local approach was the Nox Android emulator plus Charles. I installed the environment and tried a few things, but I couldn't get it to work, so I abandoned this plan.

After searching on xxx's official website, I found an h5 interface. It does not seem to be public, and it took a long time to find the entry point.

After many twists and turns I finally found it. After logging in, however, the page still redirects to the official website, so you have to enter this URL again.

Because of CSDN's rules, the site name is written as xxx; please substitute the real one.

The final crawling data interface is:

2、 Analyze the URL

The data is requested with a GET request, so all the parameters are in the URL:

latitude=31.296829

&longitude=120.736135

&offset=16

&limit=8

&extras[]=activities

&extras[]=tags

&extra_filters=home

&terminal=h5

The first two parameters are the latitude and longitude, that is, the location. They determine which data is pulled; this is the place I want to look at.

offset: I'm not sure what this offset means. My guess is that it is a record count, most likely the number of entries already loaded (16 is a multiple of limit), so it is not critical for a single request.

limit: my guess is the number of records per page.

terminal: the type of client, here h5.
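As a minimal sketch, the query string above can be assembled with Python's standard library. `BASE_URL` is a hypothetical placeholder, since the real endpoint is not given in this post, and `build_url` is an illustrative helper name, not part of any platform API.

```python
from urllib.parse import urlencode

# Hypothetical placeholder: the post does not give the real endpoint.
BASE_URL = "https://example.invalid/restaurants"


def build_url(latitude, longitude, offset=0, limit=8):
    """Build the GET URL from the parameters listed above."""
    # A list of pairs (not a dict) is used because "extras[]" appears twice.
    params = [
        ("latitude", latitude),
        ("longitude", longitude),
        ("offset", offset),
        ("limit", limit),
        ("extras[]", "activities"),
        ("extras[]", "tags"),
        ("extra_filters", "home"),
        ("terminal", "h5"),
    ]
    return BASE_URL + "?" + urlencode(params)


print(build_url(31.296829, 120.736135, offset=16, limit=8))
```

`urlencode` percent-encodes the square brackets in `extras[]` as `%5B%5D`, which is the standard encoded form of the same key.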

3、 Analyze the returned data

{

has_next: true,

items: - [

- {

restaurant: + {... }

},

- {

restaurant: + {... }

},

- {

restaurant: + {... }

},

- {

restaurant: + {... }

},

- {

restaurant: + {... }

},

- {

restaurant: + {... }

},

- {

restaurant: + {... }

},

- {

restaurant: + {... }

}

],

meta: - {

rankId: "",

rankType: - {

505517688: "33"

}

}

}

The outermost data format looks like this. What we want is the restaurant layer inside each item, so it is worth analyzing in detail:

restaurant: - {

act_tag: 0,

activities:[],

address: null,

authentic_id: 502101541,

average_cost: null,

baidu_id: null,

bidding: null,

brand_id: 710858,

business_info: "{"pickup_scheme":"https://tb.xxx.me/wow/a/act/eleme/dailygroup/682/wupr?wh_pid=daily-186737&id=E14670700902593244","ad_info":{"isAd":"false"},"recent_order_num_display":" On sale 1244"}",

closing_count_down: 14234,

delivery_fee_discount: 0,

delivery_mode: + {... },

description: null,

distance: 2804,

favor_time: null,

favored: false,

flavors: + [... ],

float_delivery_fee: null,

float_minimum_order_amount: 20,

folding_restaurant_brand: null,

folding_restaurants:[],

has_story: false,

id: "E14670700902593244",

image_path: "https://img.alicdn.com/imgextra/i2/2212739556234/O1CN01jzFa3R1vvDoDnsojl_!!2212739556234-0-koubei.jpg",

is_new: false,

is_premium: true,

is_star: false,

is_stock_empty: 0,

is_valid: null,

latitude: null,

longitude: null,

max_applied_quantity_per_order: -1,

name: " Fruit cutter ( East Lake CBD shop )",

next_business_time: " Tomorrow, 9:30",

only_use_poi: null,

opening_hours: - [

"9:30/0:10"

],

order_lead_time: 42,

out_of_range: false,

phone: null,

piecewise_agent_fee: + {... },

platform: 0,

posters:[],

promotion_info: null,

rating: 4.7,

rating_count: null,

recent_order_num: 1244,

recommend: + {... },

recommend_reasons: + [... ],

regular_customer_count: 0,

restaurant_info: null,

scheme: "https://h5.xxx.me/newretail/p/shop/?store_id=546110047&geolat=31.296829&geolng=120.736135&o2o_extra_param=%7B%22rank_id%22%3A%22%22%7D",

status: 1,

support_tags: + [... ],

supports:[],

target_tag_path: "35a1bb9025ab98c28112d82f83f73d7ejpeg",

theme: null,

type: 1

}

The data is fairly easy to understand. The fields we need here are:

name: the name of the takeout store

business_info.recent_order_num_display: the sales figure

activities: the store's promotional activities

opening_hours: the store's business hours

support_tags: the store's classification
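The fields listed above could be pulled out of one `restaurant` object roughly as follows. This is a sketch under one assumption: that `business_info` arrives as a JSON-encoded string, as the sample response suggests, so it has to be decoded a second time. `extract_fields` is a hypothetical helper name.

```python
import json


def extract_fields(restaurant):
    """Keep only the fields needed for the business-district analysis."""
    # Assumption: business_info is a JSON string nested inside the JSON response.
    info = json.loads(restaurant.get("business_info") or "{}")
    return {
        "name": (restaurant.get("name") or "").strip(),
        "recent_order_num": restaurant.get("recent_order_num"),
        "recent_order_num_display": (info.get("recent_order_num_display") or "").strip(),
        "activities": restaurant.get("activities") or [],
        "opening_hours": restaurant.get("opening_hours") or [],
        "support_tags": restaurant.get("support_tags") or [],
    }


# Values copied from the sample response above; everything else is omitted.
sample = {
    "name": " Fruit cutter ( East Lake CBD shop )",
    "business_info": json.dumps({"recent_order_num_display": " On sale 1244"}),
    "recent_order_num": 1244,
    "activities": [],
    "opening_hours": ["9:30/0:10"],
}
print(extract_fields(sample))
```

Missing or null fields fall back to empty values, so a row can still be written for stores where the platform returns `null`.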

4、 Crawl data

I haven't written all of the crawling code yet, so what you see above is only the analysis.

The core of crawling is disguising the crawler as a normal request. We have already seen the web interface for the data, and there is basically nothing difficult about it.

In general, the obstacles are:

cookie requirements

JS encryption

token requests, and so on

Because I am not crawling in bulk, I can log in with the web client and use the data directly, disguising the requests as coming from the web page. At worst, I can crawl with Selenium directly, so this is not a big problem.
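A rough sketch of that approach, reusing the cookie from a logged-in browser session, might look like the following. It assumes that `offset` advances by `limit` per page and that `has_next` signals more data; both are guesses from the request and response shown earlier, not confirmed behaviour. `make_fetcher` and `crawl_all` are hypothetical names, and the real site may require additional headers or tokens.

```python
import json
import urllib.request
from urllib.parse import urlencode


def make_fetcher(base_url, cookie, latitude, longitude):
    """Return a fetch_page(offset, limit) function that sends a browser-like GET."""
    def fetch_page(offset, limit):
        query = urlencode({
            "latitude": latitude, "longitude": longitude,
            "offset": offset, "limit": limit, "terminal": "h5",
        })
        request = urllib.request.Request(
            base_url + "?" + query,
            # Cookie copied by hand from the logged-in web client.
            headers={"Cookie": cookie, "User-Agent": "Mozilla/5.0"},
        )
        with urllib.request.urlopen(request, timeout=10) as response:
            return json.load(response)
    return fetch_page


def crawl_all(fetch_page, limit=8, max_pages=50):
    """Collect restaurant dicts page by page until has_next is false."""
    restaurants = []
    offset = 0
    for _ in range(max_pages):  # hard cap so a bad response cannot loop forever
        page = fetch_page(offset, limit)
        items = page.get("items") or []
        restaurants.extend(item["restaurant"] for item in items if "restaurant" in item)
        if not page.get("has_next") or not items:
            break
        offset += limit
    return restaurants
```

Passing the fetch function in as an argument keeps the paging logic independent of the network, so it can be tried out against saved responses before touching the real site.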

5、 Summary

Looking at the data, some brands appear to have good sales. But I can't see when each store opened, so there is no way to judge whether the sales figures are inflated by fake orders. The average sales volume is not high, and the distribution is rather polarized. The takeout business is tough and the money is hard-earned. The platform also takes a 15% cut of turnover. Subtract the franchise fee, the shop rent, and the various equipment costs, and it really is a lot of money. In the end the platform earns all the money, and the merchant is left with basically no profit. So I don't recommend going into takeout. It feels stifling.

边栏推荐

- 2022 pinduoduo details / pinduoduo product details / pinduoduo SKU details

- JS common library CDN recommendation

- BPR (Bayesian personalized sorting)

- Deb file installation

- 2023款雷克萨斯ES产品公布,这回进步很有感

- [eight sorts ①] insert sort (direct insert sort, Hill sort)

- Bc35 & bc95 onenet mqtt (old)

- When installing mysql, there are two packages: Perl (data:: dumper) and Perl (JSON)

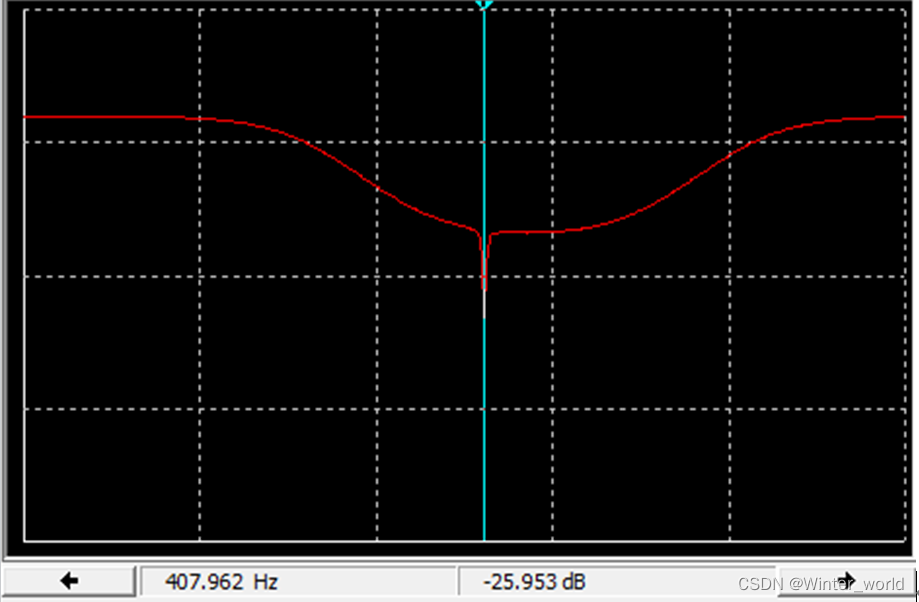

- Practical calculation of the whole process of operational amplifier hysteresis comparator

- Data analysis methodology and previous experience summary [notes dry goods]

猜你喜欢

2022 pinduoduo details / pinduoduo product details / pinduoduo SKU details

Geek DIY open source solution sharing - digital amplitude frequency equalization power amplifier design (practical embedded electronic design works, comprehensive practice of software and hardware)

Leetcode skimming: binary tree 02 (middle order traversal of binary tree)

RFID makes the inventory of fixed assets faster and more accurate

2022 high altitude installation, maintenance and removal of test question simulation test platform operation

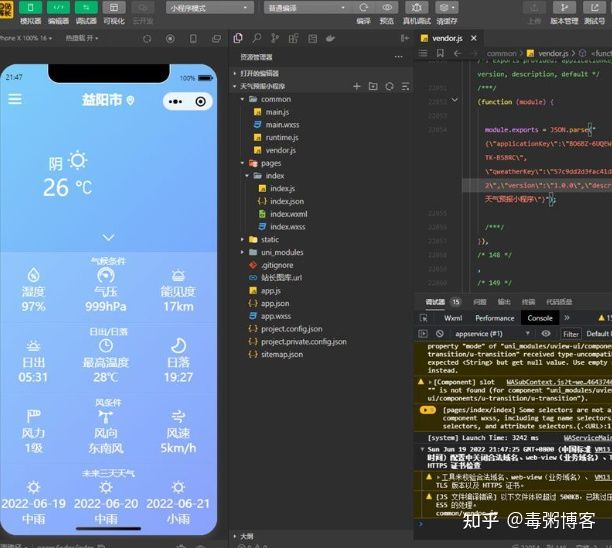

Weather forecast applet source code weather wechat applet source code

2022 safety officer-a certificate examination questions and online simulation examination

2022 safety officer-b certificate examination practice questions simulated examination platform operation

heketi 记录

Leetcode skimming: stack and queue 04 (delete all adjacent duplicates in the string)

随机推荐

Using multithreaded callable to query Oracle Database

ThreadLocal内存泄漏是什么,怎么解决

2023款雷克萨斯ES产品公布,这回进步很有感

Intelligent operation and maintenance practice: banking business process and single transaction tracking

JS——图片转base码 、base转File对象

[wechat authorized login] the small program developed by uniapp realizes the function of obtaining wechat authorized login

Geek DIY open source solution sharing - digital amplitude frequency equalization power amplifier design (practical embedded electronic design works, comprehensive practice of software and hardware)

Friends circle community program source code sharing

Promise和模块块化编程

[leetcode] number of maximum consecutive ones

Summary of Aix storage management

【CTF】bjdctf_2020_babystack2

一名优秀的软件测试人员,需要掌握哪些技能?

[CTF] bjdctf 2020 Bar _ Bacystack2

Leetcode skimming: stack and queue 02 (realizing stack with queue)

I want to ask, which is the better choice for securities companies? I don't understand. Is it safe to open an account online now?

Excel search and reference function

How to extract login cookies when JMeter performs interface testing

Otaku wallpaper Daquan wechat applet source code - with dynamic wallpaper to support a variety of traffic owners

EMC circuit protection device for surge and impulse current protection