
Linear algebra of deep learning

2022-07-07 00:41:00 【Peng Xiang】

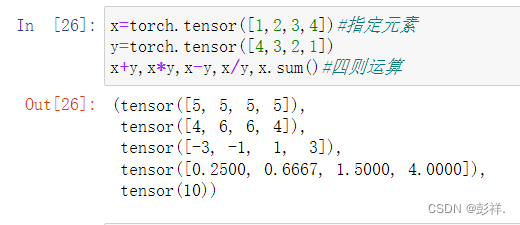

This post introduces some common tensor computations, such as summation and transposition.

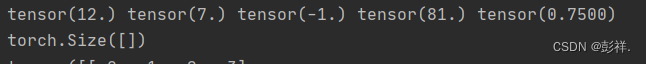

Scalar operations

import torch

x=torch.tensor(3.0)

y=torch.tensor(4.0)

print(x * y, x + y, x - y, x ** y, x / y)  # A single-element tensor (a scalar) supports all the usual arithmetic operations

print(x.shape)

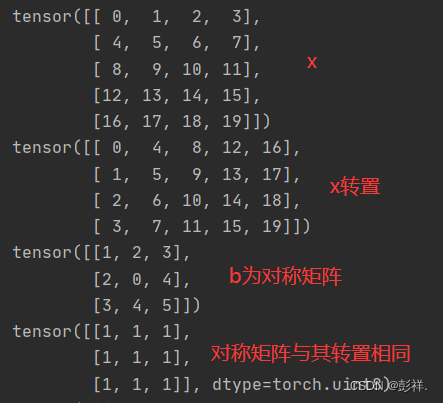
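As a small follow-up to the scalar example, the sketch below checks that a scalar tensor has an empty shape and shows how to read its value back as a plain Python number. The variable name `power` is introduced here only for illustration.

```python
import torch

x = torch.tensor(3.0)
y = torch.tensor(4.0)

# A scalar tensor has zero dimensions, so its shape is empty.
print(x.shape, x.dim())

# The result of an arithmetic operation is again a 0-dimensional tensor.
power = x ** y
print(power.dim())

# item() unwraps a single-element tensor into a plain Python float.
print(power.item(), type(power.item()))
```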

Matrix transposition

import torch

x=torch.arange(20).reshape(5,4)

print(x)

print(x.t())  # Transpose of the 5x4 matrix, giving a 4x5 matrix

B = torch.tensor([[1, 2, 3], [2, 0, 4], [3, 4, 5]])

print(B)

print(B == B.t())  # A symmetric matrix equals its own transpose, so every entry is True
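The comparison `B == B.t()` returns a whole matrix of booleans. If a single yes/no answer is wanted, one option is `torch.equal`, sketched below with the same `B` and `x` as in the post. The variable names `is_symmetric` and `all_match` are made up for this example.

```python
import torch

x = torch.arange(20).reshape(5, 4)
B = torch.tensor([[1, 2, 3], [2, 0, 4], [3, 4, 5]])

# torch.equal compares shape and values and returns one Python bool.
is_symmetric = torch.equal(B, B.t())

# Reducing the elementwise comparison with all() gives the same answer.
all_match = (B == B.t()).all().item()

print(is_symmetric, all_match)

# Transposing swaps the two dimensions; transposing twice restores the original.
print(x.t().shape)
print(torch.equal(x.t().t(), x))
```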

A note on assignment in Python: `y = x` only binds a second name to the same tensor, so an in-place change made through one name is visible through the other. Use `y = x.clone()` to create an independent copy of the data.

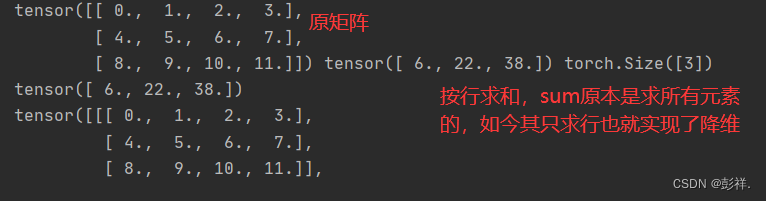
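The note on assignment versus `clone()` can be checked with a short sketch. The tensor values and the names `alias` and `x_copy` are chosen here purely for illustration.

```python
import torch

x = torch.arange(4, dtype=torch.float32)

alias = x           # plain assignment: both names refer to the same tensor
x_copy = x.clone()  # clone(): a new tensor with its own memory

x[0] = 100.0        # in-place change made through the original name

print(alias[0].item())   # the alias sees the change
print(x_copy[0].item())  # the clone keeps the old value
print(alias is x, x_copy is x)
```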

Dimension reduction by summation

import torch

A = torch.arange(12, dtype=torch.float32).reshape(3,4)

A_sum_axis1 = A.sum([1])  # Axis 0 gives one sum per column, axis 1 gives one sum per row, [0, 1] sums every element; the summed axis disappears from the shape

print(A, A_sum_axis1, A_sum_axis1.shape)

A = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4)

sum_A = A.sum(1)  # Summing a 3-D tensor over one axis leaves a 2-D tensor: axis 1 drops the second dimension, (2, 3, 4) -> (2, 4); likewise axis 0 drops the first and axis 2 the third

print(sum_A, sum_A.shape)

print(A)

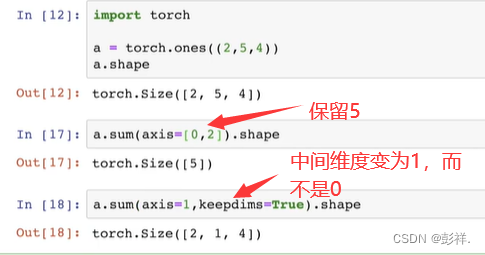
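To make the effect on shapes concrete, the following sketch sums the same 2x3x4 tensor over each axis in turn and prints the resulting shape. The names `sum_12` and `total` are introduced only for this example.

```python
import torch

A = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4)

# Summing over one axis removes that axis from the shape.
for axis in range(A.dim()):
    print(axis, tuple(A.sum(axis).shape))

# Summing over several axes at once removes all of them.
sum_12 = A.sum([1, 2])
print(tuple(sum_12.shape))

# Summing with no axis argument reduces the tensor to a scalar.
total = A.sum()
print(total.dim(), total.item())
```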

With `keepdims=True`, the summed axis is not dropped; it is kept with size 1, so the result has the same number of dimensions as the input.

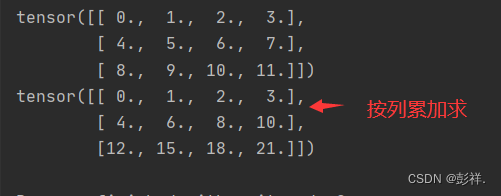
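A minimal sketch of why keeping the axis is useful: the size-1 axis lets the sums broadcast against the original matrix. The code uses `keepdim=True`, the spelling in PyTorch's documentation; recent versions should also accept `keepdims`. The row normalization is an illustrative use chosen here, not something taken from the post.

```python
import torch

A = torch.arange(12, dtype=torch.float32).reshape(3, 4)

row_sums = A.sum(1)                     # shape (3,): the summed axis is dropped
row_sums_kept = A.sum(1, keepdim=True)  # shape (3, 1): the axis is kept with size 1

print(row_sums.shape, row_sums_kept.shape)

# The (3, 1) result broadcasts against the (3, 4) matrix,
# so every row is divided by its own sum.
normalized = A / row_sums_kept
print(normalized.sum(1))  # each row now sums to 1, up to rounding
```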

Cumulative sum

import torch

A = torch.arange(12, dtype=torch.float32).reshape(3,4)

A_cumsum_axis0 = A.cumsum(0)  # Running total down axis 0; the shape stays (3, 4)

print(A)

print(A_cumsum_axis0)
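Unlike `sum`, `cumsum` does not reduce the number of dimensions. The sketch below checks that the last row of the running total matches the ordinary sum over the same axis. The name `running` is introduced only for this example.

```python
import torch

A = torch.arange(12, dtype=torch.float32).reshape(3, 4)

# Row i of the result holds the sum of rows 0..i of A.
running = A.cumsum(0)
print(running.shape)
print(running)

# The final row of the running total equals the plain sum over axis 0.
print(torch.equal(running[-1], A.sum(0)))
```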

Dot product, matrix-vector product, and matrix-matrix product

import torch

y = torch.ones(4, dtype = torch.float32)

print(y)

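print(torch.dot(y, y))  # Vector dot product: the sum of the elementwise products

y = torch.ones(4, dtype=torch.float32)

x = torch.arange(12, dtype=torch.float32).reshape(3, 4)

print(torch.mv(x, y))  # Matrix-vector product: (3, 4) times (4,) gives (3,)

B = torch.ones(4, 3)

print(torch.mm(x, B))  # Matrix-matrix product: (3, 4) times (4, 3) gives (3, 3)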
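The three products are closely related. The sketch below rebuilds each one from simpler pieces and compares it with the built-in call; the names `dot_manual`, `mv_manual`, and `mm_operator` are made up for this example.

```python
import torch

x = torch.arange(12, dtype=torch.float32).reshape(3, 4)
y = torch.ones(4, dtype=torch.float32)
B = torch.ones(4, 3)

# Dot product: multiply elementwise, then sum.
dot_manual = (y * y).sum()

# Matrix-vector product: one dot product per row of x.
mv_manual = torch.stack([torch.dot(row, y) for row in x])

# Matrix-matrix product: the @ operator is shorthand for torch.matmul,
# which agrees with torch.mm for 2-D inputs.
mm_operator = x @ B

print(torch.equal(dot_manual, torch.dot(y, y)))
print(torch.equal(mv_manual, torch.mv(x, y)))
print(torch.equal(mm_operator, torch.mm(x, B)))
print(mv_manual.shape, mm_operator.shape)
```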

边栏推荐

- 2022年PMP项目管理考试敏捷知识点(9)

- Leecode brushes questions to record interview questions 17.16 massagist

- Devops can help reduce technology debt in ten ways

- What can the interactive slide screen demonstration bring to the enterprise exhibition hall

- Interesting wine culture

- Command line kills window process

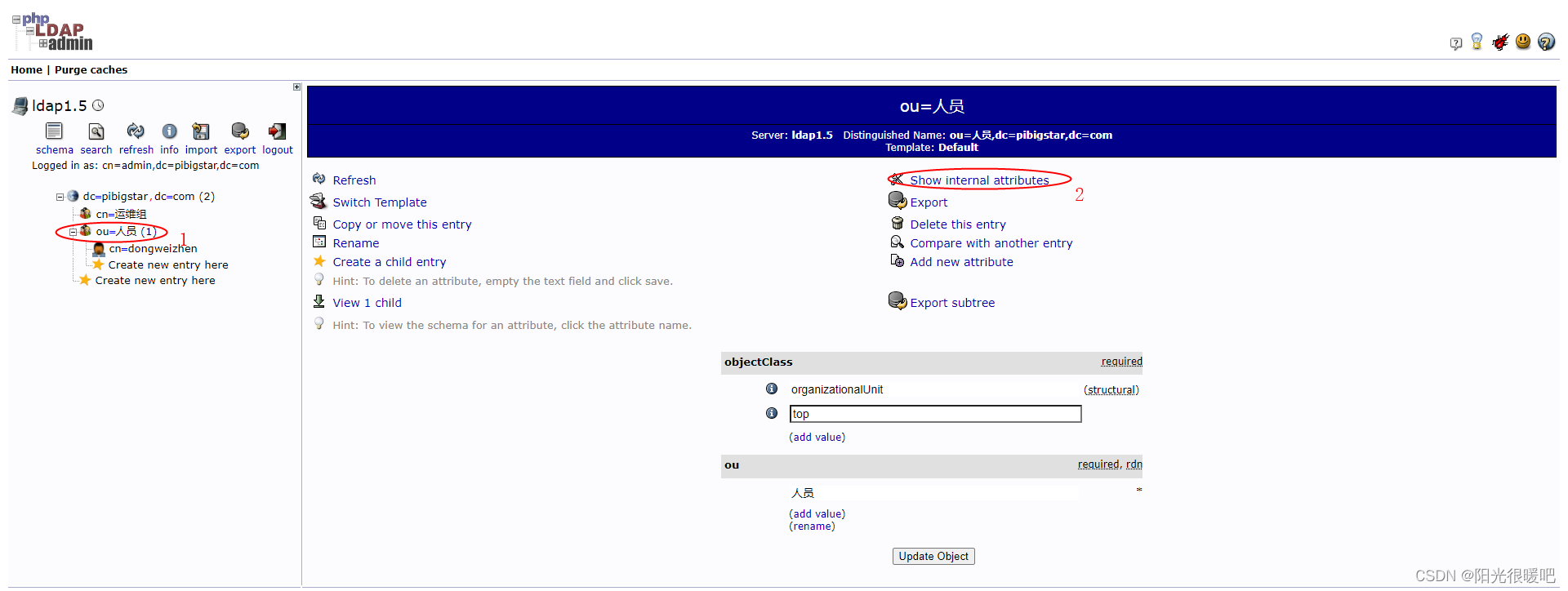

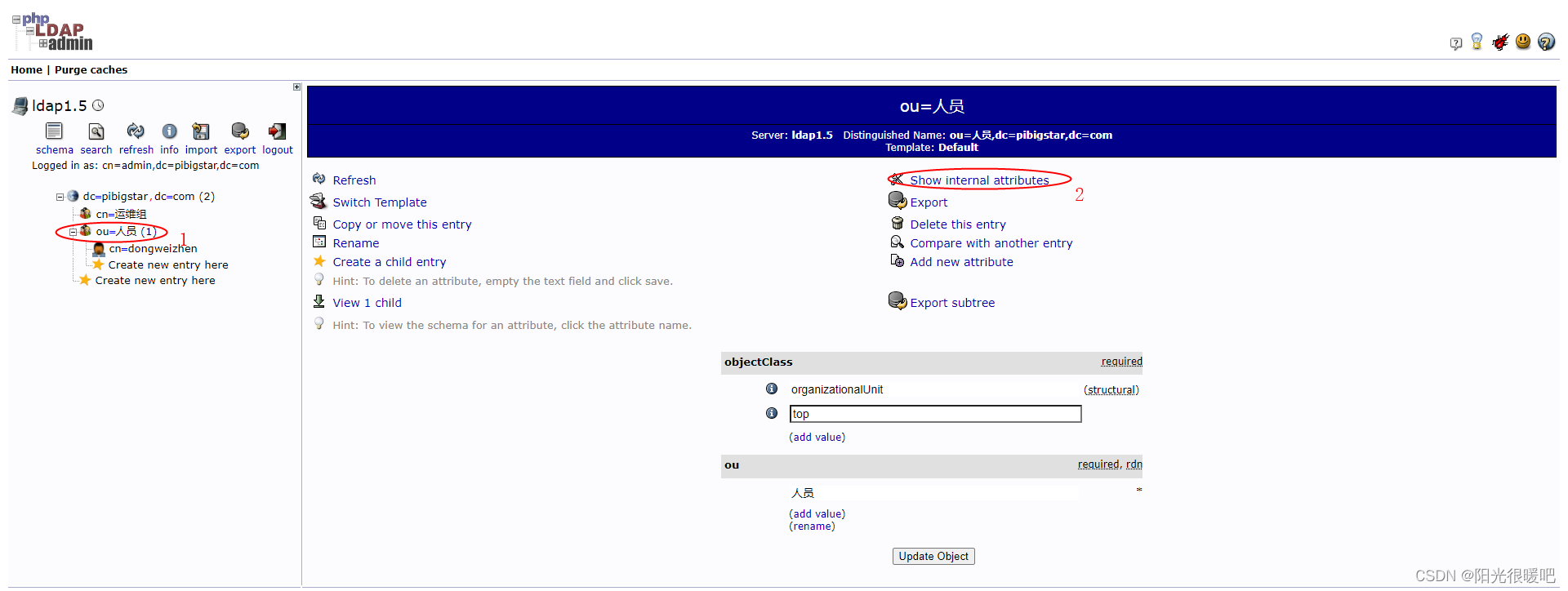

- Racher integrates LDAP to realize unified account login

- On February 19, 2021ccf award ceremony will be held, "why in Hengdian?"

- 基于GO语言实现的X.509证书

- Leecode brush questions record interview questions 32 - I. print binary tree from top to bottom

猜你喜欢

准备好在CI/CD中自动化持续部署了吗?

Everyone is always talking about EQ, so what is EQ?

System activity monitor ISTAT menus 6.61 (1185) Chinese repair

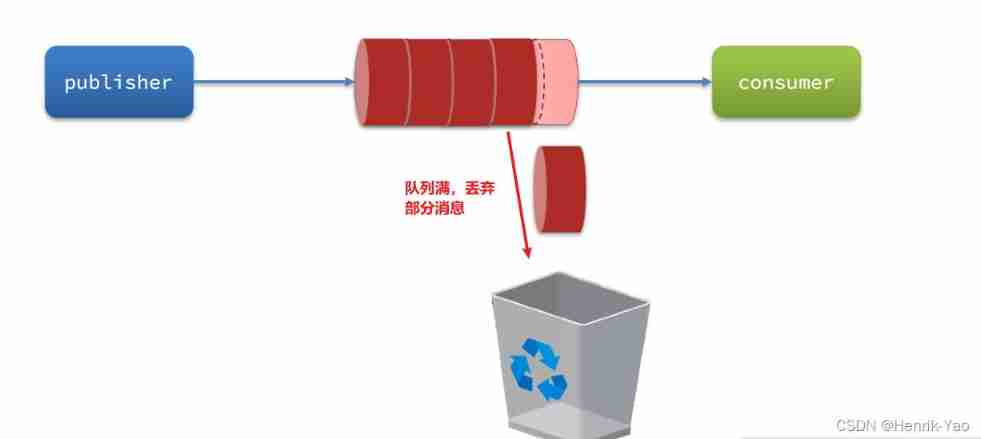

Service asynchronous communication

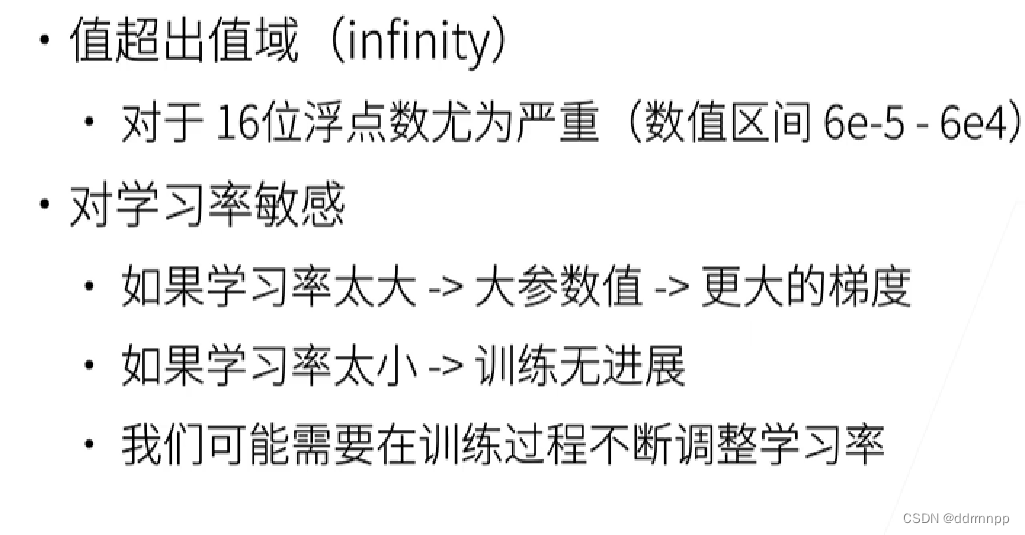

Alexnet experiment encounters: loss Nan, train ACC 0.100, test ACC 0.100

rancher集成ldap,实现统一账号登录

深度学习之数据处理

alexnet实验偶遇:loss nan, train acc 0.100, test acc 0.100情况

Racher integrates LDAP to realize unified account login

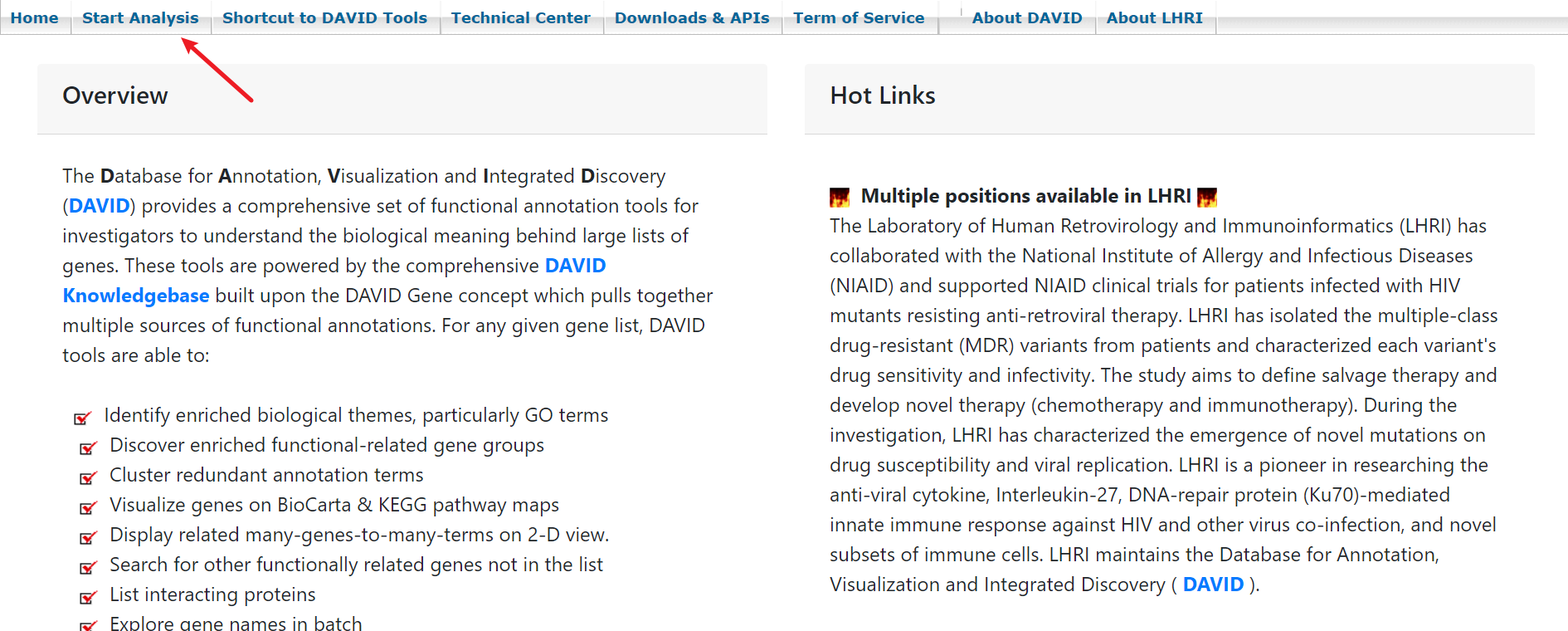

Geo data mining (III) enrichment analysis of go and KEGG using David database

随机推荐

深度学习之数据处理

Typescript incremental compilation

AI超清修复出黄家驹眼里的光、LeCun大佬《深度学习》课程生还报告、绝美画作只需一行代码、AI最新论文 | ShowMeAI资讯日报 #07.06

37页数字乡村振兴智慧农业整体规划建设方案

Data analysis course notes (V) common statistical methods, data and spelling, index and composite index

Attention SLAM:一种从人类注意中学习的视觉单目SLAM

Mujoco produces analog video

Article management system based on SSM framework

uniapp中redirectTo和navigateTo的区别

Three application characteristics of immersive projection in offline display

Stm32f407 ------- SPI communication

C language input / output stream and file operation [II]

2022/2/12 summary

Explain in detail the implementation of call, apply and bind in JS (source code implementation)

js导入excel&导出excel

Mujoco Jacobi - inverse motion - sensor

Everyone is always talking about EQ, so what is EQ?

37 pages Digital Village revitalization intelligent agriculture Comprehensive Planning and Construction Scheme

Cross-entrpy Method

509 certificat basé sur Go