当前位置:网站首页>Service grid is still difficult - CNCF

Service grid is still difficult - CNCF

2020-11-09 00:40:00 【On jdon】

The service grid is more mature than it was a year or two ago , however , It's still hard for users . Service grid has two technical roles , Platform owners and service owners . Platform owners ( Also known as grid Administrator ) Have a service platform , It also defines the overall strategy and implementation of service owner adopting service grid . The service owner owns one or more services in the grid .

For platform owners , It's easier to use a service grid , Because the project is being implemented to simplify the network configuration , How to configure security policy and visualize the whole grid . for example , stay Istio in , Platform owners can set up whatever they like Istio Authentication policy or authorization policy . The platform owner can host / port / TLS The gateway is configured on the related settings , At the same time, the actual routing behavior and traffic policy of the target service are entrusted to the service owner . Service owners who implement well tested and common scenarios can start with Istio Benefit from usability improvement of , Thus, it is easy to load its microservices into the grid .

And service owners have a steep learning curve .

I think the service grid is still very difficult , Here's why :

1. Lack of clear guidance on whether a service grid is needed

Before users start evaluating multiple service grids or delving into specific service grids , They need guidance on whether the service grid can help . Unfortunately , It's not a simple one, it's / No problem . There are many factors to consider :

- How many people in your engineering organization ?

- How many micro services do you have ?

- What language do these microservices use ?

- Do you have experience with open source projects ?

- What platform do you run the service on ?

- What functions does the service grid need ?

- For a given service grid project , Whether the function is stable ?

For various service grid projects , The answer becomes different , This adds complexity . Even in Istio Inside , We also use microservices to make the most of it Istio 1.5 Grid in earlier versions , But the decision will Multiple Istio The control plane component changes to Overall application to reduce operational complexity . for example , It makes more sense to run a whole service rather than four or five microservices .

2. After injecting into the sidecar , Your service may be interrupted immediately

Last Thanksgiving , I try to use the latest Zookeeper Rudder chart helps users run in grid Zookeeper service .Zookeeper As Kubernetes StatefulSet function . Once I try to inject the special envoy side car agent into every Zookeeper pod,Zookeeperpod Will not run and continue to restart , Because they can't build leaders and communicate among members .

By default ,Zookeeper monitor Pod IP Address for communication between servers . however ,Istio And other service grids require local hosts (127.0.0.1) As the listening address , This makes Zookeeper Servers can't communicate with each other .

Working with upstream communities , We are Zookeeper,Casssandra,Elasticsearch,Redis and Apache NiFi Added Configuration solution . I'm sure there are other apps that are not compatible with sidecar .

3. Your service may behave abnormally when it starts or stops

Kubernetes Lack of a standard way to declare container dependencies . There is one Sidecar Kubernetes Enhancement suggestions (KEP), however Kubernetes The version has not yet implemented , And it will take some time to stabilize the feature . meanwhile , Service owners may observe unexpected behavior when starting or stopping .

To help solve this problem ,Istio Global configuration options are implemented for platform owners , In order to Application startup delay To Sidecar Until it's ready .Istio Service owners will soon be allowed to pod Level to configure .

4. Zero configuration for your service , But not zero code change

One of the main goals of the service grid project is to provide zero configuration for service owners . image Istio Some of these projects have added intelligent protocol detection capabilities , To help detect protocols and simplify the grid entry experience , however , We still recommend that users explicitly state the agreement in production . By means of Kubernetes Add appProtocol Set up , Service owners can now use standard methods for the newer Kubernetes edition ( for example 1.19) Running in Kubernetes Service configuration application protocol .

In order to make full use of the function of service grid , Unfortunately, zero code changes are not possible .

- In order for service owners and platform owners to observe service tracking correctly , Between services Propagation trace header crucial .

- To avoid confusion and unexpected behavior , It's important to reexamine the service code for retries and timeouts , To see if adjustments should be made and to understand their behavior and sidecar The relationship between retrying and timeout of proxy configuration .

- In order to make Sidecar The agent checks the traffic sent from the application container and intelligently uses the content to make decisions , for example Request based routing or Header based authorization , For service owners , It is important to ensure that pure traffic is sent from the source service and that the target Service trusts sidecar The agent safely upgrades the connection .

5. Service owners need to understand the nuances of client and server configuration

Before using the service grid , I don't know there's too much with overtime and from Envoy The agent retries the relevant configuration . Most users are familiar with request timeouts , Idle timeout and number of retries , But there are many nuances and complexities :

- When it comes to idle timeout ,HTTP There is one under the agreement idle_timeout, It applies to HTTP Connection manager and upstream cluster HTTP Connect . There is one stream_idle_timeout A flow and existence do not The upstream or The downstream Activity and even route idle_timeout Rewritable stream_idle_timeout.

- Automatically retry It's also complicated . Retrying is not just the number of retries , And it's the maximum number of retries allowed , This may not be the actual number of retries . The actual number of retries depends on the retrial condition , Route requests Overtime s And the interval between retries , These intervals must fall between the total request timeout and Retrying the budget s within .

In the world of non service grid , There is only... Between the source container and the target container 1 A connection pool , But in the service grid world , Yes 3 A connection pool :

- Source container to source Sidecar agent

- Source Sidecar Agent to target Sidecar agent

- The goal is Sidecar Proxy to target container

Each of these connection pools has its own individual configuration . Carl · stoney (Karl Stoney) Of Blog A good description of these problems , Explains the complexity , Any one of the three could go wrong and how to fix them .

版权声明

本文为[On jdon]所创,转载请带上原文链接,感谢

边栏推荐

- 14.Kubenetes简介

- Introduction to nmon

- SQL语句的执行

- When iperf is installed under centos7, the solution of make: * no targets specified and no makefile found. Stop

- 对象

- Octave basic syntax

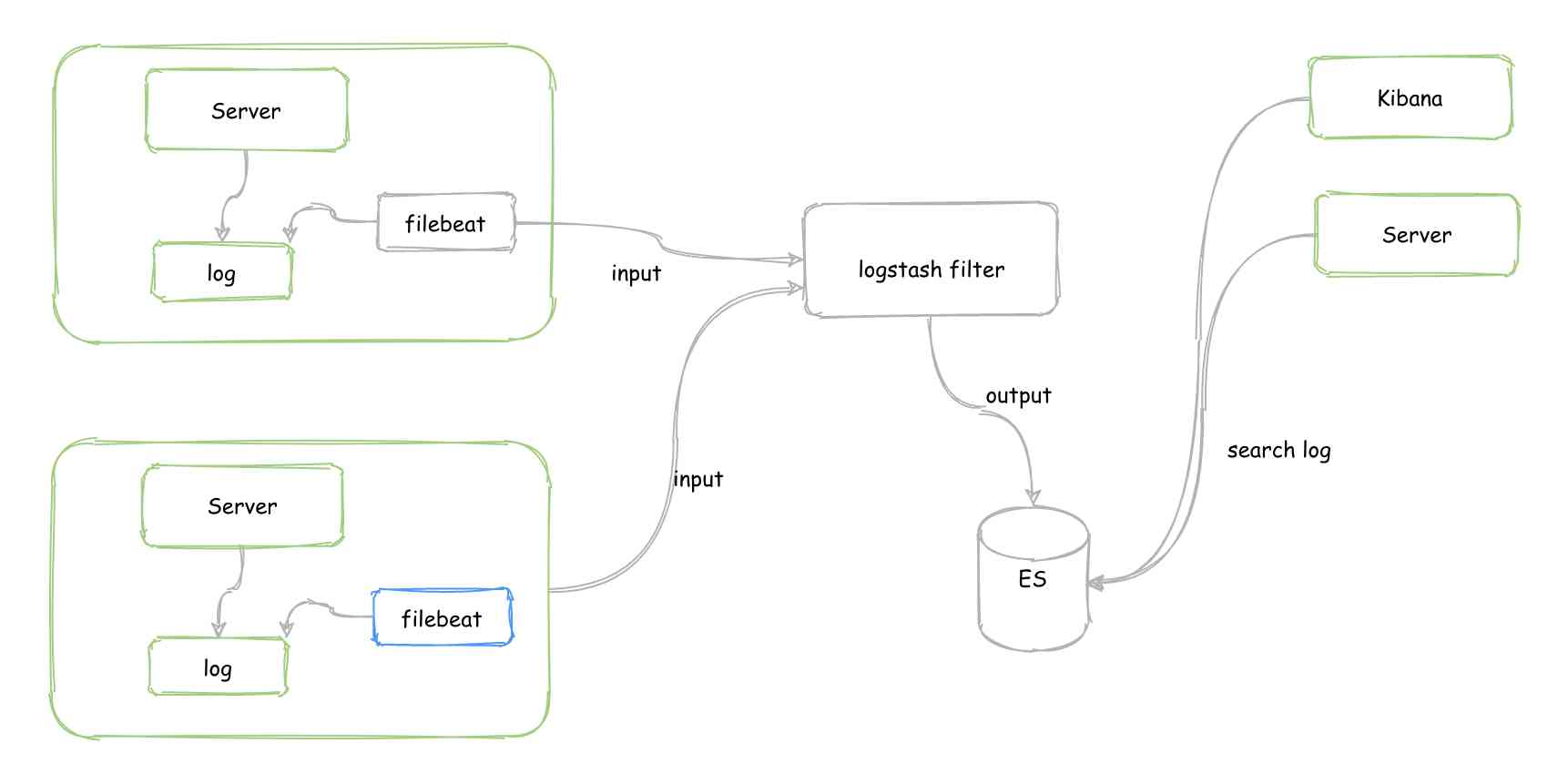

- A few lines of code can easily transfer traceid across systems, so you don't have to worry about losing the log!

- Five design patterns frequently used in development

- Share API on the web

- 教你如何 分析 Android ANR 问题

猜你喜欢

A few lines of code can easily transfer traceid across systems, so you don't have to worry about losing the log!

上线1周,B.Protocal已有7000ETH资产!

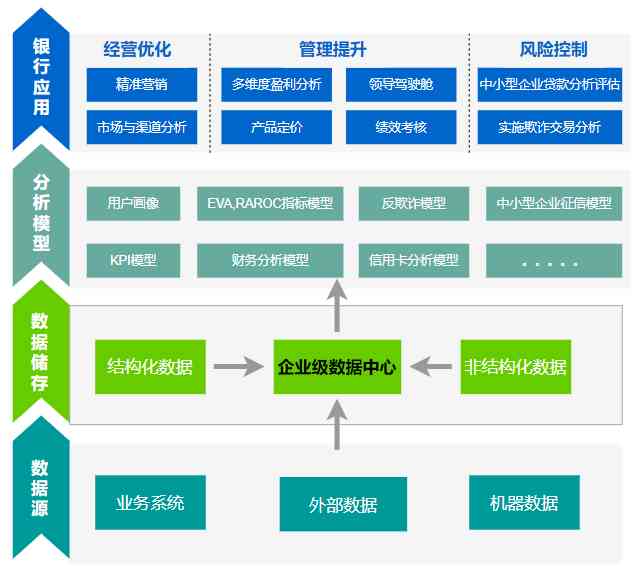

移动大数据自有网站精准营销精准获客

使用容器存储表格数据

简单介绍c#通过代码开启或关闭防火墙示例

Depth first search and breadth first search

Platform in architecture

国内三大云数据库测试对比

VIM 入门手册, (VS Code)

C/C++编程笔记:指针篇!从内存理解指针,让你完全搞懂指针

随机推荐

架构中台图

SaaS: another manifestation of platform commercialization capability

App crashed inexplicably. At first, it thought it was the case of the name in the header. Finally, it was found that it was the fault of the container!

leetcode之反转字符串中的元音字母

Introduction skills of big data software learning

大数据岗位基础要求有哪些?

Why need to use API management platform

Several common playing methods of sub database and sub table and how to solve the problem of cross database query

Database design: paradigms and anti paradigms

How to analyze Android anr problems

实现图片的复制

链表

分库分表的几种常见玩法及如何解决跨库查询等问题

使用容器存储表格数据

Introduction to nmon

通过canvas获取视频第一帧封面图

How does semaphore, a thread synchronization tool that uses an up counter, look like?

App crashed inexplicably. At first, it thought it was the case of the name in the header. Finally, it was found that it was the fault of the container!

What courses will AI programming learn?

Get the first cover image of video through canvas