Looking at 2022 trends in recommender-system sequence modeling through top-conference papers

2022-07-06 02:24:00 【kaiyuan_sjtu】

Author | Schrödinger's Cat

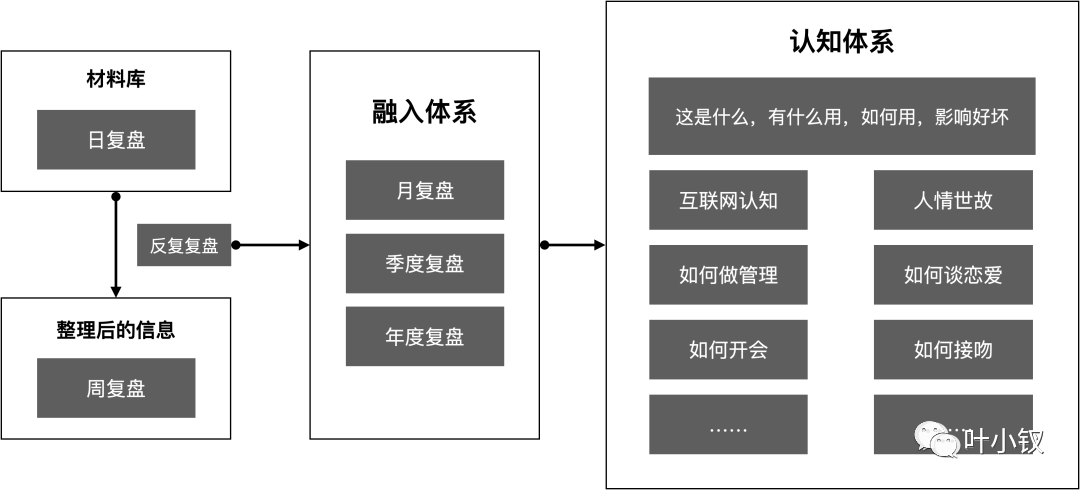

I recently read several 2022 top-conference papers on sequence modeling. The models all look complex and sophisticated, but on closer inspection these papers essentially change the input, and the model is designed mainly to fit that new input. Let's see what the latest top conferences are playing with.

Background

The purpose of sequence modeling is to mine users' interests from their historical behaviors and then recommend items they are likely to be interested in.

First, let me introduce two classic sequence-modeling papers.

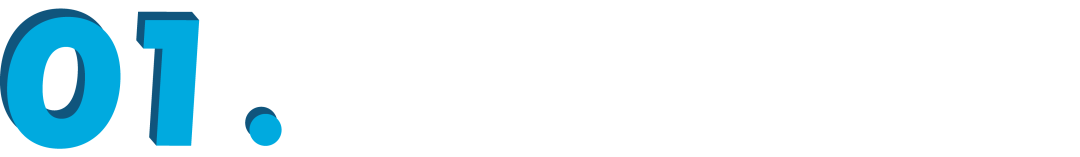

The first is, in my view, the pioneering work: Alibaba's DIN, whose model structure is shown in the figure. The paper argues that when scoring a target item, the items in the user's behavior sequence should carry different weights, and it uses target attention to compute those weights. DIN's user behavior input is a one-dimensional item sequence; after embedding, it gains one more dimension, the embedding dimension. Keep this input format in mind: the later algorithms all enrich it.

▲ DIN
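To make target attention concrete, here is a minimal pure-Python sketch, assuming each behavior item is already embedded as a d-dimensional list of floats. The scorer is a hypothetical simplification: DIN itself scores each behavior with a small MLP activation unit and does not force the weights to sum to 1, while this sketch uses a dot product followed by a softmax for readability.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def target_attention(behaviors: List[Vector], target: Vector) -> Vector:
    """Pool an (N, d) behavior sequence into one d-dim user-interest vector.

    Each behavior item is weighted by its relevance to the target item.
    Simplification (not DIN's exact method): dot-product scores + softmax
    instead of DIN's MLP activation unit with unnormalized weights.
    """
    weights = softmax([dot(e, target) for e in behaviors])
    return [sum(w * e[k] for w, e in zip(weights, behaviors)) for k in range(len(target))]


if __name__ == "__main__":
    history = [[1.0, 0.0], [0.0, 1.0]]  # toy embeddings for two clicked items
    print(target_attention(history, [3.0, 0.0]))  # leans toward the first item
```

Because the weights depend on the target, the same history produces a different user vector for each candidate item, which is the core idea DIN argues for.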

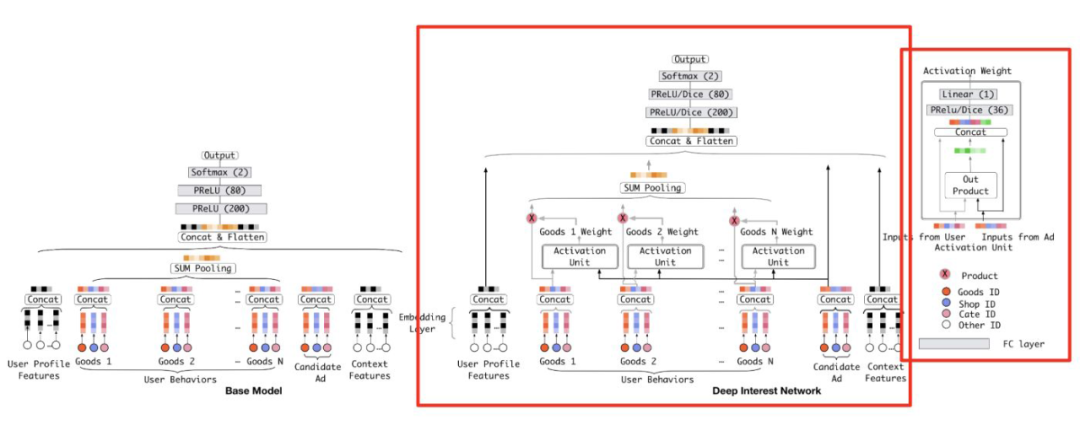

The second is the classic long-sequence paper: Alibaba's SIM, whose model structure is shown in the figure. Building on DIN, it lengthens the user behavior sequence. If compute allows, simply applying DIN to the longer sequence is not a bad approach. Back to SIM: the paper keeps the short-term behavior sequence and uses DIEN (a variant of DIN) to extract the user's short-term interest; for the long sequence it uses a two-stage approach, first retrieving the top-k items and then computing target attention over them.

▲ SIM
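The two-stage idea for the long sequence can be sketched as follows. This is a hedged illustration, not SIM's implementation: the first stage here ranks behaviors by embedding inner product (similar in spirit to SIM's soft search; SIM also describes a category-based hard search), and the second stage reuses plain dot-product softmax attention. The function names and the default `k` are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def retrieve_top_k(long_seq: List[Vector], target: Vector, k: int) -> List[Vector]:
    """Stage 1: keep only the k behaviors most relevant to the target item."""
    return sorted(long_seq, key=lambda e: dot(e, target), reverse=True)[:k]


def attend(seq: List[Vector], target: Vector) -> Vector:
    """Stage 2: softmax target attention over the short retrieved subsequence."""
    scores = [dot(e, target) for e in seq]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [sum(x / z * e[j] for x, e in zip(exps, seq)) for j in range(len(target))]


def long_term_interest(long_seq: List[Vector], target: Vector, k: int = 50) -> Vector:
    # The attention step now runs over at most k items instead of the whole sequence.
    return attend(retrieve_top_k(long_seq, target, k), target)
```

Stage 1 still touches every behavior, but only with a cheap score, so the expensive attention cost no longer grows with the full sequence length.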

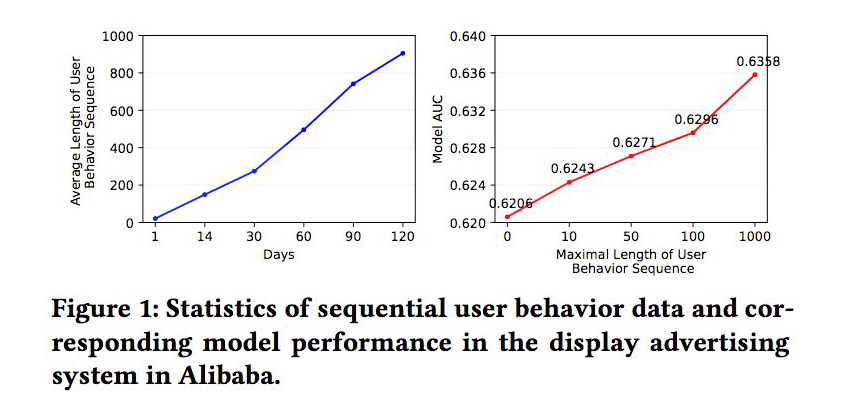

A quick digression: Alibaba's MIMN found that AUC rises as the behavior sequence gets longer.

Approach 1: adding side info to the sequence

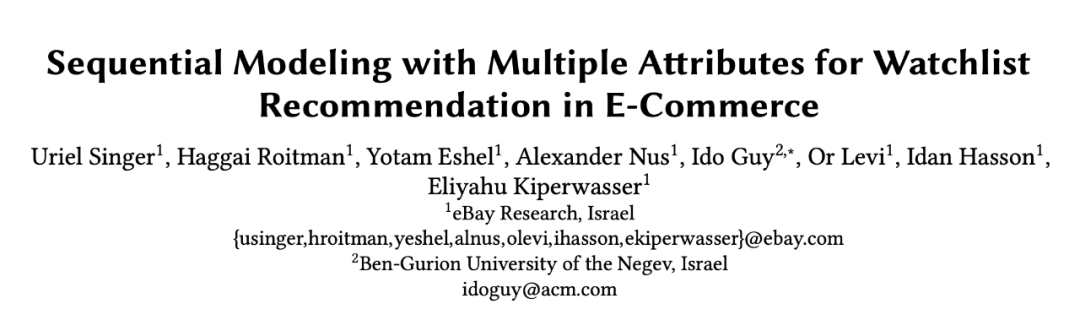

This is an eBay paper from WSDM 2022.

Paper title: Sequential Modeling with Multiple Attributes for Watchlist Recommendation in E-Commerce

Paper link: https://arxiv.org/pdf/2110.11072.pdf

Earlier sequences were just item sequences; eBay additionally includes item attributes such as price. These attributes change over time: for example, when an item's price changes, the user may become interested in it.

The input therefore changes from a one-dimensional sequence to a 2D matrix, and the attention computation correspondingly becomes two-dimensional (attention2D). The model structure is shown in the figure.

▲ attention2D

The computation proceeds as follows:

1. Embedding layer: with attribute information, the input is a 2D matrix; embedding adds one more dimension, so the shape is N × C × d, where N is the sequence length, C is the number of attributes, and d is the embedding dimension;

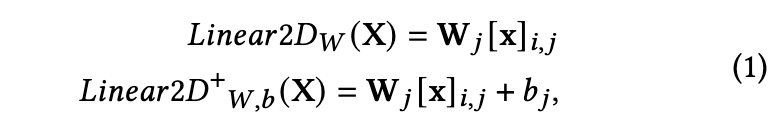

2. With the extra attribute dimension, how does the embedding become Q, K and V? The same linear transformation is applied to each attribute (also called a channel):

▲ Linear2D: i indexes the item, j indexes the attribute

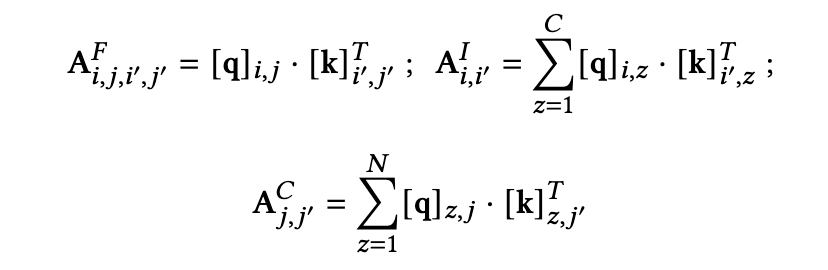

3. How is the attention score computed from the 2D Q and K? The paper introduces three granularities. The first is a 4D tensor whose entry for (i, j, i', j') represents the interaction between attribute j of item i and attribute j' of item i'; this is the finest-grained interaction. The second is a 2D array obtained by summing over all channels for each item pair, representing interactions along the item dimension. The third is a 2D array obtained by summing over all items for each channel pair, representing interactions along the channel dimension. The weighted sum of these three scores is the final score (the weights are also learned).

▲ 2D attention score

4. After the attention step, the output still has two dimensions per item: the attribute dimension and the embedding dimension. Dimensionality reduction happens before the prediction layer: the attribute dimension is pooled, and the remaining embedding dimension is fed into the MLP.
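The four steps can be tied together in a small pure-Python sketch, assuming Q, K and V have already been produced by Linear2D and are nested lists of shape (N, C, d). Several details are assumptions rather than the paper's definitions: the item-level term sums per-channel dot products over matching channels, the channel-level term sums over matching items, the learned mixing weights are passed in as plain floats `alpha`, `beta`, `gamma`, and the attribute axis is mean-pooled at the end.

```python
import math
from typing import List

Tensor3 = List[List[List[float]]]  # shape (N items, C attributes/channels, d embedding)


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention2d(q: Tensor3, k: Tensor3, v: Tensor3,
                alpha: float = 1.0, beta: float = 1.0, gamma: float = 1.0) -> Tensor3:
    """Every (item, attribute) cell attends to every (item, attribute) cell."""
    n, c, d = len(q), len(q[0]), len(v[0][0])
    # Item-level scores (N x N): per-channel dot products summed over channels.
    item = [[sum(dot(q[i][j], k[i2][j]) for j in range(c)) for i2 in range(n)]
            for i in range(n)]
    # Channel-level scores (C x C): per-item dot products summed over items.
    chan = [[sum(dot(q[i][j], k[i][j2]) for i in range(n)) for j2 in range(c)]
            for j in range(c)]
    cells = [(i2, j2) for i2 in range(n) for j2 in range(c)]
    out: Tensor3 = []
    for i in range(n):
        row = []
        for j in range(c):
            # Finest-grained (4D) term plus the two coarser terms, mixed by weights.
            scores = [alpha * dot(q[i][j], k[i2][j2]) + beta * item[i][i2]
                      + gamma * chan[j][j2] for i2, j2 in cells]
            m = max(scores)  # subtract the max for numerical stability
            w = [math.exp(s - m) for s in scores]
            z = sum(w)
            row.append([sum(wt / z * v[i2][j2][t] for wt, (i2, j2) in zip(w, cells))
                        for t in range(d)])
        out.append(row)
    return out


def pool_attributes(x: Tensor3) -> List[List[float]]:
    """Mean-pool the attribute axis: (N, C, d) -> (N, d), ready for the MLP."""
    return [[sum(ch[t] for ch in channels) / len(channels) for t in range(len(channels[0]))]
            for channels in x]
```

Keeping the three score terms separate makes it easy to see how setting one mixing weight to zero removes that granularity; in a trained model those weights would be learned, as the article notes.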

Approach 2: widening the sequence

This is an Alibaba paper from WSDM 2022.

Paper title: Triangle Graph Interest Network for Click-Through Rate Prediction

Paper link: https://arxiv.org/pdf/2202.02698.pdf

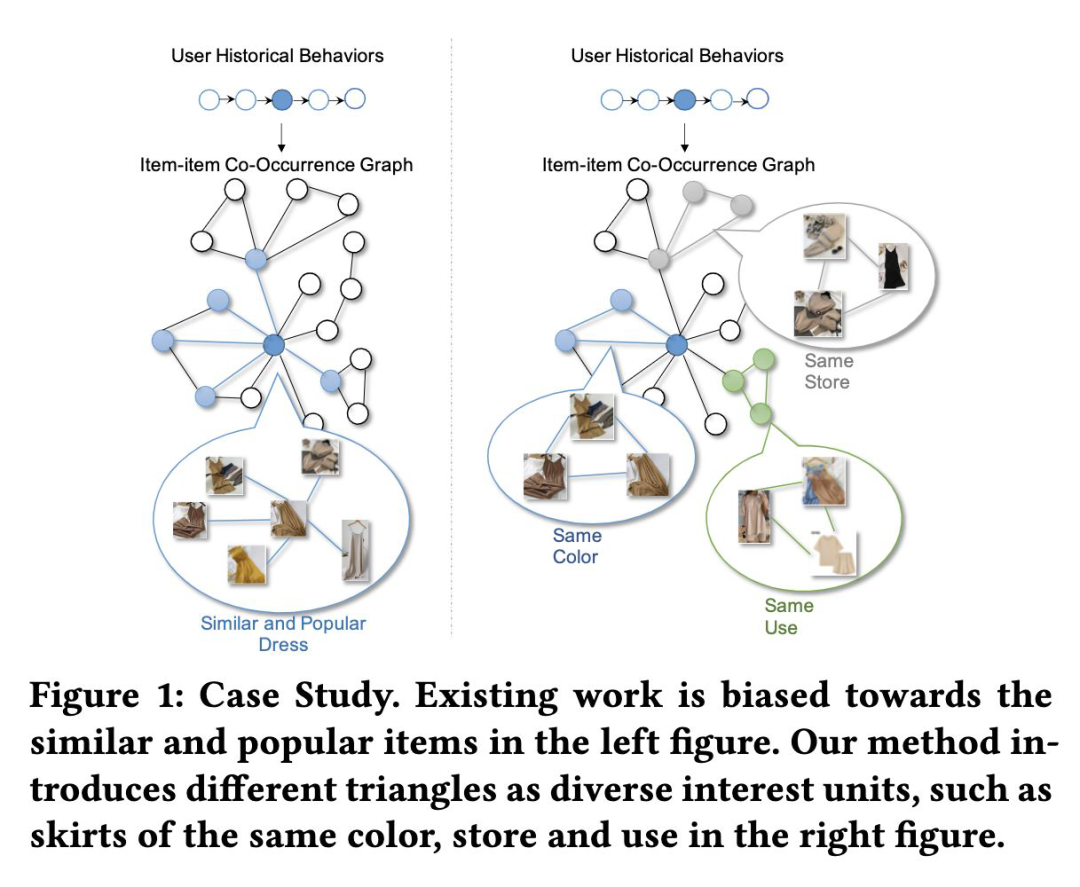

However long the sequence gets, it still only contains this user's own behavior. This paper looks directly at the items in other users' behavior: it builds an item graph from all users' behavior sequences, where each node is an item and an edge between two items means that some user clicked those two items one after the other.
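Building such a graph from behavior logs can be sketched as below. This is an assumption-laden illustration: items are plain string IDs, each user's clicks are already sorted by time, and edges are treated as undirected and unweighted, which may differ from the paper's construction.

```python
from collections import defaultdict
from typing import Dict, List, Set


def build_item_graph(sequences: List[List[str]]) -> Dict[str, Set[str]]:
    """Connect two items whenever some user clicked them one right after the other."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for seq in sequences:  # one time-ordered click sequence per user
        for a, b in zip(seq, seq[1:]):  # consecutive click pairs
            if a != b:  # skip repeated clicks on the same item
                graph[a].add(b)
                graph[b].add(a)
    return dict(graph)


if __name__ == "__main__":
    logs = [["a", "b", "c"], ["c", "d"]]  # two users' click sequences
    print(build_item_graph(logs))
```

Item `d` never appears in the first user's history, yet it is now reachable from that user's item `c` through the second user: this is exactly the kind of out-of-history item the approach brings in.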

The paper first defines triangles on the graph. The exact definition is not the point here; you can simply think of a triangle as a neighborhood on the graph.

▲ triangle
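Since the article only asks you to think of a triangle as a graph neighborhood, here is the generic graph-theory version as a hedged illustration: three items that are pairwise connected, enumerated around one item. The exact triangle definition and multi-order expansion used in TGIN may differ, and the adjacency format (a dict of neighbor sets) is an assumption.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def triangles_with(node: str, graph: Dict[str, Set[str]]) -> List[Tuple[str, str, str]]:
    """Return every (node, u, w) where node, u and w are pairwise adjacent."""
    neighbors = sorted(graph.get(node, set()))
    # Any two neighbors of `node` that are also linked to each other close a triangle.
    return [(node, u, w) for u, w in combinations(neighbors, 2)
            if w in graph.get(u, set())]


if __name__ == "__main__":
    g = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}
    print(triangles_with("a", g))  # [('a', 'b', 'c')]
```

Sorting the neighbors only makes the output deterministic; it does not change which triangles are found.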

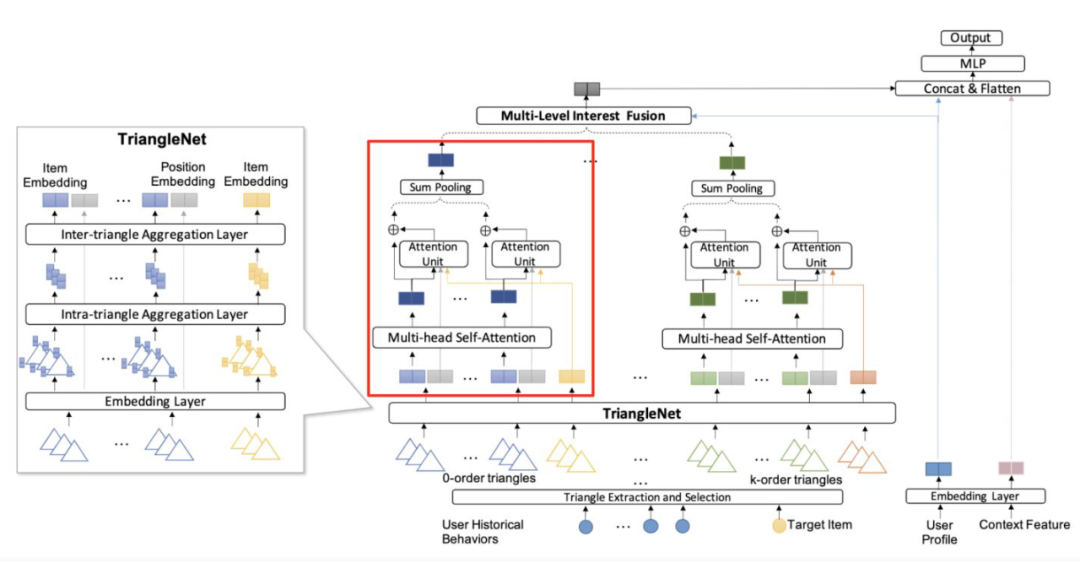

The TGIN model structure is shown in the figure. It looks complex but is actually quite simple. The red box is a DIN-like network that computes attention; the left side processes triangles, with a network that computes attention over multi-order triangles (roughly, multi-hop neighbors on the graph).

▲ TGIN

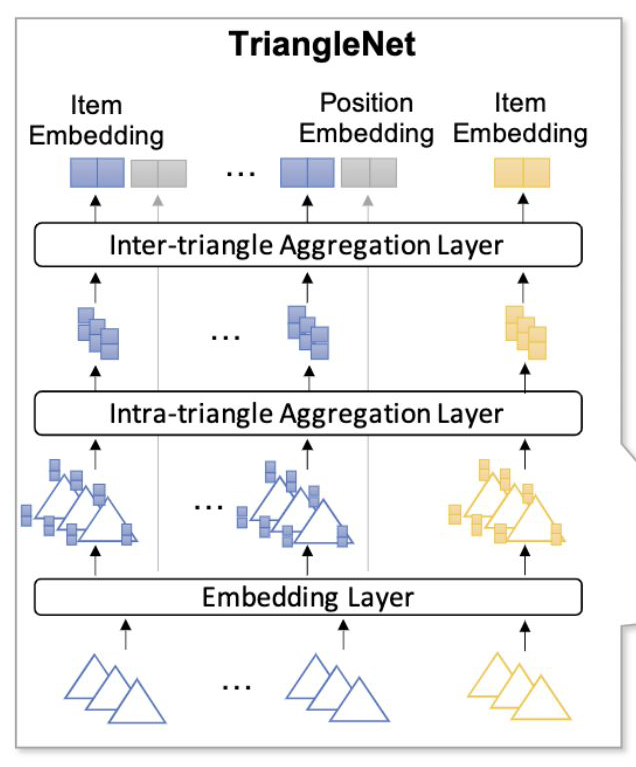

TriangleNet for a given order: each order has multiple triangles, and each triangle contains 3 items. The items are first aggregated inside each triangle (intra) with plain average pooling, then aggregated across triangles (inter) with multi-head self-attention.

▲ TriangleNet
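The two aggregation steps for one order can be sketched in pure Python as follows, assuming each triangle arrives as three d-dimensional item embeddings. For brevity the inter step uses single-head scaled dot-product self-attention without learned projections, whereas the paper uses multi-head self-attention; treat it as a simplified stand-in.

```python
import math
from typing import List

Vector = List[float]


def mean_pool(vectors: List[Vector]) -> Vector:
    """Intra-triangle aggregation: plain average pooling over the items."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def self_attention(xs: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention (no learned Q/K/V projections)."""
    d = len(xs[0])
    out = []
    for q in xs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        m = max(scores)  # subtract the max for numerical stability
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi / z * x[t] for wi, x in zip(w, xs)) for t in range(d)])
    return out


def triangle_net(triangles: List[List[Vector]]) -> List[Vector]:
    """One order: pool inside each triangle (intra), then attend across triangles (inter)."""
    pooled = [mean_pool(tri) for tri in triangles]
    return self_attention(pooled)
```

Average pooling treats the three items in a triangle as interchangeable, while the attention step lets triangles that agree with each other reinforce one another.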

Approach 3: segmenting and labeling the sequence

Another Alibaba paper from WSDM 2022.

Paper title: Modeling Users’ Contextualized Page-wise Feedback for Click-Through Rate Prediction in E-commerce Search

Paper link: https://guyulongcs.github.io/files/WSDM2022_RACP.pdf

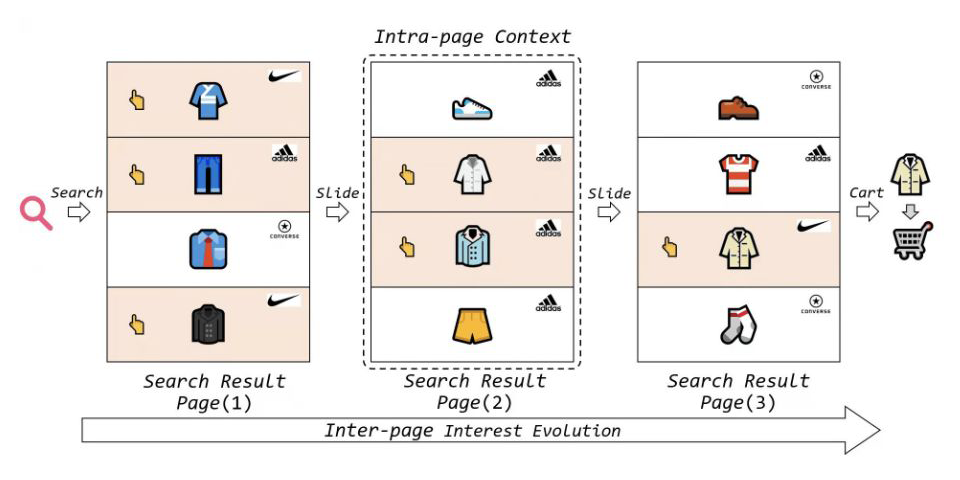

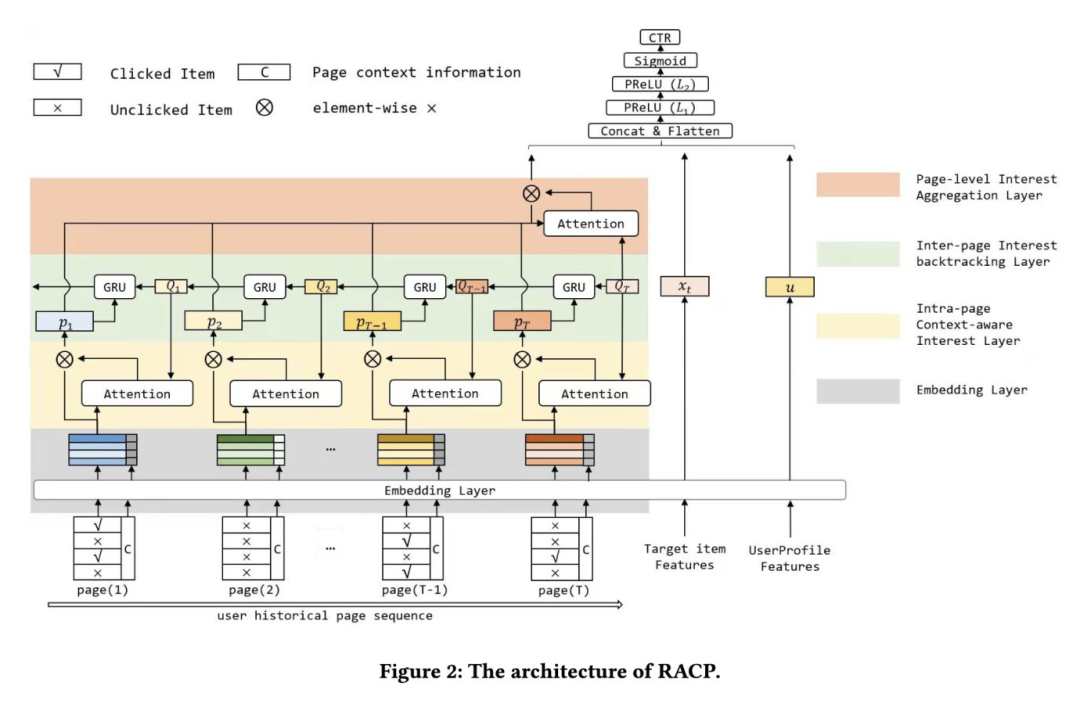

The sequence is organized by page. Each page contains not only clicked POIs (positive feedback) but also unclicked POIs (negative feedback), which lets the model capture context information within a page and the evolution of interest across pages. What can page information teach the model? For example, a user may skip an item not because they dislike it, but because the same page shows a cheaper item of the same type.
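Turning a flat impression log into this page-shaped input might look like the sketch below. The `Impression` record, its fields, and the assumption that page IDs increase over time are all hypothetical; the point is only that each page keeps its clicked (positive) and unclicked (negative) items together.

```python
from typing import Dict, List, NamedTuple


class Impression(NamedTuple):
    page_id: int   # hypothetical field, assumed to increase with time
    item: str
    clicked: bool


def group_pages(log: List[Impression]) -> List[Dict[str, List[str]]]:
    """Group impressions by page, keeping positive and negative feedback side by side."""
    pages: Dict[int, Dict[str, List[str]]] = {}
    for imp in log:
        page = pages.setdefault(imp.page_id, {"pos": [], "neg": []})
        page["pos" if imp.clicked else "neg"].append(imp.item)
    return [pages[p] for p in sorted(pages)]  # chronological page order
```

Keeping the unclicked items next to the clicked ones is what allows a model to learn comparisons such as "skipped because a cheaper item was on the same page".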

The model structure is shown in the figure. For a two-level input like this, the model usually also has two layers: one extracts information within each page (intra) and one aggregates across pages (inter). This model has three layers, with an interest backtracking layer in the middle. One more detail: the pages are filtered, keeping only those in the same category as the current query vector.

▲ RACP

Let's focus on the interest backtracking layer, the green part of the figure. Typical models only look at the relevance between long-term interest and the target item, and ignore consistency with short-term interest. In this paper, short-term interest means the interest represented by each page.

This layer introduces a query vector representing the user's current interest (this is a search paper, so there is a query). The other vectors are no longer real query vectors but attention query vectors, which are passed leftward step by step through a GRU and influence the attention computed inside each page.
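A rough sketch of this backtracking loop follows, assuming pages are lists of d-dimensional item embeddings ordered oldest to newest. The GRU here is a toy cell with scalar, elementwise weights (the default constants are made up, not learned parameters), and feeding each page's attended interest into the GRU as its input is an assumption about the wiring, not the paper's exact design.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def attend(page: List[Vector], query: Vector) -> Vector:
    """Intra-page attention: weight the page's items by their match with the query."""
    scores = [sum(a * b for a, b in zip(e, query)) for e in page]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi / z * e[t] for wi, e in zip(w, page)) for t in range(len(query))]


def toy_gru_step(h: Vector, x: Vector, wz: float = 0.5, uz: float = 0.5,
                 wr: float = 0.5, ur: float = -0.5, wh: float = 1.0,
                 uh: float = 1.0) -> Vector:
    """GRU update with hypothetical scalar weights applied elementwise."""
    new_h = []
    for hi, xi in zip(h, x):
        z = sigmoid(wz * xi + uz * hi)  # update gate
        r = sigmoid(wr * xi + ur * hi)  # reset gate
        cand = math.tanh(wh * xi + uh * r * hi)  # candidate state
        new_h.append((1 - z) * hi + z * cand)
    return new_h


def backtrack(pages: List[List[Vector]], current_query: Vector) -> List[Vector]:
    """Walk from the newest page to the oldest, carrying an attention query vector."""
    q = current_query
    interests = []
    for page in reversed(pages):
        interests.append(attend(page, q))  # the query steers attention inside this page
        q = toy_gru_step(q, interests[-1])  # pass the query one step further left
    return list(reversed(interests))  # restore oldest-to-newest order
```

The design point being illustrated is that the newest page is read with the real query, and every older page is read with a query that has been gradually updated by the pages in between.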

Summary

The sections above introduced several ways of playing with the sequence on the input side.

Approach 1: add item attributes. The choice of attribute matters a lot: it must change over time and be something users are very sensitive to, such as price or subsidies, rather than some irrelevant attribute.

Approach 2: add more items, not by making the sequence longer but through a graph (in essence, through other users), bringing in items the user has never seen (or never interacted with) but may be interested in. These items can be added offline, and the method can be dressed up in impressive-sounding terms. My personal take is that it works essentially because the model learns more co-occurrence. When scoring a POI for a user, the model would otherwise learn only the co-occurrence relations among the POIs in that user's own history; introducing more co-occurrence relations in a reasonable way (for example, through other users' historical behavior) broadens the model's view.

Approach 3: segment the sequence (by page or by session) and mix the sequence (not just clicks), restoring as faithfully as possible the context in which the user made choices, so the model can infer the user's click logic within each segment and analyze the psychology behind their choices.

边栏推荐

- 更改对象属性的方法

- Get the relevant information of ID card through PHP, get the zodiac, get the constellation, get the age, and get the gender

- 数据准备工作

- 0211 embedded C language learning

- 在GBase 8c数据库中使用自带工具检查健康状态时,需要注意什么?

- sql表名作为参数传递

- Prepare for the autumn face-to-face test questions

- 【clickhouse】ClickHouse Practice in EOI

- 3D drawing ()

- 2022年版图解网络PDF

猜你喜欢

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Advanced technology management - what is the physical, mental and mental strength of managers

![[postgraduate entrance examination English] prepare for 2023, learn list5 words](/img/6d/47b853e76d1757fb6e42c2ebba38af.jpg)

[postgraduate entrance examination English] prepare for 2023, learn list5 words

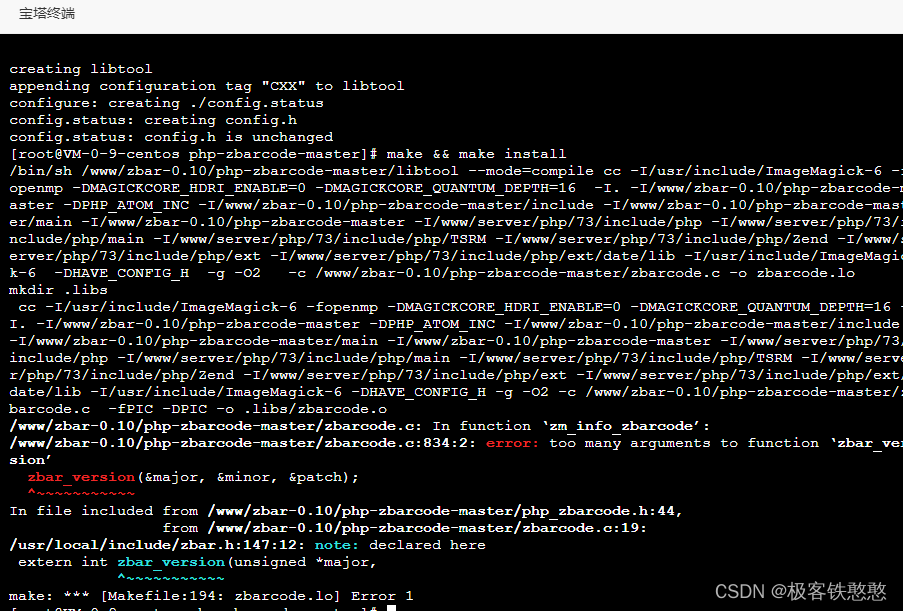

It's wrong to install PHP zbarcode extension. I don't know if any God can help me solve it. 7.3 for PHP environment

![[depth first search] Ji Suan Ke: Betsy's trip](/img/b5/f24eb28cf5fa4dcfe9af14e7187a88.jpg)

[depth first search] Ji Suan Ke: Betsy's trip

![[solution] add multiple directories in different parts of the same word document](/img/22/32e43493ed3b0b42e35ceb9ab5b597.jpg)

[solution] add multiple directories in different parts of the same word document

A basic lintcode MySQL database problem

Computer graduation design PHP enterprise staff training management system

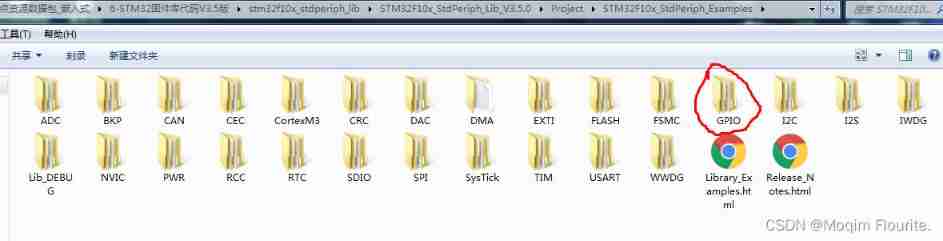

Blue Bridge Cup embedded_ STM32 learning_ Key_ Explain in detail

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

随机推荐

模板_快速排序_双指针

Exness: Mercedes Benz's profits exceed expectations, and it is predicted that there will be a supply chain shortage in 2022

Get the relevant information of ID card through PHP, get the zodiac, get the constellation, get the age, and get the gender

Black high-end responsive website dream weaving template (adaptive mobile terminal)

Spark accumulator

Online reservation system of sports venues based on PHP

How to check the lock information in gbase 8C database?

VIM usage guide

我把驱动换成了5.1.35,但是还是一样的错误,我现在是能连成功,但是我每做一次sql操作都会报这个

[Clickhouse] Clickhouse based massive data interactive OLAP analysis scenario practice

Multiple solutions to one problem, asp Net core application startup initialization n schemes [Part 1]

Jisuanke - t2063_ Missile interception

Building the prototype of library functions -- refer to the manual of wildfire

729. My schedule I / offer II 106 Bipartite graph

How to use C to copy files on UNIX- How can I copy a file on Unix using C?

Initial understanding of pointer variables

[depth first search notes] Abstract DFS

Have a look at this generation

Computer graduation design PHP part-time recruitment management system for College Students

模板_求排列逆序对_基于归并排序