
Machine learning notes week02 convolutional neural network

2022-07-06 11:20:00 【Octopus loving monster】

Convolutional neural networks

List of articles

- Convolutional neural networks

- Deep learning trilogy

- Convolutional neural networks

- Typical structure of convolutional neural network

- Manual implementation of a simple ResNet network

- MNIST Data set classification

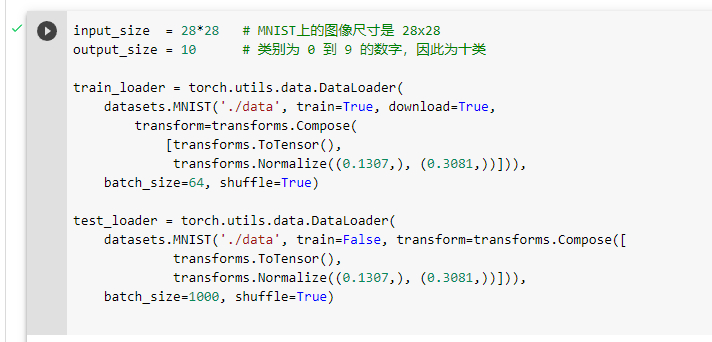

- Load the MNIST dataset

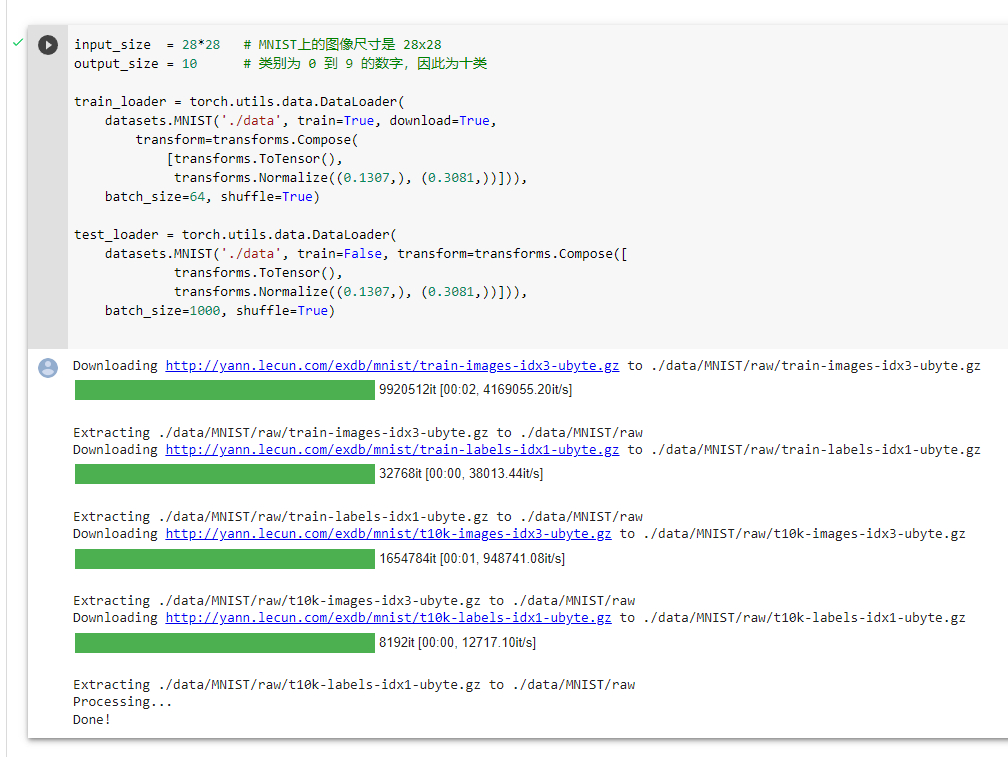

- Visualize the loaded data with matplotlib

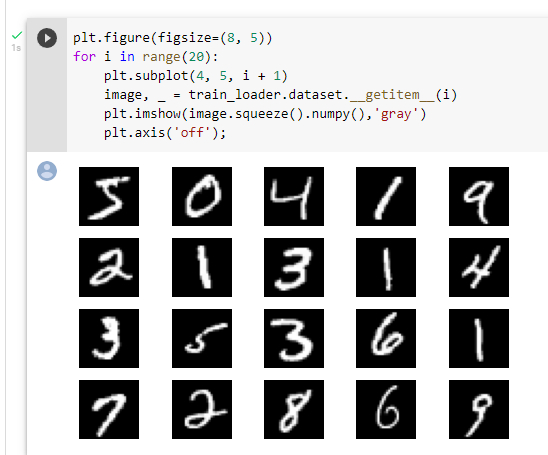

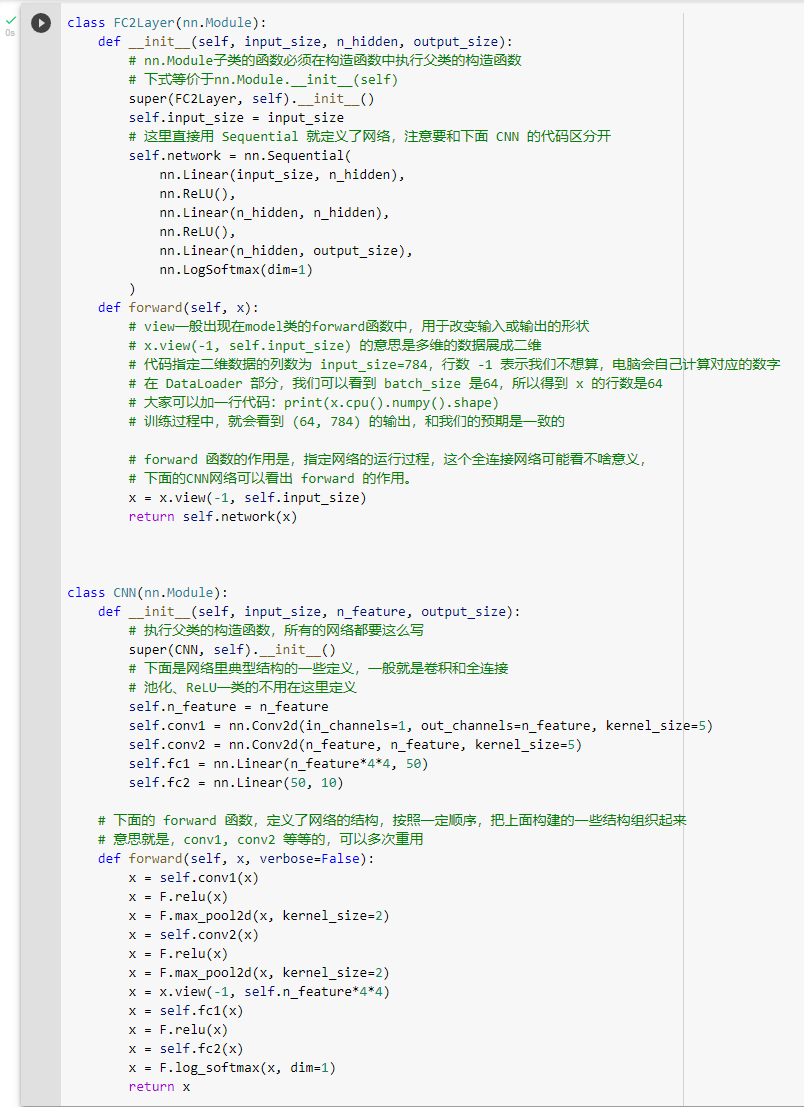

- Create a fully connected network and a convolutional neural network

- Define training and test functions

- Train with a fully connected network

- Train with a convolutional neural network

- Shuffle the pixels of the training images

- Define training and test functions for the shuffled data

- Observe the training results after shuffling

- CIFAR10 Data set classification

Deep learning trilogy

- Step1: Build the neural network structure

- Step2: Find a suitable loss function

- Step3: Find a suitable optimization function and update the parameters

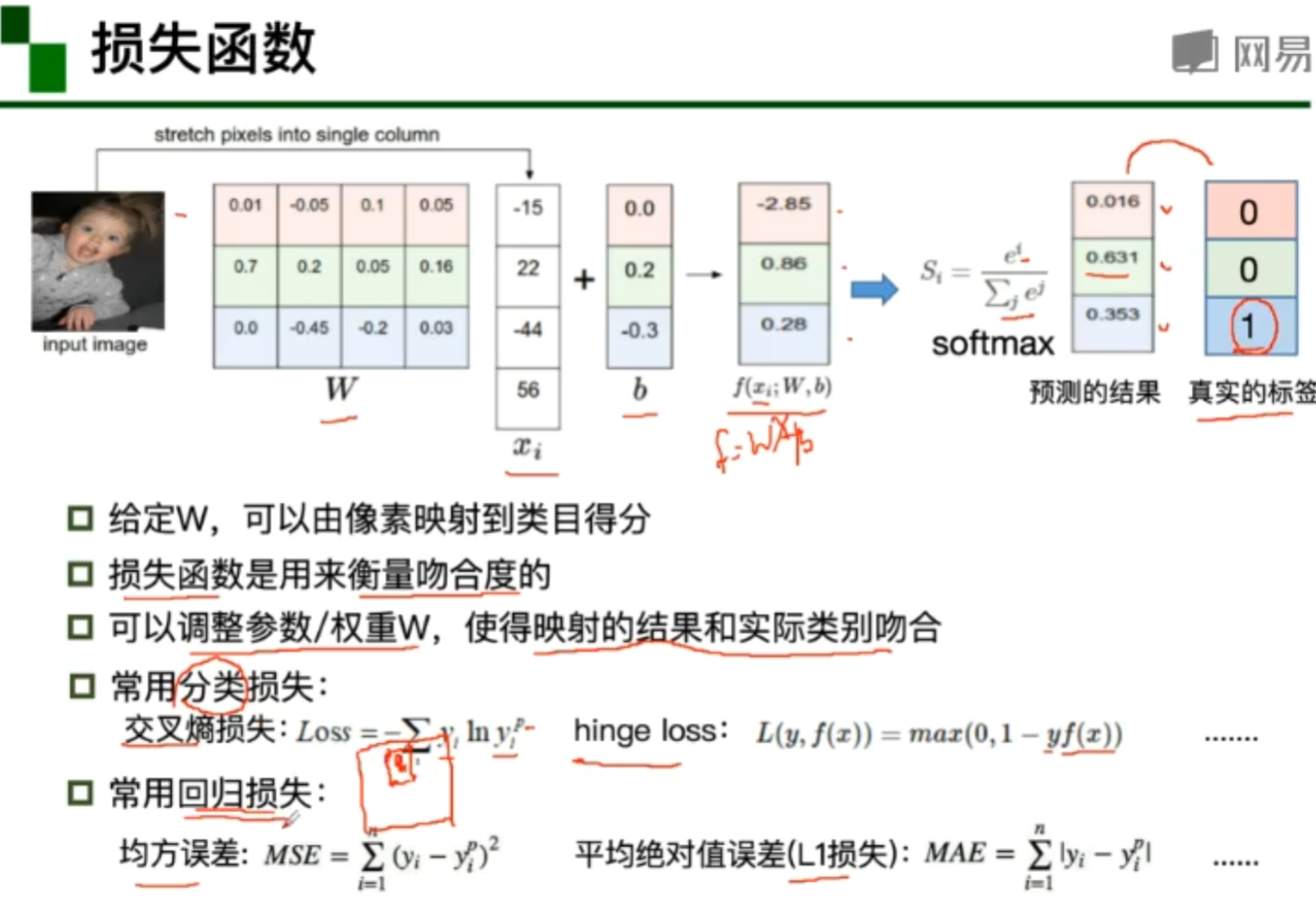

Loss function

The neural network model produces predictions. The loss function then measures how well those predictions agree with the true results.
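
As a minimal sketch of what a loss function computes, the snippet below implements cross-entropy for a single sample in plain Python. The notes do not name a specific loss, so cross-entropy (a common choice for classification) and the example scores are assumptions made for illustration.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Negative log-probability that the model assigns to the true class."""
    return -math.log(softmax(logits)[target])

# The true class is index 0 in both cases (hypothetical scores).
good = cross_entropy([4.0, 0.5, 0.1], target=0)  # confident and correct
bad = cross_entropy([0.1, 0.5, 4.0], target=0)   # confident and wrong
print(good, bad)
```

The loss is small when the prediction agrees with the label and large when it does not, which is the signal the optimizer uses in Step3.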

Processing images with a fully connected network

Because every neuron is connected to every pixel of the image, a fully connected network has too many parameters. This leads to overfitting: some characteristics of the training set are overemphasized, which limits how well the model works on new data.

Convolutional neural networks

Local correlation

Parameter sharing

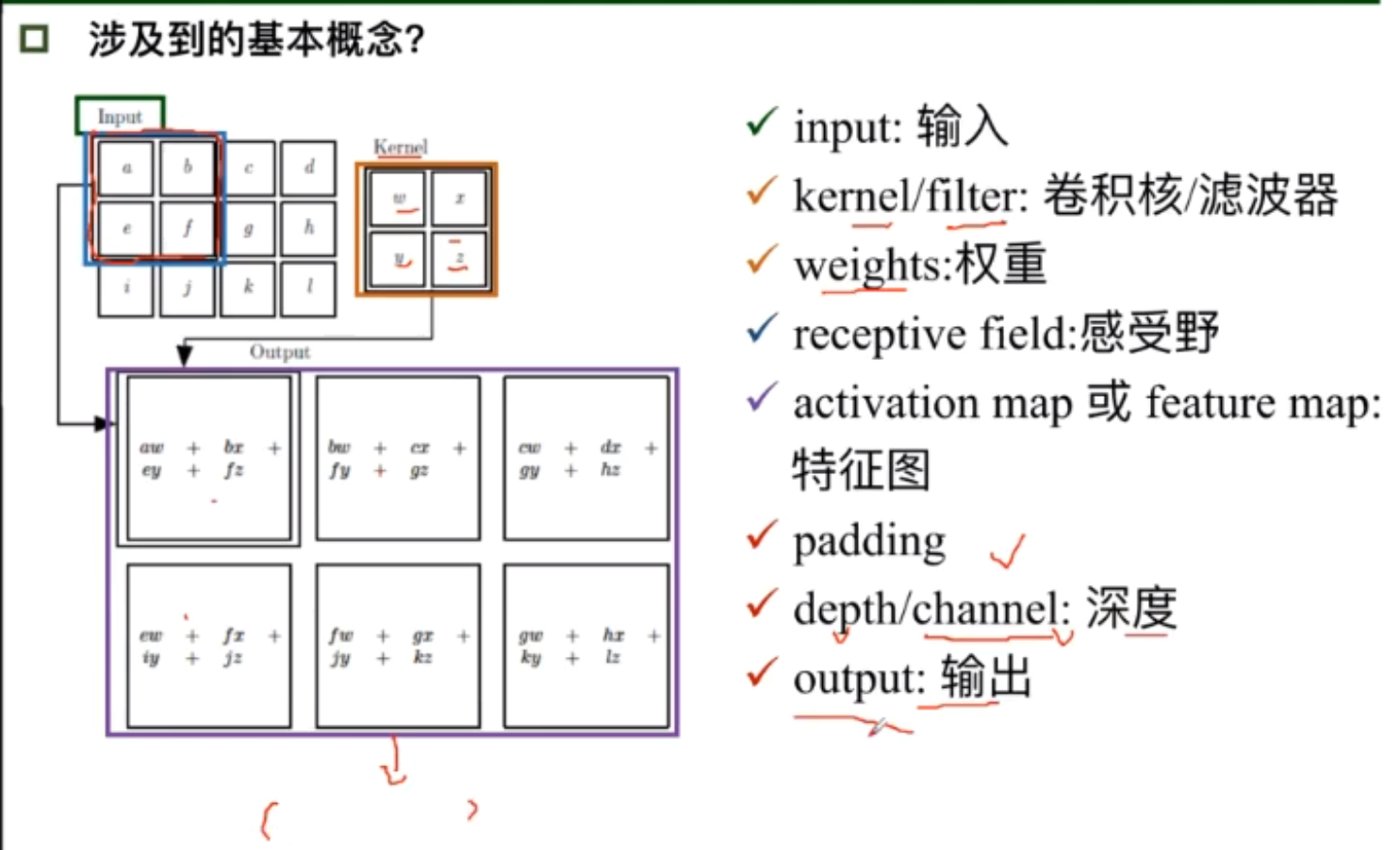

Each neuron is connected only to a local region of the image, and the convolution kernel is applied to that region.
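
To make the effect of local connections and parameter sharing concrete, here is a rough parameter count. The sizes are assumptions chosen for illustration (a 28x28 single-channel image, 100 hidden units or 100 filters, 3x3 kernels); they do not come from the notes.

```python
def fc_params(in_features, out_features):
    """Fully connected layer: one weight per (input, output) pair plus one bias per output."""
    return in_features * out_features + out_features

def conv_params(in_channels, out_channels, kernel_size):
    """Convolution layer: each filter shares one small kernel across all image positions."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels

fc = fc_params(28 * 28, 100)   # every unit sees every pixel
conv = conv_params(1, 100, 3)  # every filter sees a 3x3 patch
print(fc, conv)  # 78500 1000
```

Under these assumptions the convolution layer needs far fewer parameters, because its weights do not grow with the image size.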

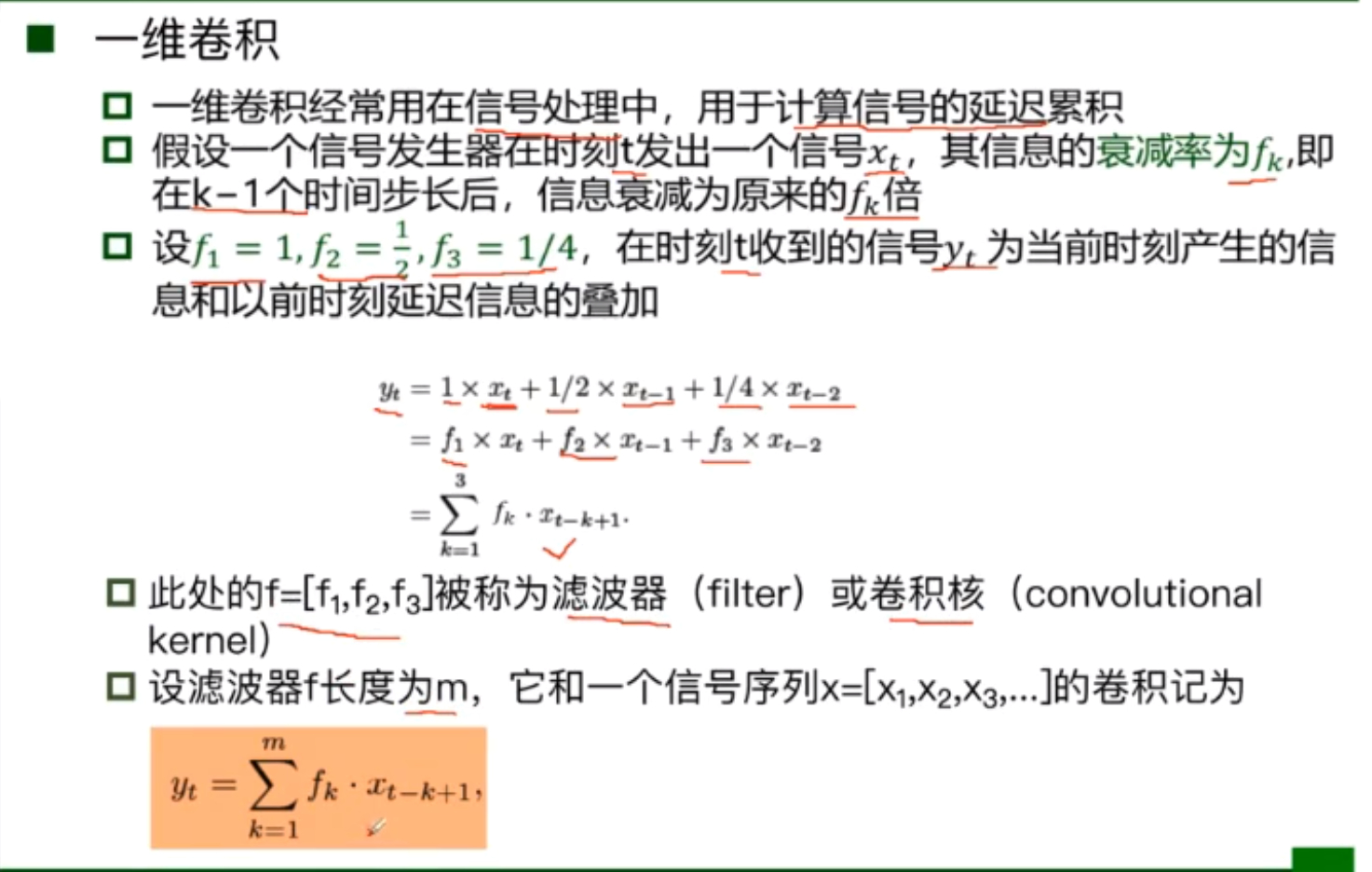

Convolution

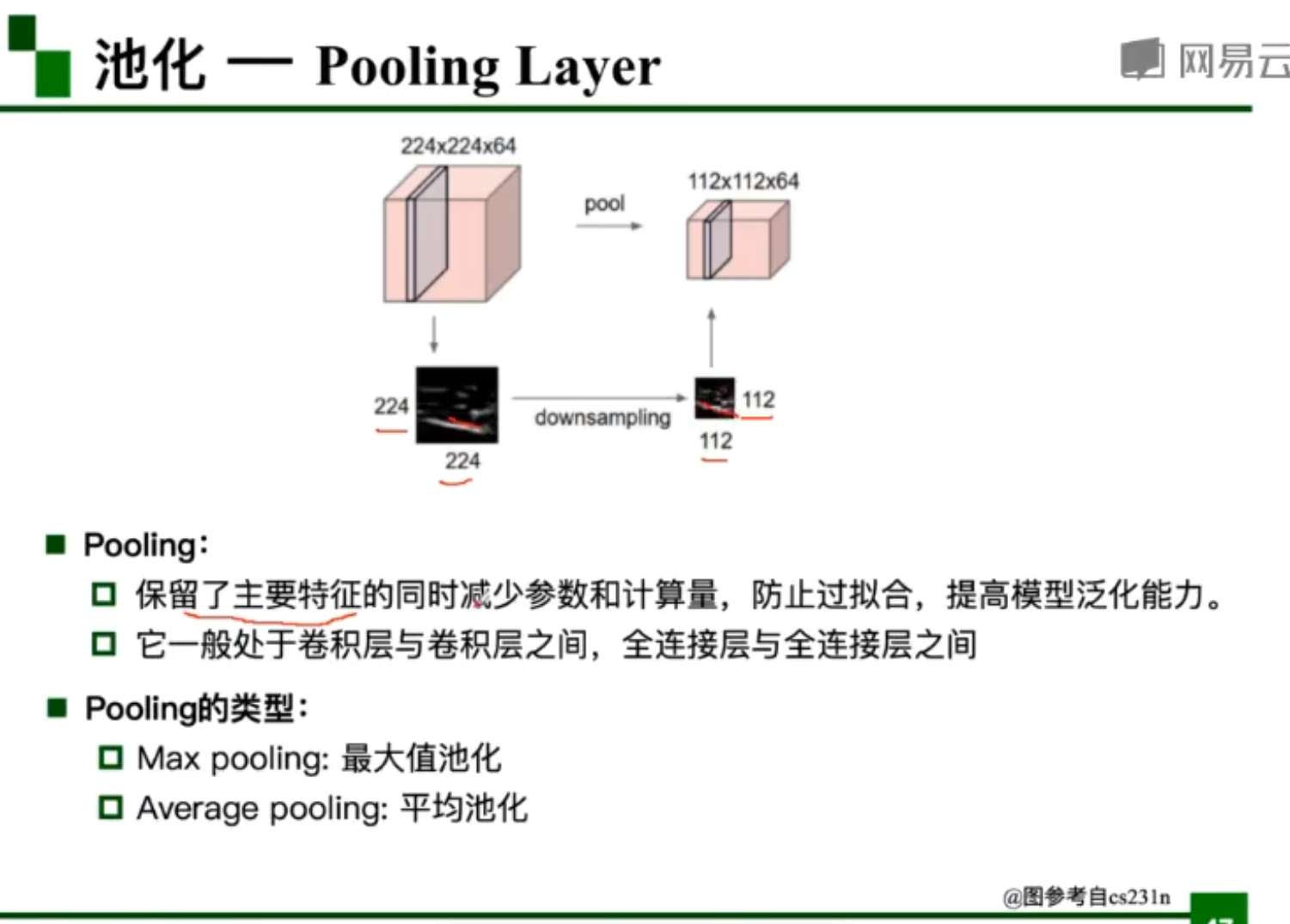

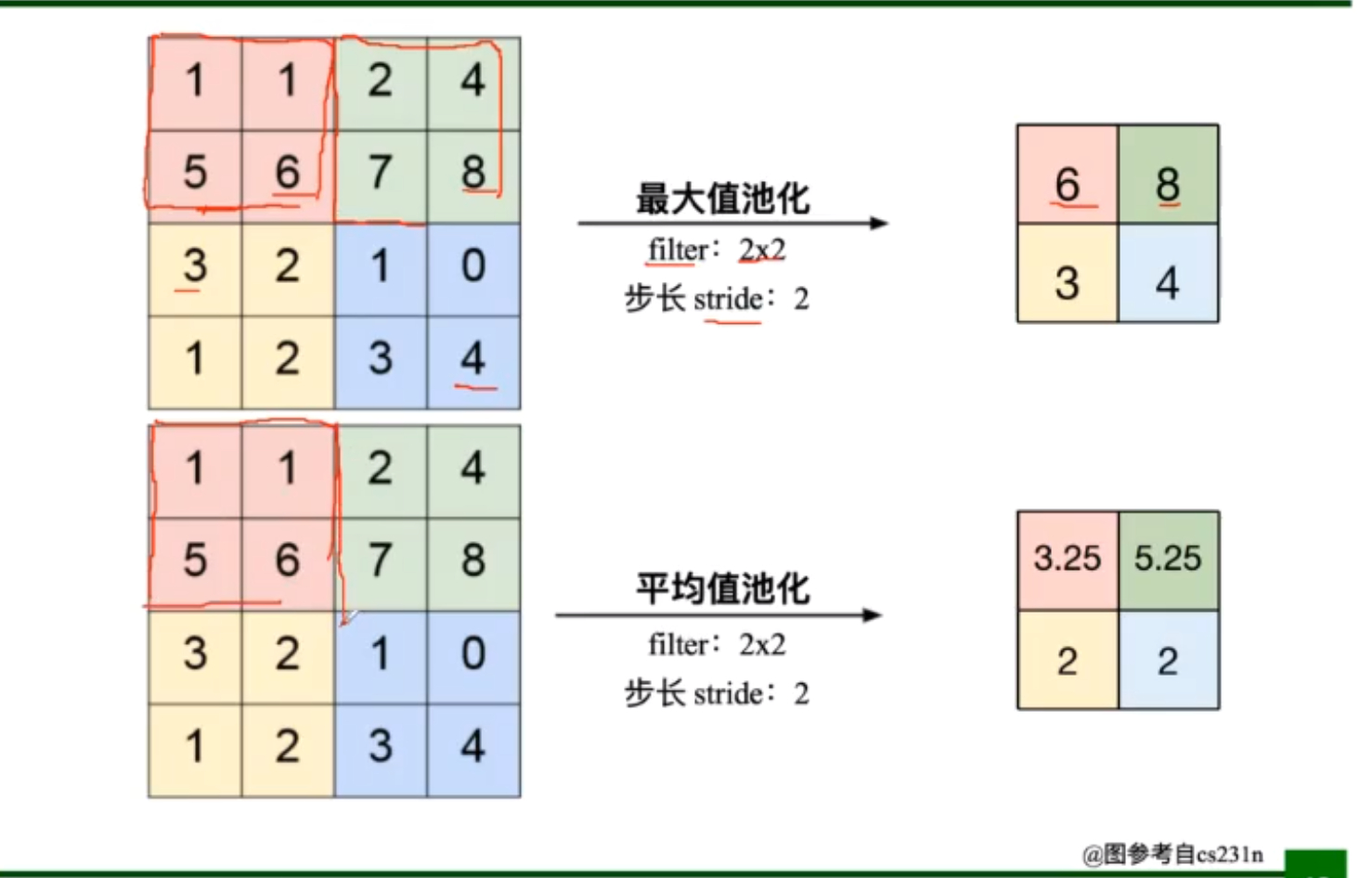

Pooling

Pooling reduces the number of parameters and the amount of computation. A pooling layer is a fixed mathematical operation and has no parameters of its own.

In classification and recognition tasks, max pooling is usually preferred.
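
The following sketch shows max pooling on a small feature map in plain Python. The 2x2 window, stride 2, and the input values are assumptions for illustration.

```python
def max_pool2d(image, size=2, stride=2):
    """Slide a size x size window over the image and keep the largest value in each window."""
    rows = (len(image) - size) // stride + 1
    cols = (len(image[0]) - size) // stride + 1
    return [
        [
            max(image[r * stride + i][c * stride + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 2], [2, 9]]
```

The 4x4 input shrinks to 2x2, and the function has no weights to train, which matches the description above.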

Fully connected layer


- A typical convolutional network is built by alternately stacking convolution layers, pooling layers, and fully connected layers

- Convolution is a mathematical operation on two functions of real variables

- Convolution has two key properties: local correlation and parameter sharing

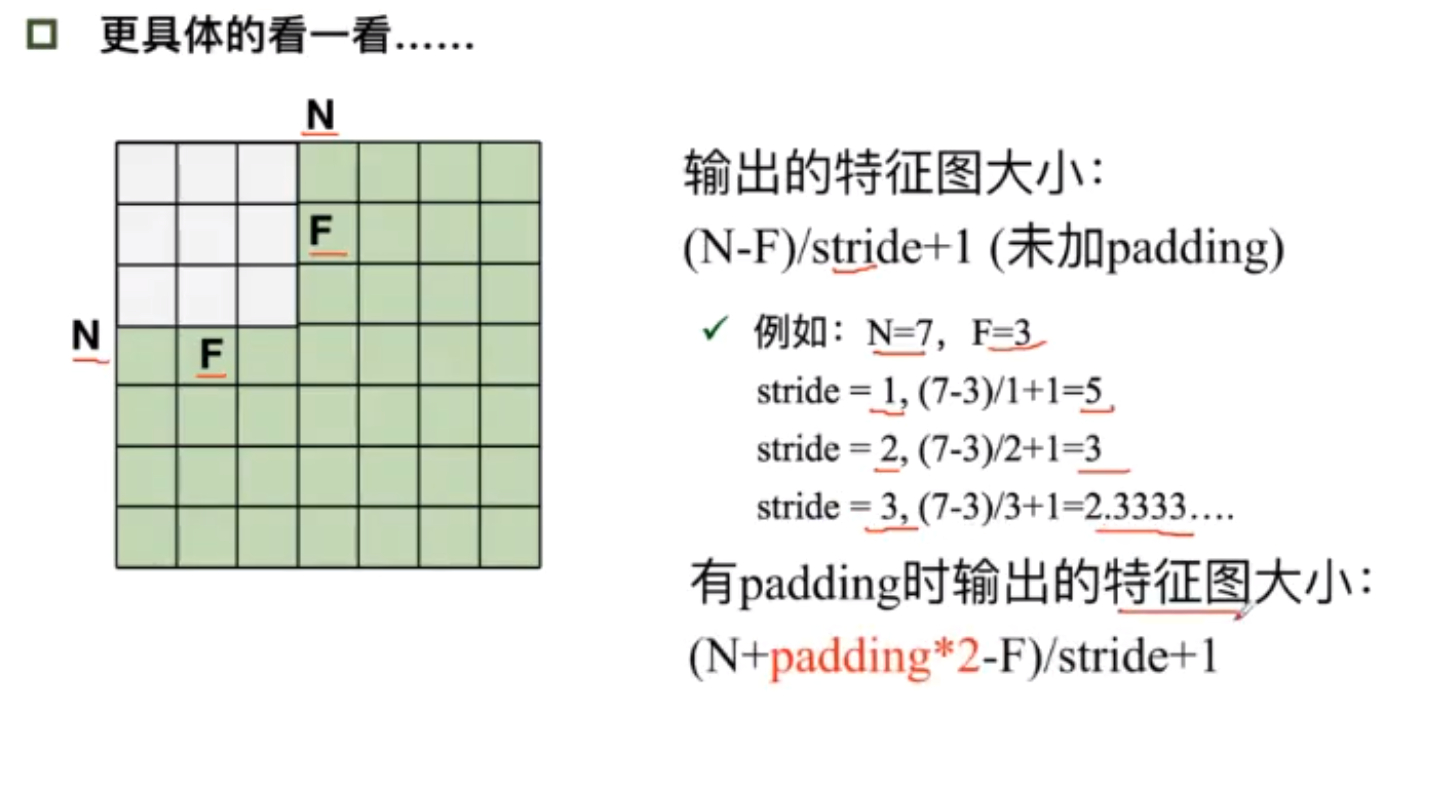

- Output feature-map size without padding: $(N - F)/\text{stride} + 1$, that is, input size minus kernel size, divided by the stride, plus 1

- With padding: $(N + 2 \times \text{padding} - F)/\text{stride} + 1$

- Types of pooling: max pooling and average pooling

- Fully connected layers: usually placed at the end of a convolutional neural network
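
The output-size formulas above can be checked with a naive convolution written in plain Python. This is a sketch under stated assumptions: square inputs and kernels, zero padding, integer division when the stride does not divide evenly, and no kernel flip (the cross-correlation convention used by deep learning frameworks). The function names are hypothetical.

```python
def conv_output_size(n, f, stride=1, padding=0):
    """(N + 2 * padding - F) / stride + 1, using integer division."""
    return (n + 2 * padding - f) // stride + 1

def conv2d(image, kernel, stride=1, padding=0):
    """Naive 2D convolution of a square image with a square kernel."""
    n, f = len(image), len(kernel)
    size = n + 2 * padding
    # Surround the image with a border of zeros.
    padded = [[0] * size for _ in range(size)]
    for r in range(n):
        for c in range(n):
            padded[r + padding][c + padding] = image[r][c]
    out = conv_output_size(n, f, stride, padding)
    return [
        [
            sum(padded[r * stride + i][c * stride + j] * kernel[i][j]
                for i in range(f) for j in range(f))
            for c in range(out)
        ]
        for r in range(out)
    ]

image = [[1] * 5 for _ in range(5)]   # 5x5 input, N = 5
kernel = [[1] * 3 for _ in range(3)]  # 3x3 kernel, F = 3
no_pad = conv2d(image, kernel)
same = conv2d(image, kernel, padding=1)
strided = conv2d(image, kernel, stride=2)
print(len(no_pad), len(same), len(strided))  # 3 5 2
```

With padding 1 the 3x3 kernel preserves the 5x5 size, which is why the `conv3x3` helper later in these notes defaults to `padding=1`.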

Typical structure of convolutional neural network

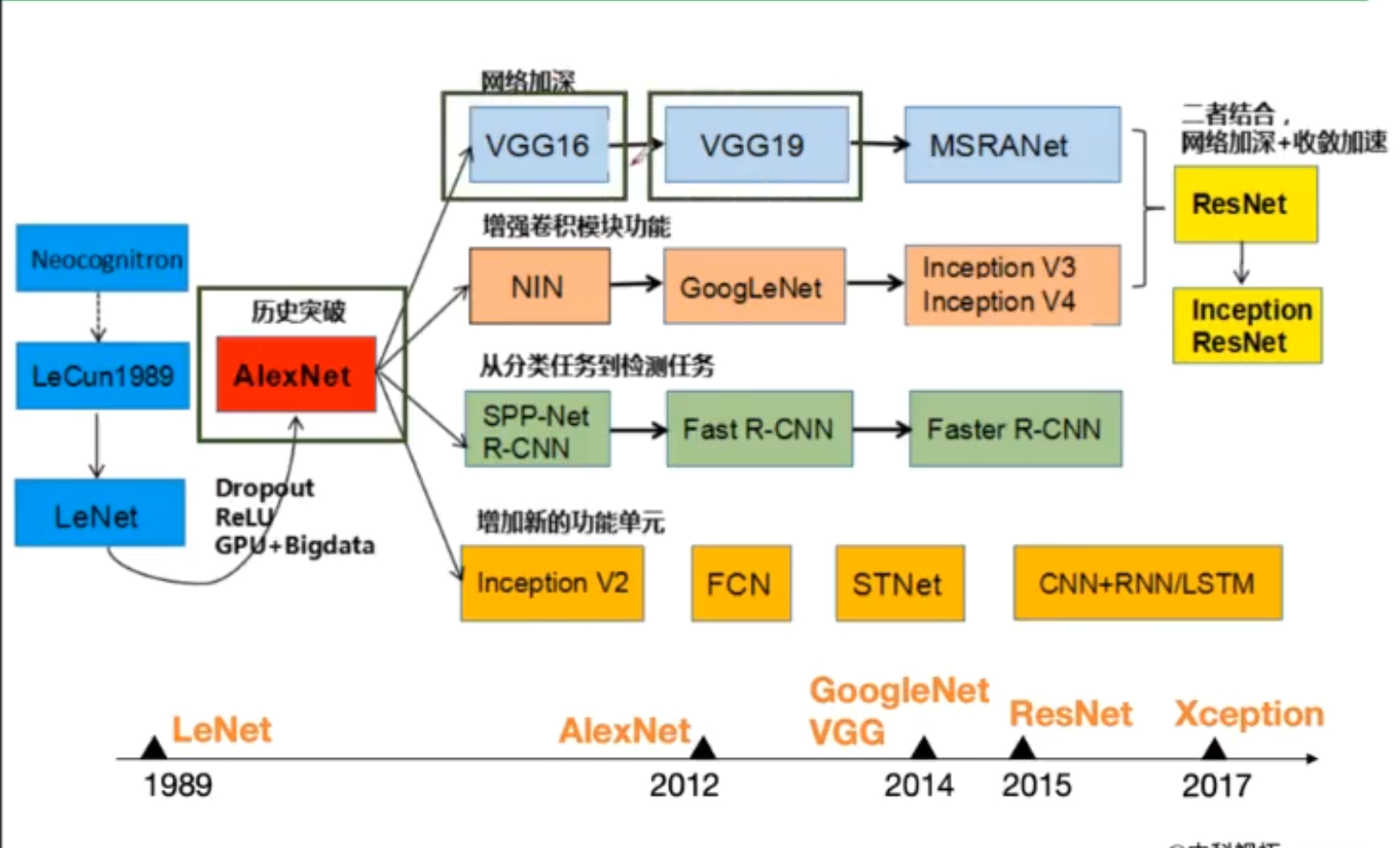

AlexNet

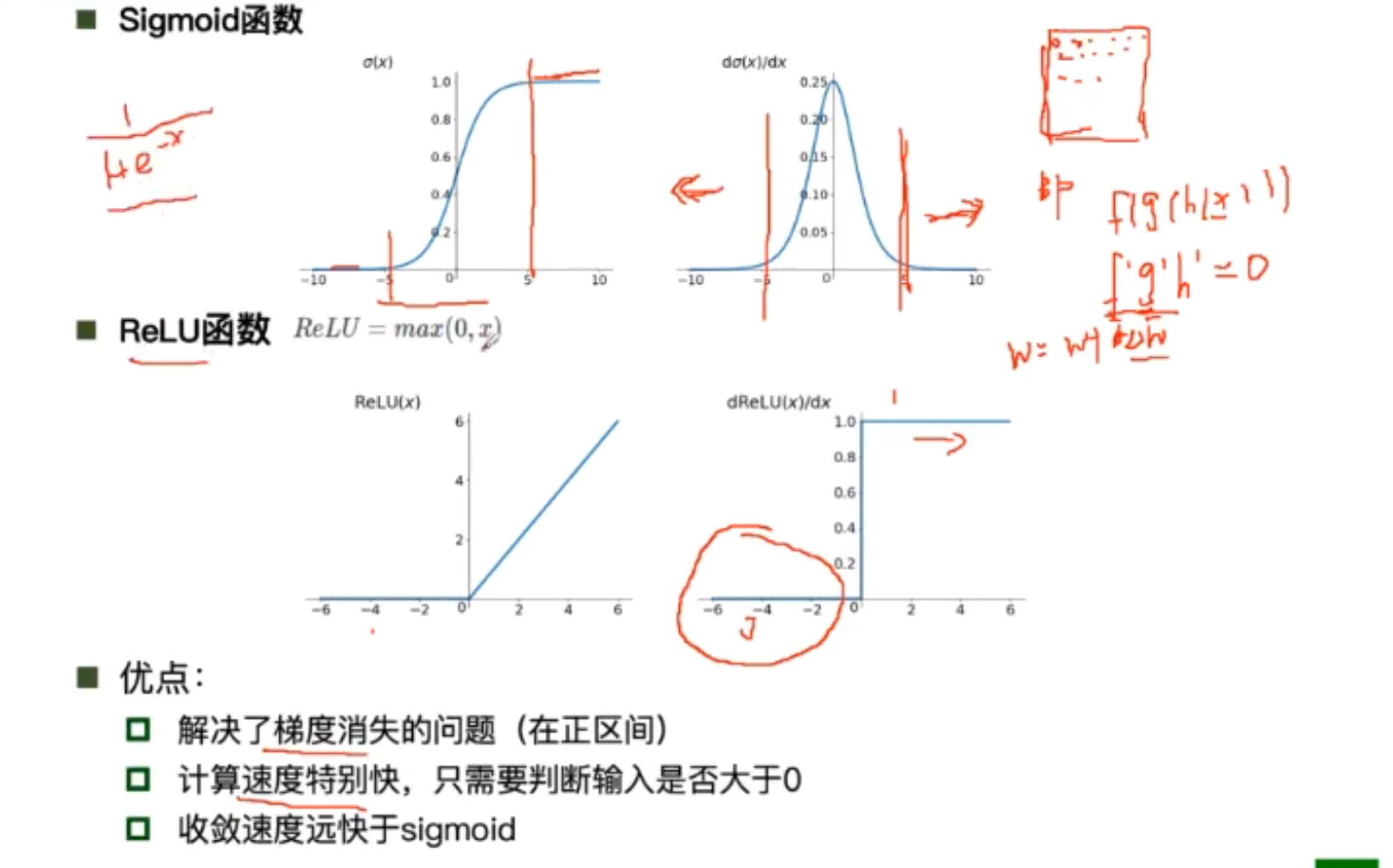

AlexNet uses ReLU as its activation function, which alleviates the vanishing-gradient problem that arises when parameters are tuned by backpropagation (BP). Because ReLU has a simple form and a large gradient, the network converges faster and training feedback arrives sooner.
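
A small numeric sketch of why the gradient matters: during backpropagation the activation derivatives of successive layers are multiplied together. The comparison with sigmoid, the depth of 10, and the decision to ignore the weights are assumptions made to keep the illustration simple.

```python
import math

def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum value is 0.25, reached at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0

depth = 10
# Best case for sigmoid: every layer sits at x = 0, where the derivative is largest.
sigmoid_chain = sigmoid_grad(0.0) ** depth
# ReLU with positive pre-activations passes the gradient through unchanged.
relu_chain = relu_grad(1.0) ** depth
print(sigmoid_chain, relu_chain)
```

Even in its best case the sigmoid product shrinks by a factor of 4 per layer, while the ReLU product stays at 1 for active units.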

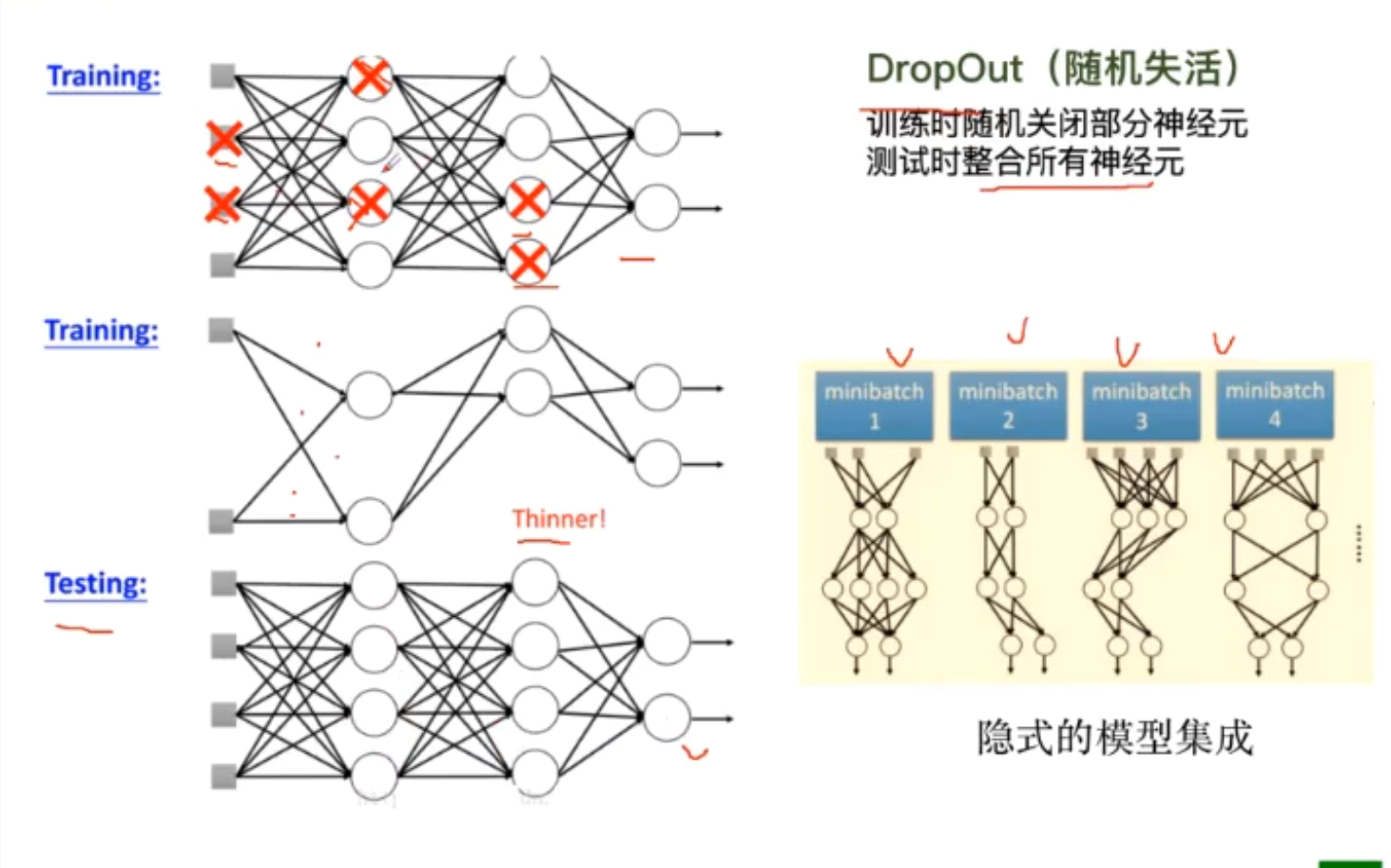

Use Dropout to avoid overfitting
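
A minimal sketch of dropout in plain Python, assuming the "inverted dropout" variant in which surviving activations are scaled by 1 / (1 - p) during training so that nothing needs to change at test time. The function name and the drop probability are illustrative choices, and p is assumed to be smaller than 1.

```python
import random

def dropout(activations, p=0.5, training=True, rng=random):
    """Zero each activation with probability p during training and rescale the survivors."""
    if not training or p == 0.0:
        return list(activations)  # dropout is switched off at test time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the example is repeatable
train_out = dropout([1.0] * 10000, p=0.5, rng=rng)
test_out = dropout([1.0, 2.0, 3.0], training=False)
print(sum(train_out) / len(train_out), test_out)
```

Because a different random subset of units is dropped on every step, the network cannot rely on any single unit, which is the mechanism that reduces overfitting.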

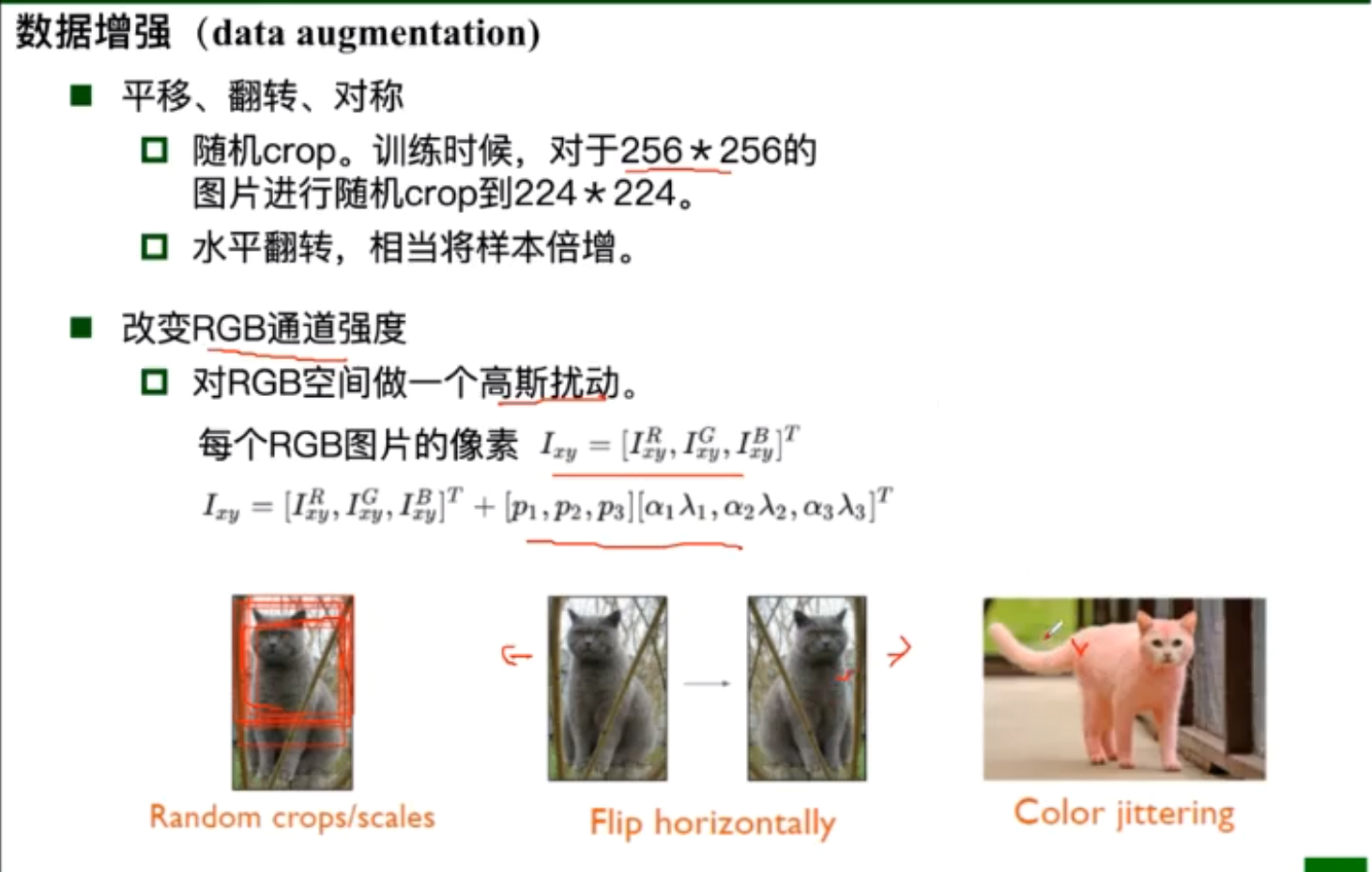

Add data to the training set

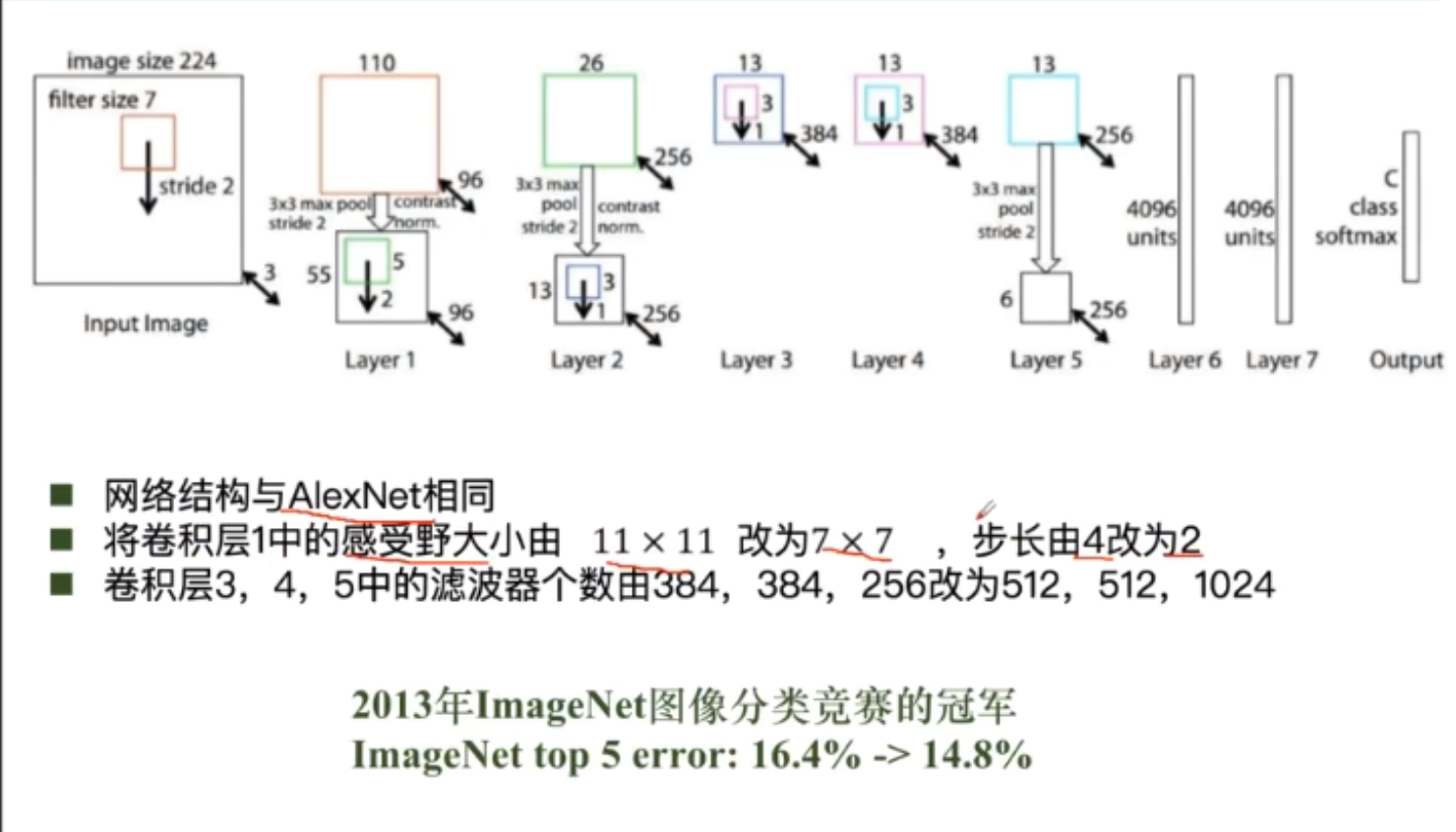

ZFNet

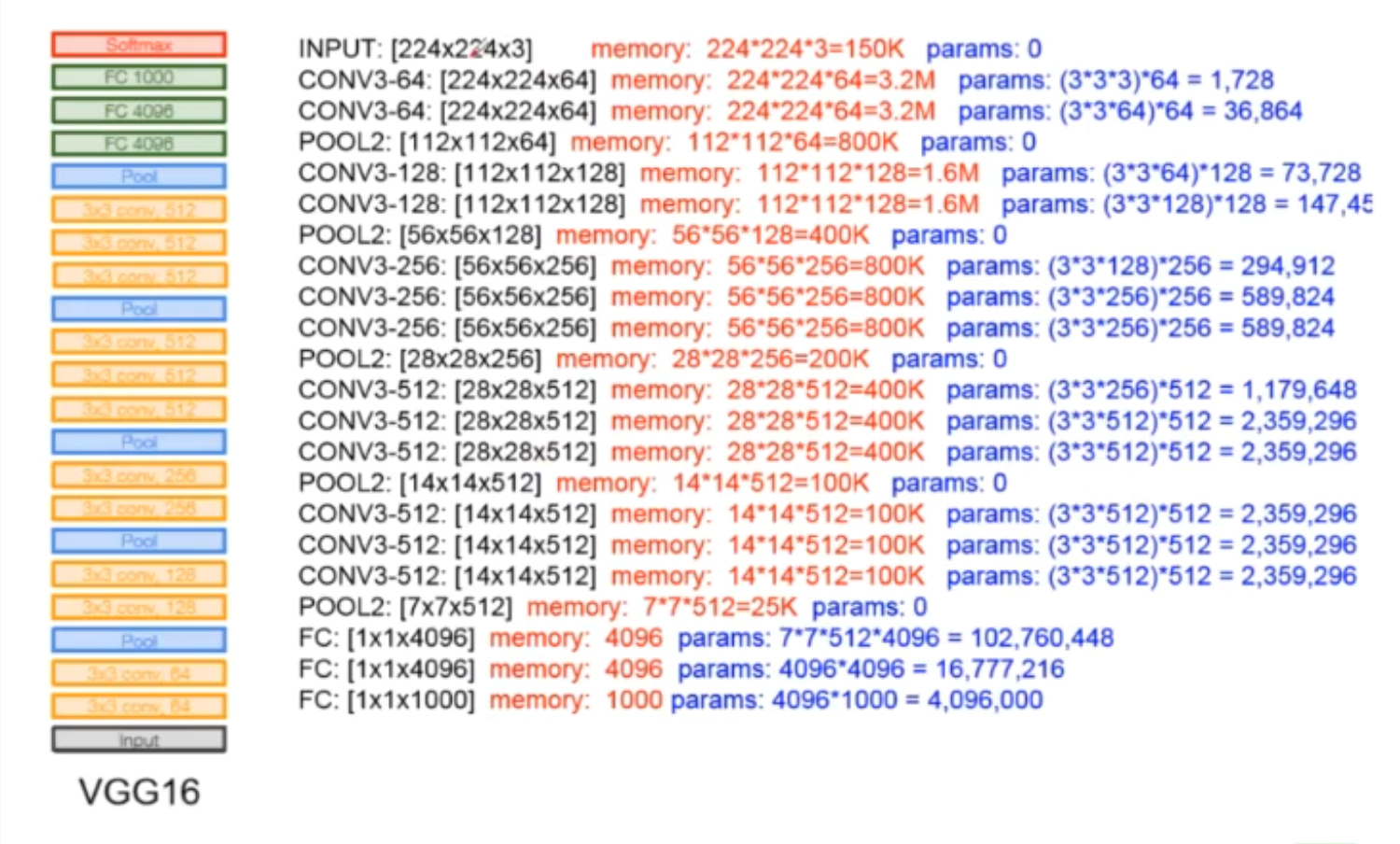

VGG

VGG is a deeper network with a large number of parameters.

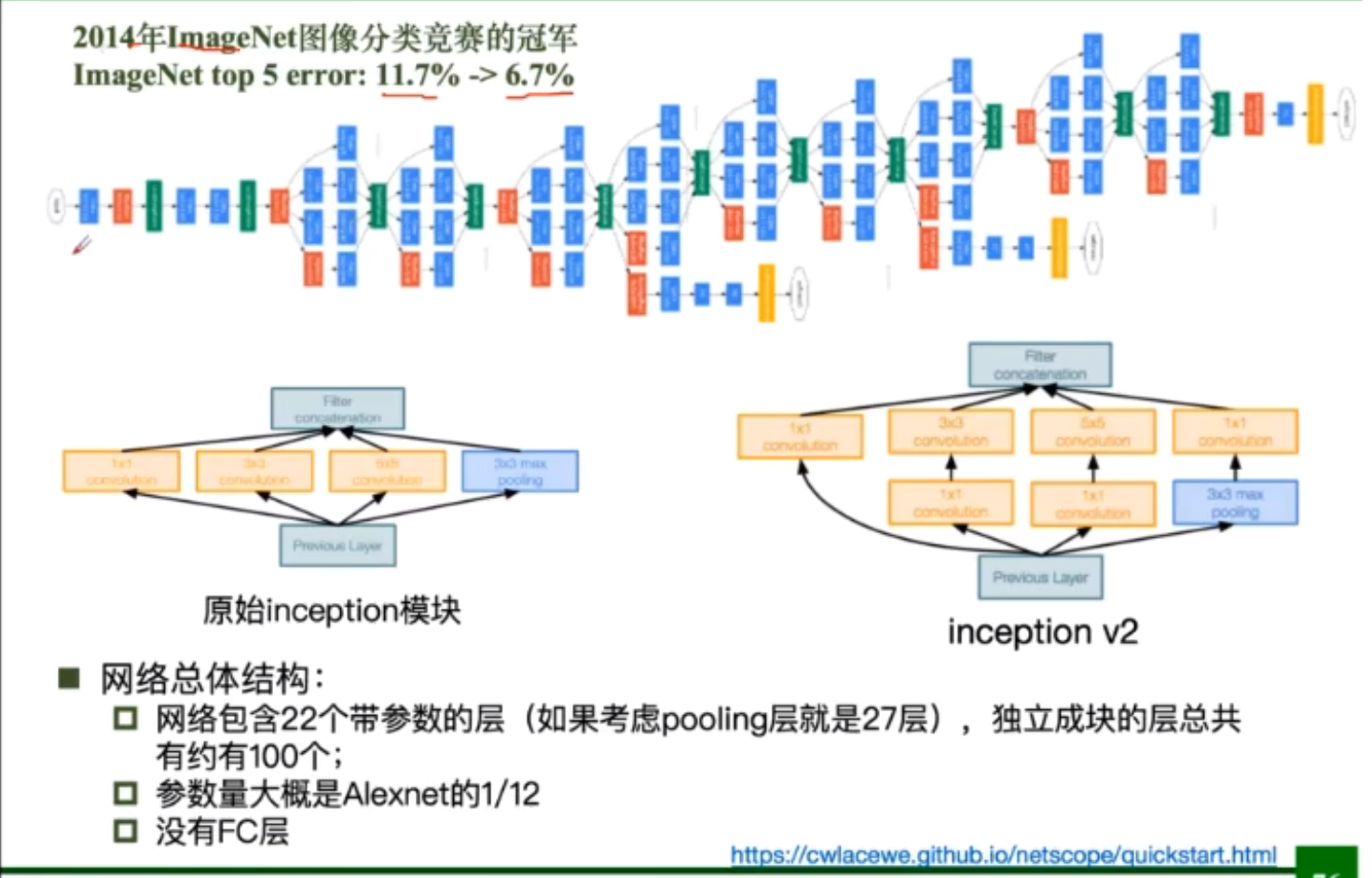

GoogLeNet

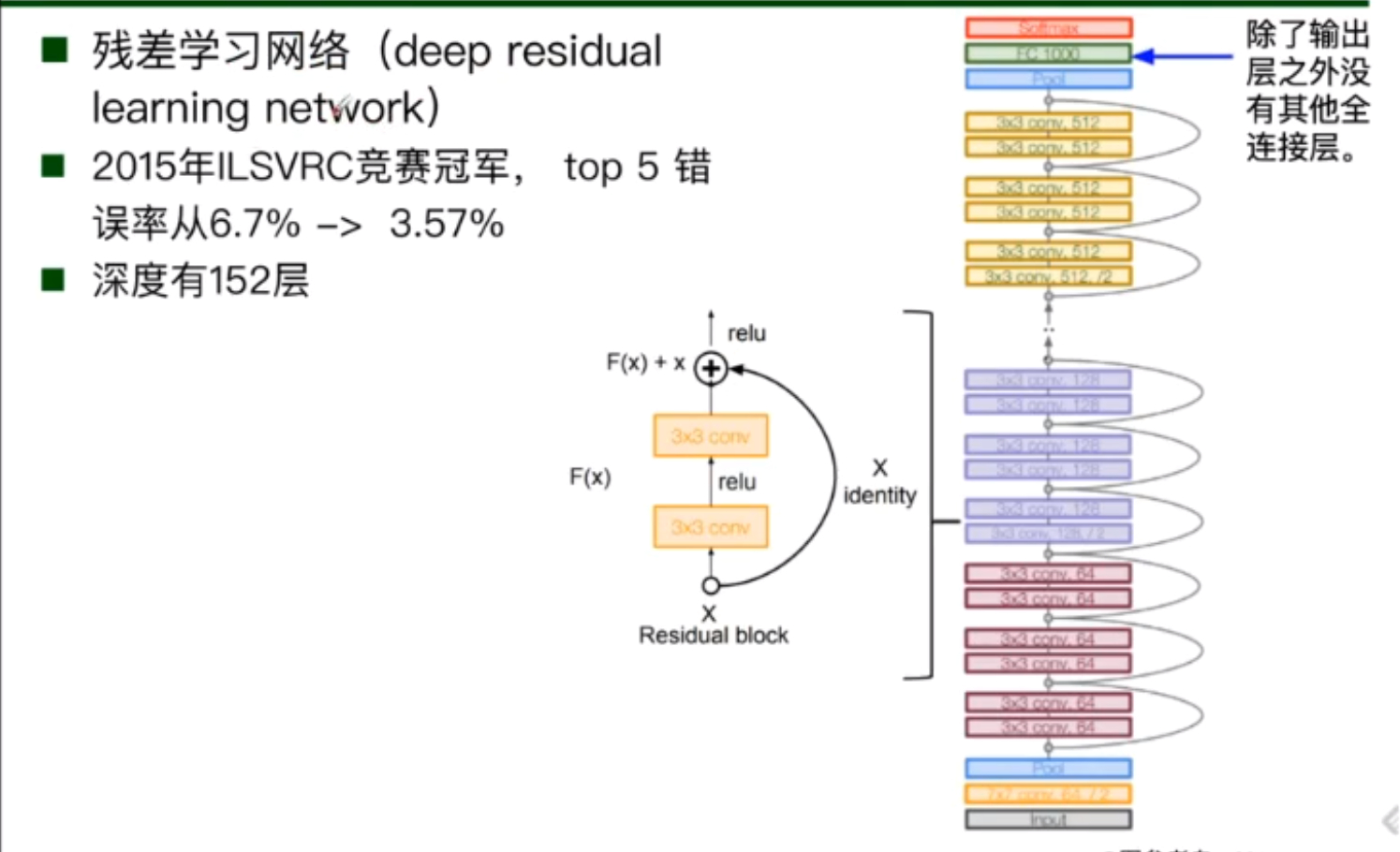

ResNet

The key idea of ResNet is residual learning. As the number of layers grows, a network becomes prone to degradation during training.

Degradation makes it hard for the network to fit the function we want. With residual learning, the function we want to fit is transformed into a form that is easier to learn: the layers learn a residual, and that residual is added on top of the existing result.
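
The idea can be written as H(x) = F(x) + x, where F is the residual that the layers learn. The sketch below uses plain Python lists in place of tensors, and the function names are hypothetical.

```python
def residual_block(x, residual_fn):
    """Return F(x) + x: the learned residual added to the unchanged input."""
    return [xi + fi for xi, fi in zip(x, residual_fn(x))]

def zero_residual(x):
    """A residual branch that has learned nothing yet."""
    return [0.0] * len(x)

def small_correction(x):
    """A residual branch that nudges every value slightly."""
    return [0.1 * xi for xi in x]

x = [1.0, -2.0, 3.0]
print(residual_block(x, zero_residual))     # identical to x
print(residual_block(x, small_correction))  # x plus a small change
```

When the residual branch outputs zero the block is an identity mapping, so extra layers can at worst leave the result unchanged. That is the intuition for why this form is easier to learn in a very deep network.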

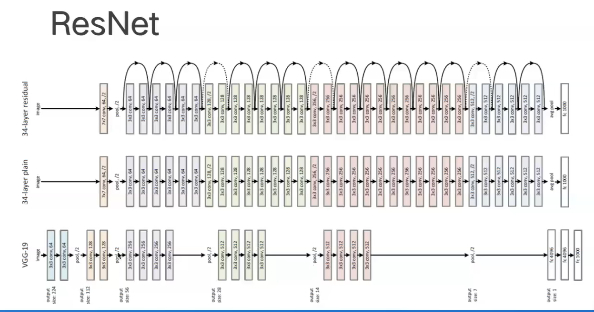

As the figure shows, ResNet is a very deep network model.

Because the network is so deep, it is prone to degradation, which makes training less effective. Following ResNet's residual idea, adding an identity-mapping shortcut across every two convolution layers greatly improves the model.

ResNets of different depths differ somewhat in structure.

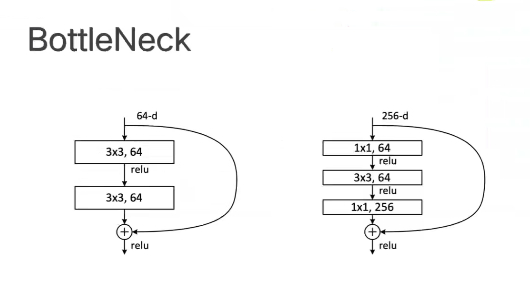

ResNets with 50 or more layers use the BottleNeck structure.

In these deeper networks the channel dimension is first reduced and then increased again. A deep model would otherwise need a very large number of parameters, and the BottleNeck structure reduces the number of parameters that have to be handled.
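
A quick count shows how much the reduce-then-increase design saves. The channel sizes (256 in and out, reduced to 64 inside the block) are assumptions that match an expansion factor of 4; biases and normalization parameters are ignored.

```python
def conv_weights(in_ch, out_ch, k):
    """Number of weights in a k x k convolution without bias."""
    return in_ch * out_ch * k * k

channels, reduced = 256, 64

# Two plain 3x3 convolutions at full width, as in a basic block.
basic = 2 * conv_weights(channels, channels, 3)

# Bottleneck: 1x1 to reduce, 3x3 at the reduced width, 1x1 to restore.
bottleneck = (
    conv_weights(channels, reduced, 1)
    + conv_weights(reduced, reduced, 3)
    + conv_weights(reduced, channels, 1)
)
print(basic, bottleneck)  # 1179648 69632
```

Under these assumptions the bottleneck uses roughly 17 times fewer weights than two full-width 3x3 convolutions.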

Manual implementation of a simple ResNet network

    import torch
    import torch.nn as nn
    from torch.hub import load_state_dict_from_url

    # Download URLs of the pretrained weights, keyed by architecture name
    model_urls = {
        "resnet18": "https://download.pytorch.org/models/resnet18-f37072fd.pth",
        "resnet34": "https://download.pytorch.org/models/resnet34-b627a593.pth",
        "resnet50": "https://download.pytorch.org/models/resnet50-0676ba61.pth",
        "resnet101": "https://download.pytorch.org/models/resnet101-63fe2227.pth",
        "resnet152": "https://download.pytorch.org/models/resnet152-394f9c45.pth",
        "resnext50_32x4d": "https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth",
        "resnext101_32x8d": "https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth",
        "wide_resnet50_2": "https://download.pytorch.org/models/wide_resnet50_2-95faca4d.pth",
        "wide_resnet101_2": "https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth",
    }

    def conv3x3(in_planes, out_planes, stride=1, padding=1):
        # 3x3 convolution; padding=1 keeps the spatial size when stride is 1
        return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride, padding=padding, bias=False)

    def conv1x1(in_planes, out_planes, stride=1):
        # 1x1 convolution, used to change the number of channels
        return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)

    class BasicBlock(nn.Module):
        expansion = 1  # output channels = planes * expansion

        def __init__(self, inplanes, planes, stride=1, downsample=None, norm_layer=None):
            super(BasicBlock, self).__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = norm_layer(planes)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = norm_layer(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # Match the shortcut to the output shape when stride or channels change
            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity  # residual connection
            out = self.relu(out)
            return out

    class BottleNeck(nn.Module):
        expansion = 4  # output channels = planes * expansion

        def __init__(self, inplanes, planes, stride=1, downsample=None, norm_layer=None):
            super(BottleNeck, self).__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            # 1x1 reduces the channels, 3x3 works at the reduced width, 1x1 restores them
            self.conv1 = conv1x1(inplanes, planes)
            self.bn1 = norm_layer(planes)
            self.conv2 = conv3x3(planes, planes, stride)
            self.bn2 = norm_layer(planes)
            self.conv3 = conv1x1(planes, planes * self.expansion)
            # bn3 is used in forward but was never defined in the original listing
            self.bn3 = norm_layer(planes * self.expansion)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity  # residual connection
            out = self.relu(out)
            return out

    class ResNet(nn.Module):
        def __init__(self, block, layers, num_class=1000, norm_layer=None):
            super(ResNet, self).__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            self.norm_layer = norm_layer  # read back by _make_layer
            self.inplanes = 64

            # Stem: 7x7 convolution followed by 3x3 max pooling
            self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
            self.bn1 = norm_layer(self.inplanes)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

            # Four stages; the channel width doubles at each stage
            self.layer1 = self._make_layer(block, 64, layers[0])
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(512 * block.expansion, num_class)

            # Weight initialization
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)

        def _make_layer(self, block, planes, blocks, stride=1):
            norm_layer = self.norm_layer
            downsample = None
            # The shortcut needs a 1x1 projection when the shape changes
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    conv1x1(self.inplanes, planes * block.expansion, stride),
                    norm_layer(planes * block.expansion),
                )

            layers = []
            layers.append(block(self.inplanes, planes, stride, downsample, norm_layer))
            self.inplanes = planes * block.expansion
            for _ in range(1, blocks):
                layers.append(block(self.inplanes, planes, norm_layer=norm_layer))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)
            return x

    def _resnet(arch, block, layers, pretrained, progress, **kwargs):
        model = ResNet(block, layers, **kwargs)
        if pretrained:
            # Download the pretrained weights and load them into the model
            state_dict = load_state_dict_from_url(model_urls[arch], progress=progress)
            model.load_state_dict(state_dict)
        return model

    def resnet152(pretrained=False, progress=True, **kwargs):
        return _resnet('resnet152', BottleNeck, [3, 8, 36, 3], pretrained, progress, **kwargs)

    model = resnet152(pretrained=True)
    model.eval()
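
The block counts `[3, 8, 36, 3]` passed to `resnet152` explain the name of the model. The sketch below counts the weighted layers in plain Python, assuming the usual convention: one stem convolution, the convolutions inside each block, and the final fully connected layer, with the 1x1 downsample projections left out. The helper name `resnet_depth` and the `[2, 2, 2, 2]` configuration for an 18-layer network are not from the listing above.

```python
def resnet_depth(blocks_per_stage, convs_per_block):
    """Stem convolution + convolutions inside all blocks + final fully connected layer."""
    return 1 + convs_per_block * sum(blocks_per_stage) + 1

# BottleNeck blocks contain 3 convolutions each; BasicBlock contains 2.
print(resnet_depth([3, 8, 36, 3], convs_per_block=3))  # 152
print(resnet_depth([2, 2, 2, 2], convs_per_block=2))   # 18
```

The same pattern suggests how another variant could be added: a new factory function would pass a different block class and block-count list to `_resnet`.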

MNIST dataset classification

Load the MNIST dataset

Visualize the loaded data with matplotlib

Create a fully connected network and a convolutional neural network

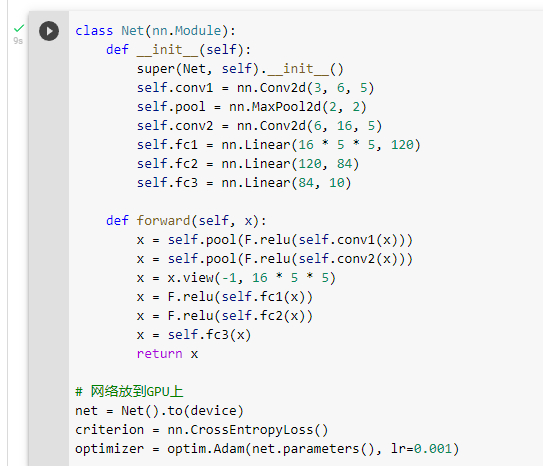

As the code shows, when we create a neural network we need to define each of its layers. The forward function is what runs when the network is called on an input, and inside forward we can apply pooling operations and activation functions.

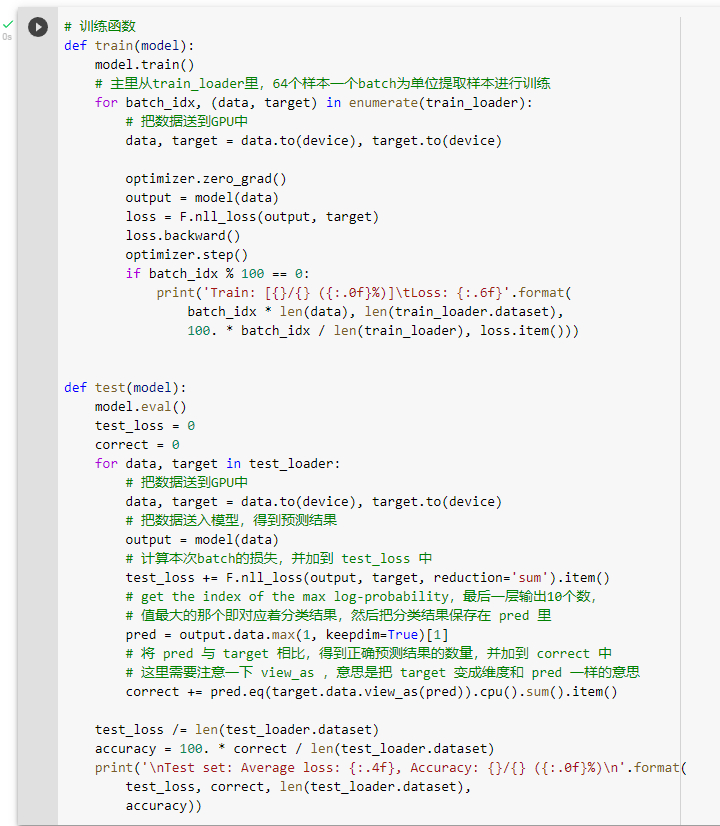

Define training and test functions

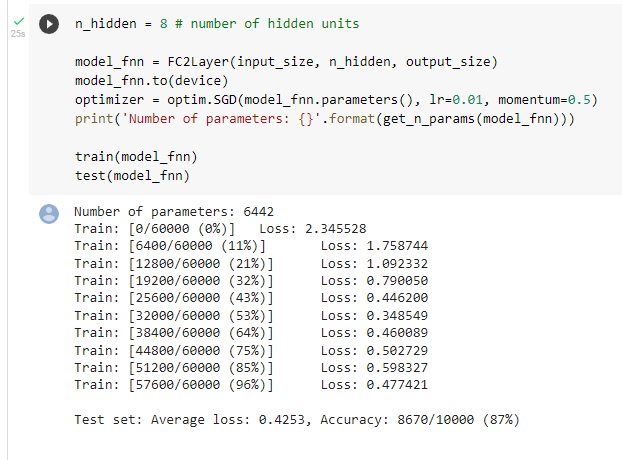

Train with a fully connected network

After training, we test the model: its accuracy is 87%.

Train with a convolutional neural network

The two models have the same number of parameters, but the convolutional neural network reaches a higher accuracy after training. This shows that convolution and pooling extract more accurate features.

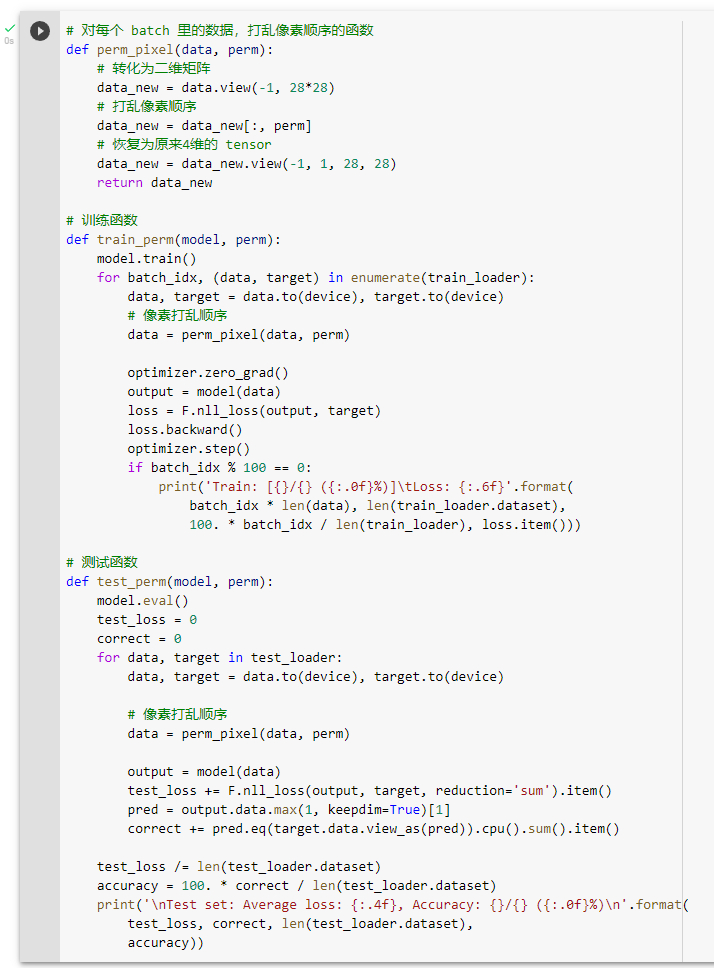

Shuffle the pixels of the training images

Define training and test functions for the shuffled data

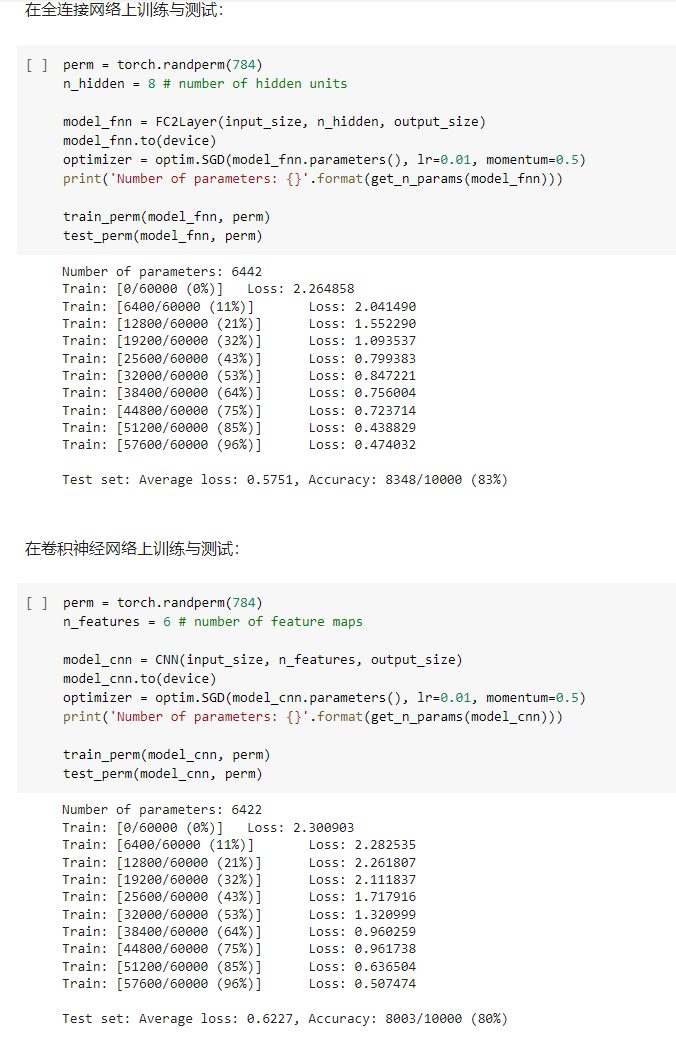

Observe the training results after shuffling

After the pixels are shuffled, the two models perform about the same, and the convolutional neural network performs clearly worse than before. Convolution and pooling exploit the relationships between neighboring pixels in an image, which lets them extract local features well and avoid overfitting. Once the pixel order is shuffled, those local relationships are weakened, so performance drops.
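
The shuffling step could look like the following plain-Python sketch. It assumes that one fixed permutation is applied to every image, in both training and testing, and that images are flattened 28x28 arrays as in MNIST. The function names and the seed are hypothetical.

```python
import random

def make_permutation(num_pixels, seed=0):
    """Build one fixed random ordering of pixel positions."""
    perm = list(range(num_pixels))
    random.Random(seed).shuffle(perm)
    return perm

def shuffle_pixels(flat_image, perm):
    """Reorder the pixels of a flattened image according to perm."""
    return [flat_image[i] for i in perm]

perm = make_permutation(28 * 28)
image = list(range(28 * 28))  # stand-in for a flattened 28x28 image
shuffled = shuffle_pixels(image, perm)
print(shuffled[:5])
```

Every pixel value is still present, only its position has changed. A fully connected network treats positions independently and loses nothing, while a convolution kernel now sees neighbors that were unrelated in the original image.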

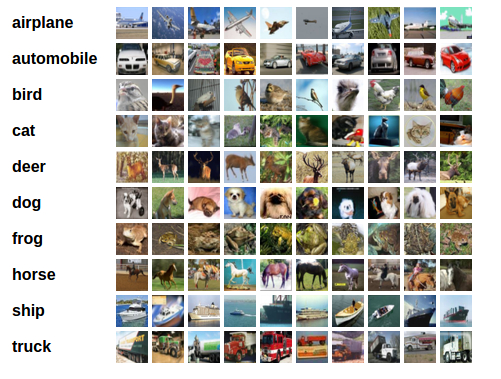

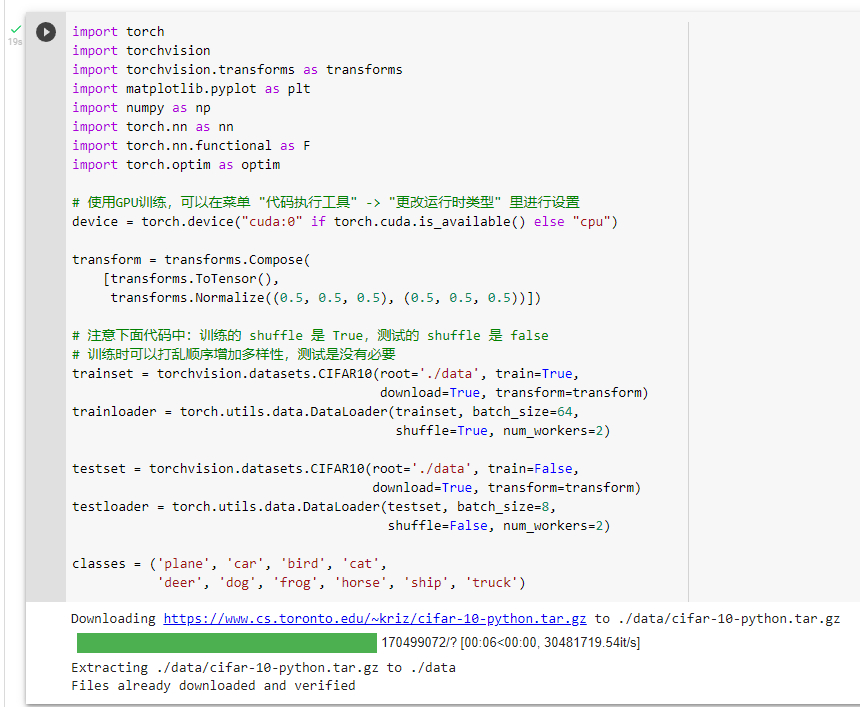

CIFAR10 dataset classification

The CIFAR10 dataset contains ten classes of images, each with three RGB color channels.

Load the training set

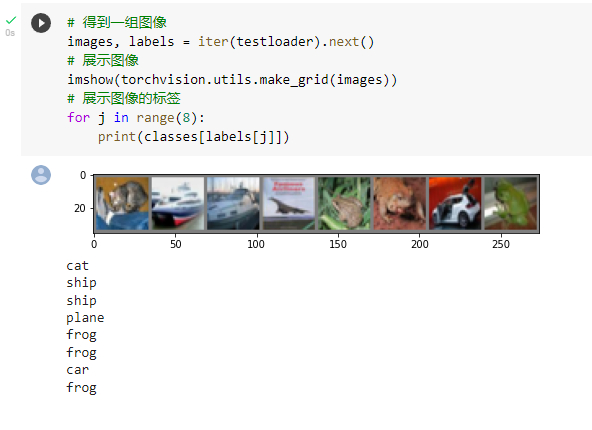

View some images and labels of the training set

Build the network and its forward function

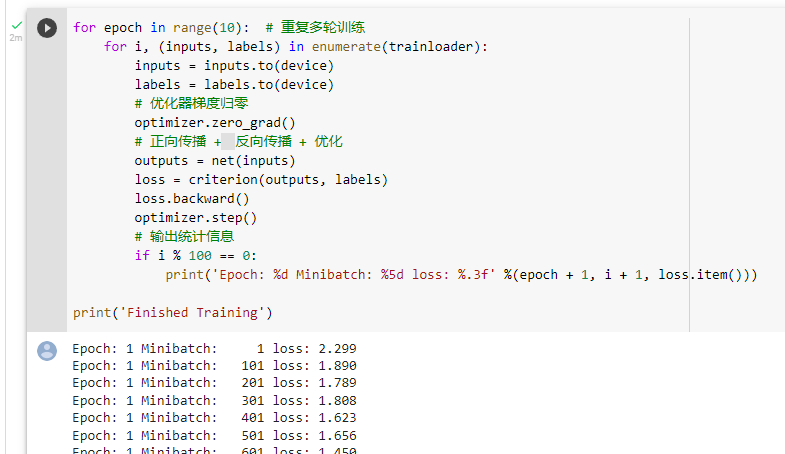

Train the network

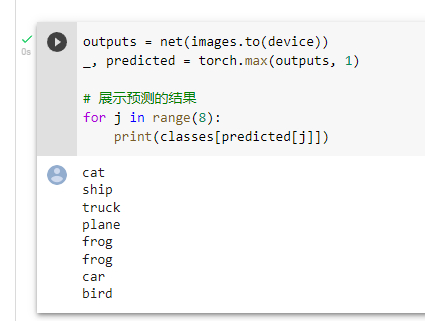

Test the model

Only 5 of the predictions were correct.

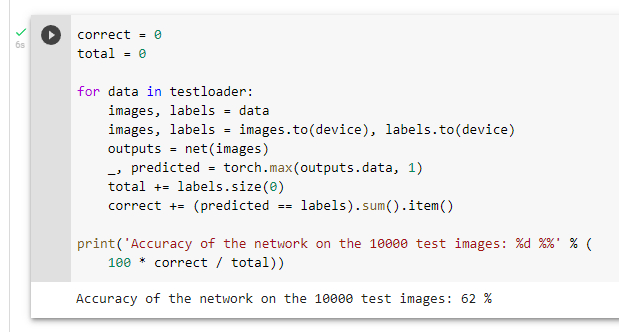

Test on the entire dataset

The accuracy is 62%. The model used is an ordinary convolutional neural network.

Reference material :

Leading edge theory group of Vision Laboratory of Ocean University of China PyTorch Study

边栏推荐

- SSM integrated notes easy to understand version

- [C language foundation] 04 judgment and circulation

- AcWing 179.阶乘分解 题解

- AcWing 1294. Cherry Blossom explanation

- Detailed reading of stereo r-cnn paper -- Experiment: detailed explanation and result analysis

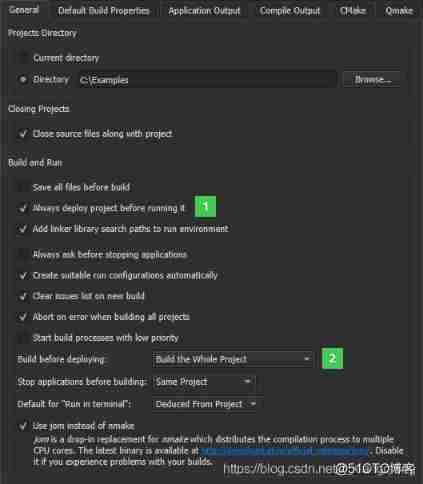

- QT creator shape

- Use dapr to shorten software development cycle and improve production efficiency

- QT creator specify editor settings

- LeetCode #461 汉明距离

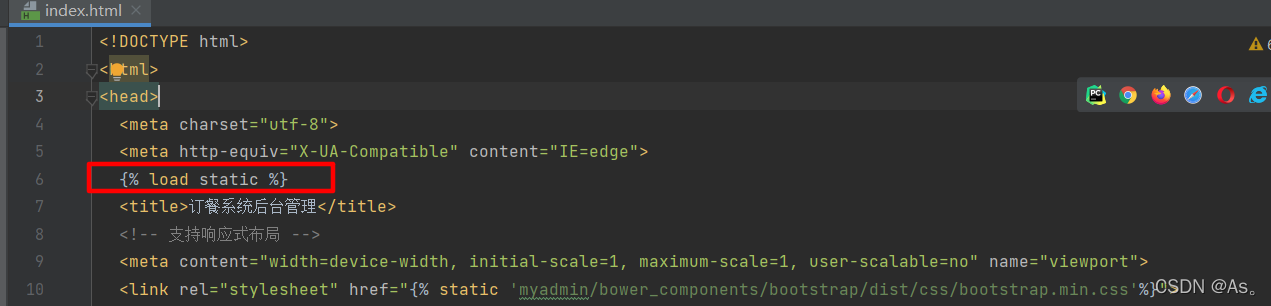

- Did you forget to register or load this tag

猜你喜欢

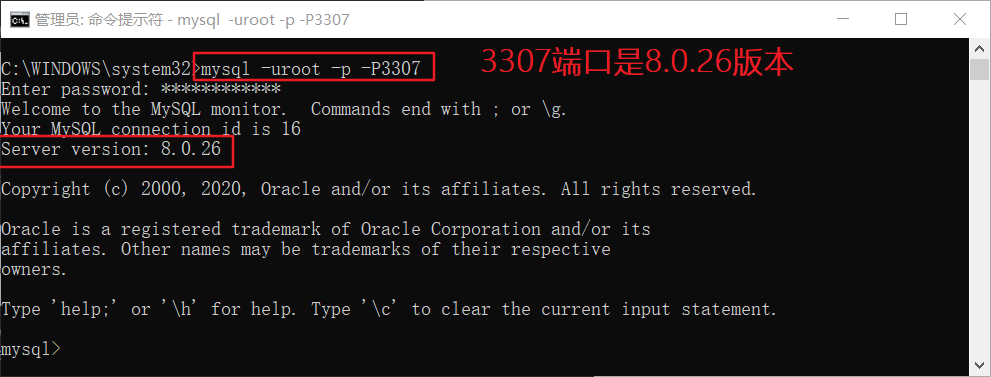

windows下同时安装mysql5.5和mysql8.0

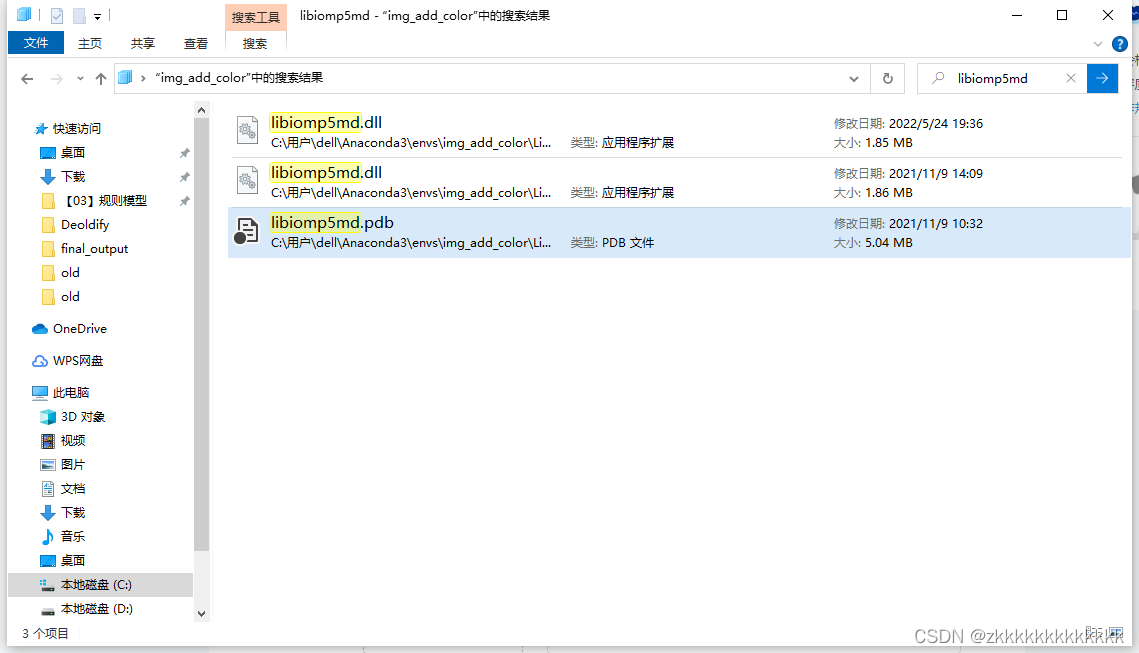

Deoldify项目问题——OMP:Error#15:Initializing libiomp5md.dll,but found libiomp5md.dll already initialized.

Did you forget to register or load this tag 报错解决方法

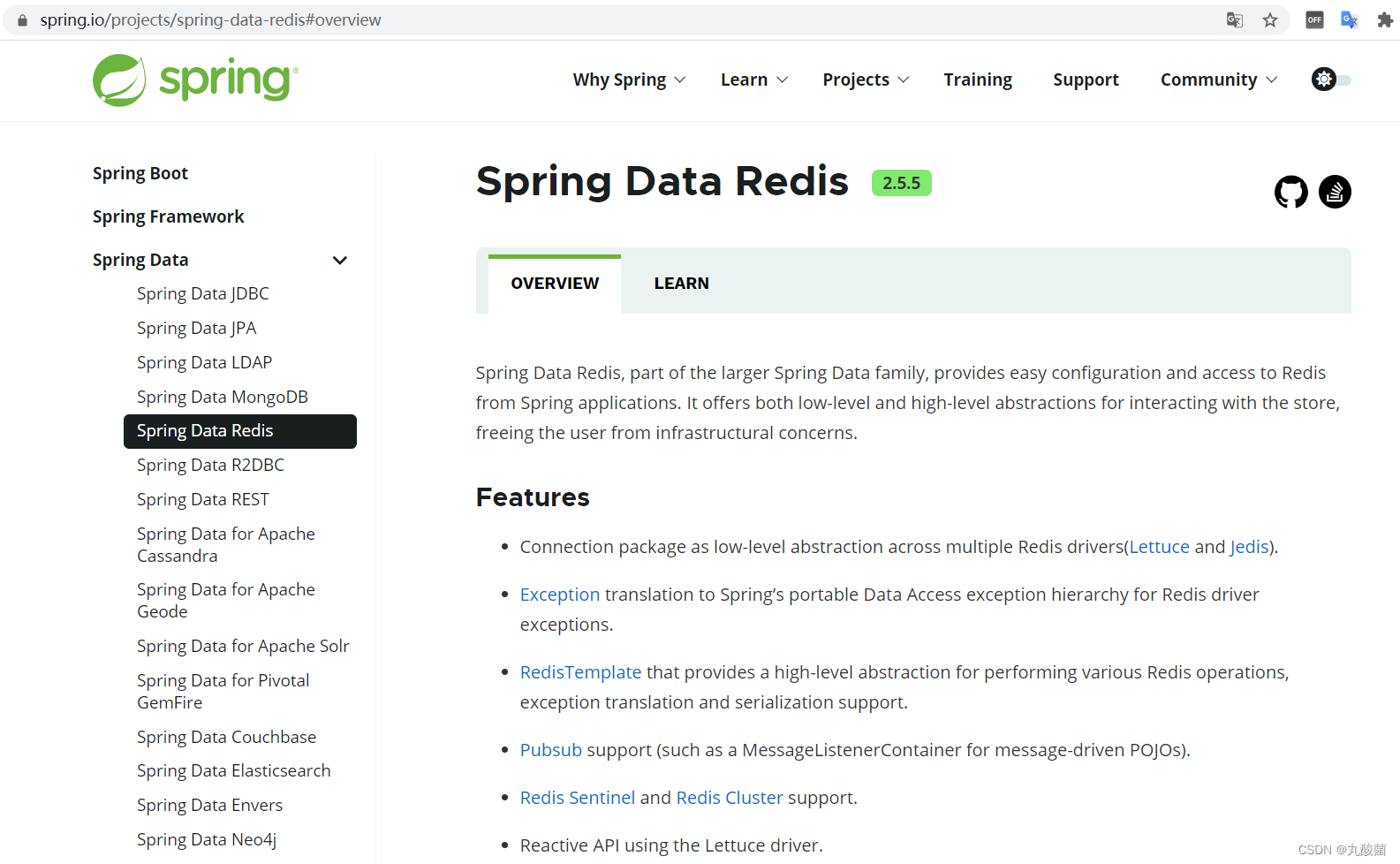

Redis的基础使用

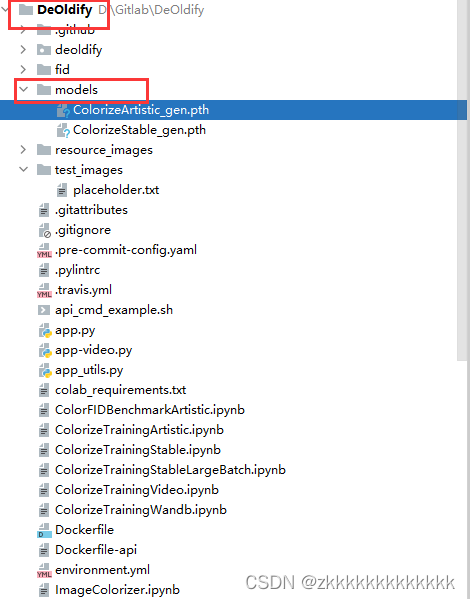

Picture coloring project - deoldify

csdn-Markdown编辑器

QT creator custom build process

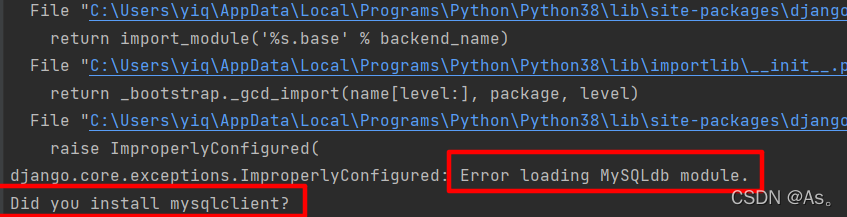

Django running error: error loading mysqldb module solution

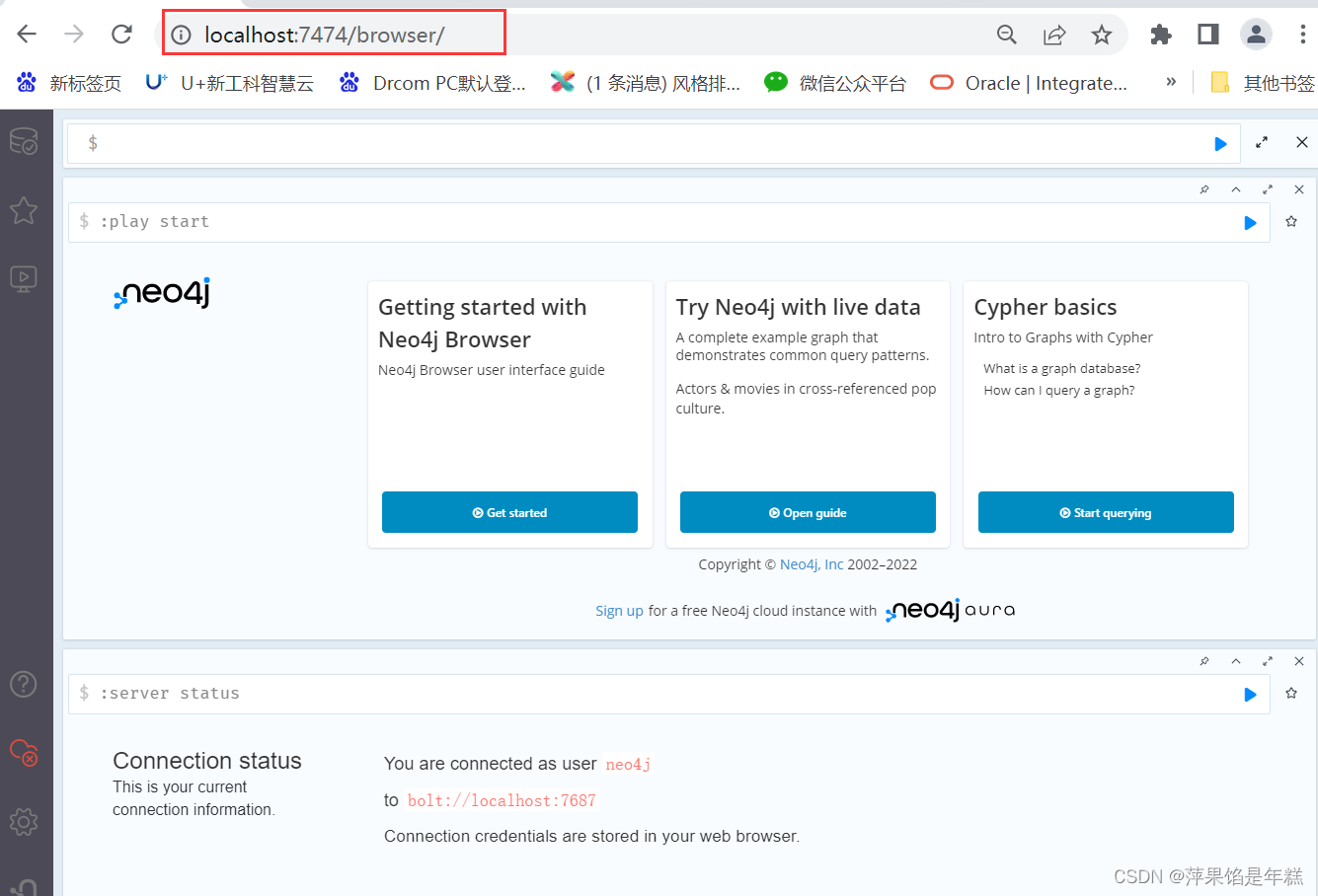

neo4j安装教程

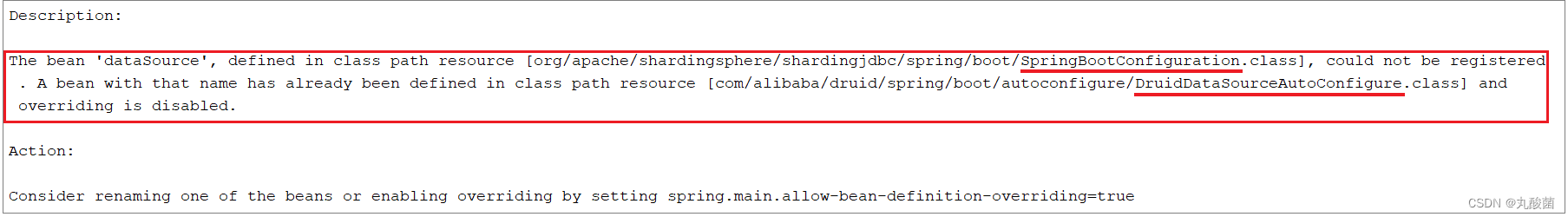

MySQL主從複制、讀寫分離

随机推荐

Solution: log4j:warn please initialize the log4j system properly

【博主推荐】SSM框架的后台管理系统(附源码)

项目实战-后台员工信息管理(增删改查登录与退出)

Use dapr to shorten software development cycle and improve production efficiency

QT creator specifies dependencies

虚拟机Ping通主机,主机Ping不通虚拟机

机器学习笔记-Week02-卷积神经网络

Why can't STM32 download the program

[free setup] asp Net online course selection system design and Implementation (source code +lunwen)

[C language foundation] 04 judgment and circulation

Data dictionary in C #

QT creator uses Valgrind code analysis tool

SSM integrated notes easy to understand version

La table d'exportation Navicat génère un fichier PDM

Machine learning -- census data analysis

MySQL主从复制、读写分离

A trip to Macao - > see the world from a non line city to Macao

[AGC009D]Uninity

MySQL的一些随笔记录

[recommended by bloggers] background management system of SSM framework (with source code)