当前位置:网站首页>Detailed reading of stereo r-cnn paper -- Experiment: detailed explanation and result analysis

Detailed reading of stereo r-cnn paper -- Experiment: detailed explanation and result analysis

2022-07-06 10:57:00 【Is it Wei Xiaobai】

In the past, I used to read the method part when reading papers , Then look at the performance of the test data . Recently, when I was writing my thesis, I found ,“ How to design the experiment ” It's also important , I will pay more attention to this part when I read the thesis in the future .

One 、 Details of the experiment

Introduce the conditions required for the test in detail

Network

Use five ranges (scale){32, 64, 128, 126, 512} And three proportions (ratios){0.5, 1, 2} Of archor. Adjust the size of the shorter edge of the original image to 600 Pixels . about Stereo-RPN, Due to the connection of left and right characteristic graphs , You need to have 1024 Input channels , instead of 512 Layers layer. Again , stay R-CNN Back to the head head Yes 512 Input channels . stay Titan XP GPU On ,Stereo R-CNN To a Stereo pair The reasoning time is about 0.28s.

Training

It's mainly about loss Explanation

Among them  Express RPN and R-CNN, Subscript box、α、dim、key respectively stereo boxes Of loss,viewpoint Of loss、dimension Of loss and keypotint Of loss.

Express RPN and R-CNN, Subscript box、α、dim、key respectively stereo boxes Of loss,viewpoint Of loss、dimension Of loss and keypotint Of loss.

During training, the left and right images will also be flipped and exchanged ( Correspondingly, it will viewpoint angle and keypoint Mirror image ) To expand the data set . One per training batch Keep one in stereo and 512 individual RoIs.

Other conditions : Use SGD、 The weight decays to 0.0005、 Momentum is 0.9%、 The learning rate is initialized to 0.001 And each 5 individual epoch Reduce 0.1%. Total training 20 individual epoch.

Two 、 Result analysis

Stereo Recall and Stereo Detection

Stereo R-CNN The target of is to detect and correlate the targets in the left and right images at the same time . In addition to evaluating the left and right images 2D Average recall (AR) and 2D average precision (AP) Outside , Also defined stereo AR and stereo AP Measure , Only query stereo box Only when the following conditions are met can it be considered as true positive (TPS):

1. left GT The maximum size of the box IOU Greater than the given threshold ;

2. On the right side GT The maximum size of the box IOU Greater than the given threshold ;

3. Select the left and right GT The box belongs to the same object .

As shown in the table 1 Shown , And Faster RCNN comparison Stereo RCNN Have similar on a single image proposal recall and detection precision, At the same time, high-quality data association is generated in the left and right images without additional calculation .

although RPN Medium stereo AR Slightly smaller than left AR, But in R-CNN Left observed after 、 Right and right stereo AP Almost the same , This shows that the detection performance on the left and right images is consistent , And almost all the left images are true positive box There is a corresponding true positive box.

In addition, two left and right feature fusion strategies are tested : Element based Averaging Strategy and channel cascading strategy . As shown in the table 1 Described in , Because all the information is retained , Channel cascading shows better performance .

above , Proved accurate stereo detection and association Provide enough box-level constraint .

3D Detection and 3D Localization

Use Precision for bird’s eye view (APbv) and 3D box (AP3d) evaluation 3D Detection and positioning accuracy . It turns out that table2 in . The detailed comparative analysis will not be repeated , You can read the paper directly .

It is worth noting that ,Kitti 3D The detection reference is for image-based (image-based) The method is difficult , For this method ,3D Performance tends to decline as the distance from the target object increases . This phenomenon is shown in Figure 7 Can be observed intuitively , Although the method in this paper realizes subpixel disparity estimation ( Less than 0.5 Pixels ), But because parallax is inversely proportional to depth , The depth error increases with the increase of object distance . For targets with obvious parallax , Based on strict geometric constraints, this paper realizes high-precision depth estimation . That explains why IoU The higher the threshold , The easier it is for the target object to belong to , Compared with other methods , This article gets more improvements .

边栏推荐

- MySQL27-索引优化与查询优化

- A trip to Macao - > see the world from a non line city to Macao

- [programmers' English growth path] English learning serial one (verb general tense)

- MySQL23-存储引擎

- Global and Chinese markets of static transfer switches (STS) 2022-2028: Research Report on technology, participants, trends, market size and share

- MySQL21-用户与权限管理

- Valentine's Day is coming, are you still worried about eating dog food? Teach you to make a confession wall hand in hand. Express your love to the person you want

- Postman uses scripts to modify the values of environment variables

- MySQL22-逻辑架构

- Windchill configure remote Oracle database connection

猜你喜欢

MySQL29-数据库其它调优策略

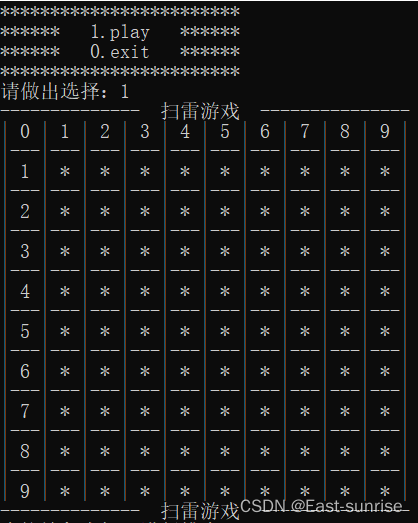

该不会还有人不懂用C语言写扫雷游戏吧

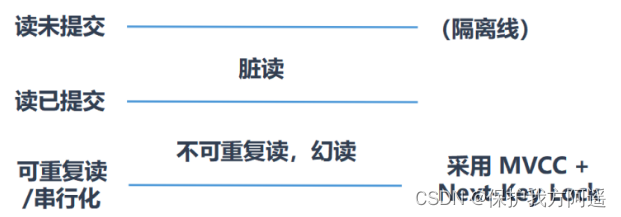

MySQL33-多版本并发控制

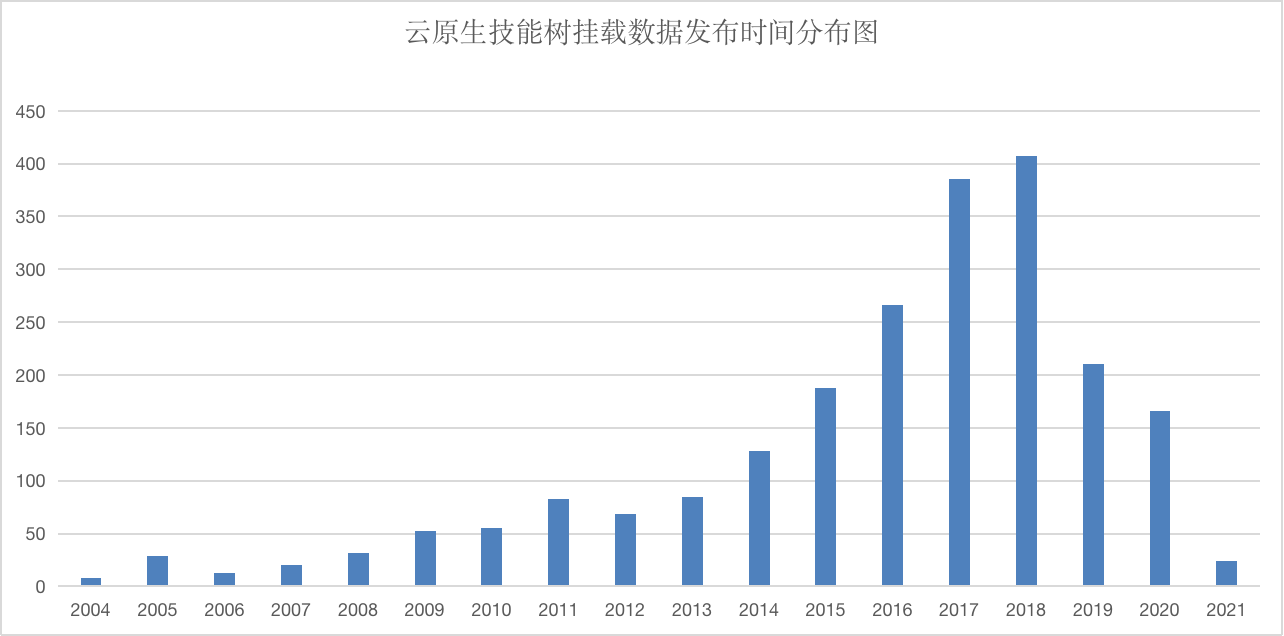

CSDN Q & a tag skill tree (V) -- cloud native skill tree

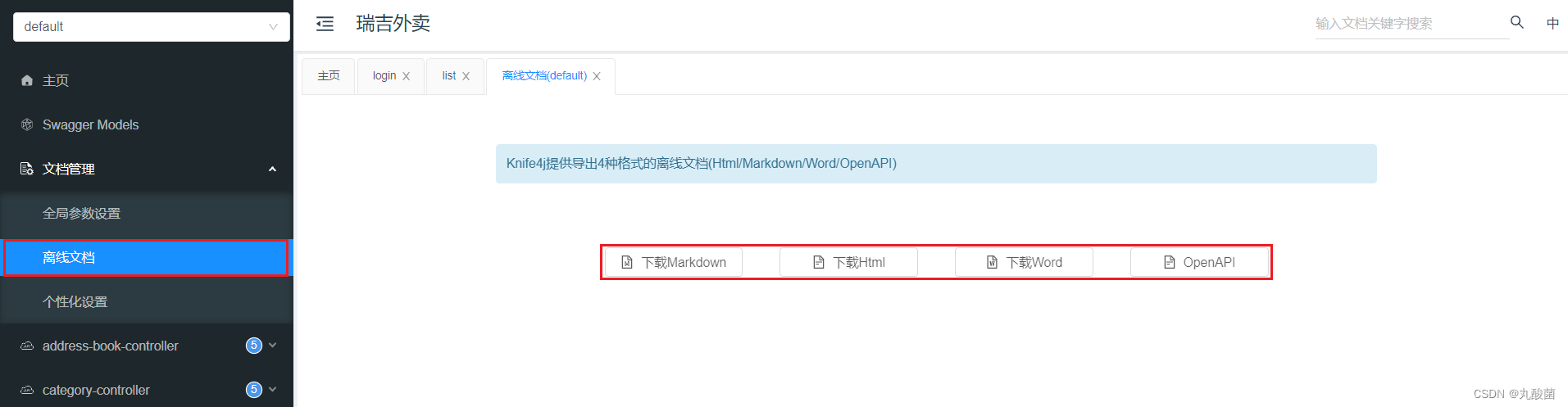

Swagger、Yapi接口管理服务_SE

CSDN markdown editor

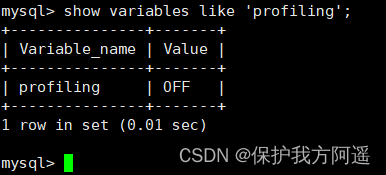

Mysql26 use of performance analysis tools

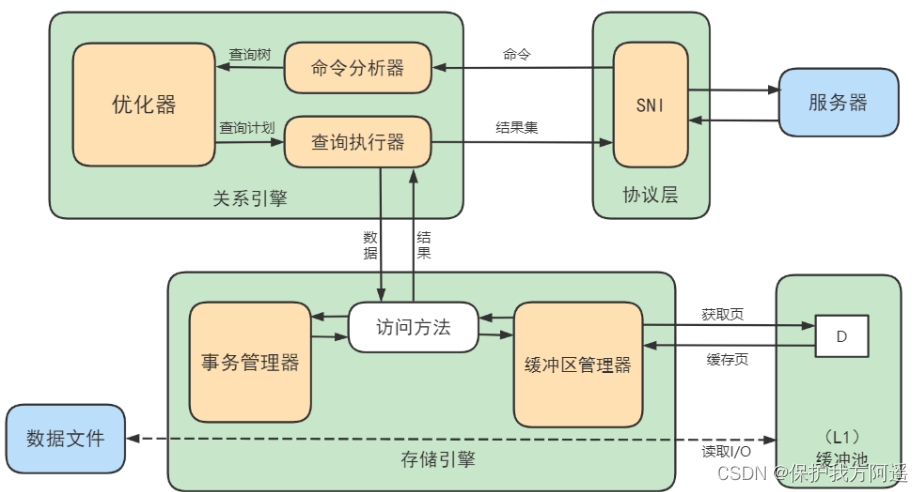

Mysql22 logical architecture

![[C language foundation] 04 judgment and circulation](/img/59/4100971f15a1a9bf3527cbe181d868.jpg)

[C language foundation] 04 judgment and circulation

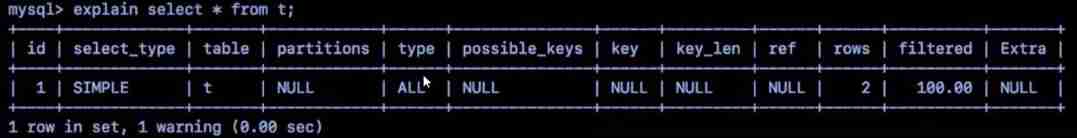

Why is MySQL still slow to query when indexing is used?

随机推荐

Mysql25 index creation and design principles

虚拟机Ping通主机,主机Ping不通虚拟机

[C language] deeply analyze the underlying principle of data storage

MySQL35-主从复制

Mysql32 lock

MySQL29-数据库其它调优策略

Kubernetes - problems and Solutions

Mysql27 index optimization and query optimization

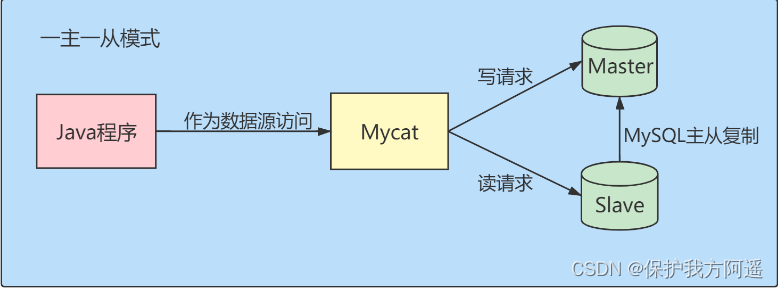

Database middleware_ MYCAT summary

C language string function summary

CSDN question and answer tag skill tree (II) -- effect optimization

Global and Chinese markets of static transfer switches (STS) 2022-2028: Research Report on technology, participants, trends, market size and share

MySQL 20 MySQL data directory

First blog

API learning of OpenGL (2002) smooth flat of glsl

MySQL transaction log

Bytetrack: multi object tracking by associating every detection box paper reading notes ()

【博主推荐】asp.net WebService 后台数据API JSON(附源码)

Navicat 導出錶生成PDM文件

Copy constructor template and copy assignment operator template