当前位置:网站首页>ABM thesis translation

ABM thesis translation

2022-07-02 07:36:00 【wxplol】

List of articles

Address of thesis : https://arxiv.org/pdf/2112.03603.pdf

Project address :https://github.com/XH-B/ABM

One 、Abstract

This paper proposes an attention aggregation model based on two-way interactive learning (ABM), This model consists of two parallel encoders with opposite directions (L2R and R2L) form . These two encoders distill each other , In the training of one-to-one information transmission at each step , Complementary information in both directions is fully utilized . in addition , In order to deal with mathematical symbols of different scales , This paper proposes an attention aggregation model (AAM), This model can aggregate attention at different scales . It is worth noting that , In the reasoning stage , Considering that the model has learned knowledge from two directions , So just use L2R Some branches of reasoning , In this way, the size of the original parameters and the reasoning speed can be maintained .

Two 、Introduction

WAP First, two-dimensional attention is introduced , To solve the problem of insufficient space coverage , Here's the picture 1 The two-dimensional attention shown focuses on the sum of the past , Designed to track past alignment information , In this way, the attention model can be guided , Assign a higher probability of attention to the untranslated region of the image . However , The main limitation of this approach is , It only uses historical alignment information , Without considering future information ( Untranslated area ). Such as , Many mathematical expressions are symmetrical structures , Left “{” And right “}” Parentheses always appear together , Sometimes far away . Some symbols in the equation are related , such as f f f and d x dx dx. Most methods only use left to right attention to recognize the current symbol , While ignoring the future information from the right , This may cause attention drift . The dependent information between the symbol and the previous symbol becomes weaker with the increase of its distance . therefore , They do not take full advantage of long-distance correlations or grammatical norms of mathematical expressions .

BTTR Use with two directions transformer Decoder to solve the problem of attention drift , But there is no effective way to BTTR Learn the opposite direction supervision information , also BTTR There is no alignment of attention in the whole learning process , This makes it still limited in identifying long formulas .

DWAP-MSA By adding multi-scale features to the code, we can alleviate the problem of recognition difficulty or uncertainty caused by the change of character scale in mathematical expressions . However , They do not scale the local acceptance domain , Only zoom the feature map , This makes it impossible to pay attention to small characters accurately in the recognition process .

therefore , We proposed ABM frame , The framework contains three models :(1) feature extraction . Use DenseNet The extracted features ;(2) Attention aggregation module (Attention Aggregation module). We propose multiscale attention , Recognize characters of different sizes in mathematical expressions , Thus, the current recognition accuracy is improved , It alleviates the problem of error accumulation .(3) Two way learning module ( Bi-directional Mutual Learning module). We propose a new decoder framework , There are two parallel decoder branches with opposite decoding directions (L2R and R2L), And use mutual distillation to learn from each other . Be careful , Although we use two decoders for training , But we only use one L2R Branch for reasoning .

3、 ... and 、Method

We propose a new end-to-end attention aggregation and two-way mutual learning (ABM) framework , Pictured 2 Shown . It mainly consists of three modules :

Feature extraction module (FEM), This module can extract feature information from a mathematical expression image .

Note the aggregation module (AAM) Integrated multiscale coverage note , Align history note information , In the decoding stage, the different scale features of symbols of different sizes are effectively aggregated .

Two way mutual learning module (BML) By two parallel decoders with opposite decoding directions (L2R and R2L) form , To complement each other . In the process of training , Each decoder branch can not only learn real latex Sequence , You can also learn the opposite latex Sequence , So as to improve the decoding ability .

3.1、 Feature extraction module (Feature Extraction Modul)

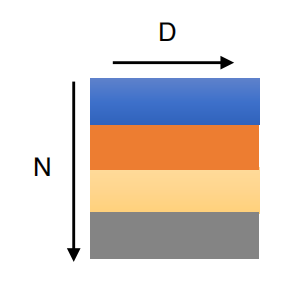

Use DenseNet The extracted features , Output H × W × D H \times W \times D H×W×D, We encode information transformation M dimension ( M = H × W M=H \times W M=H×W), The output vector is a = ( a 1 , a 2 , … , a M ) a=(a_{1},a_{2},\dots,a_{M} ) a=(a1,a2,…,aM).

3.2、 Note the aggregation module (Attention Aggregation Module)

The attention mechanism can guide the encoder to pay more attention to the specific area of the input picture . Especially the attention mechanism based on the overall situation , It can better track the alignment information and guide the model to allocate a higher probability of attention to the translation area . Inspired by this , We proposed AAM Modules aggregate different receptive fields on global attention . Different from the traditional attention mechanism ,AAM Not only pay attention to local information , At the same time, we also pay attention to the overall information on the larger receptive field . therefore ,AAM Will produce finer alignment information , And help the model capture more accurate spatial relationships . differ DWAP-MSA The model passes dense Multi-scale branches of the encoder to generate low-level and high-level features ,AAM Propose a hidden state h t h_{t} ht、 Characteristics of figure F F F And global attention β t \beta_{t} βt Calculate the current attention weight α t \alpha_{t} αt, Then get the context vector c t c_{t} ct.

A s = U s β t , A l = U l β t β t = ∑ l = 1 t − 1 α l A_{s} = U_{s}β_{t}, A_{l} = U_{l}β_{t} \\ β_{t}=\sum^{t-1}_{l=1}\alpha_{l} As=Usβt,Al=Ulβtβt=l=1∑t−1αl

U s U_{s} Us and U l U_{l} Ul It means small nucleus and large nucleus respectively ( Such as 5、11) Convolution of , β t β_{t} βt Represents the sum of all the attention probabilities in the past , Initialize to zero vector . among , α l α_{l} αl For the first time l l l Step's attention score .

therefore , The current attention α t α_{t} αt The calculation process is as follows :

α t = v a T t a n h ( W h h t + U f F + W s A s + W l A l ) \alpha_{t}=v^{T}_{a}tanh(W_{h}h_{t}+U_{f}F+W_{s}A_{s}+W_{l}A_{l}) αt=vaTtanh(Whht+UfF+WsAs+WlAl)

The final context vector c t c_{t} ct For characteristic information a a a And attention t force α t α_{t} αt Weighted sum of , The calculation formula is as follows :

c t = ∑ i = 1 M α t , i a i c_{t}=\sum^{M}_{i=1}\alpha_{t,i}a_{i} ct=i=1∑Mαt,iai

3.3、 Two way mutual learning module (Bi-directional Mutual Learning Module)

Given a mathematical formula, input the image , The traditional method is decoding from left to right (L2R), This method does not consider the problem of long-distance dependence . Therefore, we propose a bidirectional decoder to translate the input image into two opposite directions (L2R 、R2L) Of Latex Sequence , Then learn to decode information from each other . The two branches have the same architecture , Only in its decoding direction .

For two-way training , Let's add < s o s > <sos> <sos> and < e o s > <eos> <eos> As the beginning and end symbols of latex sequence . Specially , For length is T Of Latex Sequence Y = ( Y 1 , Y 2 , . . . , Y T ) Y=(Y_{1},Y_{2},...,Y_{T}) Y=(Y1,Y2,...,YT),

From left to right (L2R) Express : y = ( < s o s > , Y 1 , Y 2 , . . . , Y T , < e o s > ) y=(<sos>,Y1,Y2,...,YT,<eos>) y=(<sos>,Y1,Y2,...,YT,<eos>)

From right to left (R2L) Express : y = ( < e o s > , Y T , Y T − 1 , . . . , Y 1 , < e o s > ) y=(<eos>,Y_{T},Y_{T−1},...,Y_{1},<eos>) y=(<eos>,YT,YT−1,...,Y1,<eos>)

L2R and R2L Branch in step t The probability of prediction at is calculated as follows :

p ( y ⃗ ∣ y ⃗ y − 1 ) = W o m a x ( W y E y ⃗ t − 1 + W h h t + W t c t ) p ( y ← ∣ y ← y − 1 ) = W o ′ m a x ( W y E ′ y ← t − 1 + W h ′ h t ′ + W t ′ c t ′ ) p(\vec y|\vec y_{y-1})=W_{o}max(W_{y}E \vec y_{t-1}+W_{h}h_{t}+W_{t}c_{t}) \\ p(\overleftarrow y|\overleftarrow y_{y-1})=W^{'}_{o}max(W_{y}E^{'} \overleftarrow y_{t-1}+W^{'}_{h}h^{'}_{t}+W^{'}_{t}c^{'}_{t}) p(y∣yy−1)=Womax(WyEyt−1+Whht+Wtct)p(y∣yy−1)=Wo′max(WyE′yt−1+Wh′ht′+Wt′ct′)

among , h t h_{t} ht、 y ⃗ t \vec y_{t} yt Express L2R Step in branch t Current state of and previous predicted output . ∗ ′ *' ∗′ Express R2L Branch . W o ∈ R K × d W_{o}\in R^{K\times d} Wo∈RK×d, W y ∈ R d × n W_{y}\in R^{d \times n} Wy∈Rd×n、 W h ∈ R d × n W_{h} \in R^{d \times n} Wh∈Rd×n and W d × D W^{d \times D} Wd×D Is a trainable matrix .d、K and n Respectively denote attention dimension 、 Number of all tag classes and GRU Dimension of .E Is an embedded matrix .Max Indicates the maximum activation function . Hide representation { h 1 、 h 2 、 . . . , h t } \{h1、h2、...,ht\} { h1、h2、...,ht} from :

h ^ t = f 1 ( h t − 1 , E y ⃗ t − 1 ) , h t = f 2 ( h ^ t , c t ) \widehat h_{t} =f_{1}(h_{t-1},E \vec y_{t-1}), \\ h_{t}=f_{2}(\widehat h_{t},c_{t}) ht=f1(ht−1,Eyt−1),ht=f2(ht,ct)

We define L2R The probability of branching is p ⃗ l 2 r = { < s o s > , y ⃗ 1 , y ⃗ 2 , . . . , y ⃗ T , < e o s > } \vec p_{l2r}= \{ <sos>,\vec y_{1},\vec y_{2},...,\vec y_{T},<eos> \} pl2r={ <sos>,y1,y2,...,yT,<eos>},R2L The probability of branching is p ⃗ r 2 l = { < e o s > , y ← 1 , y ← 2 , . . . , y ← T , < s o s > } \vec p_{r2l}= \{ <eos>,\overleftarrow y_{1},\overleftarrow y_{2},...,\overleftarrow y_{T},<sos> \} pr2l={ <eos>,y1,y2,...,yT,<sos>}. y i y_{i} yi Is to execute the i Prediction probability of label symbol in step decoding . In order to learn the predicted distribution of the two branches from each other , We need to align by L2R and R2L Generated by decoder LaTeX Sequence . meanwhile , introduce kullback-leibler(KL) Loss to quantify the difference in the predicted distribution between them . In the process of training , We use the soft probability generated by the model to provide more information . therefore , about k Categories , come from L2R The soft probability of a branch is defined as :

σ ( z ⃗ i , k , S ) = e x p ( z ⃗ i , k ) / S ∑ j = 1 K e x p ( Z ⃗ i , j / S ) \sigma(\vec z_{i,k},S)=\frac{exp(\vec z_{i,k})/S}{\sum^{K}_{j=1}exp(\vec Z_{i,j}/S)} σ(zi,k,S)=∑j=1Kexp(Zi,j/S)exp(zi,k)/S

among ,S Indicates the parameters for generating soft labels . The... Of the sequence calculated by the decoder network i i i The logarithm of symbols is defined as z i = { z 1 , z 2 , . . . , z K } z_{i}=\{z_{1},z_{2},...,z_{K}\} zi={ z1,z2,...,zK}. Our goal is to minimize the distance between two branch probability distributions . therefore , p ⃗ l 2 r \vec p_{l2r} pl2r And P ← r 2 l ∗ \overleftarrow P^{∗}_{r2l} Pr2l∗ Between KL The distance is calculated as follows :

L K L = S 2 ∑ i = 1 T ∑ j = 1 K σ ( Z ⃗ i , j , S ) l o g σ ( Z ⃗ i , j , S ) σ ( Z ← T + 1 − i , j , S ) L_{KL}= S^{2}\sum^{T}_{i=1}\sum^{K}_{j=1}\sigma(\vec Z_{i,j},S)log\frac{\sigma(\vec Z_{i,j},S)}{\sigma(\overleftarrow Z_{T+1-i,j},S)} LKL=S2i=1∑Tj=1∑Kσ(Zi,j,S)logσ(ZT+1−i,j,S)σ(Zi,j,S)

3.4、 Loss function (Loss Function)

Specially , For length is T Of Latex Sequence y ⃗ l 2 r = { < s o s > , Y 1 , Y 2 , . . . , Y T , < e o s > } \vec y_{l2r}=\{<sos>,Y_{1},Y_{2},...,Y_{T},<eos>\} yl2r={ <sos>,Y1,Y2,...,YT,<eos>}, We will be the first to i Time steps correspond to one-hot The real label is expressed as Y i = { x 1 , x 2 , . . . , x K } Y_{i}=\{x_{1},x_{2},...,x_{K}\} Yi={ x1,x2,...,xK}. The first k It's symbolic softmax The probability is calculated as :

y ⃗ i , k = e x p ( Z ⃗ i , k ) ∑ j = 1 K e x p ( Z ⃗ i , j ) \vec y_{i,k}=\frac{exp(\vec Z_{i,k})}{\sum^{K}_{j=1}exp(\vec Z_{i,j})} yi,k=∑j=1Kexp(Zi,j)exp(Zi,k)

For multi classification , Target tag with two branches softmax The cross entropy loss between probabilities is defined as :

L c e l 2 r = ∑ i = 1 T ∑ j = 1 K − Y i , j l o g ( y ⃗ i , j ) L c e r 2 l = ∑ i = 1 T ∑ j = 1 K − Y i , j l o g ( y ← T + 1 − i , j ) L^{l2r}_{ce}=\sum^{T}_{i=1}\sum^{K}_{j=1}-Y_{i,j}log(\vec y_{i,j}) \\ L^{r2l}_{ce}=\sum^{T}_{i=1}\sum^{K}_{j=1}-Y_{i,j}log(\overleftarrow y_{T+1-i,j}) Lcel2r=i=1∑Tj=1∑K−Yi,jlog(yi,j)Lcer2l=i=1∑Tj=1∑K−Yi,jlog(yT+1−i,j)

The global loss function is :

L = L c e l 2 r + L c e r 2 l + λ L K L L=L^{l2r}_{ce}+L^{r2l}_{ce}+\lambda L_{KL} L=Lcel2r+Lcer2l+λLKL

Four 、Experiments

边栏推荐

- [introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table

- 【模型蒸馏】TinyBERT: Distilling BERT for Natural Language Understanding

- Feeling after reading "agile and tidy way: return to origin"

- Conversion of numerical amount into capital figures in PHP

- @Transational踩坑

- Yaml file of ingress controller 0.47.0

- 常见的机器学习相关评价指标

- Module not found: Error: Can't resolve './$$_gendir/app/app.module.ngfactory'

- Typeerror in allenlp: object of type tensor is not JSON serializable error

- How do vision transformer work?【论文解读】

猜你喜欢

Win10+vs2017+denseflow compilation

腾讯机试题

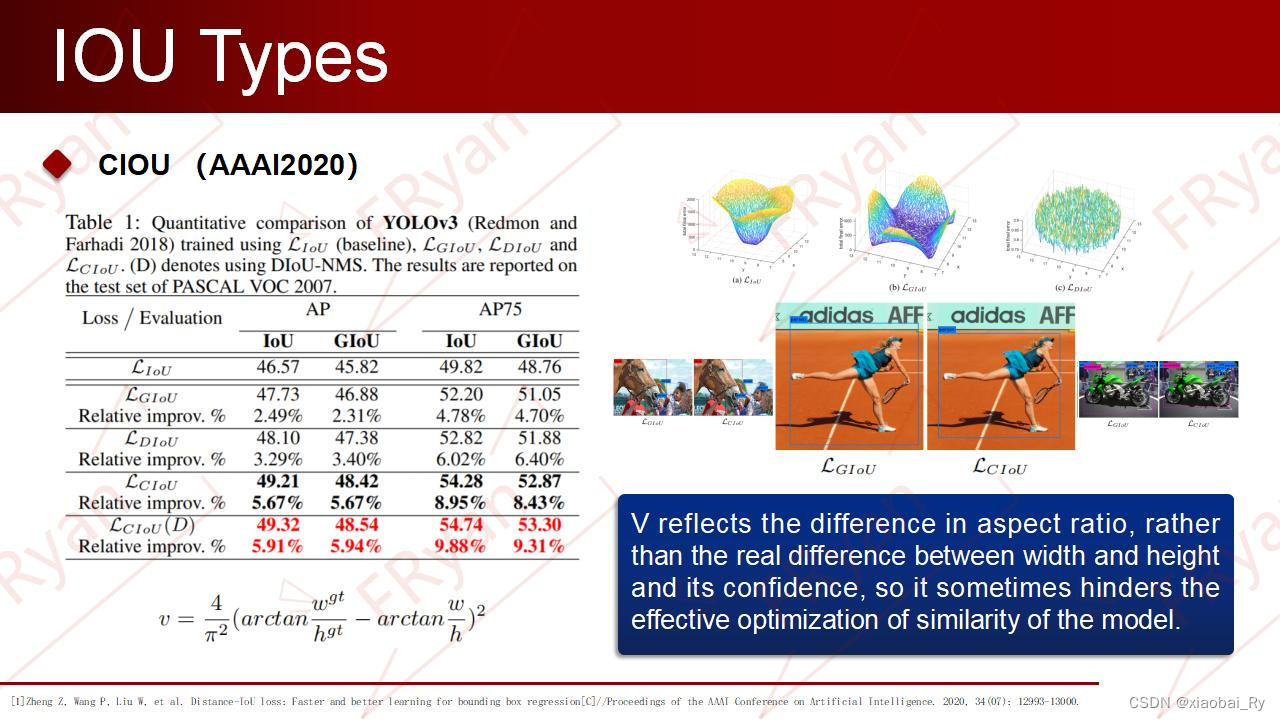

一份Slide两张表格带你快速了解目标检测

Use Baidu network disk to upload data to the server

Drawing mechanism of view (I)

![[introduction to information retrieval] Chapter 6 term weight and vector space model](/img/42/bc54da40a878198118648291e2e762.png)

[introduction to information retrieval] Chapter 6 term weight and vector space model

《Handwritten Mathematical Expression Recognition with Bidirectionally Trained Transformer》论文翻译

点云数据理解(PointNet实现第3步)

【信息检索导论】第三章 容错式检索

叮咚,Redis OM对象映射框架来了

随机推荐

Error in running test pyspark in idea2020

Practice and thinking of offline data warehouse and Bi development

【MEDICAL】Attend to Medical Ontologies: Content Selection for Clinical Abstractive Summarization

Module not found: Error: Can't resolve './$$_ gendir/app/app. module. ngfactory'

Drawing mechanism of view (3)

離線數倉和bi開發的實踐和思考

【论文介绍】R-Drop: Regularized Dropout for Neural Networks

Drawing mechanism of view (I)

Mmdetection installation problem

Alpha Beta Pruning in Adversarial Search

Huawei machine test questions-20190417

生成模型与判别模型的区别与理解

第一个快应用(quickapp)demo

【模型蒸馏】TinyBERT: Distilling BERT for Natural Language Understanding

spark sql任务性能优化(基础)

【Ranking】Pre-trained Language Model based Ranking in Baidu Search

Faster-ILOD、maskrcnn_benchmark训练自己的voc数据集及问题汇总

Optimization method: meaning of common mathematical symbols

TimeCLR: A self-supervised contrastive learning framework for univariate time series representation

CONDA creates, replicates, and shares virtual environments