Author: Zheng Chao

Reading guide: OpenYurt is Alibaba's open-source cloud-edge collaborative integration architecture. Compared with similar open-source solutions, OpenYurt can cover the full range of edge computing scenarios. In a previous article, we introduced how OpenYurt achieves edge autonomy in weak-network and disconnected-network scenarios. In this article, the fourth in the OpenYurt series, we focus on another core capability of OpenYurt, cloud-edge communication, and on the related component, Yurttunnel.

Use cases

During application deployment and operations, users often need to fetch an application's logs or log in to its runtime environment to debug it. In Kubernetes, we usually use commands such as kubectl logs and kubectl exec for this. As shown in the figure below, on the kubectl request path the kubelet acts as the server, handling requests forwarded by kube-apiserver (KAS). This requires a network path between KAS and the kubelet that allows KAS to actively connect to the kubelet.
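To make the direction of that connection concrete, here is a minimal sketch of the URL KAS dials on the kubelet when serving kubectl logs. It assumes the kubelet's default secure port 10250 and its /containerLogs/<namespace>/<pod>/<container> endpoint; the node IP and pod names are made up for illustration.

```python
from urllib.parse import quote

# Default secure port of the kubelet API; clusters can override it.
KUBELET_PORT = 10250


def kubelet_logs_url(node_ip: str, namespace: str, pod: str, container: str,
                     port: int = KUBELET_PORT) -> str:
    """Return the kubelet URL that KAS connects to when serving `kubectl logs`.

    KAS is the client on this hop: it dials the node, so node_ip:port
    must be reachable from wherever KAS runs.
    """
    # Percent-encode each path segment so unusual names cannot break the path.
    segments = "/".join(quote(s, safe="") for s in (namespace, pod, container))
    return f"https://{node_ip}:{port}/containerLogs/{segments}"


if __name__ == "__main__":
    # Hypothetical node IP and pod name, for illustration only.
    print(kubelet_logs_url("192.168.1.20", "kube-system",
                           "kube-proxy-abcde", "kube-proxy"))
```

kubectl exec has the same connection direction, only against a streaming endpoint, which is exactly what breaks when the node sits in a private network.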

Figure 1: kubectl execution flow

In edge computing scenarios, however, edge nodes are often located in on-premises private networks. This keeps the edge nodes secure, but it also means that KAS cannot reach the kubelet on an edge node directly. Therefore, to support operating and maintaining edge applications from the cloud, we must establish a reverse O&M tunnel between the cloud and the edge.

The reverse tunnel

Yurttunnel, an important component recently open-sourced by OpenYurt, solves the problem of cloud-edge communication. A reverse tunnel is a common way to solve cross-network communication, and Yurttunnel is essentially a reverse tunnel. The nodes of an edge cluster are often located in different network regions, while nodes within the same region can communicate with each other. Therefore, when setting up the reverse tunnel, we only need to deploy, in each region, an agent that connects to the proxy server (as shown in the figure below). The setup involves the following steps:

- Deploy the proxy server inside the network where the control-plane components reside.

- Expose the proxy server on a public IP that is reachable from outside.

- Deploy an agent in each region, and have it establish a long-lived connection to the server through the server's public IP.

- Forward the control-plane components' requests to edge nodes to the proxy server.

- The proxy server then sends each request to the target node over the corresponding long-lived connection.
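The five steps above can be modeled in a few lines. This is a toy, in-memory sketch, not Yurttunnel code: ProxyServer, make_agent, and the region names are hypothetical, and a plain function call stands in for the long-lived connection. What it illustrates is the direction of setup: the agent registers with the server first, and afterwards the server can push requests down that connection without ever dialing into the private network.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A long-lived connection is modeled as a callable the server can push requests into.
# In a real tunnel this would be a stream the agent opened to the server.
Handler = Callable[[str, str], str]


@dataclass
class ProxyServer:
    # region name -> handler of the agent that dialed in from that region
    tunnels: Dict[str, Handler] = field(default_factory=dict)

    def register(self, region: str, handler: Handler) -> None:
        """Called when an agent dials OUT to the server's public IP."""
        self.tunnels[region] = handler

    def forward(self, region: str, node_ip: str, request: str) -> str:
        """Push a control-plane request down the matching agent's connection."""
        if region not in self.tunnels:
            raise LookupError(f"no agent connected from region {region!r}")
        return self.tunnels[region](node_ip, request)


def make_agent(region: str) -> Handler:
    # The agent lives inside the private network, so it can reach node IPs directly.
    def handle(node_ip: str, request: str) -> str:
        return f"[{region}] {node_ip} handled {request}"
    return handle


if __name__ == "__main__":
    server = ProxyServer()
    server.register("hangzhou", make_agent("hangzhou"))
    print(server.forward("hangzhou", "10.0.1.7",
                         "GET /containerLogs/default/web/app"))
```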

Figure 2

In Yurttunnel, we chose the upstream project apiserver-network-proxy (ANP) to implement communication between the server and the agents. ANP is built on EgressSelector, an alpha feature added in Kubernetes 1.16, and enables Kubernetes cluster components to communicate across private networks (for example, when the master is in the control-plane VPC while the kubelet and other components are in the user's VPC).

Readers may wonder: since OpenYurt was open-sourced from ACK@Edge, and in production ACK@Edge uses a self-developed component, tunnellib, for its cloud-edge O&M tunnel, why did we choose a different component for the open-source version? The answer goes back to OpenYurt's core design philosophy: "Extend upstream Kubernetes to Edge".

Indeed, tunnellib has been proven in complex production environments and performs stably. However, we want to stay on the greatest common divisor of technology with upstream so that the OpenYurt user experience is closer to native Kubernetes. Meanwhile, while developing and operating ACK@Edge, we found that many edge-cluster requirements also exist in other scenarios (for example, most cloud vendors also need cross-network communication between nodes), and that the transmission efficiency of the O&M tunnel can be optimized further (Section 4.5 describes the optimization in detail). Therefore, in the open-source spirit of openness, sharing, equality, and inclusiveness, we hope to share the experience accumulated in development and operations with more developers in the upstream community.

ANP is not out of the box

However, the ANP project is still in its early stages: its features are incomplete and many problems remain to be solved. The main problems we found in practice include:

- How to forward requests from cloud nodes: A prerequisite for the reverse tunnel to work is that requests from control nodes to edge nodes must pass through the proxy server. In Kubernetes 1.16+, KAS can use EgressSelector to send requests for nodes to a specified proxy server first. But before 1.16, KAS and other control-plane components (such as Prometheus and metrics server) can only access nodes directly and cannot be routed through the proxy server. Predictably, some users will keep running pre-1.16 versions for a while, and Prometheus, metrics server, and other control-plane components have no short-term plans to support EgressSelector. Therefore, the first problem we must solve is how to forward requests from control-plane components to the proxy server.

- How to make server replicas cover all regions: In production, an edge cluster often contains tens of thousands of nodes and serves hundreds of users at the same time. If a cluster has only one proxy server, then once that proxy server fails, no user can operate on the pods on edge nodes. Therefore, we must deploy multiple proxy server replicas to keep the cluster highly available. Meanwhile, the proxy server's workload grows with access traffic, and user access latency inevitably grows with it. So when deploying the proxy server, we also need to consider how to scale it horizontally to handle high concurrency. A classic solution to both single points of failure and high concurrency is to deploy multiple proxy server replicas and use a load balancer to distribute traffic. However, in the OpenYurt scenario, we cannot control which server replica the load balancer (LB) sends any given KAS request to. Therefore, the second problem is how to ensure that every server replica establishes connections with all agents.

- How to forward a request to the correct agent: At run time, after receiving a request, the proxy server must forward it, based on the request's destination IP, to the agent inside the corresponding network region. However, the current ANP implementation assumes that all nodes are in the same network space, and the server picks an agent at random to forward the request. Therefore, the third problem is how to forward a request correctly to the designated agent.

- How to decouple components from node certificates: At run time, we need to provide the server with a set of TLS certificates to secure communication between the server and KAS, and between the server and the agents. We also need to prepare a set of TLS client certificates for the agent to establish the gRPC channel between the agent and the server. The current ANP implementation requires the server to be deployed on the same node as KAS and to mount a node volume at startup to share KAS's TLS certificates. Similarly, the agent must mount a volume at startup to share the kubelet's TLS certificates. This reduces deployment flexibility and creates a strong dependency on node certificates; in some cases, users may want to deploy the server on a node that does not run KAS. Therefore, another concern is how to decouple the components from node certificates.

- How to reduce tunnel bandwidth: One of ANP's core design ideas is to use gRPC to encapsulate all of KAS's outbound HTTP requests. gRPC was chosen mainly for its support for streams and its clear interface specification; in addition, strongly typed clients and servers effectively reduce runtime errors and improve system stability. However, we also found that, compared with using TCP directly, ANP introduces extra overhead and increases bandwidth. At the product level, tunnel traffic travels over the public network, so more bandwidth means higher cost for users. Therefore, a very important question is: can we reduce bandwidth while improving system stability?
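One visible source of that overhead is framing. In the gRPC over HTTP/2 wire format, every message is prefixed with a 1-byte compressed flag and a 4-byte big-endian length; HTTP/2 then adds a 9-byte header to each DATA frame, and ANP's own packet is a protobuf message with fields of its own. The sketch below reproduces only the gRPC length prefix, to illustrate where some of the extra bytes come from; it does not try to account for all of ANP's overhead.

```python
import struct
from typing import Tuple

# gRPC Length-Prefixed-Message: 1-byte compressed flag + 4-byte big-endian length.
GRPC_PREFIX = struct.Struct(">BI")


def grpc_frame(message: bytes, compressed: bool = False) -> bytes:
    """Wrap an already-serialized message the way gRPC does on the wire."""
    return GRPC_PREFIX.pack(int(compressed), len(message)) + message


def grpc_unframe(frame: bytes) -> Tuple[bool, bytes]:
    """Inverse of grpc_frame for a single, complete message."""
    flag, length = GRPC_PREFIX.unpack_from(frame)
    start = GRPC_PREFIX.size
    return bool(flag), frame[start:start + length]


if __name__ == "__main__":
    chunk = b"I0901 12:00:00.000000 1 proxier.go:800] syncProxyRules took 3ms\n"
    framed = grpc_frame(chunk)
    # Per message, gRPC adds 5 bytes before HTTP/2 framing is even counted.
    print(len(chunk), len(framed))
```

The fixed per-message cost matters most when a log stream is split into many small chunks, which is one reason the tunnel can carry more bytes than a raw TCP copy of the same data.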

Yurttunnel design analysis

1. Forwarding requests from cloud nodes with DNAT rules

As mentioned earlier, ANP is built on the new upstream feature EgressSelector, which lets users pass an egress configuration when starting KAS so that KAS forwards egress requests to a specified proxy server. However, we need to support both old and new Kubernetes clusters, and other control-plane components (such as Prometheus and metrics server) do not support EgressSelector. So we must ensure that KAS egress requests can be forwarded to the proxy server even when EgressSelector is unavailable. To this end, we deploy a Yurttunnel Server replica on every cloud control node and embed a new component, Iptable Manager, in the Server. Iptable Manager adds DNAT rules to the OUTPUT chain of the host's iptables so that requests from control-plane components to nodes are forwarded to Yurttunnel Server.

Meanwhile, once EgressSelector is enabled, all of KAS's outbound requests follow a uniform format. We therefore added another component, ANP interceptor, which intercepts HTTP requests sent from the master and encapsulates them in the EgressSelector format. The request-forwarding process of Yurttunnel is shown in Figure 3.

Figure 3: Yurttunnel request forwarding process
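KAS's EgressSelector configuration supports talking to a proxy either over gRPC or over HTTP CONNECT. Assuming the HTTP CONNECT flavor, the core of an interceptor like the one described above reduces to: open a connection to the proxy server, ask it to CONNECT to the node the original request targeted, check for a 2xx answer, then relay the original HTTP bytes. The sketch covers only the two protocol-level helpers; the function names are hypothetical, and a real interceptor has to handle more (TLS, timeouts, error bodies).

```python
def connect_preamble(target_host: str, target_port: int) -> bytes:
    """HTTP CONNECT request asking the proxy server to open a tunnel to the node."""
    authority = f"{target_host}:{target_port}"
    return (f"CONNECT {authority} HTTP/1.1\r\n"
            f"Host: {authority}\r\n"
            "\r\n").encode("ascii")


def tunnel_established(status_line: bytes) -> bool:
    """True if the proxy answered the CONNECT with a 2xx status line."""
    parts = status_line.decode("ascii", "replace").split(" ", 2)
    return (len(parts) >= 2 and parts[0].startswith("HTTP/")
            and parts[1].isdigit() and 200 <= int(parts[1]) < 300)


if __name__ == "__main__":
    # An interceptor would write this to the proxy server, read one status line,
    # and only then start copying the intercepted HTTP request through the tunnel.
    print(connect_preamble("10.0.1.7", 10250))
    print(tunnel_established(b"HTTP/1.1 200 Connection established\r\n"))
```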

2. Dynamically obtaining the number of server replicas

As mentioned in the previous section, we manage yurttunnel server replicas behind a load balancer: every ingress request is distributed by the LB to one server replica. Because we cannot predict which server replica the LB will choose, we must ensure that every server replica is connected to all agents. We implement this with ANP's built-in functionality, as follows:

- When starting yurttunnel server, we pass the replica count (serverCount) to each server replica and assign each replica a server ID.

- After an agent connects to the LB, the LB randomly picks a server replica and has it establish a long-lived connection with the agent.

- The server then returns an ACK package to the agent over this channel; the package contains serverCount and serverID.

- By parsing the ACK package, the agent learns the number of server replicas and records the connected serverID locally.

- If the agent finds that it is connected to fewer server replicas than serverCount, it sends another connection request to the LB, and repeats until the number of locally recorded serverIDs equals serverCount.
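The loop above can be simulated to see how it converges. In this sketch, lb_dial and agent_connect_all are hypothetical names, and the LB is assumed to choose a replica uniformly at random on each dial; the ACK is reduced to the (serverCount, serverID) pair described above.

```python
import random
from typing import Set, Tuple


def lb_dial(replicas: int, rng: random.Random) -> Tuple[int, int]:
    """Hypothetical LB: connect to a random replica and return its ACK.

    The ACK carries (serverCount, serverID). Uniform choice is an
    assumption of this sketch.
    """
    server_id = rng.randrange(replicas)
    return replicas, server_id


def agent_connect_all(replicas: int, rng: random.Random,
                      max_attempts: int = 10_000) -> Tuple[Set[int], int]:
    """Keep dialing the LB until connected serverIDs == serverCount."""
    connected: Set[int] = set()
    expected = None  # serverCount is unknown until the first ACK arrives
    attempts = 0
    while expected is None or len(connected) < expected:
        if attempts >= max_attempts:
            raise RuntimeError("could not reach every server replica")
        attempts += 1
        server_count, server_id = lb_dial(replicas, rng)
        expected = server_count
        # A repeated serverID leaves the set unchanged; this sketch does not
        # model what a real agent does with the redundant connection.
        connected.add(server_id)
    return connected, attempts


if __name__ == "__main__":
    ids, tries = agent_connect_all(3, random.Random(42))
    print(sorted(ids), tries)
```

The attempt count varies with the random choices, and it is always at least serverCount; every dial that lands on an already-connected replica is wasted work.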

Although this mechanism ensures that server replicas cover the full network, it has a shortcoming that cannot be ignored. Because an agent cannot choose which server replica it connects to, it has to dial the LB repeatedly to reach all of them. During this process, a server that has not yet connected to all agents may be unable to forward KAS requests to the corresponding nodes. One potential solution is to create a separate LB for each server replica to handle its connections with agents, and to have each agent record the LB information for every server replica. This would let an agent connect to all server replicas quickly. The implementation details of this solution are still being discussed with developers in the upstream community.
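To see why repeated dialing can take a while, assume (as a simplification the article does not state) that the LB picks each of the n server replicas uniformly and independently on every dial. The number of dials T_n an agent needs before it has seen every serverID is then the classic coupon-collector quantity, where H_n is the n-th harmonic number:

```latex
\mathbb{E}[T_n] \;=\; \sum_{k=1}^{n} \frac{n}{n-k+1} \;=\; n \sum_{j=1}^{n} \frac{1}{j} \;=\; n H_n,
\qquad
\mathbb{E}[T_3] \;=\; 3\left(1 + \tfrac{1}{2} + \tfrac{1}{3}\right) \;=\; 5.5
```

The k-th term is the expected wait for a new replica once k-1 distinct replicas are connected, since each dial then reaches a new one with probability (n-k+1)/n. With one dedicated LB per replica, the agent needs exactly n dials instead.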

3. Adding proxy strategies to ANP

Under OpenYurt's network model, edge nodes are distributed across different network regions, and a randomly selected agent may be unable to forward requests to nodes in other regions. So we had to modify the underlying proxy-forwarding logic of the ANP server. Moreover, based on long experience, we believe that supporting different proxy strategies in the proxy server, for example forwarding requests to a specified data center, region, or host, is a fairly general requirement. After discussions with ANP community developers, we decided to refactor ANP's interface for managing agent connections so that users can implement new proxy strategies as needed, and we plan to eventually merge this feature into the upstream code base. The refactoring is still in progress; in the first open-source version of Yurttunnel, we use the following configuration for now:

- Deploy one agent on each edge node.

- When an agent registers with the server, it uses its node's IP as its agentID.

- When forwarding a request, the server matches the request's destination IP against agentIDs and forwards the request to the corresponding agent.
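The configuration above amounts to an exact-match lookup from destination IP to agent. The sketch below models it with a small strategy interface in the spirit of the refactoring just described, so that other policies (by region or data center) could be plugged in later. ProxyStrategy, DestHostStrategy, and AgentRegistry are hypothetical names, not ANP's API.

```python
from typing import Dict, Optional, Protocol


class ProxyStrategy(Protocol):
    """Hypothetical pluggable policy: choose which agentID carries a request."""
    def pick(self, agents: Dict[str, str], dest_host: str) -> Optional[str]: ...


class DestHostStrategy:
    """Current configuration: agentID == node IP, so match the destination IP."""
    def pick(self, agents: Dict[str, str], dest_host: str) -> Optional[str]:
        return dest_host if dest_host in agents else None


class AgentRegistry:
    def __init__(self, strategy: ProxyStrategy) -> None:
        self.strategy = strategy
        # agentID -> connection handle (a plain label in this sketch)
        self.agents: Dict[str, str] = {}

    def register(self, node_ip: str, conn: str) -> None:
        # One agent per edge node, registering with its node IP as agentID.
        self.agents[node_ip] = conn

    def route(self, dest: str) -> str:
        # dest is "host:port" (IPv4 assumed); drop the port before matching.
        host = dest.rsplit(":", 1)[0]
        agent_id = self.strategy.pick(self.agents, host)
        if agent_id is None:
            raise LookupError(f"no agent registered for {host}")
        return self.agents[agent_id]


if __name__ == "__main__":
    reg = AgentRegistry(DestHostStrategy())
    reg.register("10.0.1.7", "conn-a")
    reg.register("10.0.2.9", "conn-b")
    print(reg.route("10.0.2.9:10250"))  # conn-b
```

Swapping in a region-based policy would only require another class with a pick method; the registry itself would not change.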

After OpenYurt releases Yurt Unit (partitioned management and control of edge nodes) in a later version, we plan to combine it with the new ANP proxy-forwarding strategies to deploy agents per partition and forward requests by partition.

4. Dynamically requesting security certificates

To remove yurttunnel's dependency on node certificates, we added a cert manager component to yurttunnel. Once the server and agent are running, cert manager submits a certificate signing request (CSR) to KAS. The server uses its issued certificate to secure communication with KAS and with the agents, and the agent uses its issued certificate to secure the gRPC channel with the server. Because the agent connects to the kubelet over TCP and only relays the byte stream, we do not need to prepare certificates for the connection between the agent and the kubelet.
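For reference, this is roughly what such a request looks like as a Kubernetes object. The sketch builds a certificates.k8s.io/v1 CertificateSigningRequest (the v1 API became GA in Kubernetes 1.19; older clusters used v1beta1). The object name, signer, and usages are assumptions chosen for a TLS client certificate; the article does not say what Yurttunnel actually requests. After submission, the CSR must be approved, and the issued certificate then appears in its status.certificate field.

```python
import base64
from typing import Any, Dict, List, Optional


def csr_manifest(name: str, csr_pem: bytes,
                 signer: str = "kubernetes.io/kube-apiserver-client",
                 usages: Optional[List[str]] = None) -> Dict[str, Any]:
    """Build a certificates.k8s.io/v1 CertificateSigningRequest object.

    csr_pem is a PEM-encoded PKCS#10 request produced from a freshly
    generated private key; the key itself never leaves the component.
    """
    if usages is None:
        # Typical usages for a TLS client certificate (assumed for the agent).
        usages = ["digital signature", "key encipherment", "client auth"]
    return {
        "apiVersion": "certificates.k8s.io/v1",
        "kind": "CertificateSigningRequest",
        "metadata": {"name": name},
        "spec": {
            "request": base64.b64encode(csr_pem).decode("ascii"),
            "signerName": signer,
            "usages": usages,
        },
    }


if __name__ == "__main__":
    # Placeholder PEM bytes; a real component generates these with a crypto library.
    pem = b"-----BEGIN CERTIFICATE REQUEST-----\n...\n-----END CERTIFICATE REQUEST-----\n"
    m = csr_manifest("yurttunnel-agent-csr-node1", pem)  # hypothetical name
    print(m["kind"], m["spec"]["signerName"])
```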

5. Compressing tunnel bandwidth to save costs

In Section 3.5, we mentioned that encapsulating the tunnel with gRPC improves transmission stability but also increases public-network traffic. Does this mean we can only choose between stability and performance? Analyzing different user scenarios, we found that in most cases users use the O&M tunnel to fetch container logs (that is, kubectl logs), and traditional log files contain a lot of repeated text. We therefore inferred that compression algorithms such as gzip could effectively reduce bandwidth. To test this hypothesis, we added a gzip compressor to the gRPC library underlying ANP and compared the amount of data transferred against the scenario using a native TCP connection.
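The intuition that repetitive log text compresses well is easy to check with Python's standard gzip module. The sketch uses synthetic, deliberately repetitive log lines, so its ratio says nothing about the measurements that follow; it only shows the mechanism. Note also that gRPC compresses each message independently, so very small messages benefit less than a whole file compressed at once.

```python
import gzip


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(gzip.compress(data, compresslevel=level)) / len(data)


def synthetic_log(n_lines: int) -> bytes:
    # Repetitive, kube-proxy-style lines invented for this demo; real logs are less uniform.
    return "".join(
        f"I0901 12:00:{i % 60:02d}.000000 1 proxier.go:800] "
        f"syncProxyRules took {i % 7}ms\n"
        for i in range(n_lines)
    ).encode()


if __name__ == "__main__":
    log = synthetic_log(2000)
    print(f"{len(log)} bytes, gzip ratio {compression_ratio(log):.2f}")
```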

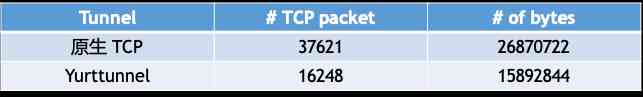

In the first experimental scenario, we fetched the logs of the same kube-proxy container through a native TCP connection and through ANP respectively, and captured the total number of packets and bytes in both directions on the tunnel during the process.

Table 1: Native TCP vs. ANP (kubectl logs kube-proxy)

As shown in Table 1, using ANP reduced the total amount of data transferred by 29.93%.

After running for a long time, a container's log text can exceed ten megabytes. To simulate fetching a large text log, we created an ubuntu container containing 10.5 MB of systemd logs (that is, journalctl output), again transferred the log file through a native TCP connection and through ANP, and measured the total amount of data on the tunnel.

Table 2: Native TCP vs. ANP (large log file)

As shown in Table 2, with large log text, using ANP reduced the total amount of data transferred by 40.85%.
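Assuming these percentages are measured relative to the native TCP total, as the wording suggests, let B_TCP be the bytes on the tunnel over native TCP and B_ANP the bytes over the gzip-enabled ANP tunnel:

```latex
r = \frac{B_{\mathrm{TCP}} - B_{\mathrm{ANP}}}{B_{\mathrm{TCP}}} \times 100\%
\quad\Longleftrightarrow\quad
B_{\mathrm{ANP}} = (1 - r)\, B_{\mathrm{TCP}}
```

So r = 29.93% means the ANP tunnel carried about 70.07% of the native TCP bytes for the kube-proxy log, and r = 40.85% means about 59.15% for the large log file.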

Thus, compared with a native TCP connection, ANP not only provides higher transmission stability but also greatly reduces public-network traffic. Given that edge clusters can contain tens of thousands of nodes, the new solution can save users substantial public-network traffic costs.

Yurttunnel system architecture

Figure 4: Yurttunnel system architecture

In summary, Yurttunnel consists of the following components:

Yurttunnel Server - Forwards requests sent to nodes by control-plane components such as apiserver, prometheus, and metrics server to the corresponding agent. It includes the following sub-components:

- ANP Proxy Server - A wrapper around the ANP gRPC server that manages long-lived connections with Yurttunnel Agents and forwards requests.

- Iptable Manager - Modifies the DNAT rules on control nodes so that requests from control-plane components are forwarded to Yurttunnel Server.

- Cert Manager - Generates TLS certificates for Yurttunnel Server.

- Request Interceptor - Encapsulates KAS's HTTP requests to nodes into gRPC packets that conform to ANP's rules.

Yurttunnel Agent - Actively establishes a connection with Yurttunnel Server and forwards requests from Yurttunnel Server to the kubelet. It includes two sub-components:

- ANP Proxy Agent - A wrapper around the ANP gRPC agent; compared with upstream, we added a gzip compressor to compress data.

- Cert Manager - Generates TLS certificates for Yurttunnel Agent.

Yurttunnel Server Service - Usually an SLB that distributes requests from control-plane components to an appropriate Yurttunnel Server replica, ensuring high availability and load balancing for Yurttunnel.

Summary and outlook

As an important component recently open-sourced by OpenYurt, Yurttunnel opens up the cloud-edge tunnel of OpenYurt clusters and provides a unified entry point for container O&M on edge clusters. By reworking the upstream solution, Yurttunnel not only provides higher transmission stability but also greatly reduces the amount of data transferred.

OpenYurt was officially open-sourced on May 29, 2020, and has grown rapidly with the help of the community and many developers; only three months after being open-sourced, it became an official CNCF Sandbox-level edge computing cloud-native project. Going forward, OpenYurt will continue to follow its core design philosophy of "Extending your upstream Kubernetes to edge", keeping to the greatest common divisor of technology with upstream while carrying forward the spirit of open-source sharing and advancing the Kubernetes community together with developers.

OpenYurt has been shortlisted for the "2020 Top 10 New Open Source Projects". Please visit https://www.infoq.cn/talk/sQ7eKfv1KW1A0kUafBgv and choose No. 25 to support OpenYurt!

Alibaba Cloud Native: an official account for cloud-native developers, focusing on microservices, Serverless, containers, Service Mesh, and other technical fields, popular cloud-native technology trends, and large-scale cloud-native practice.