Seata

Seata is an open-source distributed transaction solution that aims to provide high-performance, easy-to-use distributed transaction services for microservice architectures. Before it was open-sourced, its internal counterpart served for years as the distributed-consistency middleware across the Alibaba economy, helping it get through many Double 11 shopping festivals and giving strong support to the businesses of every BU. After years of refinement, commercial products built on it have been offered on Alibaba Cloud and Financial Cloud. In January 2019, to build a more complete technology ecosystem and share these results widely, Seata was officially open-sourced. It was well received, and in less than a year it became one of the most popular distributed transaction solutions.

Official website (Chinese): https://seata.io/zh-cn

GitHub repository: https://github.com/seata/seata

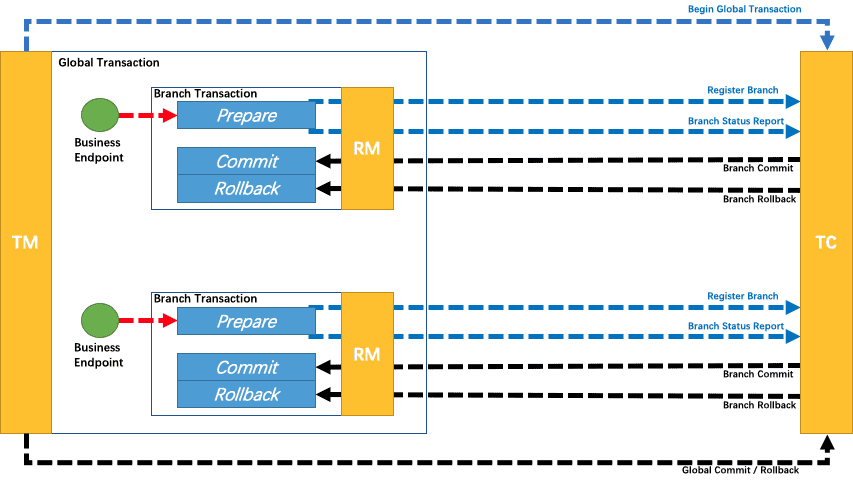

4.1 Seata Terminology

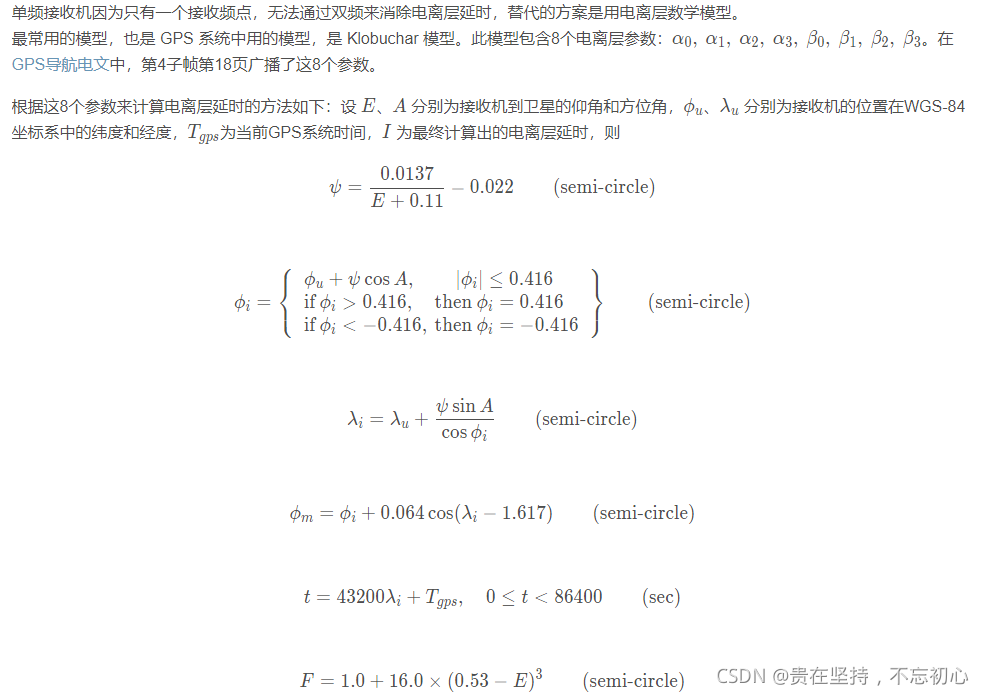

TC (Transaction Coordinator) - transaction coordinator

Maintains the state of global and branch transactions and drives the commit or rollback of the global transaction.

TM (Transaction Manager) - transaction manager

Defines the scope of a global transaction: begins the global transaction and commits or rolls it back.

RM (Resource Manager) - resource manager

Manages the resources of branch transactions. It talks to the TC to register branch transactions and report their status, and it drives the commit or rollback of each branch transaction.
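The following toy in-memory model makes the division of labor between the three roles concrete. It is a minimal sketch under our own assumptions:

* ToyTransactionCoordinator, BranchResource and their methods are hypothetical names, not Seata's API.
* There is no networking, persistence or locking.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical toy model of the TC / TM / RM roles; names are illustrative, not Seata's API.

// Implemented on the RM side: how the TC drives one branch in phase two.
interface BranchResource {
    void commitBranch();
    void rollbackBranch();
}

// Plays the TC: keeps global and branch state and drives phase two.
class ToyTransactionCoordinator {
    enum Status { BEGIN, COMMITTED, ROLLED_BACK }

    private final AtomicLong ids = new AtomicLong();
    private final Map<String, Status> globalStatus = new HashMap<>();
    private final Map<String, List<BranchResource>> branches = new HashMap<>();

    // Called by the TM: open a global transaction and hand back its XID.
    String begin() {
        String xid = "xid-" + ids.incrementAndGet();
        globalStatus.put(xid, Status.BEGIN);
        branches.put(xid, new ArrayList<>());
        return xid;
    }

    // Called by an RM: register a branch transaction under the global XID.
    void registerBranch(String xid, BranchResource branch) {
        branches.get(xid).add(branch);
    }

    // Called by the TM: the TC tells every branch to commit.
    void commit(String xid) {
        branches.get(xid).forEach(BranchResource::commitBranch);
        globalStatus.put(xid, Status.COMMITTED);
    }

    // Called by the TM: the TC tells every branch to roll back, newest branch first.
    void rollback(String xid) {
        List<BranchResource> list = branches.get(xid);
        for (int i = list.size() - 1; i >= 0; i--) {
            list.get(i).rollbackBranch();
        }
        globalStatus.put(xid, Status.ROLLED_BACK);
    }

    Status status(String xid) {
        return globalStatus.get(xid);
    }
}
```

The real components map onto the toy roles as follows:

* hailtaxi-order's method that opens the global transaction plays the TM.
* The proxied data source in each service plays an RM.
* seata-server is the TC.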

Seata aims to provide high-performance, easy-to-use distributed transaction services. It offers the AT, TCC, SAGA and XA transaction modes, giving users a one-stop distributed transaction solution.

4.1 Seata AT Mode

Of the four modes, AT is the most widely used. It is very easy to use, although its internal mechanism is not simple.

For details of AT mode, see the official documentation: https://seata.io/zh-cn/docs/overview/what-is-seata.html

The figure below shows the execution flow of AT mode:

4.1.1 AT Mode and Workflow

See the official documentation: https://seata.io/zh-cn/docs/overview/what-is-seata.html

4.1.2 Seata-Server Installation

When choosing a Seata version, first check the official version compatibility table (you can also pick a Seata version that fits your own requirements):

https://github.com/alibaba/spring-cloud-alibaba/wiki/版本说明 (Version Description)

| Spring Cloud Alibaba Version | Sentinel Version | Nacos Version | RocketMQ Version | Dubbo Version | Seata Version |
|---|---|---|---|---|---|
| 2.2.5.RELEASE | 1.8.0 | 1.4.1 | 4.4.0 | 2.7.8 | 1.3.0 |
| 2.2.3.RELEASE or 2.1.3.RELEASE or 2.0.3.RELEASE | 1.8.0 | 1.3.3 | 4.4.0 | 2.7.8 | 1.3.0 |
| 2.2.1.RELEASE or 2.1.2.RELEASE or 2.0.2.RELEASE | 1.7.1 | 1.2.1 | 4.4.0 | 2.7.6 | 1.2.0 |
| 2.2.0.RELEASE | 1.7.1 | 1.1.4 | 4.4.0 | 2.7.4.1 | 1.0.0 |
| 2.1.1.RELEASE or 2.0.1.RELEASE or 1.5.1.RELEASE | 1.7.0 | 1.1.4 | 4.4.0 | 2.7.3 | 0.9.0 |
| 2.1.0.RELEASE or 2.0.0.RELEASE or 1.5.0.RELEASE | 1.6.3 | 1.1.1 | 4.4.0 | 2.7.3 | 0.7.1 |

We use Spring Cloud Alibaba 2.2.5.RELEASE, whose matching Seata version is 1.3.0, so we first install Seata-Server 1.3.0.

We start it directly with Docker:

```shell
docker run --name seata-server -p 8091:8091 -d -e SEATA_IP=192.168.200.129 -e SEATA_PORT=8091 --restart=on-failure seataio/seata-server:1.3.0
```

4.1.3 Integrating with Spring Cloud Alibaba

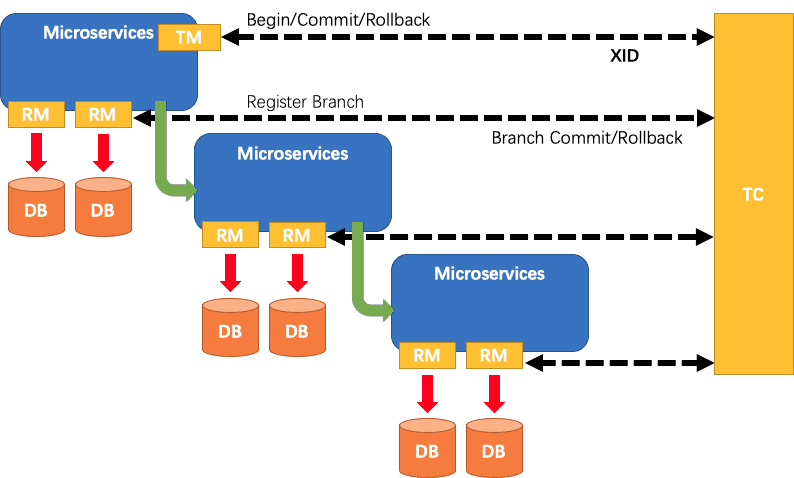

Next, we integrate Seata's AT mode into the project to control distributed transactions. The official project provides many integration examples at:

https://github.com/seata/seata-samples

For any particular integration style, refer to the corresponding sample.

The integration takes four steps:

1: Add the dependency spring-cloud-starter-alibaba-seata

2: Configure Seata

3: Create the proxy data source

4: Control the global transaction with @GlobalTransactional

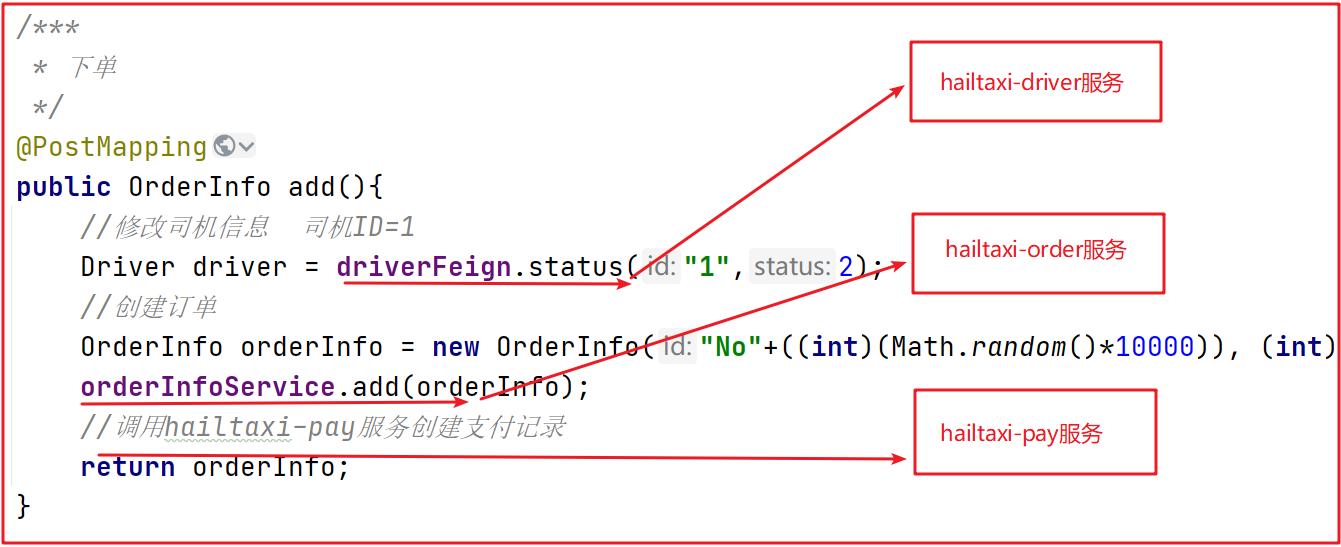

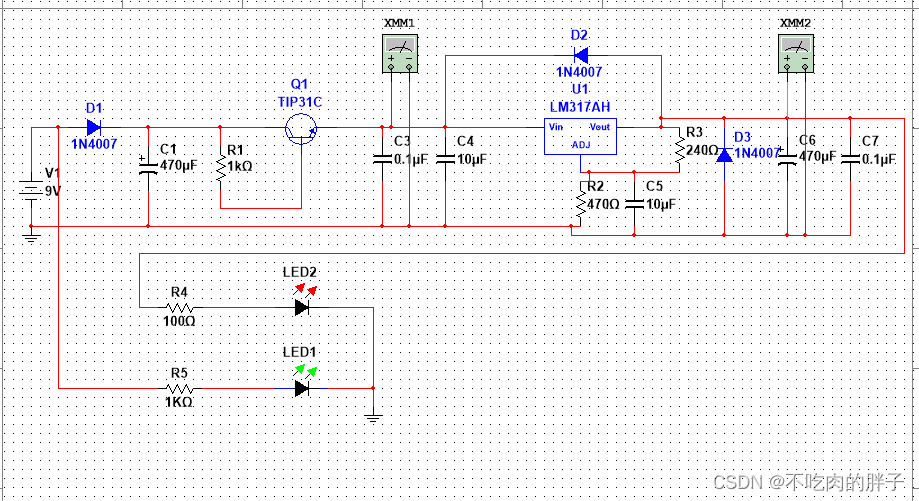

Case requirements:

As shown above, when a user successfully hails a taxi we need to update the driver's status, create an order and record a payment log, and each operation calls a different service. Suppose hailtaxi-driver succeeds but hailtaxi-order fails: how do we roll back across services? This is what distributed transactions are for.

Transactions are normally managed at the service layer, so we modify OrderInfoController#add in hailtaxi-order and move the business logic into the corresponding Service:

```java
/***
 * Place an order
 */
/*@PostMapping
public OrderInfo add(){
    // Update driver information (driver ID=1)
    Driver driver = driverFeign.status("3",2);
    // Create the order
    OrderInfo orderInfo = new OrderInfo("No"+((int)(Math.random()*10000)), (int)(Math.random()*100), new Date(), "Shenzhen North Station", "Luohu Port", driver);
    orderInfoService.add(orderInfo);
    return orderInfo;
}*/

@PostMapping
public OrderInfo add() {
    return orderInfoService.addOrder();
}
```

Implement it in the Service:

```java
@Service
public class OrderInfoServiceImpl implements OrderInfoService {

    @Autowired
    private DriverFeign driverFeign;

    // Mapper that persists OrderInfo rows (used in addOrder below)
    @Autowired
    private OrderInfoMapper orderInfoMapper;

    /**
     * 1. Update driver information (driver ID=1)
     * 2. Create the order
     * @return the created order
     */
    @Override
    public OrderInfo addOrder() {
        // Update driver information (driver ID=1): set status to 2
        Driver driver = driverFeign.status("1", 2);
        // Create the order
        OrderInfo orderInfo = new OrderInfo("No" + ((int) (Math.random() * 10000)), (int) (Math.random() * 100), new Date(), "Shenzhen North Station", "Luohu Port", driver);
        int count = orderInfoMapper.add(orderInfo);
        System.out.println("====count=" + count);
        return orderInfo;
    }
}
```

Case implementation:

0) Create the undo_log table

Create this table in every business database that takes part in the global transaction:

```sql
CREATE TABLE `undo_log` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `branch_id` bigint(20) NOT NULL,
  `xid` varchar(100) NOT NULL,
  `context` varchar(128) NOT NULL,
  `rollback_info` longblob NOT NULL,
  `log_status` int(11) NOT NULL,
  `log_created` datetime NOT NULL,
  `log_modified` datetime NOT NULL,
  PRIMARY KEY (`id`),
  UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;
```

1) Add the dependency

First, add the dependency to both hailtaxi-driver and hailtaxi-order:

```xml
<!--seata-->
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    <version>2.2.5.RELEASE</version>
</dependency>
```

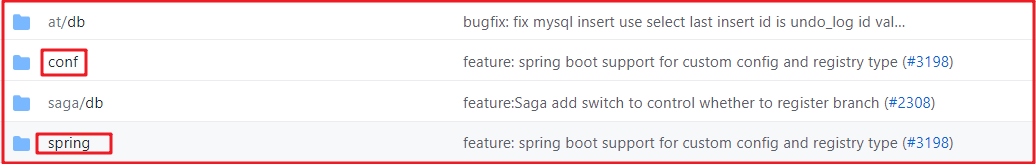

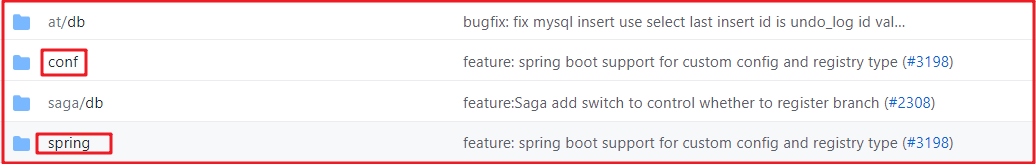

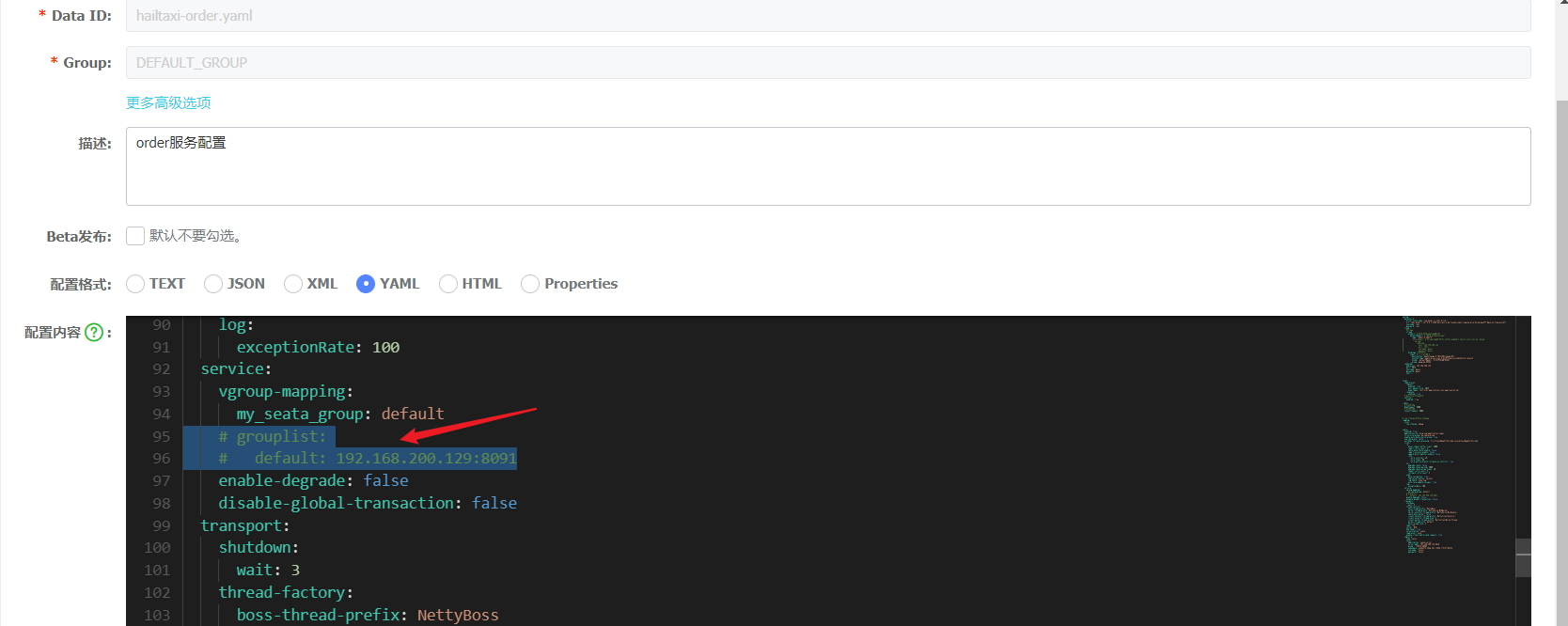

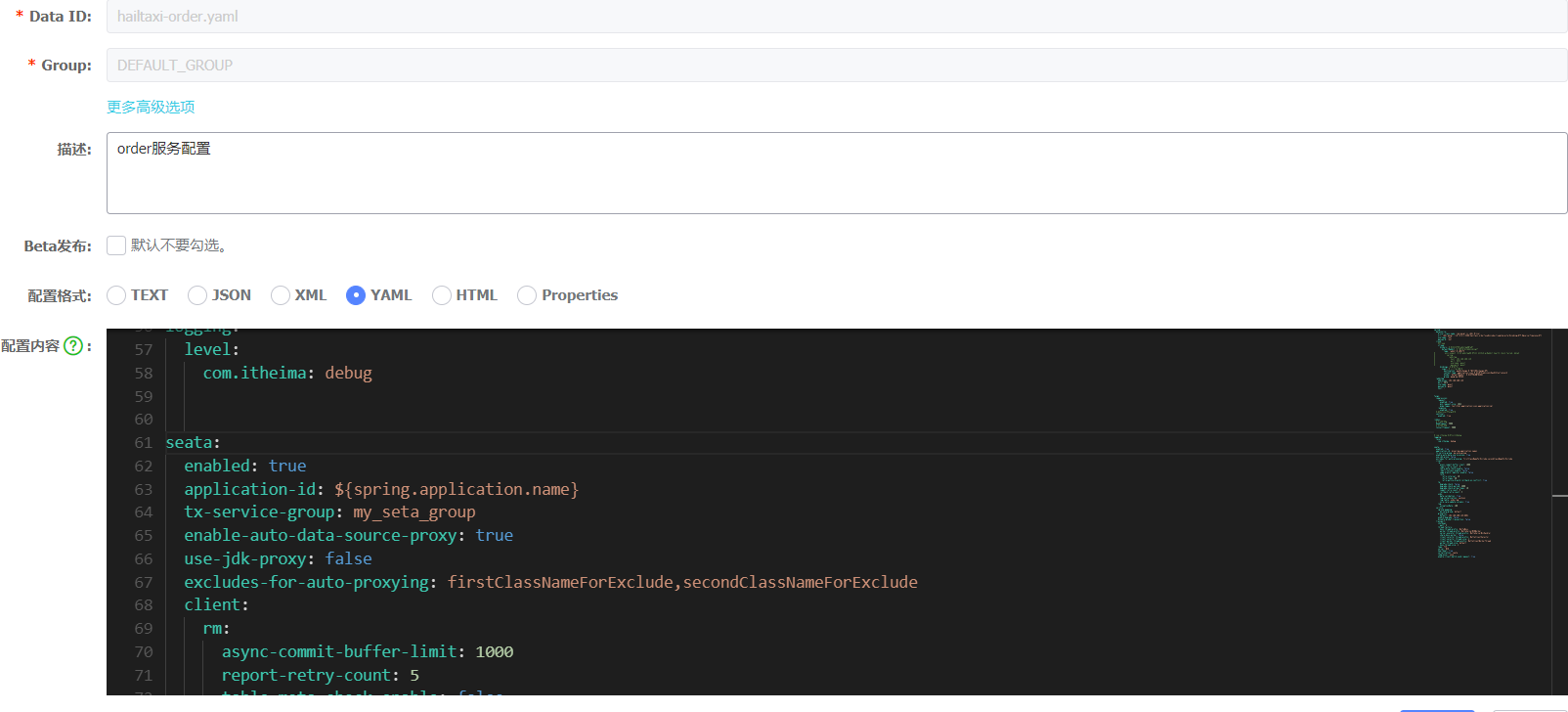

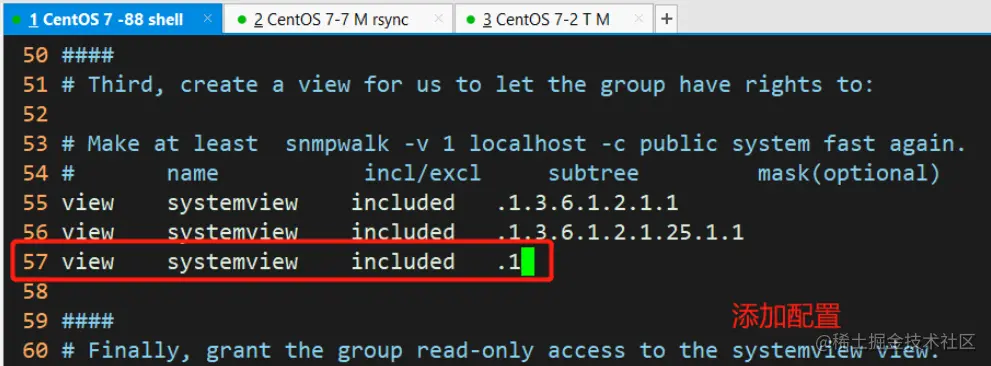

2) Configure Seata

After adding the dependency, we need to configure the Seata client. The official project provides client configuration templates for many setups; see:

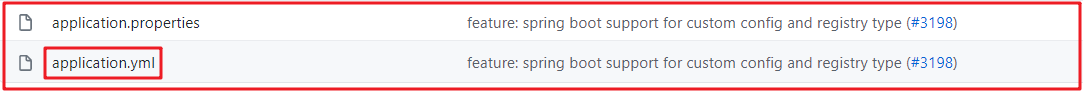

https://github.com/seata/seata/tree/1.3.0/script, as shown in the figure.

We choose the spring template and copy its application.yml directly into the project. The file is here:

https://github.com/seata/seata/blob/1.3.0/script/client/spring/application.yml

After modification, the configuration in both hailtaxi-driver and hailtaxi-order is as follows:

```yaml
seata:
  enabled: true
  application-id: ${spring.application.name}
  tx-service-group: my_seata_group
  enable-auto-data-source-proxy: true
  use-jdk-proxy: false
  excludes-for-auto-proxying: firstClassNameForExclude,secondClassNameForExclude
  client:
    rm:
      async-commit-buffer-limit: 1000
      report-retry-count: 5
      table-meta-check-enable: false
      report-success-enable: false
      saga-branch-register-enable: false
      lock:
        retry-interval: 10
        retry-times: 30
        retry-policy-branch-rollback-on-conflict: true
    tm:
      degrade-check: false
      degrade-check-period: 2000
      degrade-check-allow-times: 10
      commit-retry-count: 5
      rollback-retry-count: 5
    undo:
      data-validation: true
      log-serialization: jackson
      log-table: undo_log
      only-care-update-columns: true
    log:
      exceptionRate: 100
  service:
    vgroup-mapping:
      my_seata_group: default
    grouplist:
      default: 192.168.200.129:8091
    enable-degrade: false
    disable-global-transaction: false
  transport:
    shutdown:
      wait: 3
    thread-factory:
      boss-thread-prefix: NettyBoss
      worker-thread-prefix: NettyServerNIOWorker
      server-executor-thread-prefix: NettyServerBizHandler
      share-boss-worker: false
      client-selector-thread-prefix: NettyClientSelector
      client-selector-thread-size: 1
      client-worker-thread-prefix: NettyClientWorkerThread
      worker-thread-size: default
      boss-thread-size: 1
    type: TCP
    server: NIO
    heartbeat: true
    serialization: seata
    compressor: none
    enable-client-batch-send-request: true
```

The file has many parameters. These are the core ones to understand:

service.vgroup-mapping.my_seata_group: default: the transaction group mapping. The key (my_seata_group) is a name you choose. It maps the transaction group to a TC cluster name (default), which is then used to find that cluster's node information.

tx-service-group: my_seata_group: the application's transaction group. It must match the key defined under vgroup-mapping above.

service.grouplist.default: 192.168.200.129:8091: the address of seata-server for the default cluster.
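The three settings form a two-step lookup:

1. The application's tx-service-group selects a cluster name through vgroup-mapping.
2. The cluster name selects server addresses through grouplist.

The sketch below models only that lookup. TxGroupResolver is a hypothetical class, not part of Seata, and a real client can also resolve the cluster through a registry instead of grouplist.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical model of the client-side lookup:
// tx-service-group -> vgroup-mapping -> cluster name -> grouplist -> seata-server addresses.
class TxGroupResolver {
    private final Map<String, String> vgroupMapping;    // e.g. my_seata_group -> default
    private final Map<String, List<String>> grouplist;  // e.g. default -> [192.168.200.129:8091]

    TxGroupResolver(Map<String, String> vgroupMapping, Map<String, List<String>> grouplist) {
        this.vgroupMapping = vgroupMapping;
        this.grouplist = grouplist;
    }

    // Returns the server addresses for a transaction group; fails if the group is not mapped.
    List<String> resolve(String txServiceGroup) {
        String cluster = vgroupMapping.get(txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no vgroup-mapping entry for " + txServiceGroup);
        }
        return grouplist.getOrDefault(cluster, Collections.emptyList());
    }
}
```

This is why tx-service-group and the key under vgroup-mapping must match: if they don't, the client has no cluster to connect to.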

Note: the configuration is now managed in Nacos, so it can be stored directly in Nacos, in the configurations of:

hailtaxi-order

hailtaxi-driver

3) Proxy the data source

The proxy data source lets Seata write the transaction log (undo log) together with the business data. Early on the proxy data source had to be created manually, but the approach has changed as Seata has evolved. The official notes are:

1.1.0: seata-all removes the property configuration and uses the @EnableAutoDataSourceProxy annotation instead, with a choice of JDK proxy or CGLIB proxy

1.0.0: client.support.spring.datasource.autoproxy=true

0.9.0: support.spring.datasource.autoproxy=true

Our version is 1.3.0, so we only need to add the @EnableAutoDataSourceProxy annotation to the startup classes of hailtaxi-order and hailtaxi-driver:

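```java
import io.seata.spring.annotation.datasource.EnableAutoDataSourceProxy;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.client.discovery.EnableDiscoveryClient;
import org.springframework.cloud.openfeign.EnableFeignClients;

@SpringBootApplication
@EnableDiscoveryClient
@EnableFeignClients(basePackages = {"com.itheima.driver.feign"})
@EnableAutoDataSourceProxy
public class OrderApplication {

    public static void main(String[] args) {
        SpringApplication.run(OrderApplication.class, args);
    }
}
```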
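Conceptually, the proxy sits between the business code and the real data source, so Seata can do extra work around each statement. The sketch below shows only that interception idea, using the JDK's java.lang.reflect.Proxy (the "jdk proxy" option mentioned above).

Its assumptions:

* OrderStore, RealOrderStore and LoggingProxyFactory are hypothetical classes.
* Seata's actual DataSourceProxy works at the JDBC Connection/Statement level and records before and after images of rows, not method names.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the business-facing data source interface.
interface OrderStore {
    int insert(String orderNo);
}

// The "real" resource: pretends one row was written.
class RealOrderStore implements OrderStore {
    public int insert(String orderNo) {
        return 1;
    }
}

class LoggingProxyFactory {
    // Wraps target in a JDK dynamic proxy that records each OrderStore call before forwarding it.
    static OrderStore wrap(OrderStore target, List<String> callLog) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (method.getDeclaringClass() == OrderStore.class) {
                // Seata would capture before/after images here; this sketch only records the call.
                callLog.add(method.getName() + ":" + args[0]);
            }
            // Forward every call (including toString/equals/hashCode) to the real object.
            return method.invoke(target, args);
        };
        return (OrderStore) Proxy.newProxyInstance(
                OrderStore.class.getClassLoader(),
                new Class<?>[] {OrderStore.class},
                handler);
    }
}
```

The business code keeps calling OrderStore as before. The proxy does the extra work transparently, which is why enabling the proxy needs no changes to the mapper or service code.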

4) Global transaction control

The order is created when a customer successfully hails a taxi. This happens in hailtaxi-order, which also calls hailtaxi-driver through Feign, so hailtaxi-order is the entry point of the global transaction. We add @GlobalTransactional to OrderInfoServiceImpl.addOrder(), which makes this method the entry of the global transaction:

```java
@Override
@GlobalTransactional
public OrderInfo addOrder() {
    // Update driver information (driver ID=1)
    Driver driver = driverFeign.status("1", 2);
    // Create the order
    OrderInfo orderInfo = new OrderInfo("No" + ((int) (Math.random() * 10000)), (int) (Math.random() * 100), new Date(), "Shenzhen North Station", "Luohu Port", driver);
    int count = orderInfoMapper.add(orderInfo);
    System.out.println("====count=" + count);
    return orderInfo;
}
```
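What the annotation does can be pictured as a template wrapped around addOrder(): begin, run the business method, then commit or roll back. The following is a hedged sketch, not Seata's implementation.

* TxCoordinator and GlobalTxTemplate are hypothetical names.
* The sketch only rolls back on RuntimeException.
* It does not model how the real integration passes the XID to hailtaxi-driver along with the Feign request.

```java
import java.util.function.Supplier;

// Hypothetical coordinator interface standing in for the TM's calls to seata-server (the TC).
interface TxCoordinator {
    String begin();
    void commit(String xid);
    void rollback(String xid);
}

// Hypothetical begin / run / commit-or-rollback template around a business method.
class GlobalTxTemplate {
    static <T> T execute(TxCoordinator tc, Supplier<T> business) {
        String xid = tc.begin();             // TM: begin the global transaction
        T result;
        try {
            result = business.get();         // run the business method, e.g. addOrder()
        } catch (RuntimeException e) {
            tc.rollback(xid);                // it threw: ask the TC to roll back all branches
            throw e;                         // rethrow so the caller still sees the failure
        }
        tc.commit(xid);                      // it finished normally: ask the TC to commit all branches
        return result;
    }
}
```

With this shape, the `int i = 1 / 0;` used in the test below leads to a rollback call instead of a commit.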

5) Testing the distributed transaction

1. Normal case: start the services and test.

Manually set the status of the driver with id=1 to 1, then run the test.

2. Exception case: add an exception to the service method in hailtaxi-order:

```java
@Override
@GlobalTransactional
public OrderInfo addOrder() {
    // Update driver information (driver ID=1)
    Driver driver = driverFeign.status("1", 2);
    // Create the order
    OrderInfo orderInfo = new OrderInfo("No" + ((int) (Math.random() * 10000)), (int) (Math.random() * 100), new Date(), "Shenzhen North Station", "Luohu Port", driver);
    int count = orderInfoMapper.add(orderInfo);
    System.out.println("====count=" + count);
    // Simulate an exception
    int i = 1 / 0;
    return orderInfo;
}
```

Before testing, manually reset the status of the driver with id=1 to 1 and empty the order table. Then run the test again and check whether the driver status was updated and whether an order was added. If the distributed transaction is controlled correctly, neither change remains after the exception.

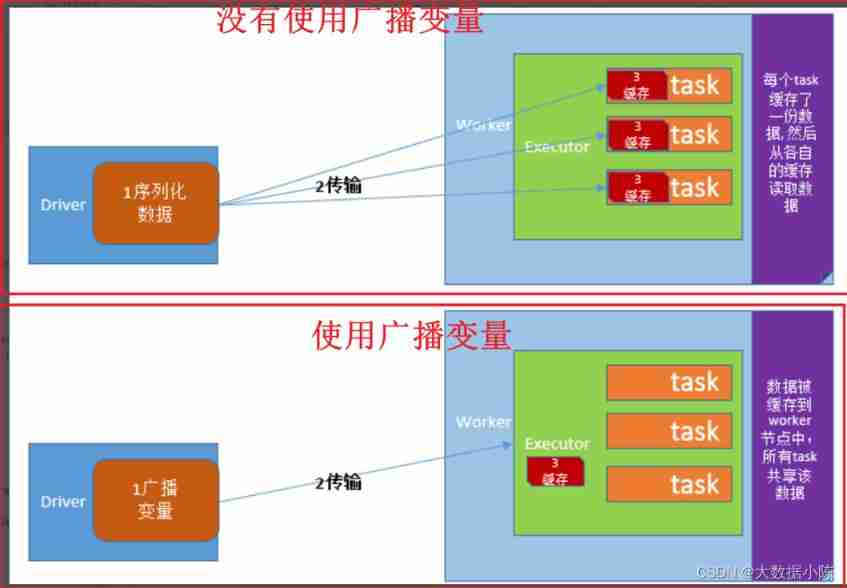

4.2 Seata TCC Mode

A distributed global transaction as a whole follows the two-phase commit model. A global transaction consists of several branch transactions, and each branch transaction must also fit the two-phase commit model. That is, each branch transaction has its own:

- Phase one: prepare behavior

- Phase two: commit or rollback behavior

Depending on how these two-phase behaviors are implemented, branch transactions are divided into Automatic (Branch) Transaction Mode and Manual (Branch) Transaction Mode.

AT mode is based on relational databases that support local ACID transactions:

- Phase one (prepare): within one local transaction, commit the business data update together with the corresponding rollback log record.

- Phase two (commit): finish immediately, and clean up the rollback logs automatically in asynchronous batches.

- Phase two (rollback): automatically generate compensating operations from the rollback log to restore the data.
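The following in-memory sketch of one AT branch shows why the rollback can be automatic:

* Phase one records the row's before image alongside the update.
* Commit only deletes the undo records.
* Rollback writes the before images back.

It is a simplification under our own assumptions:

* A Map stands in for a business table and a List for undo_log.
* AtBranchSketch is a hypothetical name.
* It omits the global row locks and the after-image check that Seata performs (the data-validation option in the client configuration).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of one AT branch. A Map stands in for a business table and a
// List for the undo_log table; global locks and after-image validation are omitted.
class AtBranchSketch {
    static final class UndoRecord {
        final String xid;
        final String key;
        final Integer beforeImage;   // null means the row did not exist before

        UndoRecord(String xid, String key, Integer beforeImage) {
            this.xid = xid;
            this.key = key;
            this.beforeImage = beforeImage;
        }
    }

    final Map<String, Integer> table = new HashMap<>();   // e.g. driver id -> status
    final List<UndoRecord> undoLog = new ArrayList<>();

    // Phase one: record the before image, then apply the update
    // (in Seata both are written in one local database transaction).
    void update(String xid, String key, int newValue) {
        undoLog.add(new UndoRecord(xid, key, table.get(key)));
        table.put(key, newValue);
    }

    // Phase two commit: the data is already final, so only the undo records are removed.
    void commit(String xid) {
        deleteUndo(xid);
    }

    // Phase two rollback: restore before images newest-first, then remove the undo records.
    void rollback(String xid) {
        for (int i = undoLog.size() - 1; i >= 0; i--) {
            UndoRecord u = undoLog.get(i);
            if (!u.xid.equals(xid)) {
                continue;
            }
            if (u.beforeImage == null) {
                table.remove(u.key);
            } else {
                table.put(u.key, u.beforeImage);
            }
        }
        deleteUndo(xid);
    }

    private void deleteUndo(String xid) {
        undoLog.removeIf(u -> u.xid.equals(xid));
    }
}
```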

TCC mode, by contrast, does not rely on transaction support from the underlying data resources:

- Phase one (prepare): call custom prepare logic.

- Phase two (commit): call custom commit logic.

- Phase two (rollback): call custom rollback logic.
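A TCC branch supplies all three behaviors itself. The sketch below applies that idea to the driver-status change from the taxi example.

* DriverStatusTcc is hypothetical.
* The status encoding (1 = free, 2 = busy) is an assumption based on the test steps above.
* State is kept in memory rather than in a database.

```java
// Hypothetical TCC action for the driver-status change; names and status encoding are assumptions.
class DriverStatusTcc {
    private int status = 1;          // assumed: 1 = free, 2 = busy
    private boolean frozen = false;  // reservation made by phase one

    // Phase one (prepare): check and reserve the driver without changing the visible status.
    boolean prepare() {
        if (status != 1 || frozen) {
            return false;
        }
        frozen = true;
        return true;
    }

    // Phase two (commit): apply the change reserved in phase one.
    void commit() {
        if (frozen) {
            status = 2;
            frozen = false;
        }
    }

    // Phase two (rollback): release the reservation; harmless if prepare never ran.
    void rollback() {
        frozen = false;
    }

    int status() {
        return status;
    }
}
```

Because each phase is ordinary business code, a TCC branch can wrap resources that have no local transaction at all, which is the point of the "do not rely on the underlying data resources" remark above.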

TCC mode therefore means bringing custom branch transactions under the management of the global transaction.

How TCC works:

A TCC interceptor wraps the Confirm and Cancel methods as resources, for the TC's later commit or rollback. Once wrapped, they are cached locally in the RM. What is cached is the method's descriptive information, which can be thought of simply as a Map. When the TC needs to call one of them, the RM looks the method up in this Map and invokes it via reflection.

The RM also does more than register branch transactions, which are registered into the GlobalSession in the TC. It registers the important attributes of the resource it just wrapped (business ID, owning transaction group) as a resource in the TC's RpcContext. This way the TC knows which branch transactions exist in the current global transaction. All of this happens during the initialization of the branch transactions.

For example, suppose the RpcContext holds resources 1, 2 and 3, but the GlobalSession holds only branch transactions 1 and 2. The TC then knows that the resource for branch 3 has been registered but branch 3 itself has not. When the TM tells the TC to commit or roll back, the GlobalSession uses the RpcContext to locate branches 1 and 2 (for example, which method to call). When the RM receives the commit or rollback request, it finds the corresponding method in its local cache and finally invokes the real Confirm or Cancel through reflection or another mechanism.
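The mechanism described above boils down to two parts: a local cache from resource ID to the Confirm and Cancel methods, and a reflective call when the TC asks for phase two. This is a minimal sketch under our own assumptions:

* TccResourceCache and SampleTccAction are hypothetical.
* The methods take no arguments.
* In real Seata TCC, the methods are declared through the @TwoPhaseBusinessAction annotation's commitMethod and rollbackMethod attributes, and they receive a BusinessActionContext.

```java
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

// Hypothetical TCC bean whose confirm/cancel methods the cache will call reflectively.
class SampleTccAction {
    public String confirm() {
        return "confirmed";
    }

    public String cancel() {
        return "cancelled";
    }
}

// Hypothetical RM-side cache: resource ID -> (bean, Confirm method, Cancel method).
class TccResourceCache {
    private static final class Resource {
        final Object bean;
        final Method confirm;
        final Method cancel;

        Resource(Object bean, Method confirm, Method cancel) {
            this.bean = bean;
            this.confirm = confirm;
            this.cancel = cancel;
        }
    }

    private final Map<String, Resource> cache = new HashMap<>();

    // Done once when the interceptor wraps the bean: look up and cache the method descriptors.
    void register(String resourceId, Object bean, String confirmName, String cancelName) {
        try {
            Class<?> type = bean.getClass();
            cache.put(resourceId, new Resource(bean, type.getMethod(confirmName), type.getMethod(cancelName)));
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException("missing TCC method on " + bean.getClass(), e);
        }
    }

    // Done when the TC sends phase two: find the cached method and invoke it reflectively.
    Object branchComplete(String resourceId, boolean commit) {
        Resource r = cache.get(resourceId);
        if (r == null) {
            throw new IllegalStateException("unknown TCC resource " + resourceId);
        }
        try {
            return (commit ? r.confirm : r.cancel).invoke(r.bean);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("phase-two call failed for " + resourceId, e);
        }
    }
}
```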

5 Seata Registry

As https://github.com/seata/seata/tree/1.3.0/script shows, Seata supports multiple registries.

5.1 Server-Side Registry Configuration

The server-side registry is set by the registry.type parameter in seata-server's registry.conf. For a highly available seata-server cluster the file type is not used. A third-party registry such as ZooKeeper, Redis, Eureka or Nacos is used instead.

Here we use Nacos. Because we started Seata with Docker, we can enter the container directly and edit the configuration file /resources/registry.conf. The registry.conf of seata-server is configured as follows:

```
registry {
  # file ...nacos ...eureka...redis...zk...consul...etcd3...sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "192.168.200.129:8848"
    group = "SEATA_GROUP"
    namespace = "1ebba5f6-49da-40cc-950b-f75c8f7d07b3"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
}
```

Then restart the container and visit http://192.168.200.129:8848/nacos to check whether seata-server has registered with Nacos.

5.2 Client-Side Registry Configuration

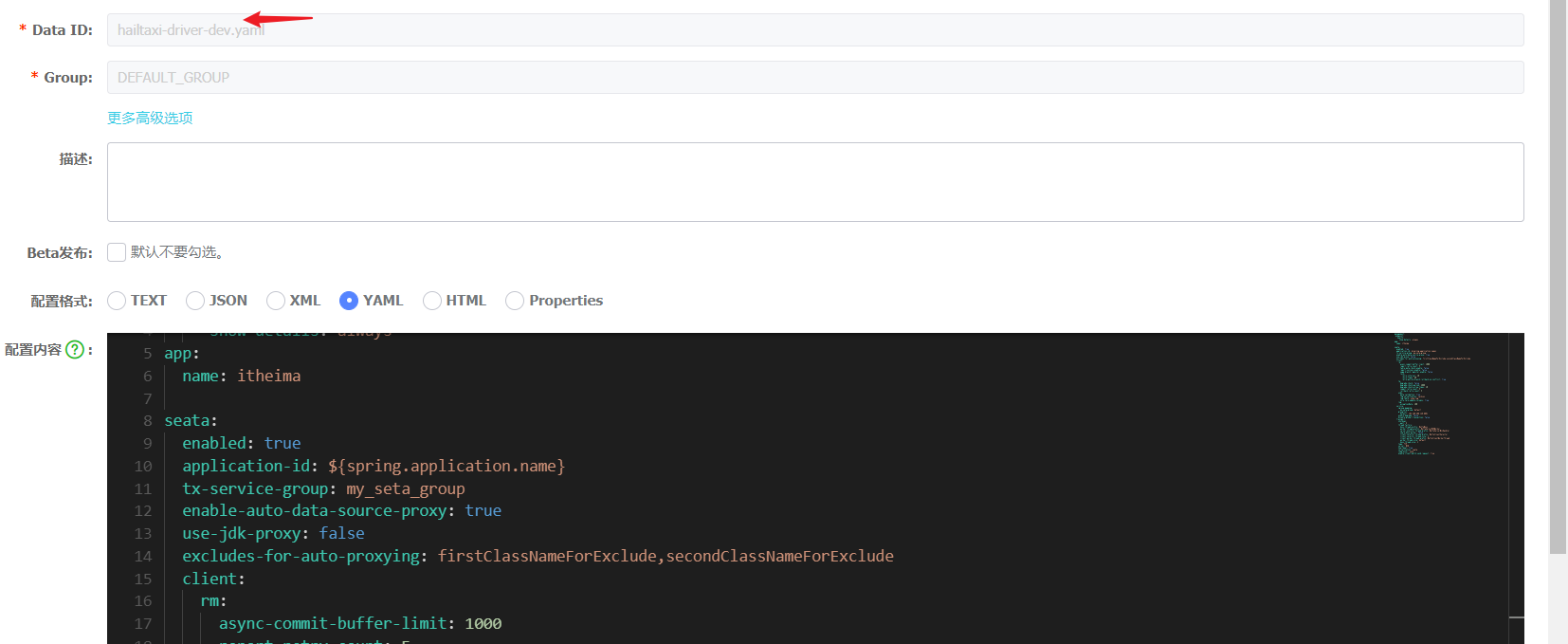

The project must also use the registry. Add the following configuration under the seata key, modifying both hailtaxi-order.yaml and hailtaxi-driver-dev.yaml in the Nacos configuration center:

see :https://github.com/seata/seata/tree/1.3.0/script

```yaml
seata:
  registry:
    type: nacos
    nacos:
      application: seata-server
      server-addr: 192.168.200.129:8848
      group: "SEATA_GROUP"
      namespace: 1ebba5f6-49da-40cc-950b-f75c8f7d07b3
      username: "nacos"
      password: "nacos"
```

You can now comment out the static server address (service.grouplist.default: 192.168.200.129:8091), because the client finds seata-server through Nacos.

The full configuration is as follows:

```yaml
seata:
  enabled: true
  application-id: ${spring.application.name}
  tx-service-group: my_seata_group
  enable-auto-data-source-proxy: true
  use-jdk-proxy: false
  excludes-for-auto-proxying: firstClassNameForExclude,secondClassNameForExclude
  client:
    rm:
      async-commit-buffer-limit: 1000
      report-retry-count: 5
      table-meta-check-enable: false
      report-success-enable: false
      saga-branch-register-enable: false
      lock:
        retry-interval: 10
        retry-times: 30
        retry-policy-branch-rollback-on-conflict: true
    tm:
      degrade-check: false
      degrade-check-period: 2000
      degrade-check-allow-times: 10
      commit-retry-count: 5
      rollback-retry-count: 5
    undo:
      data-validation: true
      log-serialization: jackson
      log-table: undo_log
      only-care-update-columns: true
    log:
      exceptionRate: 100
  service:
    vgroup-mapping:
      my_seata_group: default
    #grouplist:
      #default: 192.168.200.129:8091
    enable-degrade: false
    disable-global-transaction: false
  transport:
    shutdown:
      wait: 3
    thread-factory:
      boss-thread-prefix: NettyBoss
      worker-thread-prefix: NettyServerNIOWorker
      server-executor-thread-prefix: NettyServerBizHandler
      share-boss-worker: false
      client-selector-thread-prefix: NettyClientSelector
      client-selector-thread-size: 1
      client-worker-thread-prefix: NettyClientWorkerThread
      worker-thread-size: default
      boss-thread-size: 1
    type: TCP
    server: NIO
    heartbeat: true
    serialization: seata
    compressor: none
    enable-client-batch-send-request: true
  registry:
    type: nacos
    nacos:
      application: seata-server
      server-addr: 192.168.200.129:8848
      group: "SEATA_GROUP"
      namespace: 1ebba5f6-49da-40cc-950b-f75c8f7d07b3
      username: "nacos"
      password: "nacos"
```

Test:

Start the services and test again to check whether distributed transactions are still controlled correctly.

6 Seata High Availability

seata-server currently runs as a single node, and it is worth asking whether a single node can withstand high concurrency. Almost every production project must guarantee high concurrency and high availability, so production deployments are generally clustered.

The configuration above only switches the registry to Nacos and still runs a single instance. High availability requires a cluster, and the cluster must keep data synchronized between its nodes (session sharing).

We will prepare 2 seata-server nodes. seata-server supports 3 modes for storing transaction logs:

1): file (cannot be used for a cluster)

2): redis

3): db

We choose Redis to store the session information so that the nodes share it.
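File mode cannot form a cluster because each node's sessions live only on its own disk. A second node therefore cannot see a global transaction begun on the first. The sketch below illustrates this with a ConcurrentHashMap standing in for Redis. SeataNode is a hypothetical class, not seata-server code.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical seata-server node; the injected map stands in for its session store.
class SeataNode {
    private final Map<String, String> sessionStore;   // xid -> global transaction status

    SeataNode(Map<String, String> sessionStore) {
        this.sessionStore = sessionStore;
    }

    // A client's global transaction begins on this node.
    void begin(String xid) {
        sessionStore.put(xid, "Begin");
    }

    // Any node asked about the transaction looks it up in its store.
    String statusOf(String xid) {
        return sessionStore.getOrDefault(xid, "NotFound");
    }
}
```

When both nodes receive the same store (the Redis case), a transaction begun on one node is visible on the other. When each node has its own store (the file case), it is not.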

1. Start the second seata-server node

```shell
docker run --name seata-server-n2 -p 8092:8092 -d -e SEATA_IP=192.168.200.129 -e SEATA_PORT=8092 --restart=on-failure seataio/seata-server:1.3.0
```

2. Enter the container, edit registry.conf and add the registry configuration:

```
registry {
  # file ...nacos ...eureka...redis...zk...consul...etcd3...sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "192.168.200.129:8848"
    group = "SEATA_GROUP"
    namespace = "1ebba5f6-49da-40cc-950b-f75c8f7d07b3"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
}
```

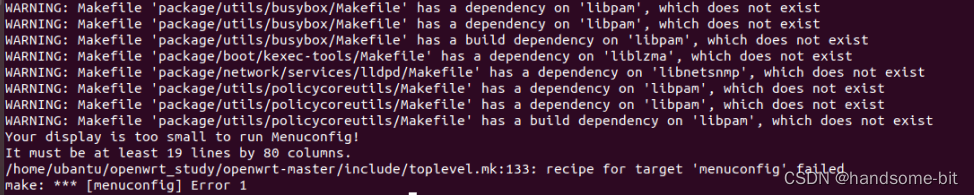

3. Change seata-server's transaction log storage mode in resources/file.conf as follows:

We store the transaction logs of all cluster nodes in Redis. Start a Redis instance with Docker:

```shell
docker run --name redis6.2 --restart=on-failure -p 6379:6379 -d redis:6.2
```

Then modify seata-server's file.conf as follows:

```
## transaction log store, only used in seata-server
store {
  ## store mode: file...db...redis
  mode = "redis"

  ## file store property
  file {
    ## store location dir
    dir = "sessionStore"
    # branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions
    maxBranchSessionSize = 16384
    # globe session size , if exceeded throws exceptions
    maxGlobalSessionSize = 512
    # file buffer size , if exceeded allocate new buffer
    fileWriteBufferCacheSize = 16384
    # when recover batch read size
    sessionReloadReadSize = 100
    # async, sync
    flushDiskMode = async
  }

  ## database store property
  db {
    ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.
    datasource = "druid"
    ## mysql/oracle/postgresql/h2/oceanbase etc.
    dbType = "mysql"
    driverClassName = "com.mysql.jdbc.Driver"
    url = "jdbc:mysql://127.0.0.1:3306/seata"
    user = "mysql"
    password = "mysql"
    minConn = 5
    maxConn = 30
    globalTable = "global_table"
    branchTable = "branch_table"
    lockTable = "lock_table"
    queryLimit = 100
    maxWait = 5000
  }

  ## redis store property
  redis {
    host = "192.168.200.129"
    port = "6379"
    password = ""
    database = "0"
    minConn = 1
    maxConn = 10
    queryLimit = 100
  }
}
```

If you store seata-server's transaction log data in a DB instead, you need to create a database named seata. The table definitions are at: https://github.com/seata/seata/blob/1.3.0/script/server/db/mysql.sql

Restart seata-server after making the changes.

Note: the other seata-server node must also have its transaction log storage mode changed.

4. Start the services and test again to check whether distributed transactions are still controlled correctly.

This article was published by the Wild Architect teaching and research team of Wisdom Education Valley.

