PrefaceSpeaking of Elasticsearch , One of the most obvious features is near real-time Quasi real-time —— When documents are stored in Elasticsearch In the middle of the day , Will be in 1 It's indexed and fully searched within seconds in almost real time . Then why ES It's quasi real time ?

official account :『 Liu Zhihang 』, Record the skills in work study 、 Development and source notes ; From time to time to share some of the life experience . You are welcome to guide !

Lucene and ES

Lucene

Lucene yes Elasticsearch Based on the Java library , It introduces the concept of search by segment .

Segment: It's also called Duan , Similar to inverted index , It's equivalent to a dataset .

Commit point: Submission point , Records all known segments .

Lucene index: “a collection of segments plus a commit point”. By a pile Segment Add a commit point to the collection of .

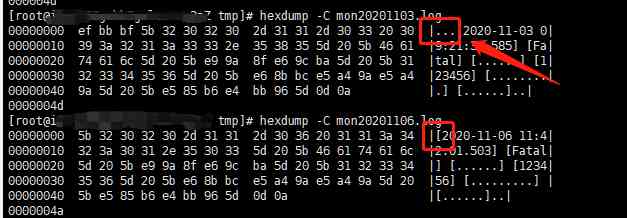

For one Lucene index The composition of , As shown in the figure below .

Elasticsearch

One Elasticsearch Index By one or more shard ( Fragmentation ) form .

and Lucene Medium Lucene index amount to ES One of the shard.

Write process

Write process 1.0 ( Imperfect )

- Keep going Document Write to In-memory buffer ( Memory buffer ).

- When certain conditions are satisfied, the memory buffer in the Documents Refresh to disk .

- Generate a new segment And one. Commit point Submission point .

- This segment It can be like anything else segment The same was read .

The picture is as follows :

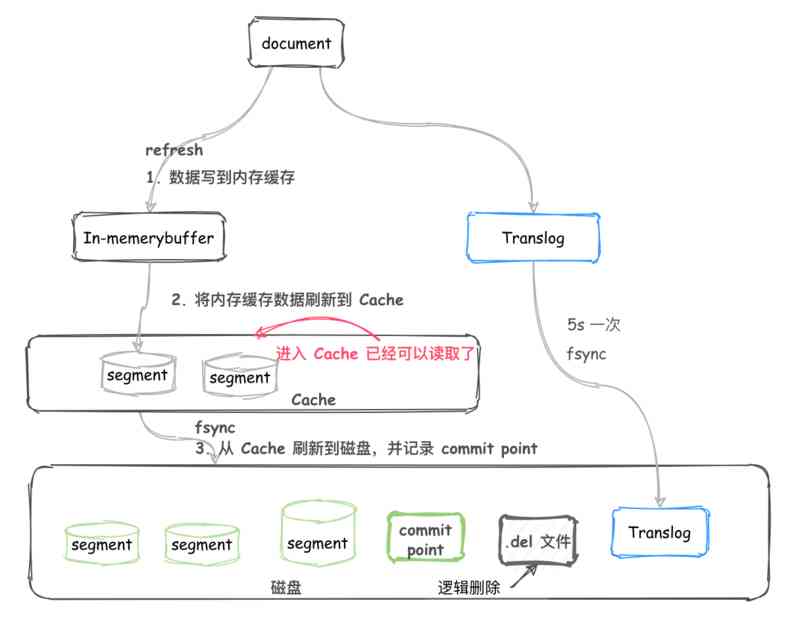

Flushing files to disk is very resource intensive , And there's a cache between the memory buffer and the disk (cache), Once the file enters cache It's like on disk segment The same was read .

Write process 2.0

- Keep going Document Write to In-memory buffer ( Memory buffer ).

- When certain conditions are satisfied, the memory buffer in the Documents Refresh to Cache (cache).

- Generate a new segment , This segment still cache in .

- At this time there is no commit , But it can be read .

The picture is as follows :

Data from buffer To cache The process is to refresh once a second on a regular basis . So the newly written Document The slowest 1 Seconds can be in the cache It was found in .

and Document from buffer To cache The process is called ?refresh . It's usually 1 Refresh every second , No additional modifications are required . Of course , If there is a need to modify , You can refer to the relevant information at the end of the paper . That's why we say Elasticsearch yes Quasi real-time Of .

Make the document immediately visible :

PUT /test/_doc/1?refresh

{"test": "test"}

// perhaps

PUT /test/_doc/2?refresh=true

{"test": "test"}Translog Transaction log

Here we can associate Mysql Of binlog, ES There is also a translog For failure recovery .

- Document Keep writing to In-memory buffer, At this time, we will add translog.

- When buffer Data per second in refresh To cache In the middle of the day ,translog No access to refresh to disk , It's a continuous addition .

- translog every other 5s Meeting fsync To disk .

- translog It's going to keep accumulating and getting bigger and bigger , When translog To a certain extent or at regular intervals , Will execute flush.

flush The operation is divided into the following steps :

- buffer Be emptied .

- Record commit point.

- cache Internal segment By fsync Refresh to disk .

- translog Be deleted .

It is worth noting that :

- translog Every time 5s Refresh the disk once , So the fault restarts , May lose 5s The data of .

- translog perform flush operation , Default 30 Minutes at a time , perhaps translog Too big Will perform .

Do it manually flush:

POST /my-index-000001/_flushDelete and update

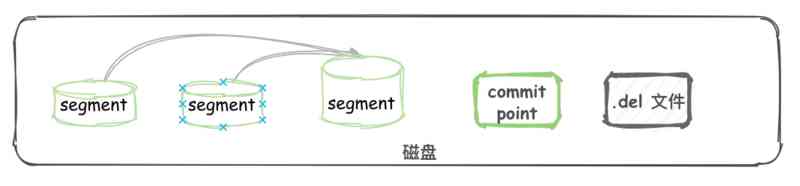

segment It can't be changed , therefore docment Not from the previous segment Remove or update .

So every time commit, Generate commit point when , There will be one. .del file , It will list the deleted document( Logical deletion ).

And when you query , The result will pass through before returning .del Filter .

update , It also marks the old docment Be deleted , Write to .del file , At the same time, a new file will be written . At this time, the query will query the data of two versions , But one will be removed before returning .

segment Merge

Every time 1s Do it once refresh Will create a data in memory segment.

segment Too many will cause more trouble . every last segment Will consume file handle 、 Memory and cpu Operation cycle . what's more , Each search request must be checked for each in turn segment ; therefore segment The more , The slower the search .

stay ES There will be a thread in the background segment Merge .

- refresh The operation creates a new segment And open it for search .

- The merge process selects a small number of similar size segment, And merge them in the background into a larger segment in . It doesn't interrupt indexing and searching .

When the merger ends , old segment Be deleted Describe the activity at the completion of the merge :

- new segment Has been refreshed (flush) To disk . Write a containing new segment And exclude the old and the smaller segment The new commit point.

- new segment Opened to search for .

- old segment Be deleted .

Physical delete :

stay segment merge This piece of , Those that are logically deleted document Will be deleted by the real physical .

summary

This paper mainly introduces the process of internal write and delete , Need to know refresh、fsync、flush、.del、segment merge The concrete meaning of a noun .

The complete picture is as follows :

That's what I share with you ES Related content , The main purpose is to share technology within the group , To carry out literacy . Wrong place , I hope you can correct me .

Related information

- Quasi real time search : https://www.elastic.co/guide/...

- Refresh API:https://www.elastic.co/guide/...

- Flush API:https://www.elastic.co/guide/...