
[Reading papers] Deep Learning Face Representation by Joint Identification-Verification (DeepID2): deep learning applied to optimization problems

2022-06-13 02:25:00 【Shameful child】

Deep Learning Face Representation by Joint Identification-Verification

- The key challenge of face recognition is to develop effective feature representations that reduce intra-personal variations while enlarging inter-personal differences.

- The face identification task increases inter-personal variations by drawing DeepID2 features extracted from different identities apart, while the face verification task reduces intra-personal variations by pulling DeepID2 features extracted from the same identity together.

- On the challenging LFW dataset, the face verification accuracy reaches 99.15%.

Face verification

Faces of the same identity can look very different under different poses, illumination, expressions, ages, and occlusions. Reducing intra-personal variations while enlarging inter-personal differences is an eternal topic in face recognition research.

Traditional face recognition methods: LDA, Bayesian face, and unified subspace.

LDA approximates the inter-personal and intra-personal face variations with two linear subspaces and finds the projection directions that maximize the ratio between them.

LDA is a supervised dimensionality reduction technique: every sample in its dataset carries a class label. This differs from PCA, which is an unsupervised dimensionality reduction technique that ignores class labels.

LDA can be summed up in one sentence: "after projection, minimize the within-class variance and maximize the between-class variance". When the data are projected onto a lower dimension, the projected points of each class should be as close together as possible, and the class centers of different classes should be as far apart as possible.

Suppose there are two classes of data, red and blue, with two-dimensional features. These data are projected onto a one-dimensional line so that the projected points of each class are as close together as possible, while the distance between the red and blue class centers is as large as possible.

Necessary basic knowledge of mathematics

- Assumption: the data follow a Gaussian distribution (the theoretical basis).

- The Rayleigh quotient and the generalized Rayleigh quotient.

- The Rayleigh quotient is the function $R(A,x)=\frac{x^HAx}{x^Hx}$, where $x$ is a non-zero vector and $A$ is an $n\times n$ Hermitian matrix. A Hermitian matrix is a matrix that equals its own conjugate transpose, namely $A^H=A$. If $A$ is a real matrix, it is Hermitian when $A^T=A$.

- The Rayleigh quotient has a very important property: its maximum equals the largest eigenvalue of $A$ and its minimum equals the smallest eigenvalue of $A$, that is, $\lambda_{min}\le\frac{x^HAx}{x^Hx}\le\lambda_{max}$. When $x$ is normalized so that $x^Hx=1$, the Rayleigh quotient reduces to $R(A,x)=x^HAx$, a form that also appears in PCA.

- The generalized Rayleigh quotient is the function $R(A,B,x)=\frac{x^HAx}{x^HBx}$, where $x$ is a non-zero vector, $A$ and $B$ are both $n\times n$ Hermitian matrices, and $B$ is positive definite.

- It can be converted to the standard Rayleigh quotient form by a change of variables. Let $x=B^{-\frac{1}{2}}x'$. The denominator then becomes $x^HBx=x'^H(B^{-\frac{1}{2}})^HBB^{-\frac{1}{2}}x'=x'^HB^{-\frac{1}{2}}BB^{-\frac{1}{2}}x'=x'^Hx'$, and the numerator becomes $x^HAx=x'^HB^{-\frac{1}{2}}AB^{-\frac{1}{2}}x'$.

- $R(A,B,x)$ therefore becomes $R(A,B,x')=\frac{x'^HB^{-\frac{1}{2}}AB^{-\frac{1}{2}}x'}{x'^Hx'}$.

- By the property of the Rayleigh quotient, the maximum of $R(A,B,x')$ is the largest eigenvalue of the matrix $B^{-\frac{1}{2}}AB^{-\frac{1}{2}}$, or equivalently of the matrix $B^{-1}A$, and the minimum is the smallest eigenvalue of $B^{-1}A$.
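
The eigenvalue bounds above can be checked numerically. The following is a minimal NumPy sketch, assuming real symmetric matrices (the real-valued special case of Hermitian) and randomly generated illustrative data. The helper names `rayleigh` and `generalized_rayleigh` are hypothetical and not taken from any library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative data (assumption): A is real symmetric, B is symmetric positive definite.
M = rng.standard_normal((4, 4))
A = (M + M.T) / 2
N = rng.standard_normal((4, 4))
B = N @ N.T + 4 * np.eye(4)


def rayleigh(A, x):
    """R(A, x) = x^T A x / x^T x for a real vector x."""
    return (x @ A @ x) / (x @ x)


def generalized_rayleigh(A, B, x):
    """R(A, B, x) = x^T A x / x^T B x for a real vector x."""
    return (x @ A @ x) / (x @ B @ x)


# Eigenvalues of A in ascending order bound the Rayleigh quotient.
eig_A = np.linalg.eigvalsh(A)

# Build B^{-1/2} from the eigendecomposition of B, then B^{-1/2} A B^{-1/2}.
b_vals, b_vecs = np.linalg.eigh(B)
B_inv_sqrt = b_vecs @ np.diag(b_vals ** -0.5) @ b_vecs.T
eig_sym, vecs_sym = np.linalg.eigh(B_inv_sqrt @ A @ B_inv_sqrt)

# B^{-1} A is similar to B^{-1/2} A B^{-1/2}, so the eigenvalues coincide.
eig_BinvA = np.sort(np.linalg.eigvals(np.linalg.solve(B, A)).real)

# Evaluate both quotients on random non-zero vectors.
xs = rng.standard_normal((1000, 4))
r_vals = np.array([rayleigh(A, x) for x in xs])
g_vals = np.array([generalized_rayleigh(A, B, x) for x in xs])

# The maximum is attained at x = B^{-1/2} x', where x' is the top eigenvector.
x_best = B_inv_sqrt @ vecs_sym[:, -1]
g_best = generalized_rayleigh(A, B, x_best)
```

Every sampled quotient stays inside the eigenvalue interval, and `g_best` reaches the upper end of that interval, which is the property LDA relies on.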

LDA Algorithm flow

Input: dataset $D=\{(x_1,y_1),(x_2,y_2),...,(x_m,y_m)\}$, where each sample $x_i$ is an $n$-dimensional vector and $y_i\in\{C_1,C_2,...,C_k\}$; the target dimension is $d$.

Output: the dimension-reduced sample set $D'$.

- Compute the within-class scatter matrix $S_w$.

- Compute the between-class scatter matrix $S_b$.

- Compute the matrix $S_w^{-1}S_b$.

- Compute the $d$ largest eigenvalues of $S_w^{-1}S_b$ and the corresponding $d$ eigenvectors $(w_1,w_2,...,w_d)$ to obtain the projection matrix $W$.

- Convert each sample feature $x_i$ in the sample set into a new sample $z_i=W^Tx_i$.

- Obtain the output sample set $D'=\{(z_1,y_1),(z_2,y_2),...,(z_m,y_m)\}$.
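
The steps above can be sketched in NumPy as follows. This is an illustrative sketch rather than a reference implementation. The function name `lda_fit` and the toy data are hypothetical, and the multi-class scatter matrices use the standard definitions $S_w=\sum_k\sum_{x\in X_k}(x-\mu_k)(x-\mu_k)^T$ and $S_b=\sum_k N_k(\mu_k-\mu)(\mu_k-\mu)^T$, which the text above does not spell out.

```python
import numpy as np


def lda_fit(X, y, d):
    """Return an (n, d) projection matrix W from samples X (m, n) and labels y (m,)."""
    n = X.shape[1]
    mu = X.mean(axis=0)
    Sw = np.zeros((n, n))
    Sb = np.zeros((n, n))
    for c in np.unique(y):
        Xc = X[y == c]
        mu_c = Xc.mean(axis=0)
        # Within-class scatter: spread of each class around its own mean.
        Sw += (Xc - mu_c).T @ (Xc - mu_c)
        # Between-class scatter: spread of class means around the global mean.
        diff = (mu_c - mu)[:, None]
        Sb += len(Xc) * (diff @ diff.T)
    # Eigen-decomposition of Sw^{-1} Sb (solve avoids forming the inverse explicitly).
    evals, evecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
    order = np.argsort(evals.real)[::-1][:d]
    return evecs[:, order].real


# Toy data (assumption): three Gaussian classes in 3-D with well separated means.
rng = np.random.default_rng(0)
means = np.array([[0.0, 0.0, 0.0], [4.0, 0.0, 0.0], [0.0, 4.0, 0.0]])
X = np.vstack([rng.standard_normal((50, 3)) + m for m in means])
y = np.repeat(np.arange(3), 50)

W = lda_fit(X, y, d=2)
Z = X @ W  # row i is z_i = W^T x_i

# Projected class centers, used to inspect the separation after projection.
centers = np.array([Z[y == c].mean(axis=0) for c in range(3)])
```

With $k$ classes, $S_b$ has rank at most $k-1$, so at most $k-1$ projection directions carry discriminative information; here $d=2$ matches the three toy classes.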

Besides dimensionality reduction, LDA can also be used for classification. A common approach assumes that the samples of each class follow a Gaussian distribution. After the LDA projection, maximum likelihood estimation gives the mean and variance of the projected data of each class, and hence the probability density function of each class's Gaussian distribution. When a new sample arrives, it is projected, the projected feature is substituted into the Gaussian density of each class to compute the probability of belonging to that class, and the class with the largest probability is the prediction.

Two category LDA principle

- Suppose the dataset is $D=\{(x_1,y_1),(x_2,y_2),...,(x_m,y_m)\}$, where each sample $x_i$ is an $n$-dimensional vector and $y_i\in\{0,1\}$. Define $N_j\ (j=0,1)$ as the number of samples in class $j$, $X_j\ (j=0,1)$ as the set of samples in class $j$, $\mu_j\ (j=0,1)$ as the mean vector of class $j$, and $\Sigma_j\ (j=0,1)$ as the covariance matrix of class $j$ (strictly speaking, the covariance matrix without the normalizing denominator).

- The expression for $\mu_j$ is: $\mu_j=\frac{1}{N_j}\sum_{x\in X_j}x,\ (j=0,1)$.

- The expression for $\Sigma_j$ is: $\Sigma_j=\sum_{x\in X_j}(x-\mu_j)(x-\mu_j)^T,\ (j=0,1)$.

- Because there are two classes, it is enough to project the data onto a line. Let the direction of the line be the vector $w$. The projection of any sample $x_i$ onto $w$ is $w^Tx_i$, and the projections of the two class centers $\mu_0,\mu_1$ are $w^T\mu_0$ and $w^T\mu_1$. LDA wants the class centers of different classes to be as far apart as possible, which means maximizing $\|w^T\mu_0-w^T\mu_1\|_2^2$. It also wants the projected points of the same class to be as close as possible, which means making the projected within-class covariances $w^T\Sigma_0w$ and $w^T\Sigma_1w$ as small as possible. In summary, the optimization goal is: $\underset{w}{\arg\max}\;J(w)=\frac{\|w^T\mu_0-w^T\mu_1\|_2^2}{w^T\Sigma_0w+w^T\Sigma_1w}=\frac{w^T(\mu_0-\mu_1)(\mu_0-\mu_1)^Tw}{w^T(\Sigma_0+\Sigma_1)w}$.

- Define the within-class scatter matrix $S_w=\Sigma_0+\Sigma_1=\sum_{x\in X_0}(x-\mu_0)(x-\mu_0)^T+\sum_{x\in X_1}(x-\mu_1)(x-\mu_1)^T$.

- Define the between-class scatter matrix $S_b=(\mu_0-\mu_1)(\mu_0-\mu_1)^T$.

- The optimization goal is rewritten as: $\underset{w}{\arg\max}\;J(w)=\frac{w^TS_bw}{w^TS_ww}$, which is a generalized Rayleigh quotient.
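
Because $S_b$ has rank one in the two-class case, the maximizer of $J(w)$ has the well-known closed form $w\propto S_w^{-1}(\mu_0-\mu_1)$. The sketch below checks this on toy data; the data and variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)

# Toy two-class data (assumption): anisotropic Gaussian clouds in 2-D.
scale = np.array([[2.0, 0.0], [0.0, 0.5]])
X0 = rng.standard_normal((100, 2)) @ scale
X1 = rng.standard_normal((100, 2)) @ scale + np.array([3.0, 2.0])

mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)

# Scatter matrices exactly as defined above (no normalizing denominator).
Sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
Sb = np.outer(mu0 - mu1, mu0 - mu1)


def J(w):
    """Two-class LDA objective w^T S_b w / w^T S_w w."""
    return (w @ Sb @ w) / (w @ Sw @ w)


# Closed-form direction for the two-class case.
w_star = np.linalg.solve(Sw, mu0 - mu1)

# The optimum equals the largest eigenvalue of S_w^{-1} S_b.
top_eig = np.max(np.linalg.eigvals(np.linalg.solve(Sw, Sb)).real)

# No random direction should score higher than w_star.
random_scores = np.array([J(w) for w in rng.standard_normal((500, 2))])
```

Only the direction of `w_star` matters, since $J(w)$ is invariant to rescaling $w$.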

Metric learning maps faces to some feature representation such that faces of the same identity are close to each other, while faces of different identities stay apart.

- In mathematics, a metric (or distance function) is a function that defines the distance between elements of a set. A set equipped with a metric is called a metric space. Metric learning is also called similarity learning.

- Distance metric learning aims to measure the similarity between samples, which is one of the core problems of pattern recognition. The performance of many machine learning methods, including classification methods such as k-nearest neighbors, support vector machines, and radial basis function networks, clustering methods such as K-means, and some graph-based methods, is largely determined by the choice of similarity measure between samples.

- If the goal is to recognize faces, a distance function is needed that emphasizes the relevant features (such as hair color and face shape); if the goal is to recognize pose, a distance function is needed that captures pose similarity.

- To handle these different notions of similarity, one can select suitable features and build the distance function by hand for each specific task. However, this requires a lot of manual effort and may not be robust to changes in the data.

- Metric learning is an attractive alternative: the distance function is learned for each specific task.

- The usual goal of metric learning is to make the distance between samples of the same class as small as possible and the distance between samples of different classes as large as possible.

These models are largely limited by their linear nature or shallow structures, while inter-personal and intra-personal variations are complex, highly nonlinear, and observed in a high-dimensional image space. (This motivates combining face recognition with deep learning.)

The features are learned with two supervisory signals at the same time (the face identification signal and the face verification signal).

Identification classifies an input image into one of a large number of identity classes, while verification classifies a pair of images as belonging to the same identity or not (binary classification).

To characterize faces from different aspects, complementary DeepID2 features are extracted from various face regions and resolutions, and are concatenated to form the final feature representation after PCA dimension reduction.

The idea of jointly solving the classification and verification tasks has been applied to general object recognition, where the focus was on improving the classification accuracy on fixed object classes rather than on the hidden feature representations.

Identification-verification guided deep feature learning

The convolution and pooling operations in deep ConvNets are specially designed to extract visual features hierarchically, from local low-level features to global high-level ones.

The ConvNet contains four convolutional layers, the first three of which are followed by max-pooling.

To learn a diverse set of high-level features, weight sharing over the entire feature map is not required in the higher convolutional layers. Specifically, in the third convolutional layer of the deep ConvNet, neuron weights are locally shared within every 2×2 local region. In the fourth convolutional layer, more appropriately called a locally connected layer, weights are not shared between neurons at all.

The ConvNet extracts a 160-dimensional DeepID2 vector at the last layer of its feature extraction cascade. The DeepID2 layer to be learned is fully connected to both the third and the fourth convolutional layers. Because the fourth convolutional layer extracts more global features than the third, the DeepID2 layer takes multi-scale features as input, forming a so-called multi-scale ConvNet.

Rectified linear units (ReLU) are used for the neurons in the convolutional layers and the DeepID2 layer. ReLU has better fitting ability than sigmoid units on large training datasets.

The DeepID2 features are learned under two supervisory signals.

The first is the face identification signal, which classifies each face image into one of $n$ (for example, $n=8192$) different identities.

Identification is achieved by following the DeepID2 layer with an $n$-way softmax layer, which outputs a probability distribution over the $n$ classes. The network is trained to minimize the cross-entropy loss, which is called the identification loss.

$$Ident(f,t,\theta_{id})=-\sum^n_{i=1}p_i\log\hat{p}_i=-\log\hat{p}_t$$

where $f$ is the DeepID2 vector, $t$ is the target class, and $\theta_{id}$ denotes the softmax layer parameters. $p_i$ is the target probability distribution, with $p_i=0$ for all $i$ except $p_t=1$ for the target class $t$. $\hat{p}_i$ is the predicted probability distribution.
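
A small sketch of the identification loss follows. It assumes the softmax layer is a linear map with parameters $\theta_{id}=\{W,b\}$, which the text does not state explicitly; the names `softmax` and `ident_loss` and the tiny class count are hypothetical.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(z - z.max())
    return e / e.sum()


def ident_loss(f, t, W, b):
    """Cross-entropy of an n-way softmax for DeepID2 vector f and target class t."""
    p_hat = softmax(W @ f + b)
    return -np.log(p_hat[t])


rng = np.random.default_rng(0)
n_classes, dim = 5, 160  # n = 5 only keeps the example small; the text uses n = 8192
f = rng.standard_normal(dim)
W = 0.01 * rng.standard_normal((n_classes, dim))  # assumed softmax weights
b = np.zeros(n_classes)                           # assumed softmax biases
t = 2

loss = ident_loss(f, t, W, b)

# With a one-hot target p, the full sum collapses to the single term -log p_hat[t].
p = np.eye(n_classes)[t]
full_sum = -np.sum(p * np.log(softmax(W @ f + b)))
```

The equality of `loss` and `full_sum` is the second equals sign in the formula above.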

To classify all the classes correctly at the same time, the DeepID2 layer must form discriminative identity-related features.

The second is the face verification signal, which encourages DeepID2 features extracted from faces of the same identity to be similar.

The verification signal directly regularizes DeepID2 and can effectively reduce intra-personal variations.

Commonly used constraints include the L1/L2 norm and cosine similarity. The paper adopts the following loss function based on the L2 norm, which was originally proposed by Hadsell et al. for dimensionality reduction.

$$Verif(f_i,f_j,y_{ij},\theta_{ve})=\begin{cases}\frac{1}{2}\|f_i-f_j\|_2^2, & y_{ij}=1\\ \frac{1}{2}\max(0,\,m-\|f_i-f_j\|_2)^2, & y_{ij}=-1\end{cases}$$

where $f_i$ and $f_j$ are the DeepID2 vectors extracted from the two face images being compared. $y_{ij}=1$ means that $f_i$ and $f_j$ come from the same identity; in this case the L2 distance between the two DeepID2 vectors is minimized. $y_{ij}=-1$ means different identities, and the distance is required to be larger than the margin $m$. $\theta_{ve}=\{m\}$ is the parameter to be learned in the verification loss function.
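
The piecewise loss above can be written directly in NumPy. This is a minimal sketch; the function name `verif_l2` and the example vectors are illustrative assumptions.

```python
import numpy as np


def verif_l2(fi, fj, yij, m):
    """L2-norm verification loss for one pair of DeepID2 vectors."""
    dist = np.linalg.norm(fi - fj)
    if yij == 1:
        # Same identity: pull the two vectors together.
        return 0.5 * dist ** 2
    # Different identities: penalize only pairs closer than the margin m.
    return 0.5 * max(0.0, m - dist) ** 2


fi = np.array([3.0, 4.0])
fj = np.array([0.0, 0.0])  # Euclidean distance to fi is 5

loss_same = verif_l2(fi, fj, 1, m=7.0)         # 0.5 * 5^2
loss_diff_inside = verif_l2(fi, fj, -1, m=7.0)  # 0.5 * (7 - 5)^2
loss_diff_outside = verif_l2(fi, fj, -1, m=2.0)  # pair already beyond the margin
```

Pairs of different identities that are already farther apart than $m$ contribute no loss and therefore no gradient.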

The cosine-similarity version of the verification loss is $Verif(f_i,f_j,y_{ij},\theta_{ve})=\frac{1}{2}(y_{ij}-\sigma(wd+b))^2$.

- where $d=\frac{f_i\cdot f_j}{\|f_i\|_2\|f_j\|_2}$ is the cosine similarity between the DeepID2 vectors, $\theta_{ve}=\{w,b\}$ are learnable scaling and shifting parameters, $\sigma$ is the sigmoid function, and $y_{ij}$ is the binary target indicating whether the two compared face images belong to the same identity.
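
A sketch of the cosine-similarity loss is given below. It assumes the binary target is encoded as 1 for the same identity and 0 for different identities, since the sigmoid output lies in (0, 1); the text does not state the encoding. The function name `verif_cos` is hypothetical.

```python
import numpy as np


def verif_cos(fi, fj, yij, w, b):
    """Cosine-similarity verification loss; yij is assumed to be 1 (same) or 0 (different)."""
    d = (fi @ fj) / (np.linalg.norm(fi) * np.linalg.norm(fj))
    sigma = 1.0 / (1.0 + np.exp(-(w * d + b)))
    return 0.5 * (yij - sigma) ** 2


fi = np.array([1.0, 0.0])
fj = np.array([0.0, 2.0])  # orthogonal to fi, so d = 0

# With d = 0 and b = 0 the sigmoid outputs 0.5, so the loss is 0.5 * 0.5^2.
loss_orthogonal = verif_cos(fi, fj, 0, w=1.0, b=0.0)

# Rescaling a feature vector leaves the cosine similarity, and so the loss, unchanged.
loss_scaled = verif_cos(10.0 * fi, fj, 0, w=1.0, b=0.0)
```

The scale invariance shown by `loss_scaled` illustrates that this loss constrains only the angle between features, not their magnitude.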

The goal is to learn the parameters $\theta_c$ of the feature extraction function $Conv(\cdot)$, while $\theta_{id}$ and $\theta_{ve}$ are only parameters introduced to propagate the identification and verification signals during training. In the testing stage, only $\theta_c$ is used for feature extraction. The parameters are updated by stochastic gradient descent.

The identification and verification gradients are weighted by a hyperparameter $\lambda$.

The DeepID2 learning algorithm.

- Input: training set $\chi=\{(x_i,l_i)\}$, initialized parameters $\theta_c$, $\theta_{id}$, and $\theta_{ve}$, hyperparameter $\lambda$, learning rate $\eta(t)$, $t\leftarrow 0$.

- While not converged (the stopping threshold has not been reached), repeat the following steps:

- $t\leftarrow t+1$; sample two training samples $(x_i,l_i)$ and $(x_j,l_j)$ from $\chi$.

- Compute the extracted features: $f_i=Conv(x_i,\theta_c)$ and $f_j=Conv(x_j,\theta_c)$.

- $\nabla\theta_{id}=\frac{\partial Ident(f_i,l_i,\theta_{id})}{\partial\theta_{id}}+\frac{\partial Ident(f_j,l_j,\theta_{id})}{\partial\theta_{id}}$.

- $\nabla\theta_{ve}=\lambda\cdot\frac{\partial Verif(f_i,f_j,y_{ij},\theta_{ve})}{\partial\theta_{ve}}$, where $y_{ij}=1$ if $l_i=l_j$, and $y_{ij}=-1$ otherwise.

- $\nabla f_i=\frac{\partial Ident(f_i,l_i,\theta_{id})}{\partial f_i}+\lambda\cdot\frac{\partial Verif(f_i,f_j,y_{ij},\theta_{ve})}{\partial f_i}$.

- $\nabla f_j=\frac{\partial Ident(f_j,l_j,\theta_{id})}{\partial f_j}+\lambda\cdot\frac{\partial Verif(f_i,f_j,y_{ij},\theta_{ve})}{\partial f_j}$.

- $\nabla\theta_c=\nabla f_i\cdot\frac{\partial Conv(x_i,\theta_c)}{\partial\theta_c}+\nabla f_j\cdot\frac{\partial Conv(x_j,\theta_c)}{\partial\theta_c}$.

- Update $\theta_{id}=\theta_{id}-\eta(t)\cdot\nabla\theta_{id}$, $\theta_{ve}=\theta_{ve}-\eta(t)\cdot\nabla\theta_{ve}$, and $\theta_c=\theta_c-\eta(t)\cdot\nabla\theta_c$.

- Output $\theta_c$.
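
One iteration of the algorithm can be sketched with hand-written gradients. This is a toy under strong assumptions, not the paper's network: `Conv` is replaced by a plain linear map $f=\theta_c x$, the softmax layer has weights `W` and no bias, and the margin $m$ is held fixed instead of being updated as part of $\theta_{ve}$. All function names are hypothetical.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def ident_grads(f, l, W):
    """Gradients of -log softmax(W f)[l] with respect to W and f."""
    p = softmax(W @ f)
    p[l] -= 1.0  # p_hat minus the one-hot target
    return np.outer(p, f), W.T @ p


def verif_grads(fi, fj, yij, m):
    """Gradients of the L2 verification loss with respect to f_i and f_j."""
    diff = fi - fj
    dist = np.linalg.norm(diff)
    if yij == 1:
        g = diff
    elif 0.0 < dist < m:
        g = -(m - dist) * diff / dist
    else:
        g = np.zeros_like(diff)
    return g, -g


def total_loss(theta_c, W, xi, li, xj, lj, lam, m):
    fi, fj = theta_c @ xi, theta_c @ xj
    ident = -np.log(softmax(W @ fi)[li]) - np.log(softmax(W @ fj)[lj])
    dist = np.linalg.norm(fi - fj)
    verif = 0.5 * dist ** 2 if li == lj else 0.5 * max(0.0, m - dist) ** 2
    return ident + lam * verif


def train_step(theta_c, W, xi, li, xj, lj, lam, eta, m):
    fi, fj = theta_c @ xi, theta_c @ xj  # linear stand-in for Conv(x, theta_c)
    yij = 1 if li == lj else -1
    gW_i, gfi_id = ident_grads(fi, li, W)
    gW_j, gfj_id = ident_grads(fj, lj, W)
    gfi_ve, gfj_ve = verif_grads(fi, fj, yij, m)
    grad_fi = gfi_id + lam * gfi_ve  # identification plus weighted verification
    grad_fj = gfj_id + lam * gfj_ve
    # Chain rule through the linear map: d(theta_c x)/d(theta_c) gives an outer product.
    grad_theta_c = np.outer(grad_fi, xi) + np.outer(grad_fj, xj)
    return theta_c - eta * grad_theta_c, W - eta * (gW_i + gW_j)


rng = np.random.default_rng(0)
theta_c = 0.1 * rng.standard_normal((8, 16))
W = 0.1 * rng.standard_normal((4, 8))
xi, xj = rng.standard_normal(16), rng.standard_normal(16)
li, lj = 1, 1  # same identity, so y_ij = 1
lam, eta, m = 0.05, 0.01, 1.0

loss_before = total_loss(theta_c, W, xi, li, xj, lj, lam, m)
theta_c_new, W_new = train_step(theta_c, W, xi, li, xj, lj, lam, eta, m)
loss_after = total_loss(theta_c_new, W_new, xi, li, xj, lj, lam, m)
```

The key point mirrored from the algorithm is that the gradient reaching the feature extractor is the sum of the identification gradient and $\lambda$ times the verification gradient.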

Face Verification

First, 21 facial landmarks are detected with the SDM algorithm.

SDM (Supervised Descent Method) is mainly applied to face alignment. It is a method for finding a function approximation and can be used to solve least-squares problems.

Newton's method is a common choice for least-squares problems, but it runs into several difficulties when applied to computer vision problems:

- The Hessian matrix is positive definite near a local optimum but may not be positive definite elsewhere, which means that the computed update direction is not necessarily a descent direction;

- Newton's method requires the objective function to be twice differentiable, which may not hold in practice;

- The Hessian matrix can be very large. If a face has 66 landmarks and each landmark has a 128-dimensional feature, the stacked vector has 66×128 = 8448 dimensions, so the Hessian is 8448×8448. Inverting a matrix of this size is very expensive ($O(p^3)$ operations and $O(p^2)$ storage).

- Avoiding the Hessian computation, the non-positive-definite Hessian problem, and the large storage and computation cost is therefore the problem the SDM paper sets out to solve. (This motivates the SDM algorithm.)
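
The size figures quoted above can be reproduced with a few lines of arithmetic. The 8 bytes per entry (float64 storage) is an assumption made only for illustration.

```python
n_landmarks = 66
dim_per_landmark = 128

# Dimension p of the stacked feature vector.
p = n_landmarks * dim_per_landmark

# A dense p x p Hessian has p^2 entries.
hessian_entries = p * p

# Storage for a dense Hessian, assuming 8-byte float64 entries.
hessian_mib = hessian_entries * 8 / 2 ** 20

# A dense inverse costs on the order of p^3 operations.
inverse_ops_order = p ** 3
```

Here `p` is 8448, the Hessian holds 71,368,704 entries, and dense float64 storage takes 544.5 MiB, before any cost of inverting it.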

$Ix=R$, where $I$ is the feature matrix, $x$ is the mapping matrix, and $R$ is the offset. The purpose of SDM training for face alignment is to obtain the mapping matrix $x$. The steps are as follows:

- Normalize the samples so that they share a uniform scale;

- Compute the mean face;

- Place the mean face on each sample as the initial estimate, aligning the center of the mean face with the center of the true face shape;

- Compute features at each landmark of the mean face (SIFT, SURF, or HOG); take care not to use features that depend on raw gray values;

- Concatenate the features of all landmarks to form the sample feature; the features of all samples form the matrix $I$;

- Compute the offsets between the estimated face and the true face to form the matrix $R$;

- Solve the linear system $Ix=R$.
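
The last step, solving $Ix=R$, is typically an overdetermined system and can be handled in the least-squares sense. The sketch below uses `numpy.linalg.lstsq` on synthetic data; the matrix sizes, the noise level, and the name `I_mat` (used for the feature matrix $I$) are illustrative assumptions, and real SDM training would use landmark features such as SIFT or HOG instead of random numbers.

```python
import numpy as np

rng = np.random.default_rng(0)

n_samples, feat_dim, n_landmarks = 200, 32, 5

# Synthetic stand-ins: one feature row per training sample.
I_mat = rng.standard_normal((n_samples, feat_dim))

# A hidden mapping generates the offsets (x and y shift per landmark) plus small noise.
x_true = rng.standard_normal((feat_dim, 2 * n_landmarks))
R = I_mat @ x_true + 0.01 * rng.standard_normal((n_samples, 2 * n_landmarks))

# Least-squares solution of I x = R.
x_hat, residuals, rank, singular_values = np.linalg.lstsq(I_mat, R, rcond=None)

# Predicted landmark offsets for the first sample.
predicted_offset = I_mat[0] @ x_hat
```

Once `x_hat` is learned, alignment at test time needs only a feature extraction and a matrix product, with no Hessian involved.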

Then, the face images are globally aligned by a similarity transformation according to the detected landmarks.

400 face patches are cropped according to the globally aligned faces and the positions of the facial landmarks. The patches vary in position, scale, color channel, and horizontal flipping.

A total of 400 DeepID2 vectors are extracted by 200 deep ConvNets. Each ConvNet is trained to extract two 160-dimensional DeepID2 vectors, one from a particular face patch and one from its horizontally flipped counterpart, for each face.

To reduce the redundancy among the large number of DeepID2 features, a forward-backward greedy algorithm is used to select a small number of effective and complementary DeepID2 vectors (25 in the paper's experiments). This saves most of the feature extraction time at test time.

The 25 selected patches yield 25 160-dimensional DeepID2 vectors, which are concatenated into a 4000-dimensional DeepID2 vector. The 4000-dimensional vector is further compressed by PCA for face verification.
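
The concatenation and PCA compression can be sketched as follows. The random arrays stand in for real DeepID2 vectors, and the PCA output dimension `k = 64` is an arbitrary illustrative choice, because the text above does not give the compressed dimension. `pca_fit` is a hypothetical helper built on the SVD.

```python
import numpy as np

rng = np.random.default_rng(0)

n_faces, n_patches, dim = 300, 25, 160

# Stand-in for the 25 selected 160-dimensional DeepID2 vectors of each face.
feats = rng.standard_normal((n_faces, n_patches, dim))

# Concatenate the per-patch vectors: 25 * 160 = 4000 dimensions per face.
concat = feats.reshape(n_faces, n_patches * dim)


def pca_fit(X, k):
    """Return the data mean and the top-k principal directions as columns."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k].T


k = 64  # illustrative value, not taken from the text
mean, components = pca_fit(concat, k)

# Compressed representation used downstream for verification.
compressed = (concat - mean) @ components
```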

A Joint Bayesian model is learned for face verification based on the extracted DeepID2 features.

- Joint Bayesian has been very successful at modeling the joint probability of two faces being the same or different persons. It models the feature representation $f$ of a face as the sum of inter-personal and intra-personal variations, $f=\mu+\varepsilon$, where both $\mu$ and $\varepsilon$ are modeled as Gaussian distributions estimated from the training data.

- Face verification is performed with a log-likelihood ratio test, $\log\frac{P(f_1,f_2|H_{inter})}{P(f_1,f_2|H_{intra})}$, where the numerator and denominator are the joint probabilities of the two faces under the inter-personal and intra-personal variation hypotheses, respectively.
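
The ratio test can be illustrated with a small sketch. It assumes zero-mean features with known covariances $S_\mu$ and $S_\varepsilon$ for $\mu$ and $\varepsilon$. Under $H_{intra}$ the two faces share the same $\mu$, so their cross-covariance is $S_\mu$; under $H_{inter}$ they are independent. The covariance values, the example vectors, and the function names are illustrative assumptions, and estimating $S_\mu$ and $S_\varepsilon$ from training data is not shown.

```python
import numpy as np


def gaussian_logpdf(x, cov):
    """Log density of a zero-mean multivariate Gaussian with covariance cov."""
    k = x.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    quad = x @ np.linalg.solve(cov, x)
    return -0.5 * (k * np.log(2 * np.pi) + logdet + quad)


def log_ratio_inter_over_intra(f1, f2, S_mu, S_eps):
    """log P(f1, f2 | H_inter) - log P(f1, f2 | H_intra), matching the ratio above."""
    d = f1.shape[0]
    S = S_mu + S_eps
    zero = np.zeros((d, d))
    cov_intra = np.block([[S, S_mu], [S_mu, S]])  # shared identity component
    cov_inter = np.block([[S, zero], [zero, S]])  # independent identities
    pair = np.concatenate([f1, f2])
    return gaussian_logpdf(pair, cov_inter) - gaussian_logpdf(pair, cov_intra)


# Assumed covariances: identity variation is much larger than within-identity noise.
S_mu = 4.0 * np.eye(3)
S_eps = 0.25 * np.eye(3)

f_a = np.array([2.0, -2.0, 2.0])
f_close = np.array([2.1, -1.9, 2.2])  # plausibly the same person as f_a
f_far = np.array([-2.0, 2.0, -2.0])   # plausibly a different person

score_same = log_ratio_inter_over_intra(f_a, f_close, S_mu, S_eps)
score_diff = log_ratio_inter_over_intra(f_a, f_far, S_mu, S_eps)
```

With the ratio written as inter over intra, a low score favors "same person" and a high score favors "different persons"; the decision compares the score with a threshold.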

Experiments

- The CelebFaces+ dataset is used for training. It contains 202,599 face images of 10,177 identities (celebrities) collected from the Internet.

- Face images of 8192 identities randomly sampled from CelebFaces+ (referred to as CelebFaces+A) are used to learn the DeepID2 features, while the remaining face images of 1985 identities (referred to as CelebFaces+B) are used for the subsequent feature selection and for learning the face verification model (Joint Bayesian).

- When learning DeepID2 on CelebFaces+A, CelebFaces+B is used as a validation set to determine the learning rate, the training time, and the hyperparameter $\lambda$.

- CelebFaces+B is split into a training set of 1485 identities and a validation set of 500 identities for feature selection.

- The Joint Bayesian model is trained on the entire CelebFaces+B data and tested on LFW using the selected DeepID2 features.

Balancing the identification and verification signals

- By varying $\lambda$ from 0 to $+\infty$, the interaction between the identification and verification signals in feature learning is studied. At $\lambda=0$, the verification signal vanishes and only the identification signal takes effect. As $\lambda$ increases, the verification signal gradually dominates the training process. At the other extreme, $\lambda\to+\infty$, only the verification signal remains.

- The L2-norm verification loss, $Verif(f_i,f_j,y_{ij},\theta_{ve})=\begin{cases}\frac{1}{2}\|f_i-f_j\|_2^2, & y_{ij}=1\\ \frac{1}{2}\max(0,\,m-\|f_i-f_j\|_2)^2, & y_{ij}=-1\end{cases}$, is used for training.

- The face verification accuracy is examined as the weighting parameter $\lambda$ varies. The test-set accuracy of the learned DeepID2 features is compared using the L2 norm and the Joint Bayesian model, respectively. Neither the identification signal nor the verification signal alone is optimal for learning features; effective features come from an appropriate combination of the two.

- The inter-personal and intra-personal variances are the eigenvalues of the corresponding scatter matrices, and the corresponding eigenvectors represent different variation patterns.

- The first two PCA dimensions of the features learned with $\lambda=0$, $0.05$, and $+\infty$ are visualized. The features come from the six identities with the largest numbers of face images in LFW and are marked with different colors.

- When $\lambda=0$ (left), the cluster centers are actually different, but the clusters are mixed together because of the large intra-personal variations.

- When $\lambda$ increases to 0.05 (middle), the intra-personal variations are significantly reduced and the clusters become distinguishable.

- When $\lambda$ increases further toward infinity (right), the intra-personal variations shrink further, but the cluster centers also begin to collapse and some clusters overlap noticeably (the red, blue, and cyan clusters in the right figure), making them hard to distinguish again.

Investigating the verification signals

- A verification signal of moderate strength mainly serves to reduce intra-personal variations. To verify this further, the L2-norm verification signal applied to all sample pairs is compared with variants that constrain only positive or only negative sample pairs (denoted L2+ and L2-).

- L2+ only decreases the distances between DeepID2 features of the same identity, while L2- only increases the distances between DeepID2 features of different identities (if they are smaller than the margin).

- The face verification accuracy of the DeepID2 features on the test set is measured with the L2 norm and with Joint Bayesian, respectively.

- Comparisons are also made with the L1-norm and cosine verification signals, and with no verification signal (none). The identification signal is the same in all comparisons (classification over 8192 identities).

- DeepID2 features learned with the L2+ verification signal are only slightly worse than those learned with L2. In contrast, the L2- verification signal does not help feature learning, and its result is almost the same as using no verification signal. This shows that the main effect of the verification signal is to reduce intra-personal variations.

- Face verification accuracy generally improves whenever a verification signal is added on top of the identification signal.

- The L2 norm is better than the other verification metrics compared. This is probably because all the other constraints are weaker than L2 and less effective at reducing intra-personal variations. For example, cosine similarity constrains only the angle, not the magnitude.
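
The L2+ and L2- variants differ from the full L2 loss only in which pairs they constrain. The following sketch makes that explicit; the function name `verif_variant` and the `mode` argument are hypothetical conveniences.

```python
import numpy as np


def verif_variant(fi, fj, yij, m, mode="L2"):
    """L2-norm verification loss, optionally restricted to positive or negative pairs."""
    dist = np.linalg.norm(fi - fj)
    pos = 0.5 * dist ** 2 if yij == 1 else 0.0
    neg = 0.5 * max(0.0, m - dist) ** 2 if yij == -1 else 0.0
    if mode == "L2+":
        return pos  # only pulls same-identity pairs together
    if mode == "L2-":
        return neg  # only pushes different-identity pairs apart
    return pos + neg  # full L2 signal constrains both kinds of pairs


fi = np.array([1.0, 0.0])
fj = np.array([0.0, 0.0])  # distance 1

plus_on_positive = verif_variant(fi, fj, 1, m=3.0, mode="L2+")
plus_on_negative = verif_variant(fi, fj, -1, m=3.0, mode="L2+")
minus_on_positive = verif_variant(fi, fj, 1, m=3.0, mode="L2-")
minus_on_negative = verif_variant(fi, fj, -1, m=3.0, mode="L2-")
```

Under L2+ a negative pair contributes nothing, and under L2- a positive pair contributes nothing, which is what lets the experiment isolate the effect of each half of the signal.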

Final system and comparison with other methods

Before learning Joint Bayesian, the DeepID2 features are first projected to a low-dimensional feature space by PCA. After PCA, the Joint Bayesian model is trained on the entire CelebFaces+B data and tested on the 6000 given face pairs in LFW, where the log-likelihood ratio given by Joint Bayesian is compared with a threshold optimized on the training data for face verification.

The face verification accuracy is examined as DeepID2 features are extracted from an increasing number of face patches.

The rich pool of DeepID2 features extracted from the large number of patches is then exploited further.

The feature selection algorithm is repeated six more times, each time selecting DeepID2 features from the patches that were not selected in the previous feature selection steps.

A Joint Bayesian model is learned on each of the seven groups of selected features. The seven Joint Bayesian scores on each pair of compared faces are then fused by further learning a support vector machine.

In this way, a higher face verification accuracy of 99.15% is achieved. The accuracy and ROC on LFW are compared with previous state-of-the-art methods.

Conclusion

- The effects of the face identification and verification supervisory signals on the deep feature representation correspond to the two aspects of constructing ideal features for face recognition, namely increasing inter-personal variations and reducing intra-personal variations. The combination of the two supervisory signals produces significantly better features than either one alone.

边栏推荐

- SQL Server 删除数据库所有表和所有存储过程

- 柏瑞凱電子沖刺科創板:擬募資3.6億 汪斌華夫婦為大股東

- [pytorch]fixmatch code explanation (super detailed)

- Port mapping between two computers on different LANs (anydesk)

- Understanding and thinking about multi-core consistency

- Basic exercise of test questions Yanghui triangle (two-dimensional array and shallow copy)

- How to learn to understand Matplotlib instead of simple code reuse

- Leetcode daily question - 890 Find and replace mode

- SANs证书生成

- How to solve the problem of obtaining the time through new date() and writing out the difference of 8 hours between the database and the current time [valid through personal test]

猜你喜欢

Paper reading - joint beat and downbeat tracking with recurrent neural networks

![[reading some papers] introducing deep learning into the public horizon alexnet](/img/4b/6d9bafe48094ff6451efb211d83371.jpg)

[reading some papers] introducing deep learning into the public horizon alexnet

![Leetcode 450. 删除二叉搜索树中的节点 [二叉搜索树]](/img/39/d5c4d424a160635791c4645d6f2e10.png)

Leetcode 450. 删除二叉搜索树中的节点 [二叉搜索树]

Superficial understanding of conditional random fields

Yovo3 and yovo3 tiny structure diagram

【Unity】打包WebGL项目遇到的问题及解决记录

Stm32+ze-08 formaldehyde sensor tutorial

哈夫曼树及其应用

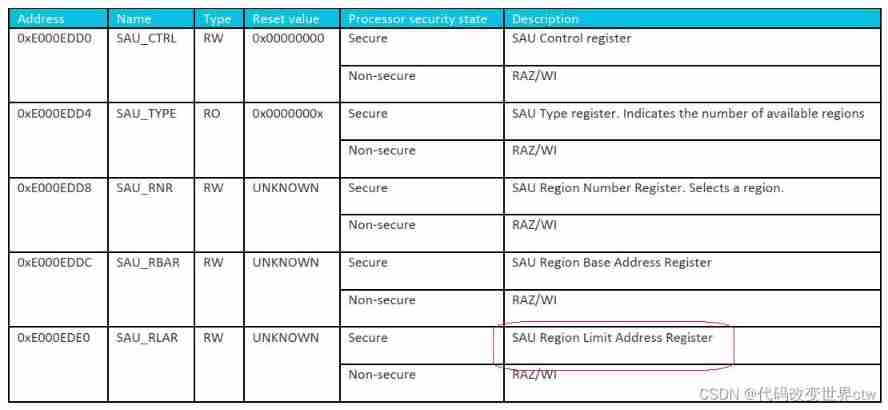

Armv8-m (Cortex-M) TrustZone summary and introduction

Understand CRF

随机推荐

[pytorch]fixmatch code explanation (super detailed)

Laptop touch pad operation

speech production model

Uniapp preview function

Resource arrangement

Laravel 权限导出

Share three stories about CMDB

Stm32 mpu6050 servo pan tilt support follow

Build MySQL environment under mac

Easydl related documents and codes

After idea uses c3p0 connection pool to connect to SQL database, database content cannot be displayed

[work notes] the problem of high leakage current in standby mode of dw7888 motor driver chip

SQL Server 删除数据库所有表和所有存储过程

SANs证书生成

Fast Color Segementation

Configuring virtual private network FRR for Huawei equipment

Basic exercises of test questions Fibonacci series

How to learn to understand Matplotlib instead of simple code reuse

Open source video recolor code

Thesis reading - autovc: zero shot voice style transfer with only autoencoder loss