[Point Cloud Processing Paper Crazy Reading, Frontier Edition 13] GAPNet: Graph Attention based Point Neural Network for Exploiting Local Feature of Point Cloud

2022-07-03 09:14:00 【LingbinBu】

GAPNet: Graph Attention based Point Neural Network for Exploiting Local Feature of Point Cloud

Abstract

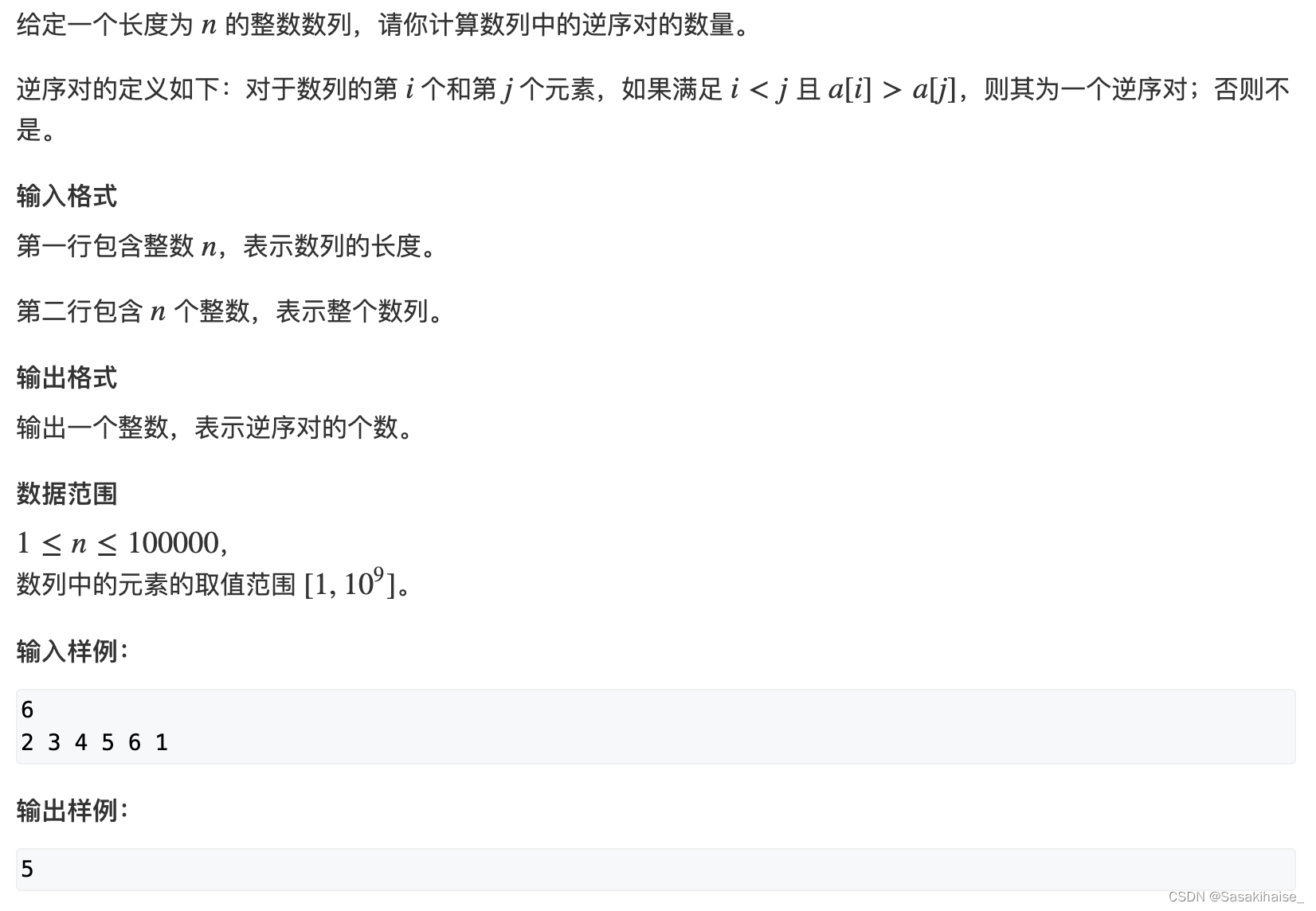

- Method: This paper proposes GAPNet, a new neural network for point clouds that learns local geometric representations by embedding a graph attention mechanism within stacked Multi-Layer-Perceptron (MLP) layers

- Introduces the GAPLayer, which learns attention features for each point by highlighting different attention weights on its neighborhood

- Uses a multi-head mechanism so that the GAPLayer can aggregate different features from independent heads

- Proposes an attention pooling layer over the neighborhood to obtain a local signature, which improves the robustness of the network

- Code: TensorFlow version

Method

Let $X=\left\{x_{i} \in \mathbb{R}^{F}, i=1,2, \ldots, N\right\}$ denote the input point cloud. In this paper $F=3$, representing the coordinates $(x, y, z)$.

GAPLayer

Local structure representation

Because point clouds in real applications contain a very large number of points, a directed graph $G=(V, E)$ is constructed with $k$-nearest neighbors, where $V=\{1,2, \ldots, N\}$ is the set of nodes, $E \subseteq V \times N_{i}$ is the set of edges, and $N_{i}$ is the set of neighbors of point $x_{i}$. The edge feature is defined as $y_{i j}=\left(x_{i}-x_{i j}\right)$, where $i \in V$, $j \in N_{i}$, and $x_{i j}$ denotes the neighboring point $x_{j}$ of $x_{i}$.
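The graph construction above can be sketched in a few lines of NumPy. This is only an illustration, not the authors' TensorFlow code: the function name `knn_edge_features` is hypothetical, the neighbor search is brute force, and excluding each point from its own neighborhood is an assumption of this sketch.

```python
import numpy as np

def knn_edge_features(points, k):
    """Return neighbor indices (N, k) and edge features y_ij = x_i - x_ij (N, k, F)."""
    # Pairwise squared Euclidean distances, shape (N, N).
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # Assumption of this sketch: a point is not its own neighbor.
    np.fill_diagonal(dist2, np.inf)
    # Indices of the k nearest neighbors of every point.
    neighbors = np.argsort(dist2, axis=1)[:, :k]
    # Edge features: each point minus each of its neighbors.
    edges = points[:, None, :] - points[neighbors]
    return neighbors, edges

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))          # N = 8 points, F = 3 coordinates
idx, Y = knn_edge_features(X, k=4)   # idx: (8, 4), Y: (8, 4, 3)
```

The brute-force distance matrix costs O(N²) memory, so for large point clouds a spatial index would be a more practical choice.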

Single-head GAPLayer

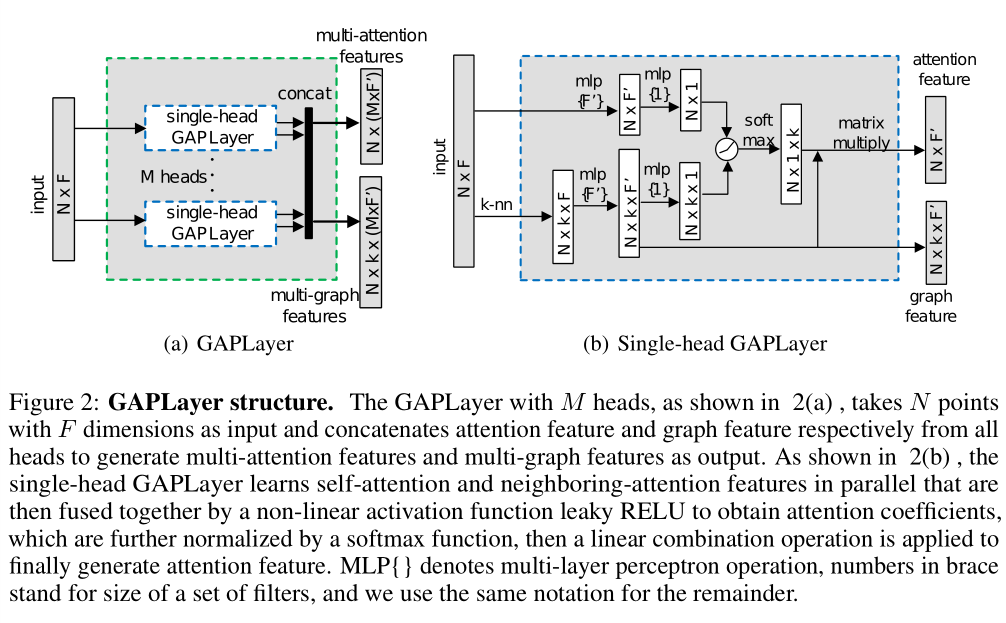

The structure of the single-head GAPLayer is shown in Figure 2(b).

To assign attention to each neighbor, the authors propose a self-attention mechanism and a neighboring-attention mechanism that together produce the attention coefficients from each point to its neighbors, as shown in Figure 1. Specifically, the self-attention mechanism learns self-coefficients by considering the self-geometric information of each point, while the neighboring-attention mechanism learns local-coefficients by considering its neighborhood.

As an initialization step, the vertices and edges of the point cloud are mapped to higher-dimensional features with output dimension $F'$:

$$
\begin{aligned} x_{i}^{\prime} &=h\left(x_{i}, \theta\right) \\ y_{i j}^{\prime} &=h\left(y_{i j}, \theta\right) \end{aligned}
$$

where $h()$ is a parameterized nonlinear function, chosen in the experiments to be a single-layer neural network, and $\theta$ is the set of learnable parameters of the filter.

The final attention coefficients are obtained by fusing the self-coefficients $h\left(x_{i}^{\prime}, \theta\right)$ and the local-coefficients $h\left(y_{i j}^{\prime}, \theta\right)$. Here $h\left(x_{i}^{\prime}, \theta\right)$ and $h\left(y_{i j}^{\prime}, \theta\right)$ are single-layer neural networks with 1-dimensional output, and LeakyReLU() is the activation function:

$$
c_{i j}=\operatorname{LeakyReLU}\left(h\left(x_{i}^{\prime}, \theta\right)+h\left(y_{i j}^{\prime}, \theta\right)\right)
$$

Softmax is used to normalize these coefficients:

$$
\alpha_{i j}=\frac{\exp \left(c_{i j}\right)}{\sum_{k \in N_{i}} \exp \left(c_{i k}\right)}
$$
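A minimal NumPy sketch of the coefficient fusion and the softmax normalization follows. Several things here are assumptions for illustration only: the two 1-dimensional-output networks are modeled as plain linear maps with hypothetical weight vectors `w_self` and `w_local` (no bias), the LeakyReLU slope of 0.2 is arbitrary, and the inputs are random stand-ins for the mapped features $x_i'$ and $y_{ij}'$.

```python
import numpy as np

def leaky_relu(z, slope=0.2):
    # The slope value is an assumption of this sketch.
    return np.where(z > 0, z, slope * z)

def attention_coefficients(x_feat, y_feat, w_self, w_local):
    """x_feat: (N, F'), y_feat: (N, k, F'); returns alpha with shape (N, k)."""
    self_coef = x_feat @ w_self        # one self-coefficient per point, (N,)
    local_coef = y_feat @ w_local      # one local-coefficient per edge, (N, k)
    c = leaky_relu(self_coef[:, None] + local_coef)
    # Subtracting the row maximum leaves the softmax unchanged but avoids overflow.
    c = c - c.max(axis=1, keepdims=True)
    e = np.exp(c)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
N, k, F_out = 6, 4, 16
x_feat = rng.normal(size=(N, F_out))       # stand-in for x_i'
y_feat = rng.normal(size=(N, k, F_out))    # stand-in for y_ij'
w_self = rng.normal(size=F_out)            # hypothetical weights
w_local = rng.normal(size=F_out)           # hypothetical weights
alpha = attention_coefficients(x_feat, y_feat, w_self, w_local)
```

Each row of `alpha` holds the normalized attention of one point over its $k$ neighbors, so every row sums to 1.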

The goal of the single-head GAPLayer is to compute the contextual attention feature of each point. To this end, the normalized coefficients are used to update the vertex feature $\hat{x}_{i} \in \mathbb{R}^{F^{\prime}}$:

$$
\hat{x}_{i}=f\left(\sum_{j \in N_{i}} \alpha_{i j} y_{i j}^{\prime}\right)
$$

where $f()$ is a nonlinear activation function; ReLU is used in the experiments.
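The weighted aggregation can be illustrated with a short NumPy sketch. The function name `aggregate` and the toy inputs are hypothetical; with uniform attention weights the result reduces to the ReLU of the mean neighbor feature, which makes the behavior easy to check by hand.

```python
import numpy as np

def aggregate(alpha, y_feat):
    """alpha: (N, k), y_feat: (N, k, F'); returns x_hat with shape (N, F')."""
    # Attention-weighted sum over the k neighbors of every point.
    weighted = np.einsum("nk,nkf->nf", alpha, y_feat)
    # ReLU as the nonlinear activation f.
    return np.maximum(weighted, 0.0)

# Toy input: 2 points, 3 neighbors each, 4 feature channels.
alpha = np.full((2, 3), 1.0 / 3.0)   # uniform attention, for illustration only
y_feat = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4) - 10.0
x_hat = aggregate(alpha, y_feat)
```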

Multi-head mechanism

To obtain sufficient structural information and stabilize the network, $M$ independent single-head GAPLayers are concatenated, producing multi-attention features with $M \times F^{\prime}$ channels:

$$
\hat{x}_{i}^{\prime}=\|_{m}^{M} \hat{x}_{i}^{(m)}
$$

As shown in Figure 2, the multi-head GAPLayer outputs multi-attention features and multi-graph features. $\hat{x}_{i}^{(m)}$ is the attention feature of the $m$-th head, $M$ is the number of heads, and $\|$ denotes concatenation across feature channels.
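As a rough illustration of the channel-wise concatenation, the sketch below joins the outputs of $M$ heads along the last axis. The helper `multi_head_concat` and the constant-valued head outputs are placeholders, not part of the original method description.

```python
import numpy as np

def multi_head_concat(head_outputs):
    """head_outputs: list of M arrays of shape (N, F'); returns (N, M * F')."""
    return np.concatenate(head_outputs, axis=-1)

# Placeholder outputs of M = 4 heads for N = 5 points with F' = 16 channels.
heads = [np.full((5, 16), float(m)) for m in range(4)]
x_multi = multi_head_concat(heads)   # shape (5, 64)
```

Channels `16:32` of `x_multi` come from the second head, and so on, so each head keeps its own block of $F'$ channels.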

Attention pooling layer

To improve the stability and performance of the network, an attention pooling layer is defined on the neighboring channel of the multi-graph features:

$$
Y_{i}=\|_{m}^{M} \max _{j \in N_{i}} y_{i j}^{\prime(m)}
$$
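The pooling formula amounts to a max over the neighbor axis of each head's graph features, followed by concatenation across heads. A minimal NumPy sketch, with a hypothetical function name and random placeholder inputs, might look like this:

```python
import numpy as np

def attention_pooling(graph_features):
    """graph_features: list of M arrays of shape (N, k, F'); returns (N, M * F')."""
    # Max over the neighbor axis yields one local signature per head.
    pooled = [g.max(axis=1) for g in graph_features]
    # Concatenate the per-head signatures across feature channels.
    return np.concatenate(pooled, axis=-1)

rng = np.random.default_rng(0)
# Placeholder graph features: M = 3 heads, N = 5 points, k = 4 neighbors, F' = 16.
graph_feats = [rng.normal(size=(5, 4, 16)) for _ in range(3)]
Y_pooled = attention_pooling(graph_feats)   # shape (5, 48)
```

Because the maximum is taken over neighbors, the result does not depend on the order in which the neighbors are listed.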

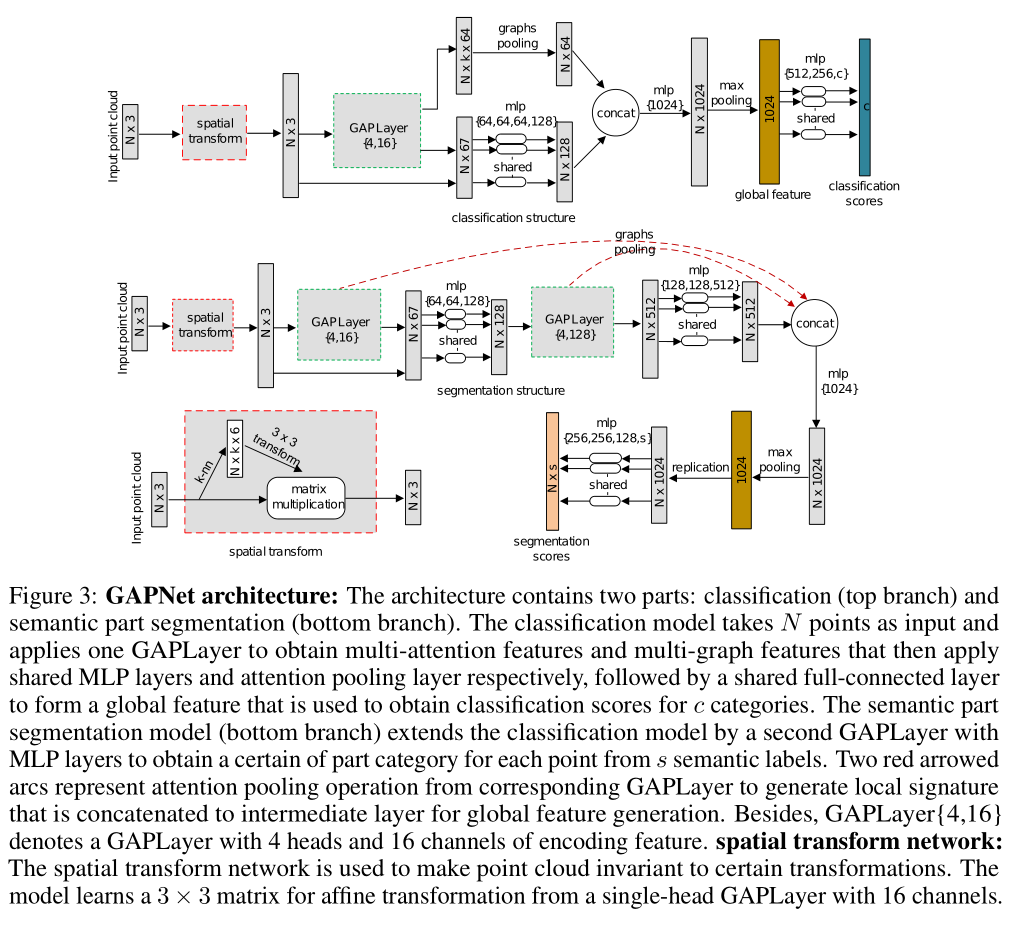

GAPNet architecture

This architecture differs from PointNet in three ways:

- It uses an attention-aware spatial transform network to make the point cloud invariant to certain transformations

- Instead of processing individual points, it extracts local features

- It uses an attention pooling layer to obtain the local signature, which is connected to the intermediate layers to obtain the global descriptor

Experiments

Classification

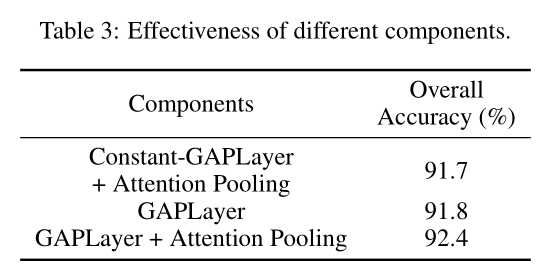

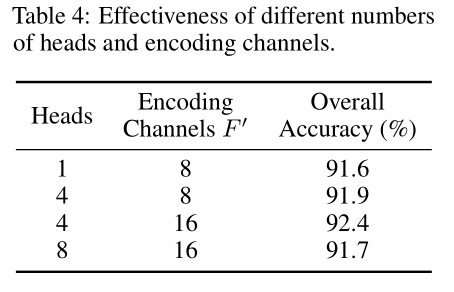

Ablation study

Semantic part segmentation
