
Convolutional Neural Network Model: LeNet Network Structure and Code Implementation

2022-07-04 22:11:00 [1 + 1= Wang]


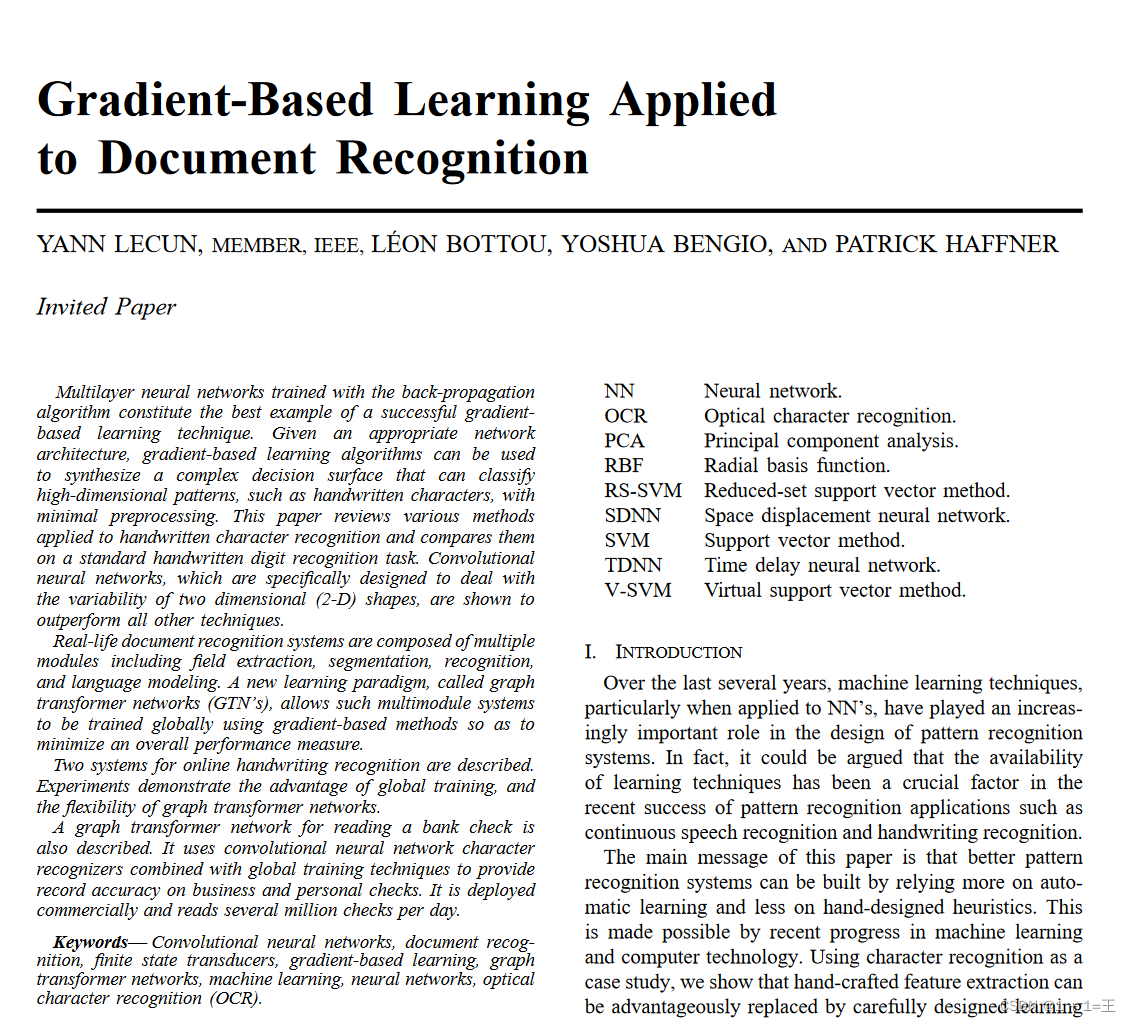

A brief introduction to LeNet

Original LeNet paper: https://ieeexplore.ieee.org/abstract/document/726791

LeNet can be called the "Hello World" of convolutional neural networks. Its design is simple but effective: convolution and pooling operations extract features, and a fully connected neural network then classifies them.

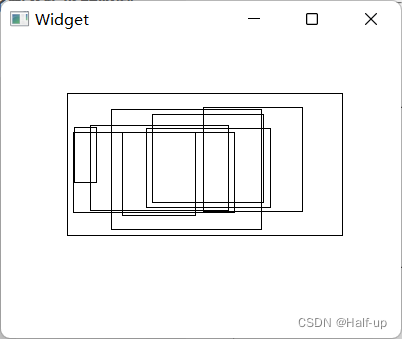

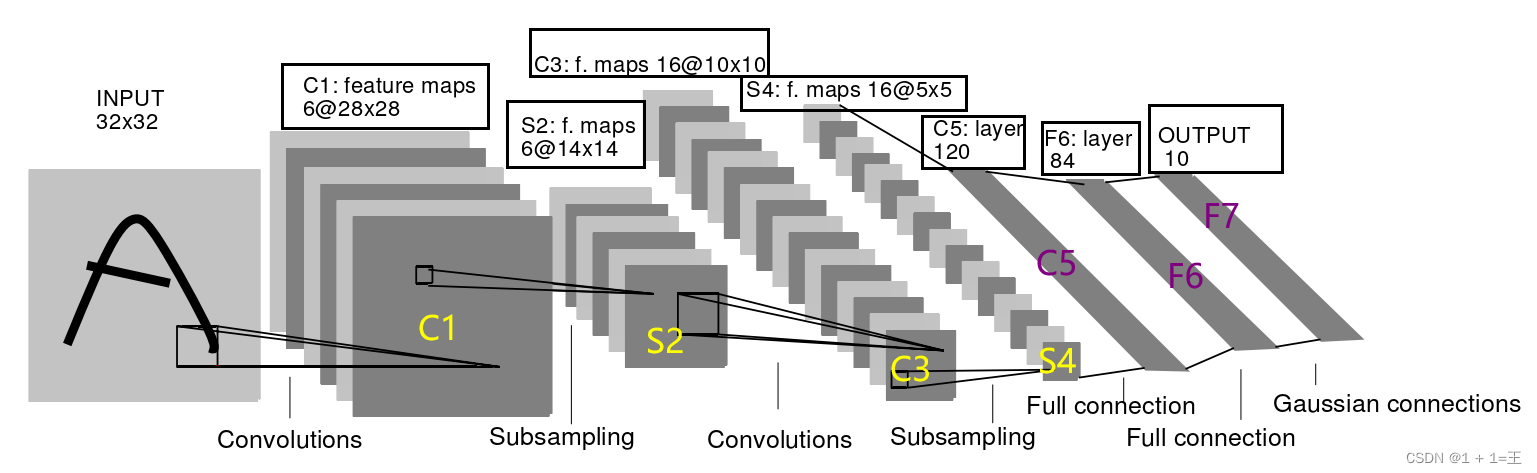

LeNet is a 7-layer neural network (not counting the input layer) with 3 convolutional layers, 2 pooling layers and 2 fully connected layers. Its structure is as follows:

The 7-layer structure of LeNet

Layer 0: Input

The input is an original image of 32×32 pixels with 3 channels.
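PyTorch layers expect batched input in `[N, Channel, Height, Width]` order, but many image libraries return a single image as height × width × channels. The following is a minimal sketch, assuming a random `uint8` tensor (`img_hwc`, a hypothetical name) stands in for a real 32×32 RGB image. It shows the conversion to the 3×32×32 input LeNet expects:

```python
import torch

# Hypothetical stand-in for a real 32x32 RGB image, stored height x width x channels
# (HWC) with 8-bit pixel values, as many image libraries return it.
img_hwc = torch.randint(0, 256, (32, 32, 3), dtype=torch.uint8)

# Scale to [0, 1] floats and move the channel axis first: HWC -> CHW.
x = img_hwc.float().div(255.0).permute(2, 0, 1)

# Add a batch dimension: CHW -> NCHW, the layout nn.Conv2d expects.
batch = x.unsqueeze(0)
print(batch.shape)  # torch.Size([1, 3, 32, 32])
```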

C1: The first convolutional layer

C1 is a convolutional layer with a kernel size of 5. The input size is 3×32×32 and the output feature map size is 16×28×28.

```python
self.conv1 = nn.Conv2d(3, 16, 5)
```
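To check the C1 shapes, you can run the layer on its own with a dummy input. This is a small sketch: the random input only stands in for a real image. Note that each of the 16 kernels spans all 3 input channels:

```python
import torch
import torch.nn as nn

conv1 = nn.Conv2d(3, 16, 5)  # C1: 3 input channels, 16 output channels, 5x5 kernel

# One 3x5x5 kernel per output channel, plus one bias per output channel.
print(conv1.weight.shape)  # torch.Size([16, 3, 5, 5])
print(conv1.bias.shape)    # torch.Size([16])

x = torch.randn(1, 3, 32, 32)  # dummy input in [N, C, H, W] layout
y = conv1(x)
print(y.shape)                 # torch.Size([1, 16, 28, 28])
```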

The parameters of torch.nn.Conv2d() are as follows:

```python
torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)
```

- in_channels (int) – Number of channels in the input image

- out_channels (int) – Number of channels produced by the convolution

- kernel_size (int or tuple) – Size of the convolving kernel

- stride (int or tuple, optional) – Stride of the convolution. Default: 1

- padding (int, tuple or str, optional) – Padding added to all four sides of the input. Default: 0

- padding_mode (string, optional) – ‘zeros’, ‘reflect’, ‘replicate’ or ‘circular’. Default: ‘zeros’

- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1

- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True
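Two of these parameters, `groups` and `bias`, change the layer's learnable parameters rather than its output size. Here is a short sketch using C3's channel counts (16 in, 32 out) purely for illustration:

```python
import torch.nn as nn

# groups=1 (default): every output channel sees all 16 input channels.
full = nn.Conv2d(16, 32, 5)
print(full.weight.shape)     # torch.Size([32, 16, 5, 5])

# groups=4: input and output channels are split into 4 groups; each output
# channel sees only 16 / 4 = 4 input channels, so the weight tensor is 4x smaller.
grouped = nn.Conv2d(16, 32, 5, groups=4)
print(grouped.weight.shape)  # torch.Size([32, 4, 5, 5])

# bias=False removes the learnable bias entirely.
no_bias = nn.Conv2d(16, 32, 5, bias=False)
print(no_bias.bias)          # None
```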

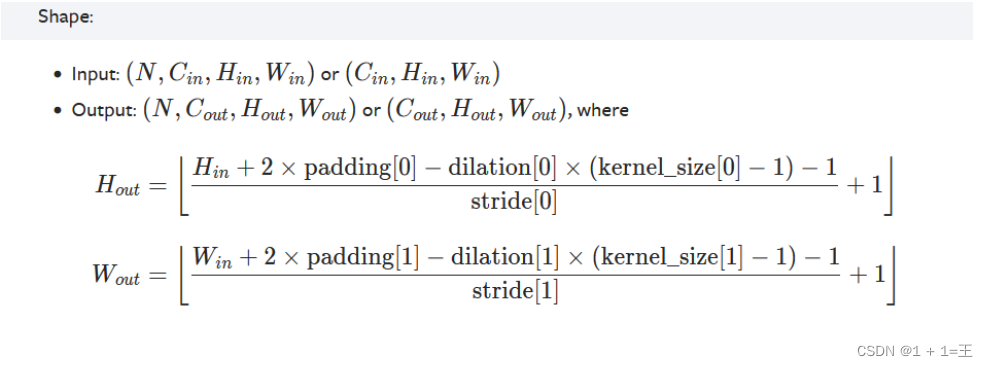

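The output size after convolution is calculated as follows (shown for the height; the width is computed the same way):

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} \right\rfloor + 1$$

For C1 (kernel_size 5, stride 1, padding 0, dilation 1): ⌊(32 + 0 − 4 − 1) / 1⌋ + 1 = 28.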
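The output-size formula from the PyTorch `nn.Conv2d` documentation is H_out = ⌊(H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride⌋ + 1. Below, it is written as a small helper, `conv2d_out` (a hypothetical name). The helper is then cross-checked against the real layer for a few arbitrary settings:

```python
import itertools

import torch
import torch.nn as nn

def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of nn.Conv2d, following the formula in the PyTorch docs."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

print(conv2d_out(32, 5))  # C1: 28
print(conv2d_out(14, 5))  # C3: 10

# Cross-check the formula against PyTorch on a 32x32 input for several settings.
x = torch.randn(1, 3, 32, 32)
for k, s, p, d in itertools.product([3, 5], [1, 2], [0, 2], [1, 2]):
    y = nn.Conv2d(3, 8, k, stride=s, padding=p, dilation=d)(x)
    assert y.shape[-1] == conv2d_out(32, k, s, p, d)
print("formula matches nn.Conv2d")
```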

S2: The first subsampling (pooling) layer

S2 is a pooling layer with kernel_size 2 and stride 2. The input size is 16×28×28 and the output feature map size is 16×14×14.

```python
self.pool1 = nn.MaxPool2d(2, 2)
```
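Max pooling with a 2×2 window and stride 2 keeps the largest value in each non-overlapping 2×2 block. It does this for each channel separately and has no learnable parameters. A small sketch follows; the 4×4 tensor `t` is an arbitrary worked example:

```python
import torch
import torch.nn as nn

pool1 = nn.MaxPool2d(2, 2)  # S2: 2x2 window, stride 2

x = torch.randn(1, 16, 28, 28)  # dummy tensor with the shape of C1's output
print(pool1(x).shape)           # torch.Size([1, 16, 14, 14])
print(sum(p.numel() for p in pool1.parameters()))  # 0 learnable parameters

# Worked example: each 2x2 block is replaced by its maximum.
t = torch.tensor([[[[1., 2., 5., 6.],
                    [3., 4., 7., 8.],
                    [9., 10., 13., 14.],
                    [11., 12., 15., 16.]]]])
print(pool1(t).flatten().tolist())  # [4.0, 8.0, 12.0, 16.0]
```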

The parameters of torch.nn.MaxPool2d are as follows:

```python
torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)
```

- kernel_size – the size of the window to take a max over

- stride – the stride of the window. Default value is kernel_size

- padding – implicit zero padding to be added on both sides

- dilation – a parameter that controls the stride of elements in the window

- return_indices – if True, will return the max indices along with the outputs. Useful for torch.nn.MaxUnpool2d later

- ceil_mode – when True, will use ceil instead of floor to compute the output shape
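The `ceil_mode` and `return_indices` options are easiest to understand on small inputs. In this sketch, the 5×5 map and the 4×4 map are arbitrary examples, not LeNet data:

```python
import torch
import torch.nn as nn

x = torch.arange(25.0).reshape(1, 1, 5, 5)  # a 5x5 map with an odd side length

# ceil_mode=False (default): floor((5 - 2) / 2) + 1 = 2, the last row/column is dropped.
print(nn.MaxPool2d(2, 2)(x).shape)                  # torch.Size([1, 1, 2, 2])
# ceil_mode=True: ceil((5 - 2) / 2) + 1 = 3, a partial window covers the border.
print(nn.MaxPool2d(2, 2, ceil_mode=True)(x).shape)  # torch.Size([1, 1, 3, 3])

# return_indices=True also returns the flat position of each maximum,
# which nn.MaxUnpool2d uses to put the values back (all other positions become 0).
pool = nn.MaxPool2d(2, 2, return_indices=True)
unpool = nn.MaxUnpool2d(2, 2)
y = torch.arange(16.0).reshape(1, 1, 4, 4)
out, idx = pool(y)
restored = unpool(out, idx)
print(out.flatten().tolist())  # [5.0, 7.0, 13.0, 15.0]
print(idx.flatten().tolist())  # [5, 7, 13, 15]
print(restored.shape)          # torch.Size([1, 1, 4, 4])
```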

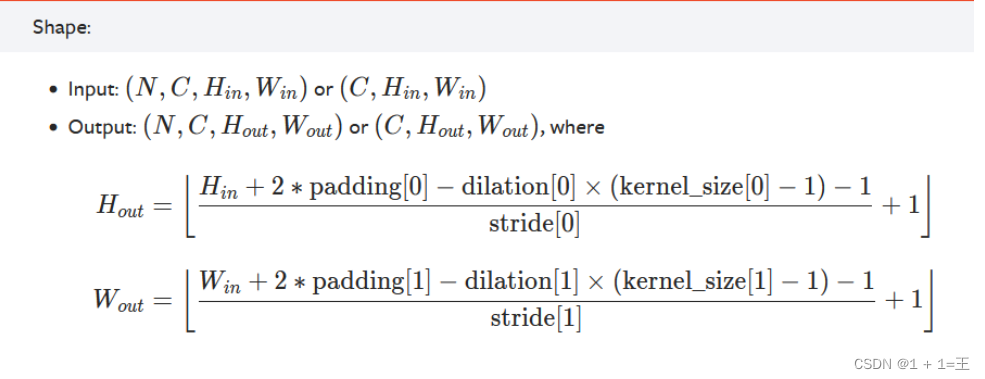

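The output size after pooling uses the same formula as convolution. It rounds down by default, or up when ceil_mode=True:

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} \right\rfloor + 1$$

For S2 (kernel_size 2, stride 2, padding 0, dilation 1): ⌊(28 + 0 − 1 − 1) / 2⌋ + 1 = 14.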
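Convolution and (default, floor-mode) pooling share this formula. You can therefore trace the spatial size through the whole feature extractor arithmetically. Doing so shows where the `32*5*5` used later by the first fully connected layer comes from. Here is a minimal sketch; `out_size` is a hypothetical helper name:

```python
def out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Floor-mode output side length shared by nn.Conv2d and nn.MaxPool2d."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

side = 32                            # input: 3x32x32
side = out_size(side, 5)             # C1 -> 28
side = out_size(side, 2, stride=2)   # S2 -> 14
side = out_size(side, 5)             # C3 -> 10
side = out_size(side, 2, stride=2)   # S4 -> 5
flat = 32 * side * side              # 32 channels after C3/S4
print(side, flat)                    # 5 800
```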

C3: The second convolutional layer

C3 is a convolutional layer with a kernel size of 5. The input size is 16×14×14 and the output feature map size is 32×10×10.

```python
self.conv2 = nn.Conv2d(16, 32, 5)
```
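In C3, each output value combines all 16 input channels, not just one. The sketch below recomputes a single output value of C3 by hand and compares it with the layer's result; the random input is only a placeholder. PyTorch's `Conv2d` computes a cross-correlation, so the kernel is applied without flipping:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
conv2 = nn.Conv2d(16, 32, 5)     # C3
x = torch.randn(1, 16, 14, 14)   # dummy tensor with the shape of S2's output

with torch.no_grad():
    y = conv2(x)
    print(y.shape)               # torch.Size([1, 32, 10, 10])

    # Output channel 0 at position (0, 0): multiply the top-left 5x5 patch of
    # ALL 16 input channels element-wise by kernel 0, sum, then add bias 0.
    patch = x[0, :, 0:5, 0:5]    # shape (16, 5, 5)
    manual = (patch * conv2.weight[0]).sum() + conv2.bias[0]
    print(torch.allclose(manual, y[0, 0, 0, 0], atol=1e-5))  # True
```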

S4: The second subsampling (pooling) layer

S4 is a pooling layer with kernel_size 2 and stride 2. The input size is 32×10×10 and the output feature map size is 32×5×5.

```python
self.pool2 = nn.MaxPool2d(2, 2)
```
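The output of S4 must be flattened to a vector of 32×5×5 = 800 values per sample before it reaches the fully connected layers. Here is a short sketch with a dummy batch of 4. It compares `view(-1, 32*5*5)`, the call this article's `forward()` uses, with `torch.flatten(..., start_dim=1)`, which avoids hard-coding the size:

```python
import torch
import torch.nn as nn

pool2 = nn.MaxPool2d(2, 2)         # S4
x = torch.randn(4, 32, 10, 10)     # dummy batch of 4 C3 outputs
y = pool2(x)
print(y.shape)                     # torch.Size([4, 32, 5, 5])

# Flatten everything except the batch dimension.
a = y.view(-1, 32 * 5 * 5)         # hard-codes the per-sample size (800)
b = torch.flatten(y, start_dim=1)  # infers it from the tensor's shape
print(a.shape, torch.equal(a, b))  # torch.Size([4, 800]) True
```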

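C5: The third convolutional layer

C5 is a convolutional layer with a kernel size of 5. The input size is 32×5×5 and the output feature map size is 120×1×1.

Here, a fully connected layer is used instead. Because the 5×5 kernel covers the entire 5×5 input, each of the 120 outputs depends on all 32×5×5 = 800 input values. That is exactly what a fully connected layer with 800 inputs and 120 outputs computes.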

```python
self.fc1 = nn.Linear(32*5*5, 120)
```
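To see why the fully connected layer can replace C5, copy the weights of a 5×5 convolution (120 kernels, 32 input channels) into a `Linear(800, 120)` and compare the outputs on the same 32×5×5 input. This is a sketch with random weights and inputs. Both layers flatten in channel, row, column order, so each kernel becomes one row of the linear weight matrix:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
conv5 = nn.Conv2d(32, 120, 5)     # C5 written as a convolution
fc1 = nn.Linear(32 * 5 * 5, 120)  # C5 as used in this article

with torch.no_grad():
    # Each 32x5x5 kernel becomes one row of the 120x800 weight matrix.
    fc1.weight.copy_(conv5.weight.view(120, -1))
    fc1.bias.copy_(conv5.bias)

    x = torch.randn(2, 32, 5, 5)  # dummy batch of S4 outputs
    out_conv = conv5(x)           # shape (2, 120, 1, 1)
    out_fc = fc1(x.view(2, -1))   # shape (2, 120)
    print(torch.allclose(out_conv.view(2, 120), out_fc, atol=1e-5))  # True
```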

F6: The first fully connected layer

F6 is a fully connected layer. The input size is 120 and the output size is 84.

```python
self.fc2 = nn.Linear(120, 84)
```

F7: The second fully connected layer

F7 is a fully connected layer. The input size is 84 and the output size is 10 (one output per class, for 10 classes).

```python
self.fc3 = nn.Linear(84, 10)
```
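The 10 outputs of F7 are raw class scores (logits), with no activation applied after `fc3`. Softmax turns them into probabilities, and argmax picks the predicted class. A loss such as `nn.CrossEntropyLoss` applies log-softmax internally, so it takes the logits directly. The sketch below runs F6 and F7 on a dummy batch of C5 outputs:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

fc2 = nn.Linear(120, 84)  # F6
fc3 = nn.Linear(84, 10)   # F7: one score per class

h = torch.randn(4, 120)           # dummy stand-in for 4 C5 outputs
logits = fc3(F.relu(fc2(h)))      # shape (4, 10), raw scores
probs = F.softmax(logits, dim=1)  # each row sums to 1
pred = logits.argmax(dim=1)       # predicted class index (0..9) per sample
print(logits.shape, pred.shape)   # torch.Size([4, 10]) torch.Size([4])
```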

Building LeNet with PyTorch

Building a network model takes at least two steps:

1. Create a class that inherits from nn.Module:

```python
import torch.nn as nn

# PyTorch tensor layout: [N, Channel, Height, Width]
class LeNet(nn.Module):
    ...
```

2. Implement two methods in the class:

- `def __init__(self):` defines the layers used to build the network.

- `def forward(self, x):` defines the forward propagation process.
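Here is the same two-method pattern on a deliberately tiny module. `TinyNet` is a hypothetical example, not part of LeNet. Layers assigned as attributes in `__init__` are registered automatically, so their parameters appear in `parameters()`. Calling the module object runs `forward`:

```python
import torch
import torch.nn as nn

class TinyNet(nn.Module):  # hypothetical minimal example, not part of LeNet
    def __init__(self):
        super().__init__()
        # Assigning a layer as an attribute registers it as a submodule.
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        # Defines the computation; call net(x) rather than net.forward(x)
        # so that any registered hooks also run.
        return torch.relu(self.fc(x))

net = TinyNet()
print(len(list(net.parameters())))   # 2 (weight and bias)
print(net(torch.randn(3, 4)).shape)  # torch.Size([3, 2])
```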

PyTorch implementation of the LeNet network:

```python
import torch.nn as nn
import torch.nn.functional as F

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 5)    # C1
        self.pool1 = nn.MaxPool2d(2, 2)     # S2
        self.conv2 = nn.Conv2d(16, 32, 5)   # C3
        self.pool2 = nn.MaxPool2d(2, 2)     # S4
        self.fc1 = nn.Linear(32*5*5, 120)   # C5 (replaced by a fully connected layer)
        self.fc2 = nn.Linear(120, 84)       # F6
        self.fc3 = nn.Linear(84, 10)        # F7

    def forward(self, x):
        x = F.relu(self.conv1(x))   # input(3, 32, 32) output(16, 28, 28)
        x = self.pool1(x)           # output(16, 14, 14)
        x = F.relu(self.conv2(x))   # output(32, 10, 10)
        x = self.pool2(x)           # output(32, 5, 5)
        x = x.view(-1, 32*5*5)      # output(32*5*5): flatten, keeping the batch dimension
        x = F.relu(self.fc1(x))     # output(120)
        x = F.relu(self.fc2(x))     # output(84)
        x = self.fc3(x)             # output(10): raw class scores, no activation
        return x
```
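As an alternative design (not the article's code), the same layer stack can be written with `nn.Sequential`, using `nn.Flatten()` in place of the manual `view` call. The sketch below builds this hypothetical `lenet_seq` and prints the shape after every layer for a dummy batch of 4 images. One trade-off: its `state_dict` keys are numeric (`0.weight`, ...) instead of `conv1.weight`, so saved weights do not load directly into the class-based version.

```python
import torch
import torch.nn as nn

# Same layers as the LeNet class, expressed with nn.Sequential.
lenet_seq = nn.Sequential(
    nn.Conv2d(3, 16, 5), nn.ReLU(),         # C1
    nn.MaxPool2d(2, 2),                     # S2
    nn.Conv2d(16, 32, 5), nn.ReLU(),        # C3
    nn.MaxPool2d(2, 2),                     # S4
    nn.Flatten(),                           # (N, 32, 5, 5) -> (N, 800)
    nn.Linear(32 * 5 * 5, 120), nn.ReLU(),  # C5
    nn.Linear(120, 84), nn.ReLU(),          # F6
    nn.Linear(84, 10),                      # F7
)

# Trace the output shape after every layer.
x = torch.randn(4, 3, 32, 32)
out = x
for layer in lenet_seq:
    out = layer(out)
    print(f"{type(layer).__name__:<10} {tuple(out.shape)}")
```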

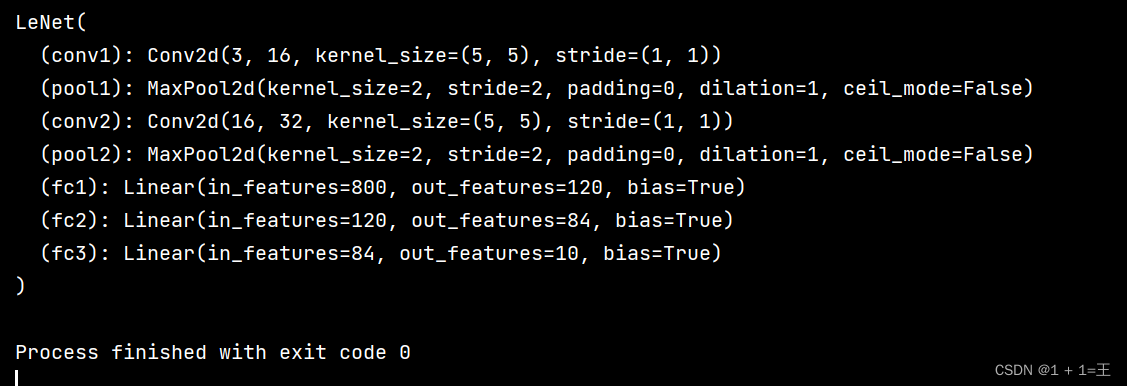

Print the LeNet structure as follows:

```python
model = LeNet()
print(model)
```
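`print(model)` lists the layers but not how many learnable parameters each one has. The counts follow directly from the layer sizes: a convolution has in × out × k × k weights plus one bias per output channel, and a linear layer has in × out weights plus one bias per output. This pure-Python sketch does the arithmetic; the helper names are hypothetical:

```python
def conv_params(in_ch, out_ch, k):
    return in_ch * out_ch * k * k + out_ch  # weights + one bias per output channel

def linear_params(in_f, out_f):
    return in_f * out_f + out_f             # weights + one bias per output unit

counts = {
    "conv1": conv_params(3, 16, 5),
    "conv2": conv_params(16, 32, 5),
    "fc1": linear_params(32 * 5 * 5, 120),
    "fc2": linear_params(120, 84),
    "fc3": linear_params(84, 10),
}
for name, n in counts.items():
    print(f"{name}: {n}")
total = sum(counts.values())
print("total:", total)  # total: 121182
```

With the LeNet class defined above, `sum(p.numel() for p in model.parameters())` should report the same total. Pooling layers contribute nothing, and most parameters (96,120) sit in fc1.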
