Machine learning notes: mutual information

2022-07-04 22:04:00 【Sit and watch the clouds rise】

1、 Overview

When you first encounter a new dataset, a good first step is to build a ranking with a feature utility metric, a function that measures the association between a feature and the target. You can then choose a smaller set of the most useful features to develop initially.

The metric we use is called "mutual information". Mutual information is a lot like correlation in that it measures a relationship between two quantities. Its advantage is that it can detect any kind of relationship, while correlation only detects linear relationships.

Mutual information is a good general-purpose metric. It is especially useful at the start of feature development, when you may not yet know which model you will use.

Mutual information is easy to use and interpret, computationally efficient, theoretically well-founded, resistant to overfitting, and able to detect any kind of relationship.

2、 Mutual information and what it measures

Mutual information describes relationships in terms of uncertainty. The mutual information (MI) between two quantities is a measure of how much knowing one quantity reduces uncertainty about the other. If you knew the value of a feature, how much more confident would you be about the target?

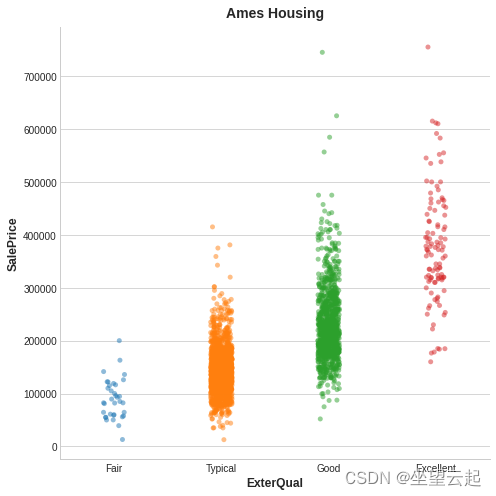

This is an example from the Ames Housing data. The figure shows the relationship between the exterior quality of a house and the price it sold for. Each point represents a house.

As the figure shows, knowing the value of ExterQual should make you more certain about the corresponding SalePrice: each category of ExterQual tends to concentrate SalePrice within a certain range. The mutual information between ExterQual and SalePrice is the average reduction in uncertainty about SalePrice, taken over the four values of ExterQual. Because Fair occurs less often than Typical, for example, Fair gets less weight in the MI score.

What we are calling uncertainty is measured with a quantity from information theory known as "entropy". The entropy of a variable means roughly: "how many yes-or-no questions would you need, on average, to describe an occurrence of that variable?" The more questions you have to ask, the more uncertain you are about the variable. Mutual information is how many questions about the target you expect the feature to answer.
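To make the "average reduction in uncertainty" concrete, here is a minimal sketch that computes entropy and mutual information directly from counts for two discrete variables. The toy data and the function names (`entropy_bits`, `mutual_information_bits`) are hypothetical and are not taken from the Ames Housing data; the sketch only illustrates the definition and is not how scikit-learn estimates MI.

```python
import math
from collections import Counter


def entropy_bits(values):
    """Shannon entropy of a sequence of discrete values, in bits."""
    counts = Counter(values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def mutual_information_bits(feature, target):
    """H(target) minus the frequency-weighted average of H(target | feature = v)."""
    n = len(feature)
    conditional = 0.0
    for value, count in Counter(feature).items():
        subset = [t for f, t in zip(feature, target) if f == value]
        # Rare categories get a small weight (count / n) in the average.
        conditional += (count / n) * entropy_bits(subset)
    return entropy_bits(target) - conditional


# Hypothetical toy data: a quality grade and a coarse price band.
quality = ["Fair", "Typical", "Typical", "Typical", "Good", "Good", "Good", "Good"]
price_band = ["low", "low", "low", "high", "high", "high", "high", "high"]

h_target = entropy_bits(price_band)
mi = mutual_information_bits(quality, price_band)
print(f"H(price_band) = {h_target:.3f} bits")
print(f"I(quality; price_band) = {mi:.3f} bits")
```

The weight `count / n` is what makes an infrequent category such as Fair contribute less to the score than a frequent one.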

3、 Interpreting mutual information scores

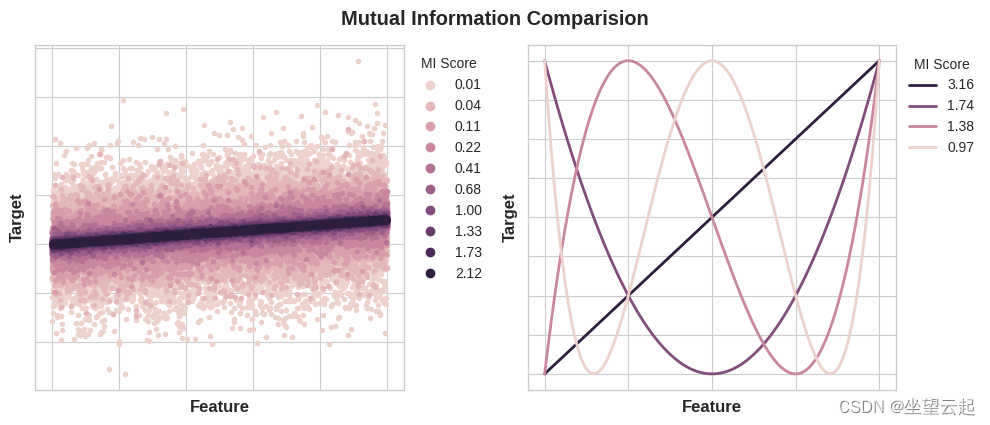

The least possible mutual information between two quantities is 0.0. When MI is zero, the quantities are independent: neither can tell you anything about the other. Conversely, in theory there is no upper bound on MI. In practice, though, values above roughly 2.0 are uncommon. (Mutual information is a logarithmic quantity, so it increases very slowly.)
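A small sketch of why scores grow so slowly. It rests on two assumptions that the text above does not state: the scores are measured in nats (natural logarithm), which to my understanding is the unit scikit-learn's estimators report, and the feature is an idealized one with k equally likely categories that determines the target exactly, so that MI equals the entropy ln(k).

```python
import math

# Assumption: a feature with k equally likely categories that determines the
# target exactly, so its MI with the target equals ln(k) nats.
for k in (2, 4, 8, 16, 64, 1024):
    print(f"k = {k:>4}  ->  MI = ln(k) = {math.log(k):.3f} nats")

# Smallest number of equally likely categories whose entropy reaches 2.0 nats.
k_needed = math.ceil(math.exp(2.0))
print(f"categories needed to reach 2.0 nats: {k_needed}")
```

Under these assumptions, doubling the number of categories adds only about 0.69 nats, which is consistent with high scores being rare in practice.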

The figure in this section gives an idea of how MI values correspond to the kind and degree of association between a feature and the target.

Here are some things to remember when applying mutual information :

MI can help you understand the relative potential of a feature as a predictor of the target, considered by itself.

A feature can be very informative when it interacts with other features yet not very informative on its own. MI cannot detect interactions between features. It is a univariate metric.

The actual usefulness of a feature depends on the model you use it with. A feature is only useful to the extent that its relationship with the target is one your model can learn. A high MI score does not mean your model will be able to do anything with that information. You may need to transform the feature first to expose the association.
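The point about interactions can be illustrated with a minimal sketch on hypothetical data in which the target is the XOR of two binary features. The helper `mutual_information_nats` is an illustrative plug-in estimate for discrete values, not scikit-learn's estimator.

```python
import math
from collections import Counter


def mutual_information_nats(xs, ys):
    """Plug-in MI estimate for two discrete sequences, in nats."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    # p(x, y) / (p(x) p(y)) simplifies to c * n / (count_x * count_y).
    return sum(
        (c / n) * math.log(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )


# Hypothetical balanced data: the target is the XOR of two binary features.
x1 = [0, 0, 1, 1] * 25
x2 = [0, 1, 0, 1] * 25
y = [a ^ b for a, b in zip(x1, x2)]

mi_x1 = mutual_information_nats(x1, y)
mi_x2 = mutual_information_nats(x2, y)

# Encoding both features as one combined feature exposes the interaction.
combined = [2 * a + b for a, b in zip(x1, x2)]
mi_combined = mutual_information_nats(combined, y)

print(f"x1 alone:  {mi_x1:.3f}")
print(f"x2 alone:  {mi_x2:.3f}")
print(f"x1 and x2: {mi_combined:.3f}")
```

On this constructed data each feature scores zero by itself, while the combined feature scores ln 2 (about 0.693 nats), because the two features together determine the target exactly.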

4、 Example: 1985 automobiles

The Automobile dataset consists of 193 cars from the 1985 model year. The goal for this dataset is to predict a car's price (the target) from 23 of its features, such as make, body style, and horsepower. In this example, we rank the features with mutual information and investigate the results through data visualization.

Dataset: Automobile Dataset on Kaggle, https://www.kaggle.com/toramky/automobile-dataset

The following code imports some libraries and loads the dataset.

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    import seaborn as sns

    # Note: on matplotlib >= 3.6 this style is named "seaborn-v0_8-whitegrid".
    plt.style.use("seaborn-whitegrid")

    df = pd.read_csv("../input/fe-course-data/autos.csv")
    df.head()

The scikit-learn algorithm for MI treats discrete features differently from continuous features, so you need to tell it which is which. As a rule of thumb, anything that must have a float dtype is not discrete. Categoricals (object or categorical dtype) can be treated as discrete by giving them a label encoding.

    X = df.copy()
    y = X.pop("price")

    # Label encoding for categoricals
    for colname in X.select_dtypes("object"):
        X[colname], _ = X[colname].factorize()

    # All discrete features should now have integer dtypes (double-check this before using MI!)
    discrete_features = X.dtypes == int

Scikit-learn has two mutual information metrics in its feature_selection module: one for real-valued targets (mutual_info_regression) and one for categorical targets (mutual_info_classif). Our target, price, is real-valued. The next cell computes the MI scores for our features and wraps them in a pandas Series.

    from sklearn.feature_selection import mutual_info_regression

    def make_mi_scores(X, y, discrete_features):
        # Estimate MI between each feature and the target, then sort descending.
        mi_scores = mutual_info_regression(X, y, discrete_features=discrete_features)
        mi_scores = pd.Series(mi_scores, name="MI Scores", index=X.columns)
        mi_scores = mi_scores.sort_values(ascending=False)
        return mi_scores

    mi_scores = make_mi_scores(X, y, discrete_features)

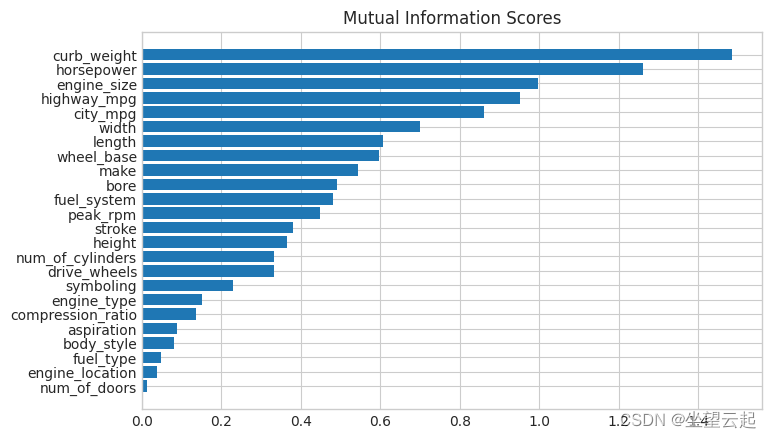

    mi_scores[::3]  # show a few features with their MI scores

Output:

    curb_weight          1.486440
    highway_mpg          0.950989
    length               0.607955
    bore                 0.489772
    stroke               0.380041
    drive_wheels         0.332973
    compression_ratio    0.134799
    fuel_type            0.048139
    Name: MI Scores, dtype: float64

Now a bar chart, which makes the comparison easier:

    def plot_mi_scores(scores):
        # Sort ascending so the highest score ends up at the top of the chart.
        scores = scores.sort_values(ascending=True)
        width = np.arange(len(scores))
        ticks = list(scores.index)
        plt.barh(width, scores)
        plt.yticks(width, ticks)
        plt.title("Mutual Information Scores")

    plt.figure(dpi=100, figsize=(8, 5))
    plot_mi_scores(mi_scores)

Data visualization is a good follow-up to a utility ranking. Let's take a closer look at a couple of these features.

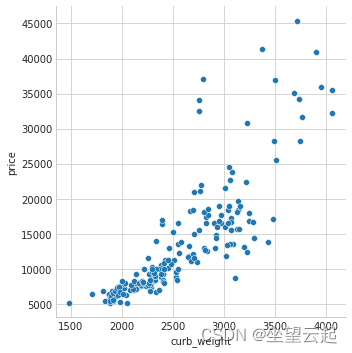

As we might expect, the high-scoring curb_weight feature has a strong relationship with price, the target.

    sns.relplot(x="curb_weight", y="price", data=df);

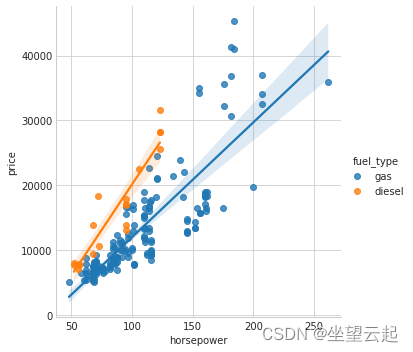

The fuel_type feature has a fairly low MI score, but as the figure shows, it clearly separates two price populations with different trends within the horsepower feature. This indicates that fuel_type contributes an interaction effect and may not be unimportant after all. Before deciding from its MI score that a feature is unimportant, it is best to investigate any possible interaction effects. Domain knowledge can offer a lot of guidance here.

    sns.lmplot(x="horsepower", y="price", hue="fuel_type", data=df);

Data visualization is an important addition to the feature engineering toolbox. Along with utility metrics such as mutual information, visualizations like these can help you discover important relationships in your data.

边栏推荐

猜你喜欢

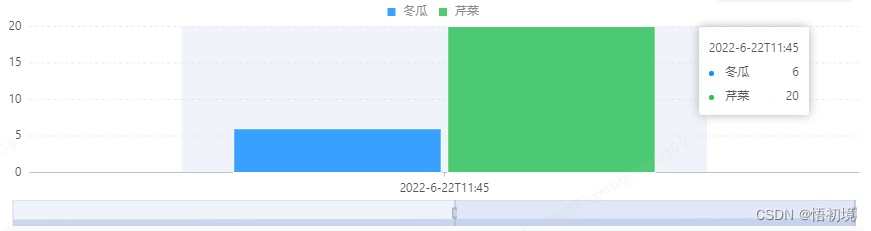

bizchart+slider实现分组柱状图

Flutter TextField示例

MP3是如何诞生的?

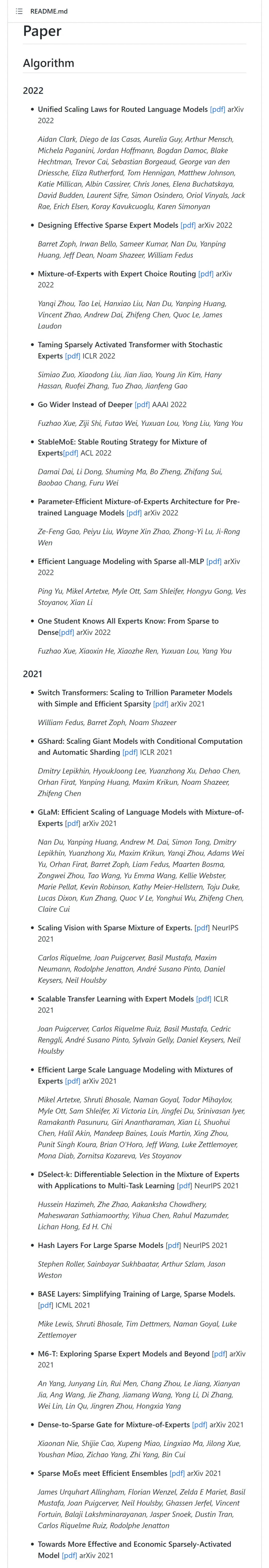

Sorting and sharing of selected papers, systems and applications related to the most comprehensive mixed expert (MOE) model in history

![[advanced C language] array & pointer & array written test questions](/img/3f/83801afba153cfc0dd73aa8187ef20.png)

[advanced C language] array & pointer & array written test questions

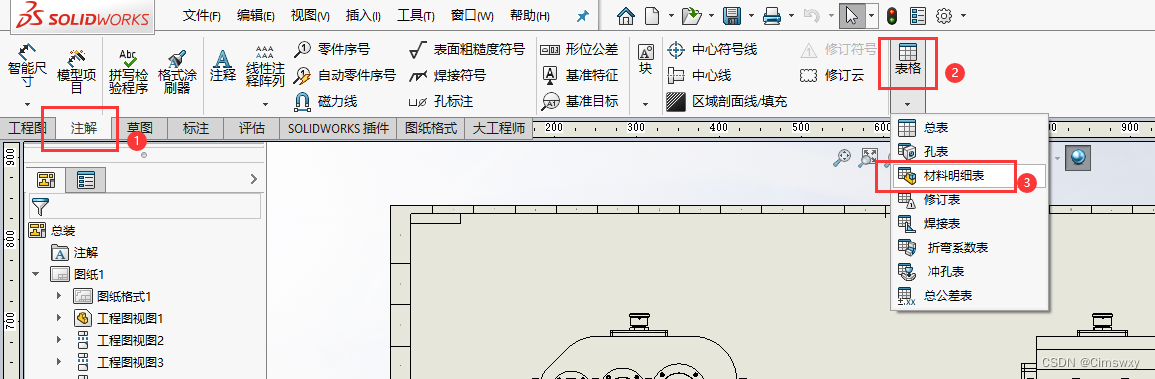

Operation of adding material schedule in SolidWorks drawing

QT - double buffer plot

智洋创新与华为签署合作协议,共同推进昇腾AI产业持续发展

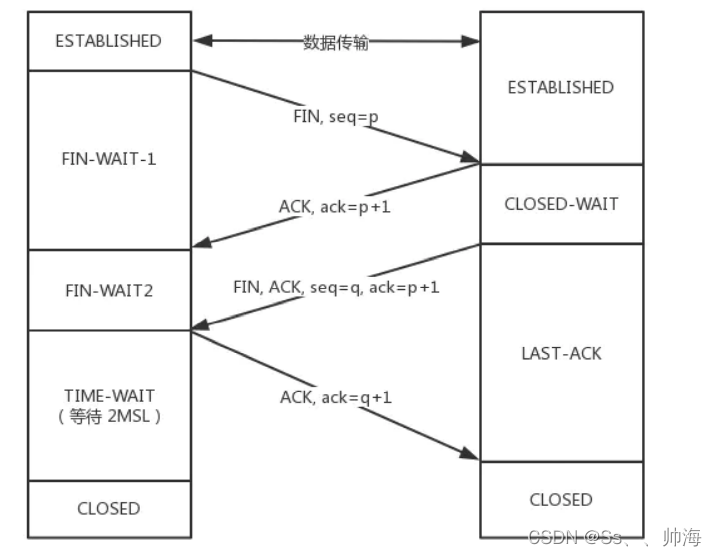

TCP shakes hands three times and waves four times. Do you really understand?

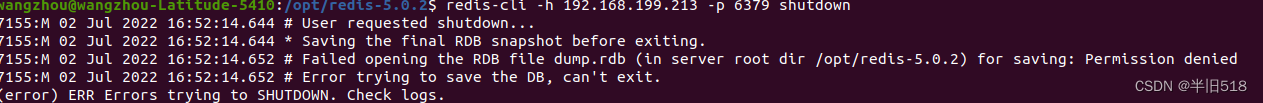

Redis03 - network configuration and heartbeat mechanism of redis

随机推荐

【C语言进阶篇】数组&&指针&&数组笔试题

Operation of adding material schedule in SolidWorks drawing

For MySQL= No data equal to null can be found. Solution

Jerry's ad series MIDI function description [chapter]

gtest从一无所知到熟练使用(3)什么是test suite和test case

close系统调用分析-性能优化

文件读取写入

面试题 01.01. 判定字符是否唯一

GTEST from ignorance to proficiency (4) how to write unit tests with GTEST

Learning breakout 3 - about energy

How was MP3 born?

Master the use of auto analyze in data warehouse

面试题 01.08. 零矩阵

Is it safe to open an account in the stock of Caicai college? Can you only open an account by digging money?

类方法和类变量的使用

【公开课预告】:视频质量评价基础与实践

# 2156. 查找给定哈希值的子串-后序遍历

TCP三次握手,四次挥手,你真的了解吗?

el-tree结合el-table,树形添加修改操作

MongoDB中的索引操作总结