
PyTorch parameter initialization

2022-07-07 07:43:00 【Melody2050】

Suitable initializers include kaiming_normal and xavier_normal.
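A minimal sketch of how these two initializers might be applied to a model. In current PyTorch the in-place variants are spelled `torch.nn.init.kaiming_normal_` and `torch.nn.init.xavier_normal_` (with a trailing underscore). The helper name `init_weights`, the toy model, and the choice of Kaiming for the convolution and Xavier for the linear layer are assumptions made for illustration, not something the original post specifies.

```python
import torch
import torch.nn as nn


def init_weights(module: nn.Module) -> None:
    """Hypothetical helper: Kaiming-normal for Conv2d, Xavier-normal for Linear."""
    if isinstance(module, nn.Conv2d):
        # Kaiming (He) initialization is usually paired with ReLU activations.
        nn.init.kaiming_normal_(module.weight, mode="fan_out", nonlinearity="relu")
        if module.bias is not None:
            nn.init.zeros_(module.bias)
    elif isinstance(module, nn.Linear):
        # Xavier (Glorot) initialization scales by both fan-in and fan-out.
        nn.init.xavier_normal_(module.weight)
        if module.bias is not None:
            nn.init.zeros_(module.bias)


# Toy model (an assumption): 3x8x8 input -> conv 3x3 -> 8x6x6 -> 10 outputs.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(8 * 6 * 6, 10),
)

# Module.apply calls init_weights on every submodule, then on the model itself.
model.apply(init_weights)

out = model(torch.randn(2, 3, 8, 8))
```

`Module.apply` is a convenient way to run one initialization function over every layer; layers that match neither branch keep their default initialization.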

Reference blog: weight-initialization-in-neural-networks-a-journey-from-the-basics-to-kaiming

Exploding and vanishing gradients

Backpropagation

Backpropagation follows the chain rule: as the gradient travels from the deep layers back toward the shallow layers, it is multiplied by the local gradient of every layer it passes through. We take our example from "Deep feedforward network and Xavier initialization principle". Suppose a linear (fully connected) layer is followed by an activation layer, set up as follows:

- The linear layer f2 takes x as input and produces z as output, that is, z = f2(x).
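The multiplication by each layer's gradient can be checked numerically. The sketch below builds the linear layer followed by an activation and compares the gradient computed by hand with the chain rule against the one PyTorch's autograd produces. The sigmoid activation, the tensor sizes, the omitted bias, and the toy loss (sum of the activations) are all assumptions chosen for illustration.

```python
import torch

torch.manual_seed(0)

x = torch.randn(4, requires_grad=True)  # input to the linear layer
W = torch.randn(3, 4)                   # linear-layer weights (bias omitted)

z = W @ x             # linear layer: z = f2(x)
a = torch.sigmoid(z)  # activation layer (sigmoid is an assumption)
loss = a.sum()        # toy scalar loss, so d(loss)/da is all ones
loss.backward()       # autograd fills x.grad

# Manual chain rule, one multiplication per layer:
#   d(loss)/dz = d(loss)/da * sigmoid'(z)
#   d(loss)/dx = W^T @ d(loss)/dz
a_val = a.detach()
grad_a = torch.ones_like(a_val)
grad_z = grad_a * a_val * (1 - a_val)  # sigmoid'(z) = a * (1 - a)
grad_x = W.T @ grad_z

print(torch.allclose(grad_x, x.grad))
```

Because sigmoid'(z) is never larger than 0.25, every such activation layer shrinks the gradient, while the factor W^T can shrink or amplify it depending on the scale of the weights. Stacking many layers repeats these multiplications, which is why the initial weight scale decides whether gradients vanish or explode.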

边栏推荐

- leetcode:105. 从前序与中序遍历序列构造二叉树

- Live online system source code, using valueanimator to achieve view zoom in and out animation effect

- 一、Go知识查缺补漏+实战课程笔记 | 青训营笔记

- buuctf misc USB

- 三、高质量编程与性能调优实战 青训营笔记

- UWB learning 1

- Detailed explanation of uboot image generation process of Hisilicon chip (hi3516dv300)

- Live broadcast platform source code, foldable menu bar

- URP - shaders and materials - light shader lit

- MobaXterm

猜你喜欢

I failed in the postgraduate entrance examination and couldn't get into the big factory. I feel like it's over

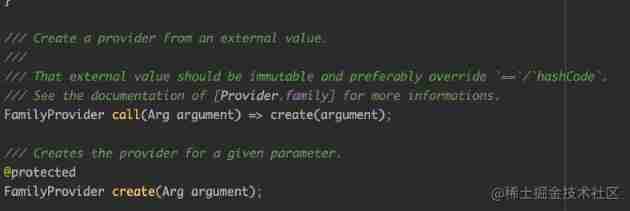

Flutter riverpod is comprehensively and deeply analyzed. Why is it officially recommended?

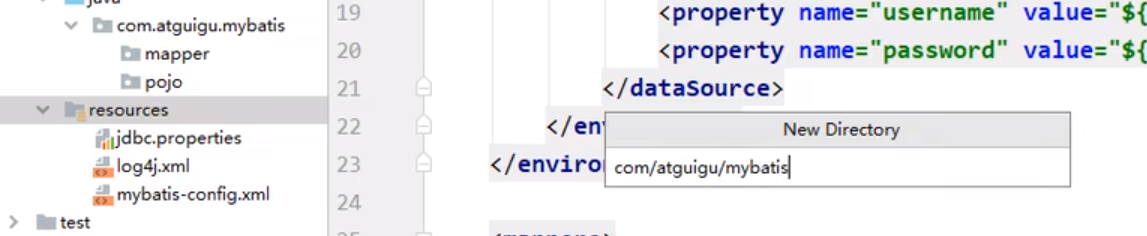

resource 创建包方式

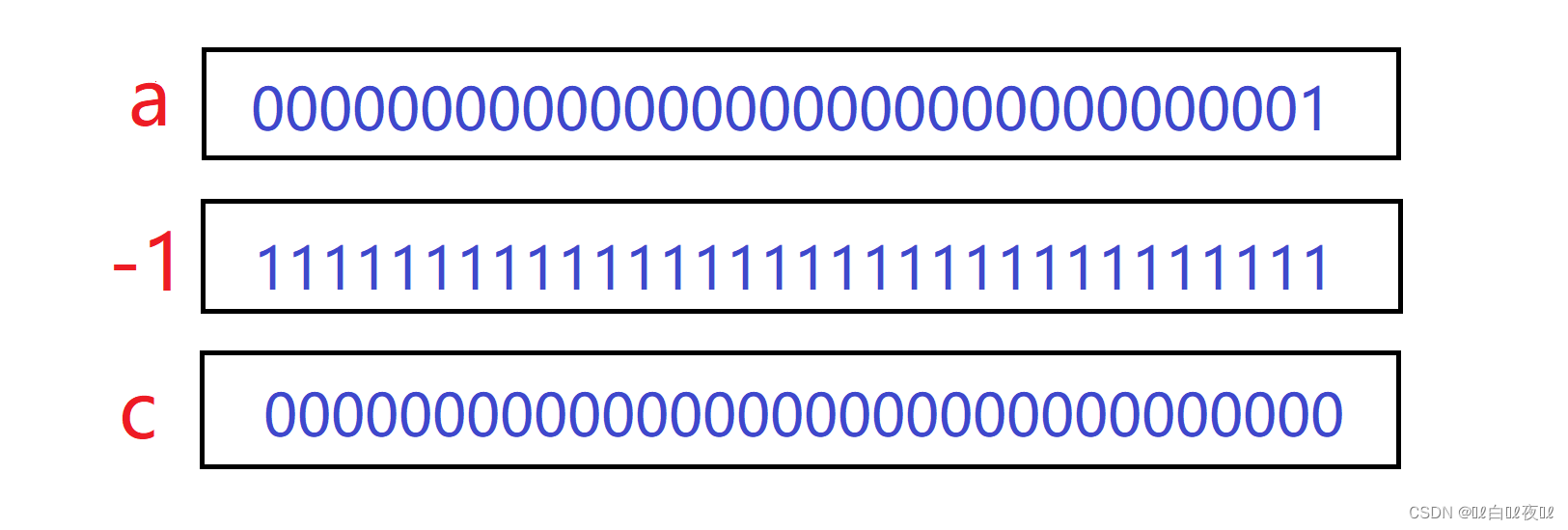

C language (high-level) data storage + Practice

![[SUCTF 2019]Game](/img/9c/362117a4bf3a1435ececa288112dfc.png)

[SUCTF 2019]Game

nacos

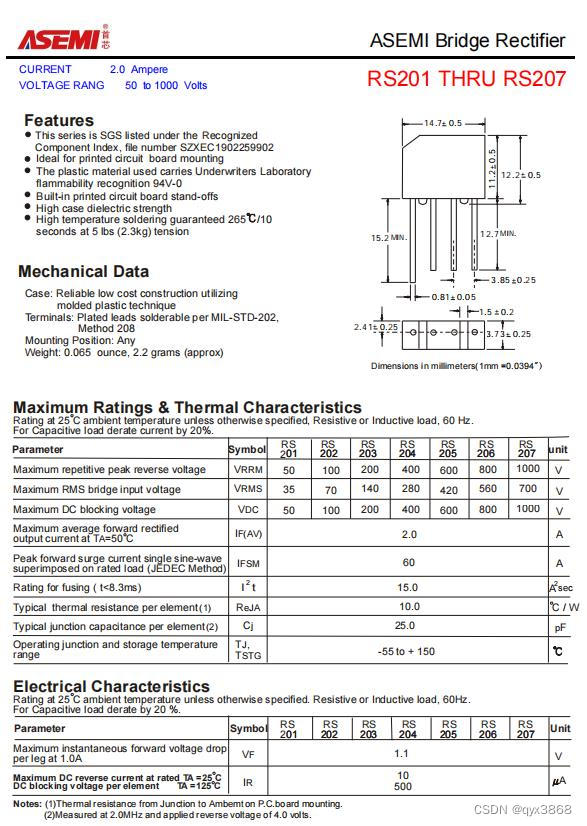

Idea add class annotation template and method template

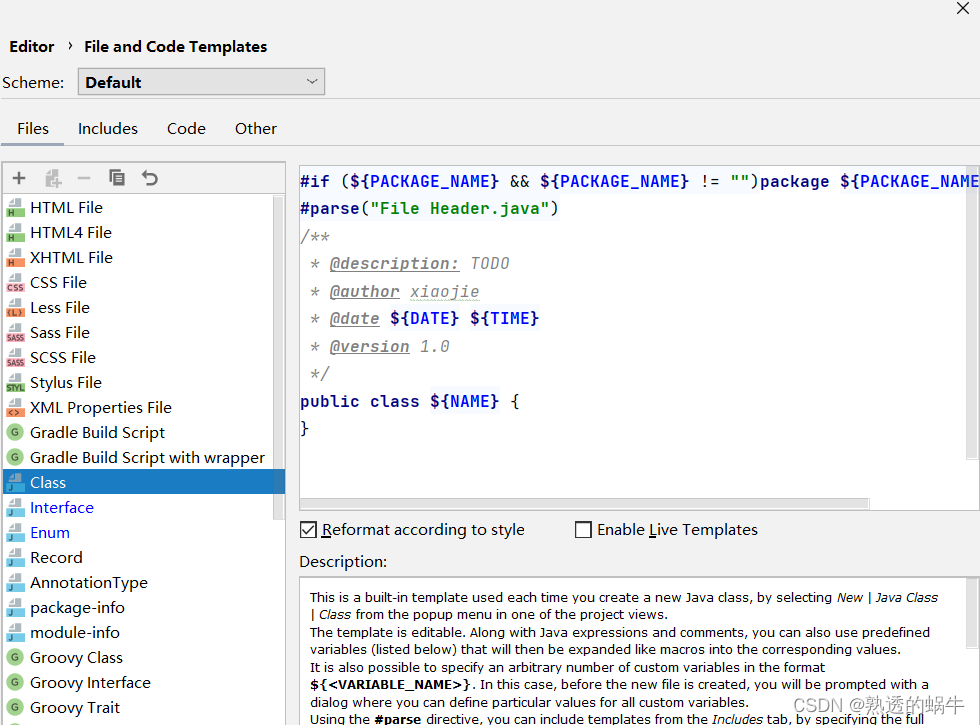

ASEMI整流桥RS210参数,RS210规格,RS210封装

L'externalisation a duré trois ans.

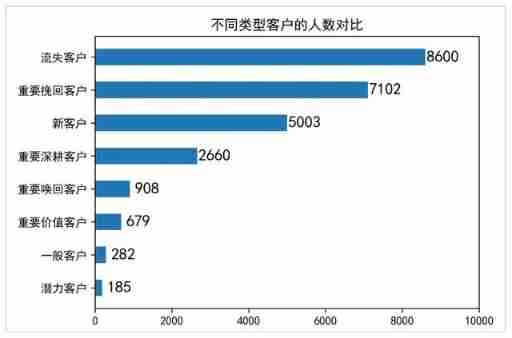

Summary of customer value model (RFM) technology for data analysis

随机推荐

一、Go知识查缺补漏+实战课程笔记 | 青训营笔记

Model application of time series analysis - stock price prediction

[SUCTF 2019]Game

How to * * labelimg

直播平台源码,可折叠式菜单栏

buuctf misc USB

Write CPU yourself -- Chapter 9 -- learning notes

【Unity】物体做圆周运动的几个思路

misc ez_ usb

[UTCTF2020]file header

测试周期被压缩?教你9个方法去应对

【经验分享】如何为visio扩展云服务图标

智联+影音,AITO问界M7想干翻的不止理想One

2、 Concurrent and test notes youth training camp notes

[2022 ACTF]web题目复现

Gslx680 touch screen driver source code analysis (gslx680. C)

leetcode:105. 从前序与中序遍历序列构造二叉树

Detailed explanation of uboot image generation process of Hisilicon chip (hi3516dv300)

外包干了四年,废了...

海思芯片(hi3516dv300)uboot镜像生成过程详解