Task5: Multi-class sentiment analysis

2022-07-03 13:14:00 【Levi Bebe】

In this task, we perform classification on a dataset with 6 classes.

You can run the code below in a Jupyter notebook.

import torch

from torchtext.legacy import data

from torchtext.legacy import datasets

import random

SEED = 1234

torch.manual_seed(SEED)

torch.backends.cudnn.deterministic = True

TEXT = data.Field(tokenize = 'spacy',tokenizer_language = 'en_core_web_sm')

LABEL = data.LabelField()

train_data, test_data = datasets.TREC.splits(TEXT, LABEL, fine_grained=False)

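# random.seed() returns None, so seed first and pass the resulting state explicitly
random.seed(SEED)

train_data, valid_data = train_data.split(random_state = random.getstate())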

# Building a vocabulary

MAX_VOCAB_SIZE = 25_000

TEXT.build_vocab(train_data,
                 max_size = MAX_VOCAB_SIZE,
                 vectors = "glove.6B.100d",
                 unk_init = torch.Tensor.normal_)

LABEL.build_vocab(train_data)
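As a rough illustration of what `max_size` does, the plain-Python sketch below keeps only the most frequent tokens and maps everything else to an unknown token. The helper names (`build_vocab`, `numericalize`) and the toy corpus are hypothetical, and placing `<unk>` and `<pad>` at indices 0 and 1 is an assumption about the default layout. The real torchtext implementation also handles tie-breaking and pre-trained vectors, which this sketch ignores.

```python
from collections import Counter

def build_vocab(tokenized_sentences, max_size, specials=("<unk>", "<pad>")):
    """Map the `max_size` most frequent tokens to indices, after the special tokens."""
    counts = Counter(tok for sent in tokenized_sentences for tok in sent)
    itos = list(specials) + [tok for tok, _ in counts.most_common(max_size)]
    stoi = {tok: idx for idx, tok in enumerate(itos)}
    return itos, stoi

def numericalize(sentence, stoi, unk_token="<unk>"):
    """Replace each token by its index; out-of-vocabulary tokens map to <unk>."""
    return [stoi.get(tok, stoi[unk_token]) for tok in sentence]

# Toy corpus (hypothetical): "is" appears 3 times, "what" and "the" twice each
corpus = [
    ["what", "is", "the", "capital", "of", "france"],
    ["who", "is", "the", "president"],
    ["what", "is", "a", "neuron"],
]
itos, stoi = build_vocab(corpus, max_size=3)
print(len(itos))                                      # specials + 3 kept tokens
print(numericalize(["what", "is", "python"], stoi))   # "python" falls back to <unk>
```

This is also why `len(TEXT.vocab)` can be slightly larger than `MAX_VOCAB_SIZE`: the special tokens are added on top of the capped token list.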

# Build iterators

BATCH_SIZE = 64

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

train_iterator, valid_iterator, test_iterator = data.BucketIterator.splits(
    (train_data, valid_data, test_data),
    batch_size = BATCH_SIZE,
    device = device)

# Model building

import torch.nn as nn

import torch.nn.functional as F

class CNN(nn.Module):
    def __init__(self, vocab_size, embedding_dim, n_filters, filter_sizes, output_dim,
                 dropout, pad_idx):
        super().__init__()

        # padding_idx: the <pad> embedding receives no gradient updates
        self.embedding = nn.Embedding(vocab_size, embedding_dim, padding_idx = pad_idx)

        # one convolution per filter size; each filter spans the full embedding dimension
        self.convs = nn.ModuleList([
            nn.Conv2d(in_channels = 1,
                      out_channels = n_filters,
                      kernel_size = (fs, embedding_dim))
            for fs in filter_sizes
        ])

        self.fc = nn.Linear(len(filter_sizes) * n_filters, output_dim)
        self.dropout = nn.Dropout(dropout)

    def forward(self, text):
        #text = [sent len, batch size]
        text = text.permute(1, 0)
        #text = [batch size, sent len]

        embedded = self.embedding(text)
        #embedded = [batch size, sent len, emb dim]

        embedded = embedded.unsqueeze(1)
        #embedded = [batch size, 1, sent len, emb dim]

        conved = [F.relu(conv(embedded)).squeeze(3) for conv in self.convs]
        #conved_n = [batch size, n_filters, sent len - filter_sizes[n] + 1]

        pooled = [F.max_pool1d(conv, conv.shape[2]).squeeze(2) for conv in conved]
        #pooled_n = [batch size, n_filters]

        cat = self.dropout(torch.cat(pooled, dim = 1))
        #cat = [batch size, n_filters * len(filter_sizes)]

        return self.fc(cat)
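The shape comments in `forward` can be checked with simple arithmetic. The sketch below is plain Python with no tensors; the helper `conv_output_len` and the sentence length of 10 are hypothetical, and it assumes stride 1 with no padding, which matches the default `nn.Conv2d` settings used above.

```python
def conv_output_len(sent_len, filter_size):
    """Number of window positions for a stride-1, unpadded convolution."""
    if sent_len < filter_size:
        raise ValueError("sentence is shorter than the filter")
    return sent_len - filter_size + 1

N_FILTERS = 100
FILTER_SIZES = [2, 3, 4]
sent_len = 10  # hypothetical sentence length

for fs in FILTER_SIZES:
    out_len = conv_output_len(sent_len, fs)
    print(f"filter size {fs}: conved = [batch size, {N_FILTERS}, {out_len}]")

# Max-pooling over time collapses each conved tensor to [batch size, N_FILTERS],
# so the concatenated feature vector has this many entries per example:
feature_dim = len(FILTER_SIZES) * N_FILTERS
print(feature_dim)
```

Note that a sentence shorter than the largest filter (4 tokens here) has no valid window position, so very short inputs would need padding before they reach the model.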

# Model parameter settings

INPUT_DIM = len(TEXT.vocab)

EMBEDDING_DIM = 100

N_FILTERS = 100

FILTER_SIZES = [2,3,4]

OUTPUT_DIM = len(LABEL.vocab)

DROPOUT = 0.5

PAD_IDX = TEXT.vocab.stoi[TEXT.pad_token]

model = CNN(INPUT_DIM, EMBEDDING_DIM, N_FILTERS, FILTER_SIZES, OUTPUT_DIM, DROPOUT, PAD_IDX)

# Load the pre-trained embeddings

pretrained_embeddings = TEXT.vocab.vectors

model.embedding.weight.data.copy_(pretrained_embeddings)

# Zero-initialize the embeddings of the unknown and padding tokens

UNK_IDX = TEXT.vocab.stoi[TEXT.unk_token]

model.embedding.weight.data[UNK_IDX] = torch.zeros(EMBEDDING_DIM)

model.embedding.weight.data[PAD_IDX] = torch.zeros(EMBEDDING_DIM)

# Set up the optimizer and loss function

import torch.optim as optim

optimizer = optim.Adam(model.parameters())

criterion = nn.CrossEntropyLoss()

model = model.to(device)

criterion = criterion.to(device)

# Compute accuracy

def categorical_accuracy(preds, y):
    """ Returns accuracy per batch, i.e. if you get 8/10 right, this returns 0.8, NOT 8 """
    top_pred = preds.argmax(1, keepdim = True)  # index of the highest-scoring class
    correct = top_pred.eq(y.view_as(top_pred)).sum()
    acc = correct.float() / y.shape[0]
    return acc
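To make the accuracy computation concrete, here is a plain-Python sketch that mirrors `categorical_accuracy` without tensors. The function name `categorical_accuracy_py` and the sample scores are hypothetical and serve only as an illustration.

```python
def categorical_accuracy_py(scores, labels):
    """Fraction of examples whose highest-scoring class equals the label."""
    correct = 0
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=row.__getitem__)  # argmax over classes
        correct += int(predicted == label)
    return correct / len(labels)

# Four examples, three classes; the last example is misclassified (predicts 0, label 1)
scores = [
    [0.1, 2.0, -1.0],
    [1.5, 0.2, 0.3],
    [0.0, 0.1, 0.9],
    [0.4, 0.3, 0.2],
]
labels = [1, 0, 2, 1]
print(categorical_accuracy_py(scores, labels))  # prints 0.75
```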

# Training

def train(model, iterator, optimizer, criterion):
    epoch_loss = 0
    epoch_acc = 0

    model.train()

    for batch in iterator:
        optimizer.zero_grad()

        predictions = model(batch.text)
        loss = criterion(predictions, batch.label)
        acc = categorical_accuracy(predictions, batch.label)

        loss.backward()
        optimizer.step()

        epoch_loss += loss.item()
        epoch_acc += acc.item()

    return epoch_loss / len(iterator), epoch_acc / len(iterator)

# Evaluation

def evaluate(model, iterator, criterion):
    epoch_loss = 0
    epoch_acc = 0

    model.eval()

    # no gradients are needed during evaluation
    with torch.no_grad():
        for batch in iterator:
            predictions = model(batch.text)
            loss = criterion(predictions, batch.label)
            acc = categorical_accuracy(predictions, batch.label)

            epoch_loss += loss.item()
            epoch_acc += acc.item()

    return epoch_loss / len(iterator), epoch_acc / len(iterator)

# Timing

import time

def epoch_time(start_time, end_time):
    # split the elapsed time into whole minutes and remaining whole seconds
    elapsed_time = end_time - start_time
    elapsed_mins = int(elapsed_time / 60)
    elapsed_secs = int(elapsed_time - (elapsed_mins * 60))
    return elapsed_mins, elapsed_secs
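As a quick usage example, the sketch below repeats `epoch_time` so that it runs on its own and applies it to a made-up pair of timestamps (the values 0.0 and 125.7 are hypothetical).

```python
def epoch_time(start_time, end_time):
    # split the elapsed time into whole minutes and remaining whole seconds
    elapsed_time = end_time - start_time
    elapsed_mins = int(elapsed_time / 60)
    elapsed_secs = int(elapsed_time - (elapsed_mins * 60))
    return elapsed_mins, elapsed_secs

mins, secs = epoch_time(0.0, 125.7)
print(f"{mins}m {secs}s")  # prints "2m 5s"
```

Fractional seconds are truncated rather than rounded, so 125.7 seconds is reported as 2m 5s.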

# Train the model

N_EPOCHS = 5

best_valid_loss = float('inf')

for epoch in range(N_EPOCHS):
    start_time = time.time()

    train_loss, train_acc = train(model, train_iterator, optimizer, criterion)
    valid_loss, valid_acc = evaluate(model, valid_iterator, criterion)

    end_time = time.time()

    epoch_mins, epoch_secs = epoch_time(start_time, end_time)

    # keep the checkpoint with the lowest validation loss
    if valid_loss < best_valid_loss:
        best_valid_loss = valid_loss
        torch.save(model.state_dict(), 'tut5-model.pt')

    print(f'Epoch: {epoch+1:02} | Epoch Time: {epoch_mins}m {epoch_secs}s')
    print(f'\tTrain Loss: {train_loss:.3f} | Train Acc: {train_acc*100:.2f}%')
    print(f'\t Val. Loss: {valid_loss:.3f} |  Val. Acc: {valid_acc*100:.2f}%')

# Test the model

# reload the best checkpoint saved during training
model.load_state_dict(torch.load('tut5-model.pt'))

test_loss, test_acc = evaluate(model, test_iterator, criterion)

print(f'Test Loss: {test_loss:.3f} | Test Acc: {test_acc*100:.2f}%')

边栏推荐

- 【数据库原理复习题】

- C graphical tutorial (Fourth Edition)_ Chapter 20 asynchronous programming: examples - using asynchronous

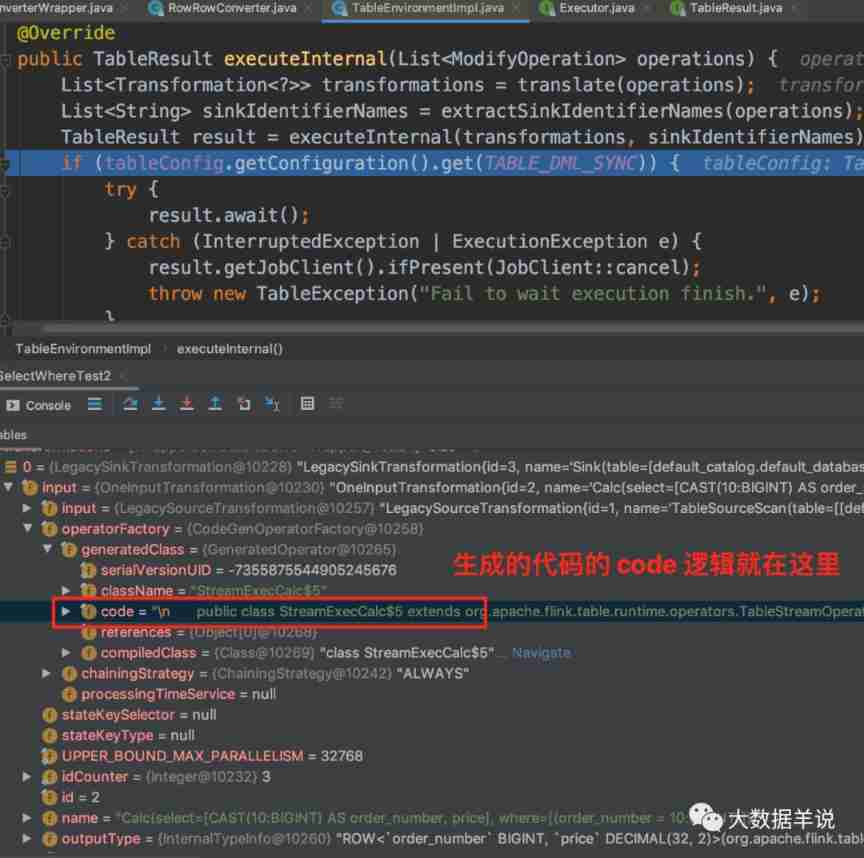

- Flink SQL knows why (16): dlink, a powerful tool for developing enterprises with Flink SQL

- C graphical tutorial (Fourth Edition)_ Chapter 13 entrustment: delegatesamplep245

- Leetcode234 palindrome linked list

- 剑指 Offer 14- I. 剪绳子

- Huffman coding experiment report

- [problem exploration and solution of one or more filters or listeners failing to start]

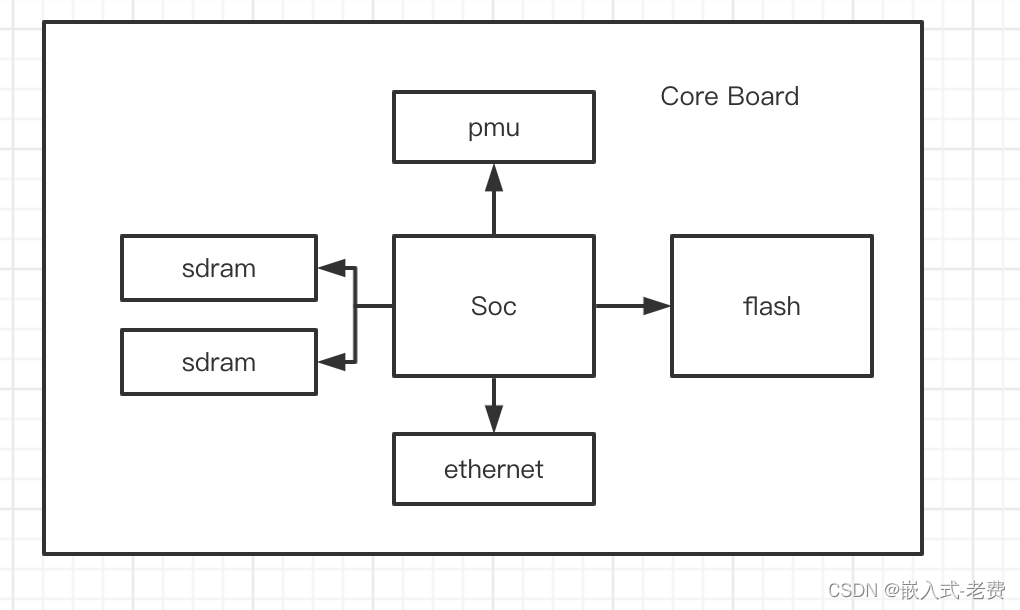

- stm32和电机开发(从mcu到架构设计)

- 关于CPU缓冲行的理解

猜你喜欢

Some thoughts on business

Image component in ETS development mode of openharmony application development

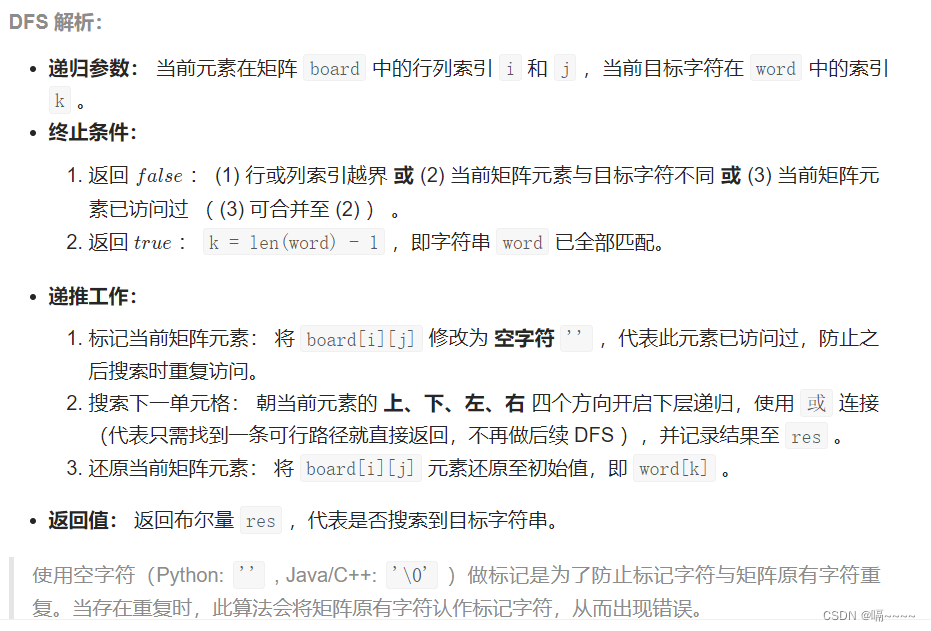

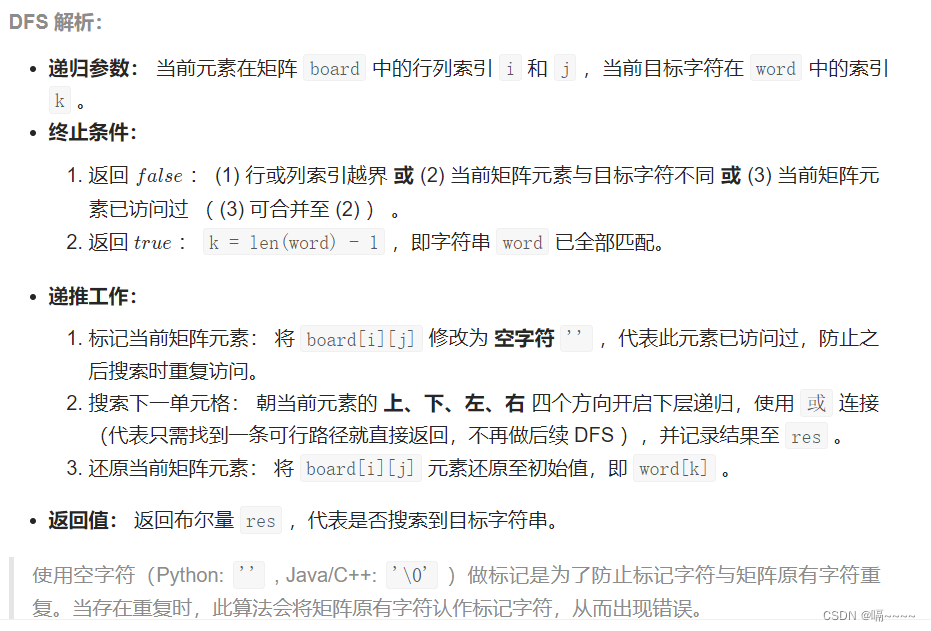

剑指 Offer 12. 矩阵中的路径

Flink SQL knows why (7): haven't you even seen the ETL and group AGG scenarios that are most suitable for Flink SQL?

Integer case study of packaging

![[comprehensive question] [Database Principle]](/img/d7/8c51306bb390e0383a017d9097e1e5.png)

[comprehensive question] [Database Principle]

Huffman coding experiment report

stm32和电机开发(从mcu到架构设计)

Sword finger offer 12 Path in matrix

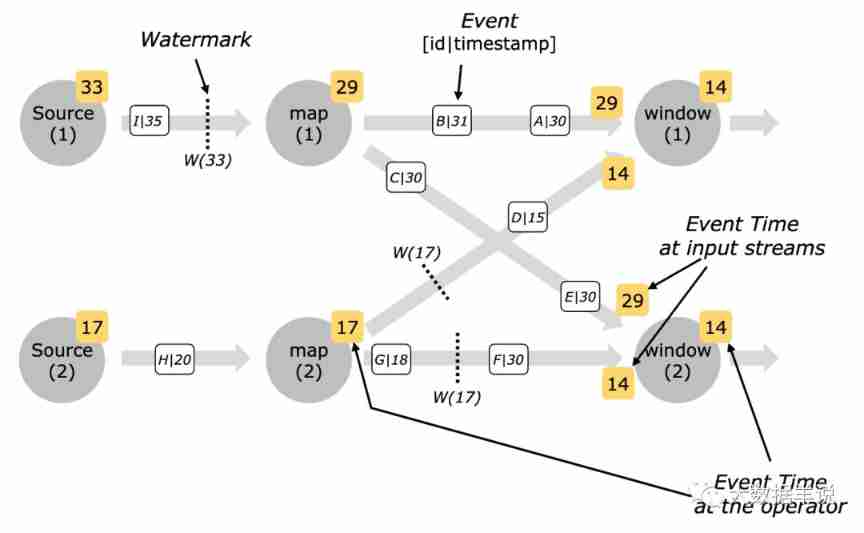

Flink code is written like this. It's strange that the window can be triggered (bad programming habits)

随机推荐

2022-02-14 analysis of the startup and request processing process of the incluxdb cluster Coordinator

【習題五】【數據庫原理】

[exercise 7] [Database Principle]

阿南的疑惑

php:&nbsp; The document cannot be displayed in Chinese

Understanding of CPU buffer line

【習題七】【數據庫原理】

2022-02-14 incluxdb cluster write data writetoshard parsing

mysqlbetween实现选取介于两个值之间的数据范围

Integer case study of packaging

今日睡眠质量记录77分

2022-02-13 plan for next week

The foreground uses RSA asymmetric security to encrypt user information

regular expression

C graphical tutorial (Fourth Edition)_ Chapter 20 asynchronous programming: examples - cases without asynchronous

Reptile

道路建设问题

February 14, 2022, incluxdb survey - mind map

Luogup3694 Bangbang chorus standing in line

Flink SQL knows why (XV): changed the source code and realized a batch lookup join (with source code attached)