Golang from 1.5 And it started to introduce three colors GC, After many improvements , Current 1.9 Version of GC The pause time can already be very short .

The reduction in pause time means " Maximum response time " The shortening of , It also makes go It is more suitable for writing network service program .

This article will analyze golang The source code to explain go Three colors in GC Implementation principle of .

This series analyzes golang The source code is Google Officially realized 1.9.2 edition , Not applicable to other versions and gccgo And so on ,

The operating environment is Ubuntu 16.04 LTS 64bit.

First, I will explain the basic concepts , Then explain the distributor , Let's talk about the implementation of the collector .

Basic concepts

Memory structure

go A block of virtual memory will be allocated when the program starts. The address is continuous memory , The structure is as follows :

This memory is divided into 3 Regions , stay X64 The upper sizes are 512M, 16G and 512G, Their functions are as follows :

arena

arena Area is what we usually call heap, go from heap The allocated memory is in this area .

bitmap

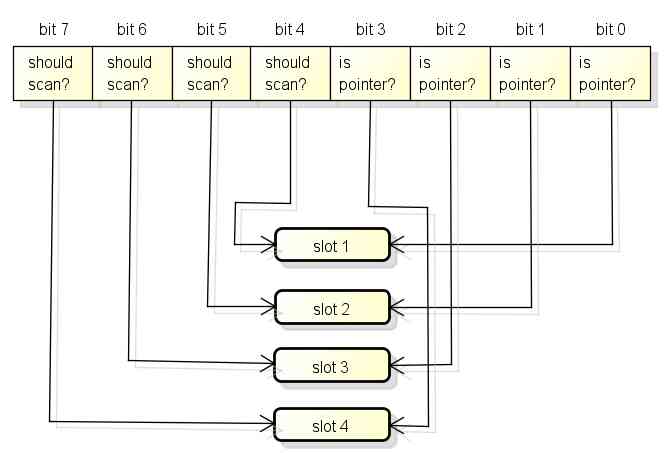

bitmap Areas are used to indicate arena Which address in the region holds the object , And which addresses in the object contain The pointer .

bitmap One of the areas byte(8 bit) Corresponding arena Four pointer size memory in the region , That is to say 2 bit Memory corresponding to a pointer size .

therefore bitmap The size of the area is 512GB / Pointer size (8 byte) / 4 = 16GB.

bitmap One of the areas byte Corresponding arena The four pointer size memory structure of the region is as follows ,

Each pointer size of memory will have two bit Indicates whether the scan should continue and whether the pointer should be included :

bitmap Medium byte and arena From the end of , That is, as memory allocation expands to both sides :

spans

spans Areas are used to indicate arena A page in the section (Page) Which is it? span, What is? span It will be introduced below .

spans A pointer in the region (8 byte) Corresponding arena A page in the area ( stay go Middle page =8KB).

therefore spans Its size is 512GB / Page size (8KB) * Pointer size (8 byte) = 512MB.

spans A pointer to a region corresponds to arena The structure of the page of the area is as follows , and bitmap The difference is that correspondence starts at the beginning :

When from Heap Assigned to

A lot of explanation go It has been mentioned in both articles and books , go Automatically determines which objects should be placed on the stack , Which objects should be placed on the heap .

In a nutshell , When the contents of an object may be accessed after the end of the function that generated the object , Then the object will be allocated on the heap .

Allocation of objects on the heap includes :

- Returns a pointer to the object

- Pass the pointer of the object to other functions

- Objects are used in closures and need to be modified

- Use new

stay C It's very dangerous for functions to return pointers to objects on the stack , But in go But it's safe , Because this object is automatically allocated on the heap .

go The process of deciding whether to use the heap to allocate objects is also called " Escape analysis ".

GC Bitmap

GC When marking, you need to know where to include the pointer , For example, the above mentioned bitmap The area covers arena Pointer information in the region .

besides , GC You also need to know where in the stack space there are pointers ,

Because stack space doesn't belong to arena Area , Stack space pointer information will be in The function of information Inside .

in addition , GC When assigning an object, you also need to set it according to the type of the object bitmap Area , The source pointer information will be in Type information Inside .

Sum up go There are the following GC Bitmap:

- bitmap Area : covers arena Area , Use 2 bit Represents a pointer size memory

- The function of information : Covering the stack space of the function , Use 1 bit Represents a pointer size memory ( be located stackmap.bytedata)

- Type information : When assigning objects, it will be copied to bitmap Area , Use 1 bit Represents a pointer size memory ( be located _type.gcdata)

Span

span Is the block used to allocate objects , Here is a simple illustration of Span The internal structure of :

Usually a span Contains multiple elements of the same size , An element holds an object , Unless :

- span Used to save large objects , This situation span There's only one element

- span Object used to hold tiny objects without pointers (tiny object), This situation span You can save multiple objects with one element

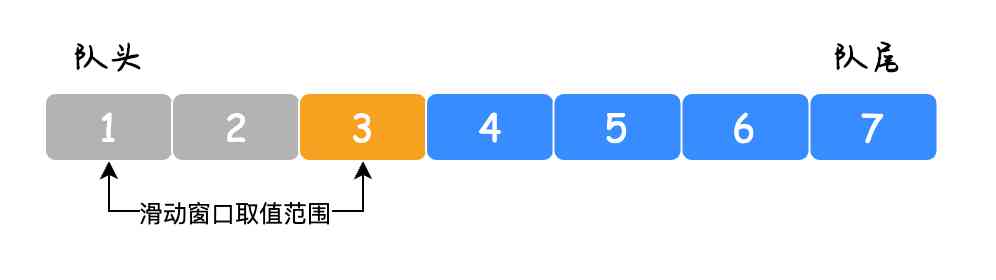

span There is one of them. freeindex Mark the address where the next assignment should start , After the distribution freeindex Will increase ,

stay freeindex All previous elements are assigned , stay freeindex The following elements may have been assigned , It may not be allocated .

span Every time GC Some elements may be recycled in the future , allocBits Used to mark which elements are assigned , Which elements are unallocated .

Use freeindex + allocBits You can skip assigned elements when allocating , Set the object in an unassigned element ,

But because every time I visit allocBits Efficiency will be slower , span There is an integer type in allocCache Used to cache freeindex At the beginning bitmap, The cache bit The value is opposite to the original value .

gcmarkBits Used in gc Mark which objects are alive when , Every time gc in the future gcmarkBits Will turn into allocBits.

It should be noted that span The memory of the structure itself is allocated from the system , As mentioned above spans Areas and bitmap The region is just an index .

Span The type of

span It can be divided into 67 A type of , as follows :

// class bytes/obj bytes/span objects tail waste max waste

// 1 8 8192 1024 0 87.50%

// 2 16 8192 512 0 43.75%

// 3 32 8192 256 0 46.88%

// 4 48 8192 170 32 31.52%

// 5 64 8192 128 0 23.44%

// 6 80 8192 102 32 19.07%

// 7 96 8192 85 32 15.95%

// 8 112 8192 73 16 13.56%

// 9 128 8192 64 0 11.72%

// 10 144 8192 56 128 11.82%

// 11 160 8192 51 32 9.73%

// 12 176 8192 46 96 9.59%

// 13 192 8192 42 128 9.25%

// 14 208 8192 39 80 8.12%

// 15 224 8192 36 128 8.15%

// 16 240 8192 34 32 6.62%

// 17 256 8192 32 0 5.86%

// 18 288 8192 28 128 12.16%

// 19 320 8192 25 192 11.80%

// 20 352 8192 23 96 9.88%

// 21 384 8192 21 128 9.51%

// 22 416 8192 19 288 10.71%

// 23 448 8192 18 128 8.37%

// 24 480 8192 17 32 6.82%

// 25 512 8192 16 0 6.05%

// 26 576 8192 14 128 12.33%

// 27 640 8192 12 512 15.48%

// 28 704 8192 11 448 13.93%

// 29 768 8192 10 512 13.94%

// 30 896 8192 9 128 15.52%

// 31 1024 8192 8 0 12.40%

// 32 1152 8192 7 128 12.41%

// 33 1280 8192 6 512 15.55%

// 34 1408 16384 11 896 14.00%

// 35 1536 8192 5 512 14.00%

// 36 1792 16384 9 256 15.57%

// 37 2048 8192 4 0 12.45%

// 38 2304 16384 7 256 12.46%

// 39 2688 8192 3 128 15.59%

// 40 3072 24576 8 0 12.47%

// 41 3200 16384 5 384 6.22%

// 42 3456 24576 7 384 8.83%

// 43 4096 8192 2 0 15.60%

// 44 4864 24576 5 256 16.65%

// 45 5376 16384 3 256 10.92%

// 46 6144 24576 4 0 12.48%

// 47 6528 32768 5 128 6.23%

// 48 6784 40960 6 256 4.36%

// 49 6912 49152 7 768 3.37%

// 50 8192 8192 1 0 15.61%

// 51 9472 57344 6 512 14.28%

// 52 9728 49152 5 512 3.64%

// 53 10240 40960 4 0 4.99%

// 54 10880 32768 3 128 6.24%

// 55 12288 24576 2 0 11.45%

// 56 13568 40960 3 256 9.99%

// 57 14336 57344 4 0 5.35%

// 58 16384 16384 1 0 12.49%

// 59 18432 73728 4 0 11.11%

// 60 19072 57344 3 128 3.57%

// 61 20480 40960 2 0 6.87%

// 62 21760 65536 3 256 6.25%

// 63 24576 24576 1 0 11.45%

// 64 27264 81920 3 128 10.00%

// 65 28672 57344 2 0 4.91%

// 66 32768 32768 1 0 12.50%

By type (class) by 1 Of span For example ,

span The size of the elements in is 8 byte, span It's up to you 1 Page means 8K, You can save 1024 Objects .

When assigning objects , Depending on the size of the object, what type of span,

for example 16 byte Objects that use span 2, 17 byte Objects that use span 3, 32 byte Objects that use span 3.

You can also see from this example that , Distribute 17 and 32 byte Objects of span 3, In other words, part of the size of the object in the allocation will waste a certain amount of space .

Some people may notice that , The biggest one up there span The size of the element is 32K, So the distribution exceeds 32K Where are the objects assigned to ?

exceed 32K The object of is called " Big object ", When assigning large objects , Directly from heap Assign a special span,

This special span The type of (class) yes 0, It contains only one big object , span The size of the object is determined by the size of the object .

special span Plus 66 A standard span, It's made up of 67 individual span type .

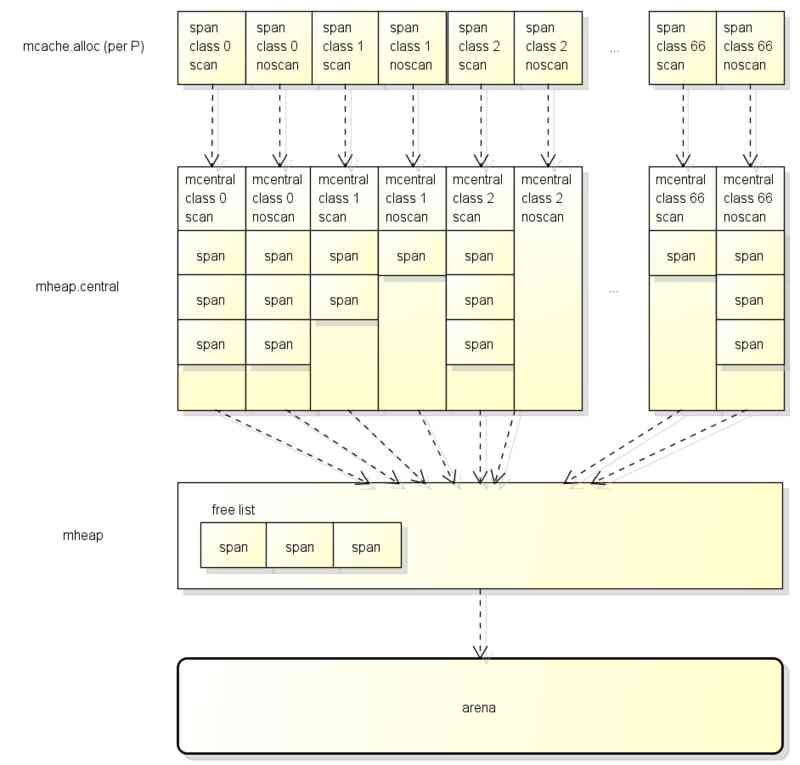

Span The location of

stay The previous I mentioned in P It's a virtual resource , Only one thread can access the same P, therefore P Data in does not need locks .

For better performance when allocating objects , each P There are span The cache of ( Also called mcache), The structure of the cache is as follows :

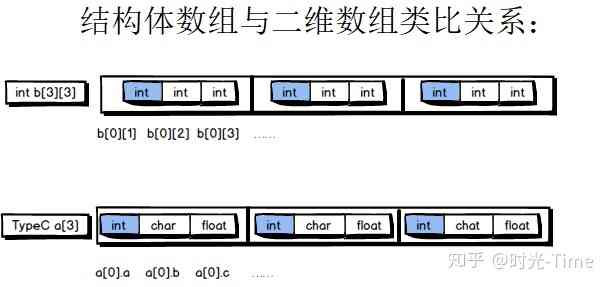

each P Press span Different types , Yes 67*2=134 individual span The cache of ,

among scan and noscan The difference is that ,

If the object contains a pointer , When assigning objects, you use scan Of span,

If the object does not contain a pointer , When assigning objects, you use noscan Of span.

hold span It is divided into scan and noscan The meaning of is ,

GC When you scan the object, you have to noscan Of span You don't have to check bitmap Area to mark sub objects , This can greatly improve the efficiency of tagging .

When assigning objects, the appropriate ones will be obtained from the following locations span Used to allocate :

- First of all, from the P The cache of (mcache) obtain , If there's a cache span And not full, use , There is no need to lock this step

- Then cache from the global (mcentral) obtain , If successful, set to P, This step requires a lock

- Finally from the mheap obtain , Set to global cache after getting , This step requires a lock

stay P Medium cache span It's like CoreCLR Thread cache allocation context in (Allocation Context) It's similar to ,

You can allocate objects without thread locks most of the time , Improve the performance of the distribution .

Processing of allocation objects

The process of assigning objects

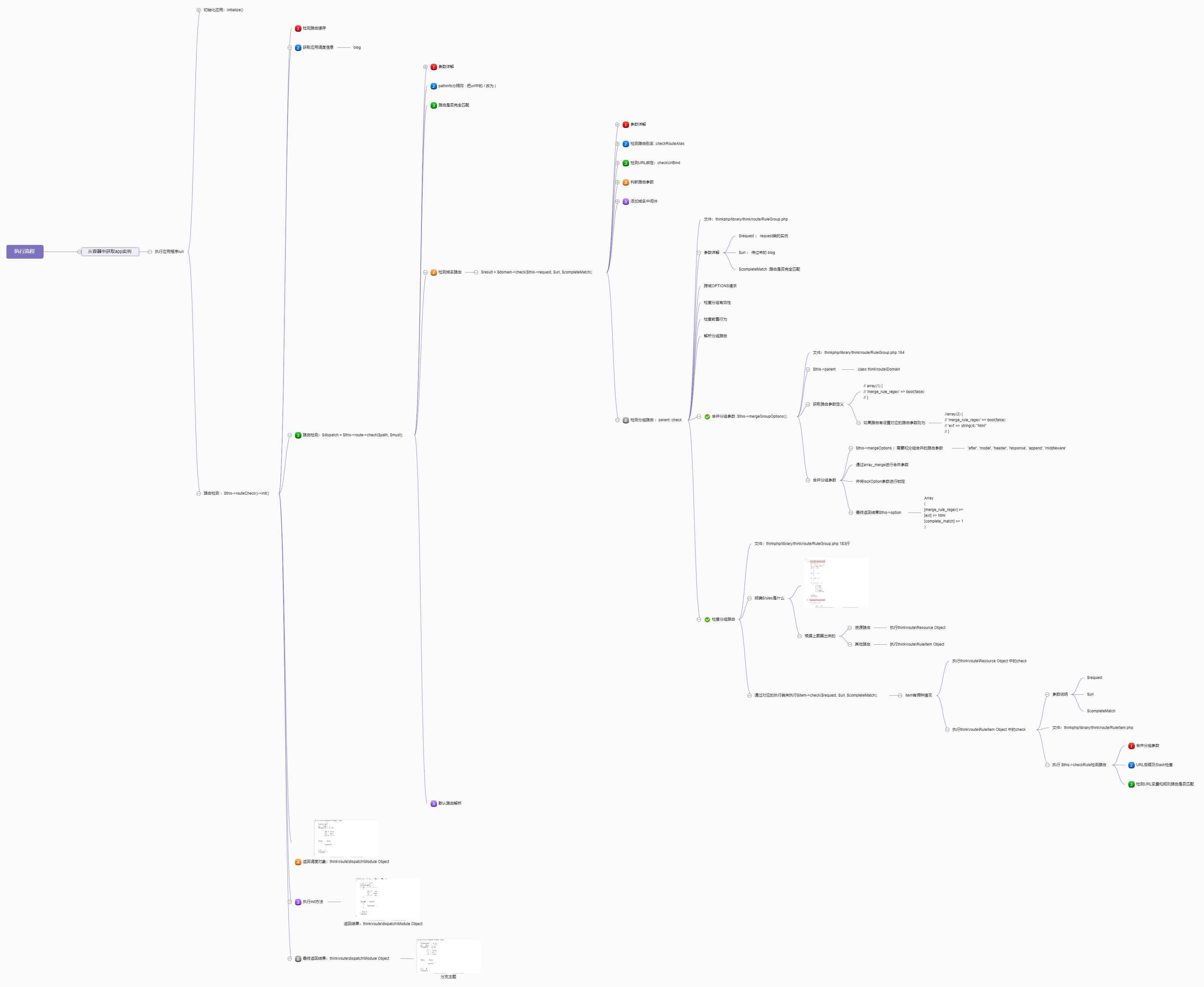

go When an object is allocated from the heap newobject function , The flow of this function is as follows :

First of all, I will check GC Whether at work , If GC At work and currently G If a certain amount of memory is allocated, assistance is needed GC Do a certain job ,

This mechanism is called GC Assist, Used to prevent too fast allocation of memory GC Recycling doesn't keep up .

After that, we will judge whether it is a small object or a large object , If it is a large object, call largeAlloc Distribute from the heap ,

If it's a small object, divide 3 Get the available span, And then from span Objects assigned in :

- First of all, from the P The cache of (mcache) obtain

- Then cache from the global (mcentral) obtain , There are available in the global cache span A list of

- Finally from the mheap obtain , mheap There are also span Free list of , If both of them fail, they will get from arena Regional distribution

The detailed structure of these three stages is shown in the figure below :

Definition of data type

The data types involved in the allocation object include :

p: The previous article mentioned , P Is used to run in the coroutine go Virtual resources of code

m: The previous article mentioned , M Currently represents system threads

g: The previous article mentioned , G Namely goroutine

mspan: Blocks used to allocate objects

mcentral: Overall mspan cache , Altogether 67*2=134 individual

mheap: Used to manage heap The object of , There is only one global

Source code analysis

go When an object is allocated from the heap newobject function , Let's start with this function :

// implementation of new builtin

// compiler (both frontend and SSA backend) knows the signature

// of this function

func newobject(typ *_type) unsafe.Pointer {

return mallocgc(typ.size, typ, true)

}

newobject Called mallocgc function :

// Allocate an object of size bytes.

// Small objects are allocated from the per-P cache's free lists.

// Large objects (> 32 kB) are allocated straight from the heap.

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

if gcphase == _GCmarktermination {

throw("mallocgc called with gcphase == _GCmarktermination")

}

if size == 0 {

return unsafe.Pointer(&zerobase)

}

if debug.sbrk != 0 {

align := uintptr(16)

if typ != nil {

align = uintptr(typ.align)

}

return persistentalloc(size, align, &memstats.other_sys)

}

// Judge whether to assist GC Work

// gcBlackenEnabled stay GC The marking phase of will open

// assistG is the G to charge for this allocation, or nil if

// GC is not currently active.

var assistG *g

if gcBlackenEnabled != 0 {

// Charge the current user G for this allocation.

assistG = getg()

if assistG.m.curg != nil {

assistG = assistG.m.curg

}

// Charge the allocation against the G. We'll account

// for internal fragmentation at the end of mallocgc.

assistG.gcAssistBytes -= int64(size)

// Will judge the need of assistance according to the size of the allocation GC How much work has been done

// The specific algorithm will be explained when the collector is explained below

if assistG.gcAssistBytes < 0 {

// This G is in debt. Assist the GC to correct

// this before allocating. This must happen

// before disabling preemption.

gcAssistAlloc(assistG)

}

}

// Add the current G Corresponding M Of lock Count , To prevent this G Be seized

// Set mp.mallocing to keep from being preempted by GC.

mp := acquirem()

if mp.mallocing != 0 {

throw("malloc deadlock")

}

if mp.gsignal == getg() {

throw("malloc during signal")

}

mp.mallocing = 1

shouldhelpgc := false

dataSize := size

// Get current G Corresponding M Corresponding P The local span cache (mcache)

// because M In a P I'll put P Of mcache Set to M in , What's back here is getg().m.mcache

c := gomcache()

var x unsafe.Pointer

noscan := typ == nil || typ.kind&kindNoPointers != 0

// Judge whether it is a small object , maxSmallSize The current value is 32K

if size <= maxSmallSize {

// If the object does not contain a pointer , And the size of the object is less than 16 bytes, Special treatment can be done

// Here's the optimization for very small objects , because span The smallest element of can only be 8 byte, If the object is smaller, a lot of space is wasted

// Very small objects can be integrated into "class 2 noscan" The elements of ( The size is 16 byte) in

if noscan && size < maxTinySize {

// Tiny allocator.

//

// Tiny allocator combines several tiny allocation requests

// into a single memory block. The resulting memory block

// is freed when all subobjects are unreachable. The subobjects

// must be noscan (don't have pointers), this ensures that

// the amount of potentially wasted memory is bounded.

//

// Size of the memory block used for combining (maxTinySize) is tunable.

// Current setting is 16 bytes, which relates to 2x worst case memory

// wastage (when all but one subobjects are unreachable).

// 8 bytes would result in no wastage at all, but provides less

// opportunities for combining.

// 32 bytes provides more opportunities for combining,

// but can lead to 4x worst case wastage.

// The best case winning is 8x regardless of block size.

//

// Objects obtained from tiny allocator must not be freed explicitly.

// So when an object will be freed explicitly, we ensure that

// its size >= maxTinySize.

//

// SetFinalizer has a special case for objects potentially coming

// from tiny allocator, it such case it allows to set finalizers

// for an inner byte of a memory block.

//

// The main targets of tiny allocator are small strings and

// standalone escaping variables. On a json benchmark

// the allocator reduces number of allocations by ~12% and

// reduces heap size by ~20%.

off := c.tinyoffset

// Align tiny pointer for required (conservative) alignment.

if size&7 == 0 {

off = round(off, 8)

} else if size&3 == 0 {

off = round(off, 4)

} else if size&1 == 0 {

off = round(off, 2)

}

if off+size <= maxTinySize && c.tiny != 0 {

// The object fits into existing tiny block.

x = unsafe.Pointer(c.tiny + off)

c.tinyoffset = off + size

c.local_tinyallocs++

mp.mallocing = 0

releasem(mp)

return x

}

// Allocate a new maxTinySize block.

span := c.alloc[tinySpanClass]

v := nextFreeFast(span)

if v == 0 {

v, _, shouldhelpgc = c.nextFree(tinySpanClass)

}

x = unsafe.Pointer(v)

(*[2]uint64)(x)[0] = 0

(*[2]uint64)(x)[1] = 0

// See if we need to replace the existing tiny block with the new one

// based on amount of remaining free space.

if size < c.tinyoffset || c.tiny == 0 {

c.tiny = uintptr(x)

c.tinyoffset = size

}

size = maxTinySize

} else {

// Otherwise, it is assigned to ordinary small objects

// First get the size of the object, which should be used span type

var sizeclass uint8

if size <= smallSizeMax-8 {

sizeclass = size_to_class8[(size+smallSizeDiv-1)/smallSizeDiv]

} else {

sizeclass = size_to_class128[(size-smallSizeMax+largeSizeDiv-1)/largeSizeDiv]

}

size = uintptr(class_to_size[sizeclass])

// be equal to sizeclass * 2 + (noscan ? 1 : 0)

spc := makeSpanClass(sizeclass, noscan)

span := c.alloc[spc]

// Try to quickly get from this span The distribution of

v := nextFreeFast(span)

if v == 0 {

// Allocation failed , May need to be from mcentral perhaps mheap In order to get

// If from mcentral perhaps mheap Got new span, be shouldhelpgc It will be equal to true

// shouldhelpgc It will be equal to true You will decide whether to trigger it below GC

v, span, shouldhelpgc = c.nextFree(spc)

}

x = unsafe.Pointer(v)

if needzero && span.needzero != 0 {

memclrNoHeapPointers(unsafe.Pointer(v), size)

}

}

} else {

// Directly from large objects mheap Distribute , there s It's a special one span, its class yes 0

var s *mspan

shouldhelpgc = true

systemstack(func() {

s = largeAlloc(size, needzero, noscan)

})

s.freeindex = 1

s.allocCount = 1

x = unsafe.Pointer(s.base())

size = s.elemsize

}

// Set up arena Corresponding bitmap, Record where the pointer is contained , GC Will use bitmap Scan all accessible objects

var scanSize uintptr

if !noscan {

// If allocating a defer+arg block, now that we've picked a malloc size

// large enough to hold everything, cut the "asked for" size down to

// just the defer header, so that the GC bitmap will record the arg block

// as containing nothing at all (as if it were unused space at the end of

// a malloc block caused by size rounding).

// The defer arg areas are scanned as part of scanstack.

if typ == deferType {

dataSize = unsafe.Sizeof(_defer{})

}

// This function is very long , You can see if you are interested

// https://github.com/golang/go/blob/go1.9.2/src/runtime/mbitmap.go#L855

// Although the code is very long, the content of the settings is the same as that mentioned above bitmap The structure of the region is the same

// Set according to type information scan bit Follow pointer bit, scan bit Yes, it means scanning should continue , pointer bit Yes means that the position is a pointer

// What needs to be noticed is

// - If a type only contains a pointer at the beginning , for example [ptr, ptr, large non-pointer data]

// So the back part scan bit Will be 0, This can greatly improve the efficiency of tagging

// - the second slot Of scan bit It's a special use , It's not used to mark whether to continue scan, It's a sign checkmark

// What is? checkmark

// - because go Parallel of GC More complicated , To check that the implementation is correct , go There needs to be a mechanism to check whether all objects that should be marked are marked

// The mechanism is checkmark, In the open checkmark when go It stops the world at the end of the tagging phase, and then executes the tag again

// The second one above slot Of scan bit It is used to mark objects in checkmark Whether marked or not

// - Some people may find a second slot Requires the object to have at least two pointer sizes , What about an object the size of a pointer ?

// Objects with only one pointer size can be divided into two situations

// An object is a pointer , Because the size is just 1 A pointer, so you don't need to look at bitmap Area , This is the first one slot Namely checkmark

// Object is not a pointer , Because there is tiny alloc The mechanism of , Objects that are not pointers and have only one pointer size are assigned to two pointers span in

// You don't need to look at bitmap Area , So the same as above, the first one slot Namely checkmark

heapBitsSetType(uintptr(x), size, dataSize, typ)

if dataSize > typ.size {

// Array allocation. If there are any

// pointers, GC has to scan to the last

// element.

if typ.ptrdata != 0 {

scanSize = dataSize - typ.size + typ.ptrdata

}

} else {

scanSize = typ.ptrdata

}

c.local_scan += scanSize

}

// Memory barrier , because x86 and x64 Of store It's not out of order, so it's just a barrier against compilers , In the compilation it is ret

// Ensure that the stores above that initialize x to

// type-safe memory and set the heap bits occur before

// the caller can make x observable to the garbage

// collector. Otherwise, on weakly ordered machines,

// the garbage collector could follow a pointer to x,

// but see uninitialized memory or stale heap bits.

publicationBarrier()

// If it's in GC in , You need to immediately mark the assigned object as " black ", To prevent it from being recycled

// Allocate black during GC.

// All slots hold nil so no scanning is needed.

// This may be racing with GC so do it atomically if there can be

// a race marking the bit.

if gcphase != _GCoff {

gcmarknewobject(uintptr(x), size, scanSize)

}

// Race Detector To deal with ( Used to detect thread conflicts )

if raceenabled {

racemalloc(x, size)

}

// Memory Sanitizer To deal with ( Used to detect memory problems such as dangerous pointers )

if msanenabled {

msanmalloc(x, size)

}

// Re allow the current G Be seized

mp.mallocing = 0

releasem(mp)

// Debug records

if debug.allocfreetrace != 0 {

tracealloc(x, size, typ)

}

// Profiler Record

if rate := MemProfileRate; rate > 0 {

if size < uintptr(rate) && int32(size) < c.next_sample {

c.next_sample -= int32(size)

} else {

mp := acquirem()

profilealloc(mp, x, size)

releasem(mp)

}

}

// gcAssistBytes subtract " Actual allocation size - Request allocation size ", Adjust to the exact value

if assistG != nil {

// Account for internal fragmentation in the assist

// debt now that we know it.

assistG.gcAssistBytes -= int64(size - dataSize)

}

// If you've got a new one before span, Then judge whether it is necessary to start in the background GC

// The logic of judgment here (gcTrigger) It will be explained in detail below

if shouldhelpgc {

if t := (gcTrigger{kind: gcTriggerHeap}); t.test() {

gcStart(gcBackgroundMode, t)

}

}

return x

}

Next, let's see how to get from span It's assigned to people , The first call nextFreeFast Try to allocate quickly :

// nextFreeFast returns the next free object if one is quickly available.

// Otherwise it returns 0.

func nextFreeFast(s *mspan) gclinkptr {

// Get the first non 0 Of bit It's the number one bit, Which element is unallocated

theBit := sys.Ctz64(s.allocCache) // Is there a free object in the allocCache?

// Find unallocated elements

if theBit < 64 {

result := s.freeindex + uintptr(theBit)

// The index value should be less than the number of elements

if result < s.nelems {

// next freeindex

freeidx := result + 1

// Can be 64 Division requires special treatment ( Reference resources nextFree)

if freeidx%64 == 0 && freeidx != s.nelems {

return 0

}

// to update freeindex and allocCache( High places are 0, It will be updated after exhaustion )

s.allocCache >>= uint(theBit + 1)

s.freeindex = freeidx

// Returns the address of the element

v := gclinkptr(result*s.elemsize + s.base())

// Add assigned element count

s.allocCount++

return v

}

}

return 0

}

If in freeindex Can't quickly find unallocated elements , You need to call nextFree Make more complicated processing :

// nextFree returns the next free object from the cached span if one is available.

// Otherwise it refills the cache with a span with an available object and

// returns that object along with a flag indicating that this was a heavy

// weight allocation. If it is a heavy weight allocation the caller must

// determine whether a new GC cycle needs to be started or if the GC is active

// whether this goroutine needs to assist the GC.

func (c *mcache) nextFree(spc spanClass) (v gclinkptr, s *mspan, shouldhelpgc bool) {

// Find the next one freeindex And update allocCache

s = c.alloc[spc]

shouldhelpgc = false

freeIndex := s.nextFreeIndex()

// If span All the elements in it have been assigned , You need to get new span

if freeIndex == s.nelems {

// The span is full.

if uintptr(s.allocCount) != s.nelems {

println("runtime: s.allocCount=", s.allocCount, "s.nelems=", s.nelems)

throw("s.allocCount != s.nelems && freeIndex == s.nelems")

}

// Apply for a new span

systemstack(func() {

c.refill(spc)

})

// After obtaining a new application span, And set the need to check whether it is executed GC

shouldhelpgc = true

s = c.alloc[spc]

freeIndex = s.nextFreeIndex()

}

if freeIndex >= s.nelems {

throw("freeIndex is not valid")

}

// Returns the address of the element

v = gclinkptr(freeIndex*s.elemsize + s.base())

// Add assigned element count

s.allocCount++

if uintptr(s.allocCount) > s.nelems {

println("s.allocCount=", s.allocCount, "s.nelems=", s.nelems)

throw("s.allocCount > s.nelems")

}

return

}

If mcache Of the type specified in span Is full , You need to call refill Function application new span:

// Gets a span that has a free object in it and assigns it

// to be the cached span for the given sizeclass. Returns this span.

func (c *mcache) refill(spc spanClass) *mspan {

_g_ := getg()

// prevent G Be seized

_g_.m.locks++

// Return the current cached span to the central lists.

s := c.alloc[spc]

// Make sure that the current span All elements have been assigned

if uintptr(s.allocCount) != s.nelems {

throw("refill of span with free space remaining")

}

// Set up span Of incache attribute , Unless it's empty for global use span( That is to say mcache Inside span The default value of the pointer )

if s != &emptymspan {

s.incache = false

}

// towards mcentral Apply for a new span

// Get a new cached span from the central lists.

s = mheap_.central[spc].mcentral.cacheSpan()

if s == nil {

throw("out of memory")

}

if uintptr(s.allocCount) == s.nelems {

throw("span has no free space")

}

// Set up the new span To mcache in

c.alloc[spc] = s

// allow G Be seized

_g_.m.locks--

return s

}

towards mcentral Apply for a new span Will pass cacheSpan function :

mcentral First try to reuse the original from the internal linked list span, If reuse fails, the mheap apply .

// Allocate a span to use in an MCache.

func (c *mcentral) cacheSpan() *mspan {

// Let the current G Assist a part of sweep Work

// Deduct credit for this span allocation and sweep if necessary.

spanBytes := uintptr(class_to_allocnpages[c.spanclass.sizeclass()]) * _PageSize

deductSweepCredit(spanBytes, 0)

// Yes mcentral locked , Because there may be more than one M(P) Simultaneous access

lock(&c.lock)

traceDone := false

if trace.enabled {

traceGCSweepStart()

}

sg := mheap_.sweepgen

retry:

// mcentral There are two in it span The linked list of

// - nonempty Indicates that the span There is at least one unallocated element

// - empty It means you are not sure span There is at least one unallocated element

// This is the first place to look for nonempty The linked list of

// sweepgen Every time GC Will increase 2

// - sweepgen == overall situation sweepgen, Express span already sweep too

// - sweepgen == overall situation sweepgen-1, Express span is sweep

// - sweepgen == overall situation sweepgen-2, Express span wait for sweep

var s *mspan

for s = c.nonempty.first; s != nil; s = s.next {

// If span wait for sweep, Try atomic modification sweepgen For the whole sweepgen-1

if s.sweepgen == sg-2 && atomic.Cas(&s.sweepgen, sg-2, sg-1) {

// If the modification is successful span Move to empty Linked list , sweep It then jumps to havespan

c.nonempty.remove(s)

c.empty.insertBack(s)

unlock(&c.lock)

s.sweep(true)

goto havespan

}

// If this span Being made by other threads sweep, Just skip.

if s.sweepgen == sg-1 {

// the span is being swept by background sweeper, skip

continue

}

// span already sweep too

// because nonempty In the list span Make sure there is at least one unallocated element , You can use it directly here

// we have a nonempty span that does not require sweeping, allocate from it

c.nonempty.remove(s)

c.empty.insertBack(s)

unlock(&c.lock)

goto havespan

}

// lookup empty The linked list of

for s = c.empty.first; s != nil; s = s.next {

// If span wait for sweep, Try atomic modification sweepgen For the whole sweepgen-1

if s.sweepgen == sg-2 && atomic.Cas(&s.sweepgen, sg-2, sg-1) {

// hold span Put it in empty At the end of the list

// we have an empty span that requires sweeping,

// sweep it and see if we can free some space in it

c.empty.remove(s)

// swept spans are at the end of the list

c.empty.insertBack(s)

unlock(&c.lock)

// Try sweep

s.sweep(true)

// sweep In the future, we need to detect whether there are unallocated objects , If you have it, you can use it

freeIndex := s.nextFreeIndex()

if freeIndex != s.nelems {

s.freeindex = freeIndex

goto havespan

}

lock(&c.lock)

// the span is still empty after sweep

// it is already in the empty list, so just retry

goto retry

}

// If this span Being made by other threads sweep, Just skip.

if s.sweepgen == sg-1 {

// the span is being swept by background sweeper, skip

continue

}

// Can't find any with unassigned objects span

// already swept empty span,

// all subsequent ones must also be either swept or in process of sweeping

break

}

if trace.enabled {

traceGCSweepDone()

traceDone = true

}

unlock(&c.lock)

// Can't find any with unassigned objects span, Need from mheap Distribute

// Add to after distribution empty In the list

// Replenish central list if empty.

s = c.grow()

if s == nil {

return nil

}

lock(&c.lock)

c.empty.insertBack(s)

unlock(&c.lock)

// At this point s is a non-empty span, queued at the end of the empty list,

// c is unlocked.

havespan:

if trace.enabled && !traceDone {

traceGCSweepDone()

}

// Statistics span The number of unallocated elements in , Add to mcentral.nmalloc in

// Statistics span Total size of unallocated elements in , Add to memstats.heap_live in

cap := int32((s.npages << _PageShift) / s.elemsize)

n := cap - int32(s.allocCount)

if n == 0 || s.freeindex == s.nelems || uintptr(s.allocCount) == s.nelems {

throw("span has no free objects")

}

// Assume all objects from this span will be allocated in the

// mcache. If it gets uncached, we'll adjust this.

atomic.Xadd64(&c.nmalloc, int64(n))

usedBytes := uintptr(s.allocCount) * s.elemsize

atomic.Xadd64(&memstats.heap_live, int64(spanBytes)-int64(usedBytes))

// Tracking processing

if trace.enabled {

// heap_live changed.

traceHeapAlloc()

}

// If it's in GC in , because heap_live Changed the , To readjust G The value of the auxiliary tag work

// Please refer to the following for details revise Analysis of function

if gcBlackenEnabled != 0 {

// heap_live changed.

gcController.revise()

}

// Set up span Of incache attribute , Express span is mcache in

s.incache = true

// according to freeindex to update allocCache

freeByteBase := s.freeindex &^ (64 - 1)

whichByte := freeByteBase / 8

// Init alloc bits cache.

s.refillAllocCache(whichByte)

// Adjust the allocCache so that s.freeindex corresponds to the low bit in

// s.allocCache.

s.allocCache >>= s.freeindex % 64

return s

}

mcentral towards mheap Apply for a new span Will use grow function :

// grow allocates a new empty span from the heap and initializes it for c's size class.

func (c *mcentral) grow() *mspan {

// according to mcentral Type calculation needs to apply for span Size ( Divide 8K = How many pages are there ) And how many elements can be saved

npages := uintptr(class_to_allocnpages[c.spanclass.sizeclass()])

size := uintptr(class_to_size[c.spanclass.sizeclass()])

n := (npages << _PageShift) / size

// towards mheap Apply for a new span, Page (8K) In units of

s := mheap_.alloc(npages, c.spanclass, false, true)

if s == nil {

return nil

}

p := s.base()

s.limit = p + size*n

// Assign and initialize span Of allocBits and gcmarkBits

heapBitsForSpan(s.base()).initSpan(s)

return s

}

mheap Distribute span The function of is alloc:

func (h *mheap) alloc(npage uintptr, spanclass spanClass, large bool, needzero bool) *mspan {

// stay g0 In the stack space alloc_m function

// About systemstack Please refer to the previous article

// Don't do any operations that lock the heap on the G stack.

// It might trigger stack growth, and the stack growth code needs

// to be able to allocate heap.

var s *mspan

systemstack(func() {

s = h.alloc_m(npage, spanclass, large)

})

if s != nil {

if needzero && s.needzero != 0 {

memclrNoHeapPointers(unsafe.Pointer(s.base()), s.npages<<_PageShift)

}

s.needzero = 0

}

return s

}

alloc The function will be in the g0 In the stack space alloc_m function :

// Allocate a new span of npage pages from the heap for GC'd memory

// and record its size class in the HeapMap and HeapMapCache.

func (h *mheap) alloc_m(npage uintptr, spanclass spanClass, large bool) *mspan {

_g_ := getg()

if _g_ != _g_.m.g0 {

throw("_mheap_alloc not on g0 stack")

}

// Yes mheap locked , The lock here is a global lock

lock(&h.lock)

// In order to prevent heap It's growing too fast , In distribution n Before the page sweep And recycling n page

// Will enumerate first busy List and then enumerate busyLarge The list goes on sweep, Specific reference reclaim and reclaimList function

// To prevent excessive heap growth, before allocating n pages

// we need to sweep and reclaim at least n pages.

if h.sweepdone == 0 {

// TODO(austin): This tends to sweep a large number of

// spans in order to find a few completely free spans

// (for example, in the garbage benchmark, this sweeps

// ~30x the number of pages its trying to allocate).

// If GC kept a bit for whether there were any marks

// in a span, we could release these free spans

// at the end of GC and eliminate this entirely.

if trace.enabled {

traceGCSweepStart()

}

h.reclaim(npage)

if trace.enabled {

traceGCSweepDone()

}

}

// hold mcache Add local statistics in to global

// transfer stats from cache to global

memstats.heap_scan += uint64(_g_.m.mcache.local_scan)

_g_.m.mcache.local_scan = 0

memstats.tinyallocs += uint64(_g_.m.mcache.local_tinyallocs)

_g_.m.mcache.local_tinyallocs = 0

// call allocSpanLocked Distribute span, allocSpanLocked The function request is currently on mheap locked

s := h.allocSpanLocked(npage, &memstats.heap_inuse)

if s != nil {

// Record span info, because gc needs to be

// able to map interior pointer to containing span.

// Set up span Of sweepgen = overall situation sweepgen

atomic.Store(&s.sweepgen, h.sweepgen)

// Put it all together span In the list , there sweepSpans Is the length of the 2

// sweepSpans[h.sweepgen/2%2] Save what is currently in use span list

// sweepSpans[1-h.sweepgen/2%2] Save and wait sweep Of span list

// Because every time gcsweepgen Metropolitan plus 2, Every time gc Both lists are swapped

h.sweepSpans[h.sweepgen/2%2].push(s) // Add to swept in-use list.

// initialization span member

s.state = _MSpanInUse

s.allocCount = 0

s.spanclass = spanclass

if sizeclass := spanclass.sizeclass(); sizeclass == 0 {

s.elemsize = s.npages << _PageShift

s.divShift = 0

s.divMul = 0

s.divShift2 = 0

s.baseMask = 0

} else {

s.elemsize = uintptr(class_to_size[sizeclass])

m := &class_to_divmagic[sizeclass]

s.divShift = m.shift

s.divMul = m.mul

s.divShift2 = m.shift2

s.baseMask = m.baseMask

}

// update stats, sweep lists

h.pagesInUse += uint64(npage)

// above grow The function will pass in true, That is, through grow Call here large It will be equal to true

// Add assigned span To busy list , If the number of pages exceeds _MaxMHeapList(128 page =8K*128=1M) Is on the busylarge list

if large {

memstats.heap_objects++

mheap_.largealloc += uint64(s.elemsize)

mheap_.nlargealloc++

atomic.Xadd64(&memstats.heap_live, int64(npage<<_PageShift))

// Swept spans are at the end of lists.

if s.npages < uintptr(len(h.busy)) {

h.busy[s.npages].insertBack(s)

} else {

h.busylarge.insertBack(s)

}

}

}

// If it's in GC in , because heap_live Changed the , To readjust G The value of the auxiliary tag work

// Please refer to the following for details revise Analysis of function

// heap_scan and heap_live were updated.

if gcBlackenEnabled != 0 {

gcController.revise()

}

// Tracking processing

if trace.enabled {

traceHeapAlloc()

}

// h.spans is accessed concurrently without synchronization

// from other threads. Hence, there must be a store/store

// barrier here to ensure the writes to h.spans above happen

// before the caller can publish a pointer p to an object

// allocated from s. As soon as this happens, the garbage

// collector running on another processor could read p and

// look up s in h.spans. The unlock acts as the barrier to

// order these writes. On the read side, the data dependency

// between p and the index in h.spans orders the reads.

unlock(&h.lock)

return s

}

Keep looking at allocSpanLocked function :

// Allocates a span of the given size. h must be locked.

// The returned span has been removed from the

// free list, but its state is still MSpanFree.

func (h *mheap) allocSpanLocked(npage uintptr, stat *uint64) *mspan {

var list *mSpanList

var s *mspan

// Try to mheap Free list assignment in

// The number of pages is less than _MaxMHeapList(128 page =1M) Freedom span Will be in free In the list

// The number of pages is greater than _MaxMHeapList Freedom span Will be in freelarge In the list

// Try in fixed-size lists up to max.

for i := int(npage); i < len(h.free); i++ {

list = &h.free[i]

if !list.isEmpty() {

s = list.first

list.remove(s)

goto HaveSpan

}

}

// free If you can't find the list, look for freelarge list

// If you can't find it, go to arena Area application for a new span Add to freelarge in , Then look for freelarge list

// Best fit in list of large spans.

s = h.allocLarge(npage) // allocLarge removed s from h.freelarge for us

if s == nil {

if !h.grow(npage) {

return nil

}

s = h.allocLarge(npage)

if s == nil {

return nil

}

}

HaveSpan:

// Mark span in use.

if s.state != _MSpanFree {

throw("MHeap_AllocLocked - MSpan not free")

}

if s.npages < npage {

throw("MHeap_AllocLocked - bad npages")

}

// If span Some have been released ( Break the relationship between virtual memory and physical memory ) Page of , Remind them that these pages will be used and update the statistics

if s.npreleased > 0 {

sysUsed(unsafe.Pointer(s.base()), s.npages<<_PageShift)

memstats.heap_released -= uint64(s.npreleased << _PageShift)

s.npreleased = 0

}

// If I get it span More pages than required

// Split the remaining pages to another span And put it on the free list

if s.npages > npage {

// Trim extra and put it back in the heap.

t := (*mspan)(h.spanalloc.alloc())

t.init(s.base()+npage<<_PageShift, s.npages-npage)

s.npages = npage

p := (t.base() - h.arena_start) >> _PageShift

if p > 0 {

h.spans[p-1] = s

}

h.spans[p] = t

h.spans[p+t.npages-1] = t

t.needzero = s.needzero

s.state = _MSpanManual // prevent coalescing with s

t.state = _MSpanManual

h.freeSpanLocked(t, false, false, s.unusedsince)

s.state = _MSpanFree

}

s.unusedsince = 0

// Set up spans Area , Which address corresponds to which mspan object

p := (s.base() - h.arena_start) >> _PageShift

for n := uintptr(0); n < npage; n++ {

h.spans[p+n] = s

}

// Update Statistics

*stat += uint64(npage << _PageShift)

memstats.heap_idle -= uint64(npage << _PageShift)

//println("spanalloc", hex(s.start<<_PageShift))

if s.inList() {

throw("still in list")

}

return s

}

Keep looking at allocLarge function :

// allocLarge allocates a span of at least npage pages from the treap of large spans.

// Returns nil if no such span currently exists.

func (h *mheap) allocLarge(npage uintptr) *mspan {

// Search treap for smallest span with >= npage pages.

return h.freelarge.remove(npage)

}

freelarge The type is mTreap, call remove The function searches the tree for at least one npage And the smallest in the tree span return :

// remove searches for, finds, removes from the treap, and returns the smallest

// span that can hold npages. If no span has at least npages return nil.

// This is slightly more complicated than a simple binary tree search

// since if an exact match is not found the next larger node is

// returned.

// If the last node inspected > npagesKey not holding

// a left node (a smaller npages) is the "best fit" node.

func (root *mTreap) remove(npages uintptr) *mspan {

t := root.treap

for t != nil {

if t.spanKey == nil {

throw("treap node with nil spanKey found")

}

if t.npagesKey < npages {

t = t.right

} else if t.left != nil && t.left.npagesKey >= npages {

t = t.left

} else {

result := t.spanKey

root.removeNode(t)

return result

}

}

return nil

}

towards arena Regional application new span The function of is mheap Class grow function :

// Try to add at least npage pages of memory to the heap,

// returning whether it worked.

//

// h must be locked.

func (h *mheap) grow(npage uintptr) bool {

// Ask for a big chunk, to reduce the number of mappings

// the operating system needs to track; also amortizes

// the overhead of an operating system mapping.

// Allocate a multiple of 64kB.

npage = round(npage, (64<<10)/_PageSize)

ask := npage << _PageShift

if ask < _HeapAllocChunk {

ask = _HeapAllocChunk

}

// call mheap.sysAlloc Function request

v := h.sysAlloc(ask)

if v == nil {

if ask > npage<<_PageShift {

ask = npage << _PageShift

v = h.sysAlloc(ask)

}

if v == nil {

print("runtime: out of memory: cannot allocate ", ask, "-byte block (", memstats.heap_sys, " in use)n")

return false

}

}

// Create a new span And add it to the free list

// Create a fake "in use" span and free it, so that the

// right coalescing happens.

s := (*mspan)(h.spanalloc.alloc())

s.init(uintptr(v), ask>>_PageShift)

p := (s.base() - h.arena_start) >> _PageShift

for i := p; i < p+s.npages; i++ {

h.spans[i] = s

}

atomic.Store(&s.sweepgen, h.sweepgen)

s.state = _MSpanInUse

h.pagesInUse += uint64(s.npages)

h.freeSpanLocked(s, false, true, 0)

return true

}

Keep looking at mheap Of sysAlloc function :

// sysAlloc allocates the next n bytes from the heap arena. The

// returned pointer is always _PageSize aligned and between

// h.arena_start and h.arena_end. sysAlloc returns nil on failure.

// There is no corresponding free function.

func (h *mheap) sysAlloc(n uintptr) unsafe.Pointer {

// strandLimit is the maximum number of bytes to strand from

// the current arena block. If we would need to strand more

// than this, we fall back to sysAlloc'ing just enough for

// this allocation.

const strandLimit = 16 << 20

// If arena Region currently not enough submitted areas , Call sysReserve Reserve more space , And then update arena_end

// sysReserve stay linux It's called mmap function

// mmap(v, n, _PROT_NONE, _MAP_ANON|_MAP_PRIVATE, -1, 0)

if n > h.arena_end-h.arena_alloc {

// If we haven't grown the arena to _MaxMem yet, try

// to reserve some more address space.

p_size := round(n+_PageSize, 256<<20)

new_end := h.arena_end + p_size // Careful: can overflow

if h.arena_end <= new_end && new_end-h.arena_start-1 <= _MaxMem {

// TODO: It would be bad if part of the arena

// is reserved and part is not.

var reserved bool

p := uintptr(sysReserve(unsafe.Pointer(h.arena_end), p_size, &reserved))

if p == 0 {

// TODO: Try smaller reservation

// growths in case we're in a crowded

// 32-bit address space.

goto reservationFailed

}

// p can be just about anywhere in the address

// space, including before arena_end.

if p == h.arena_end {

// The new block is contiguous with

// the current block. Extend the

// current arena block.

h.arena_end = new_end

h.arena_reserved = reserved

} else if h.arena_start <= p && p+p_size-h.arena_start-1 <= _MaxMem && h.arena_end-h.arena_alloc < strandLimit {

// We were able to reserve more memory

// within the arena space, but it's

// not contiguous with our previous

// reservation. It could be before or

// after our current arena_used.

//

// Keep everything page-aligned.

// Our pages are bigger than hardware pages.

h.arena_end = p + p_size

p = round(p, _PageSize)

h.arena_alloc = p

h.arena_reserved = reserved

} else {

// We got a mapping, but either

//

// 1) It's not in the arena, so we

// can't use it. (This should never

// happen on 32-bit.)

//

// 2) We would need to discard too

// much of our current arena block to

// use it.

//

// We haven't added this allocation to

// the stats, so subtract it from a

// fake stat (but avoid underflow).

//

// We'll fall back to a small sysAlloc.

stat := uint64(p_size)

sysFree(unsafe.Pointer(p), p_size, &stat)

}

}

}

// When the space reserved is enough, just add arena_alloc

if n <= h.arena_end-h.arena_alloc {

// Keep taking from our reservation.

p := h.arena_alloc

sysMap(unsafe.Pointer(p), n, h.arena_reserved, &memstats.heap_sys)

h.arena_alloc += n

if h.arena_alloc > h.arena_used {

h.setArenaUsed(h.arena_alloc, true)

}

if p&(_PageSize-1) != 0 {

throw("misrounded allocation in MHeap_SysAlloc")

}

return unsafe.Pointer(p)

}

// Processing after failed reservation space

reservationFailed:

// If using 64-bit, our reservation is all we have.

if sys.PtrSize != 4 {

return nil

}

// On 32-bit, once the reservation is gone we can

// try to get memory at a location chosen by the OS.

p_size := round(n, _PageSize) + _PageSize

p := uintptr(sysAlloc(p_size, &memstats.heap_sys))

if p == 0 {

return nil

}

if p < h.arena_start || p+p_size-h.arena_start > _MaxMem {

// This shouldn't be possible because _MaxMem is the

// whole address space on 32-bit.

top := uint64(h.arena_start) + _MaxMem

print("runtime: memory allocated by OS (", hex(p), ") not in usable range [", hex(h.arena_start), ",", hex(top), ")n")

sysFree(unsafe.Pointer(p), p_size, &memstats.heap_sys)

return nil

}

p += -p & (_PageSize - 1)

if p+n > h.arena_used {

h.setArenaUsed(p+n, true)

}

if p&(_PageSize-1) != 0 {

throw("misrounded allocation in MHeap_SysAlloc")

}

return unsafe.Pointer(p)

}

That's the whole process of assigning objects , The next analysis GC Handling of marked and recycled objects .

Recycle object processing

The process of reclaiming objects

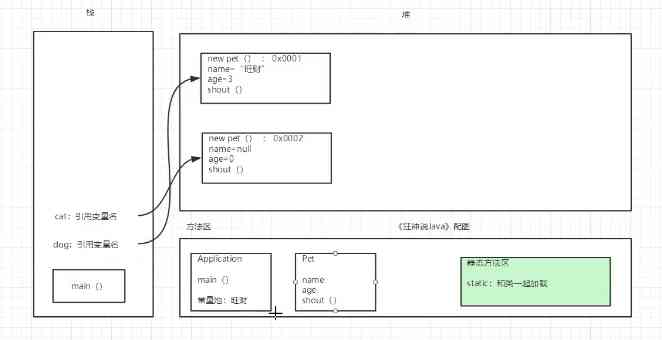

GO Of GC It's parallel GC, That is to say GC Most of the processing and ordinary go The code is running at the same time , This makes GO Of GC The process is complicated .

First GC There are four stages , They are :

- Sweep Termination: For the unclean span Carry out the cleaning , Only the last round GC We can start a new round only after the cleaning work of GC

- Mark: Scan all root objects , And all the objects that the root object can reach , Mark them not to be recycled

- Mark Termination: Complete the marking work , Rescan some root objects ( requirement STW)

- Sweep: Clean according to the marked results span

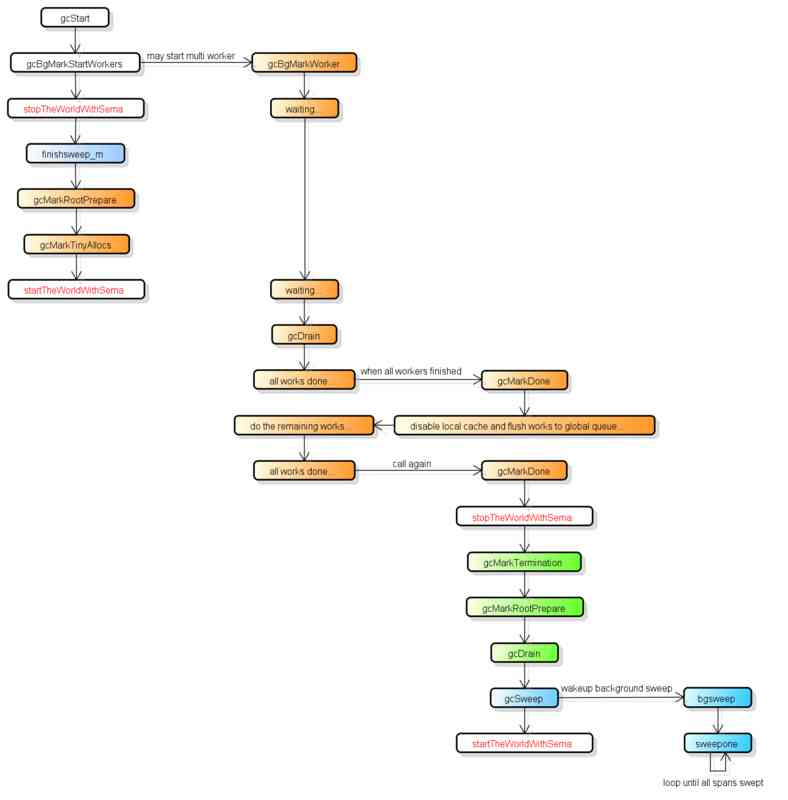

The picture below is more complete GC technological process , The four stages are classified by color :

stay GC There are two kinds of background tasks in the process (G), One is the background task for marking , One is the background task for cleaning .

Background tasks for tagging will start when needed , The number of background tasks that can work at the same time is about P The quantity of 25%, That is to say go Let's talk about 25% Of cpu Use in GC On the basis of .

The background task for cleaning will start a , Wake up when entering the cleaning phase .

At present, the whole GC The process will take place twice STW(Stop The World), The first is Mark The beginning of the stage , The second, Mark Termination Stage .

for the first time STW Will be ready to scan the root object , Start write barrier (Write Barrier) And the auxiliary GC(mutator assist).

The second time STW Some root objects will be rescanned , Disable write barrier (Write Barrier) And the auxiliary GC(mutator assist).

It should be noted that , Not all root object scans require STW, For example, scanning an object on a stack only needs to stop owning the stack G.

from go 1.9 Start , The implementation of the write barrier uses Hybrid Write Barrier, A significant reduction in the second time STW Time for .

GC The trigger condition of

GC It will be triggered when certain conditions are met , The trigger conditions are as follows :

- gcTriggerAlways: Force trigger GC

- gcTriggerHeap: When the current allocated memory reaches a certain value GC

- gcTriggerTime: When a certain period of time has not been implemented GC It triggers GC

- gcTriggerCycle: Ask for a new round of GC, If started, skip , Manual trigger GC Of

runtime.GC()Will use this condition

The judgment of trigger condition is in gctrigger Of test function .

among gcTriggerHeap and gcTriggerTime These two conditions are naturally triggered , gcTriggerHeap The judgment code for is as follows :

return memstats.heap_live >= memstats.gc_trigger

heap_live As can be seen from the code analysis of the allocator above , When the duty reaches gc_trigger It will trigger GC, that gc_trigger How it was decided ?

gc_trigger Is calculated in gcSetTriggerRatio Function , Formula is :

trigger = uint64(float64(memstats.heap_marked) * (1 + triggerRatio))

Multiply the size of the current tag to survive 1+ coefficient triggerRatio, It's about starting next time GC The amount of allocation needed .

triggerRatio In every time GC It will be adjusted after , Calculation triggerRatio The function of is encCycle, Formula is :

const triggerGain = 0.5

// The goal is Heap growth rate , The default is 1.0

goalGrowthRatio := float64(gcpercent) / 100

// actual Heap growth rate , Equal to the total size of / Survival size -1

actualGrowthRatio := float64(memstats.heap_live)/float64(memstats.heap_marked) - 1

// GC The usage time of the marking phase ( because endCycle Is in Mark Termination Phase called )

assistDuration := nanotime() - c.markStartTime

// GC Marking stage of CPU Occupancy rate , The target value is 0.25

utilization := gcGoalUtilization

if assistDuration > 0 {

// assistTime yes G auxiliary GC Total time spent marking objects

// (nanosecnds spent in mutator assists during this cycle)

// additional CPU Occupancy rate = auxiliary GC The total time the object was marked / (GC Mark usage time * P The number of )

utilization += float64(c.assistTime) / float64(assistDuration*int64(gomaxprocs))

}

// Trigger factor offset value = Target growth rate - The original trigger factor - CPU Occupancy rate / The goal is CPU Occupancy rate * ( Real growth rate - The original trigger factor )

// Parameter analysis :

// The greater the real growth rate , The smaller the trigger factor offset is , Less than 0 Trigger next time GC It's going to be early

// CPU The more occupancy , The smaller the trigger factor offset is , Less than 0 Trigger next time GC It's going to be early

// The bigger the original trigger coefficient , The smaller the trigger factor offset is , Less than 0 Trigger next time GC It's going to be early

triggerError := goalGrowthRatio - memstats.triggerRatio - utilization/gcGoalUtilization*(actualGrowthRatio-memstats.triggerRatio)

// Adjust the trigger factor according to the offset value , Adjust only half of the offset at a time ( Gradual adjustment )

triggerRatio := memstats.triggerRatio + triggerGain*triggerError

Formula " The goal is Heap growth rate " You can set environment variables by "GOGC" adjustment , The default value is 100, Increasing its value can reduce GC Trigger .

Set up "GOGC=off" You can turn it off completely GC.

gcTriggerTime The judgment code for is as follows :

lastgc := int64(atomic.Load64(&memstats.last_gc_nanotime))

return lastgc != 0 && t.now-lastgc > forcegcperiod

forcegcperiod Is defined as 2 minute , That is to say 2 No execution in minutes GC It will force trigger .

The definition of tricolor ( black , ash , white )

I saw the three colors GC Of " Tricolor " The best article to explain this concept is This article 了 , It is highly recommended to read the explanation in this article first .

" Tricolor " The concept of "can be simply understood as :

- black : The object is this time GC Has been marked in , And the child objects contained in this object are also marked

- gray : The object is this time GC Has been marked in , But this object contains child objects that are not marked

- white : The object is this time GC Not marked in

stay go Internal objects do not have properties that hold colors , Tricolor is just a description of their state ,

The white object is where it is span Of gcmarkBits Corresponding bit by 0,

The gray object is where it is span Of gcmarkBits Corresponding bit by 1, And the object is in the tag queue ,

The black object is where it is span Of gcmarkBits Corresponding bit by 1, And the object has been removed from the tag queue and processed .

gc After completion , gcmarkBits Will move to allocBits And then reassign one that's all for 0 Of bitmap, So the black object turns white .

Write barriers (Write Barrier)

because go Support parallel GC, GC Scanning and go Code can run at the same time , The problem with this is GC In the process of scanning go The code may have changed the dependency tree of the object ,

For example, the root object is found at the beginning of the scan A and B, B Have C The pointer to , GC Scan first A, then B hold C Hand over the pointer of A, GC Scan again B, At this time C It won't be scanned .

To avoid this problem , go stay GC The markup phase of the will enable the write barrier (Write Barrier).

Write barrier enabled (Write Barrier) after , When B hold C Hand over the pointer of A when , GC Would think that in this round of scanning C The pointer is alive ,

Even if A It may be lost later C, that C It's just the next round of recycling .

The write barrier is enabled only for pointers , And only in GC The marking phase of the , Usually, the value will be written directly to the target address .

go stay 1.9 It's starting to work Hybrid write barrier (Hybrid Write Barrier), The pseudocode is as follows :

writePointer(slot, ptr):

shade(*slot)

if any stack is grey:

shade(ptr)

*slot = ptr

The hybrid write barrier will mark the pointer to write to the target at the same time " The original pointer " and “ New pointer ".

The reason to mark the original pointer is , Other running threads may copy the value of this pointer to local variables on the register or stack at the same time ,

because Copying a pointer to a register or a local variable on a stack does not pass through a write barrier , So it may cause the pointer not to be marked , Imagine the following :

[go] b = obj

[go] oldx = nil

[gc] scan oldx...

[go] oldx = b.x // Copy b.x To local variables , Not through the writing barrier

[go] b.x = ptr // Writing barriers should be marked b.x Original value of

[gc] scan b...

If the write barrier does not mark the original value , that oldx It won't be scanned .

The reason for marking the new pointer is , It is possible for other running threads to shift the pointer position , Imagine the following :

[go] a = ptr

[go] b = obj

[gc] scan b...

[go] b.x = a // Writing barriers should be marked b.x The new value of

[go] a = nil

[gc] scan a...

If the write barrier does not mark a new value , that ptr It won't be scanned .

The hybrid write barrier allows GC There is no need to rescan each... After parallel marking G The stack , Can reduce the Mark Termination Medium STW Time .

Besides writing barriers , stay GC All newly assigned objects will immediately turn black during the process , Above mallocgc You can see in the function .

auxiliary GC(mutator assist)

In order to prevent heap It's growing too fast , stay GC In the process of execution, if it runs at the same time G Allocated memory , So this G Will be asked to assist GC Do part of the work .

stay GC At the same time in the process of G be called "mutator", "mutator assist" The mechanism is G auxiliary GC The mechanism for doing part of the work .

auxiliary GC There are two types of work done , One is the mark (Mark), The other is cleaning (Sweep).

The trigger of the auxiliary marker can be viewed from the above mallocgc function , When triggered G Will help scan " workload " Objects , The formula for calculating the workload is :

debtBytes * assistWorkPerByte

It means the size of the distribution multiplied by the coefficient assistWorkPerByte, assistWorkPerByte In function revise in , Formula is :

// Number of objects waiting to be scanned = Number of objects not scanned - Number of objects scanned

scanWorkExpected := int64(memstats.heap_scan) - c.scanWork

if scanWorkExpected < 1000 {

scanWorkExpected = 1000

}

// Distance triggered GC Of Heap size = Expect to trigger GC Of Heap size - Current Heap size

// Be careful next_gc The calculation of the following gc_trigger Dissimilarity , next_gc be equal to heap_marked * (1 + gcpercent / 100)

heapDistance := int64(memstats.next_gc) - int64(atomic.Load64(&memstats.heap_live))

if heapDistance <= 0 {

heapDistance = 1

}

// Every distribution 1 byte The number of objects that need to be scanned auxiliary = Number of objects waiting to be scanned / Distance triggered GC Of Heap size

c.assistWorkPerByte = float64(scanWorkExpected) / float64(heapDistance)

c.assistBytesPerWork = float64(heapDistance) / float64(scanWorkExpected)

What's different from the auxiliary marker is , Auxiliary cleaning application new span Only when you check , The auxiliary marker checks every time an object is allocated .

The trigger of auxiliary cleaning can be seen from the above cacheSpan function , When triggered G Will help recycle " workload " The object of the page , The formula for calculating the workload is :

spanBytes * sweepPagesPerByte // Not exactly the same , To be specific, see deductSweepCredit function

It means the size of the distribution multiplied by the coefficient sweepPagesPerByte, sweepPagesPerByte In function gcSetTriggerRatio in , Formula is :

// Current Heap size

heapLiveBasis := atomic.Load64(&memstats.heap_live)

// Distance triggered GC Of Heap size = Next trigger GC Of Heap size - Current Heap size

heapDistance := int64(trigger) - int64(heapLiveBasis)

heapDistance -= 1024 * 1024

if heapDistance < _PageSize {

heapDistance = _PageSize

}

// The number of pages cleaned

pagesSwept := atomic.Load64(&mheap_.pagesSwept)

// The number of pages not cleaned = Number of pages in use - The number of pages cleaned

sweepDistancePages := int64(mheap_.pagesInUse) - int64(pagesSwept)

if sweepDistancePages <= 0 {

mheap_.sweepPagesPerByte = 0

} else {

// Every distribution 1 byte( Of span) The number of pages that need to be cleaned up = The number of pages not cleaned / Distance triggered GC Of Heap size

mheap_.sweepPagesPerByte = float64(sweepDistancePages) / float64(heapDistance)

}

Root object

stay GC The first thing that needs to be marked is " Root object ", All objects that are reachable from the root object are considered alive .

The root object contains global variables , each G Variables on the stack of , GC The root object is scanned first, and then all objects that the root object can reach .

Scanning the root object involves a series of tasks , They are defined in [https://github.com/golang/go/blob/go1.9.2/src/runtime/mgcmark.go#L54] function :

Fixed Roots: Special scanning work

- fixedRootFinalizers: Scan the constructor queue

- fixedRootFreeGStacks: Release suspended G The stack

- Flush Cache Roots: Release mcache All in span, requirement STW

- Data Roots: Scan global variables for read and write

- BSS Roots: Scan read-only global variables

- Span Roots: Scan each one span A special object in ( Destructor list )

- Stack Roots: Scan each one G The stack

Marking stage (Mark) Will do one of them "Fixed Roots", "Data Roots", "BSS Roots", "Span Roots", "Stack Roots".

Complete the marking phase (Mark Termination) Will do one of them "Fixed Roots", "Flush Cache Roots".

Tag queue

GC The marking phase of the " Tag queue " To make sure that all objects reachable from the root object are marked , As mentioned above " gray " Is the object in the tag queue .

for instance , If there is [A, B, C] These three root objects , Then when you scan the root objects, you put them in the tag queue :

work queue: [A, B, C]

Background marking tasks are taken out of the tag queue A, If A Refer to the D, Then put D Put in the tag queue :

work queue: [B, C, D]

The background tag task is taken from the tag queue B, If B It also quotes D, This is because D stay gcmarkBits Corresponding bit It's already 1 So I will skip :

work queue: [C, D]

If it runs in parallel go The code assigns an object E, object E Will be immediately marked , But it doesn't get into the tag queue ( Because sure E There are no references to other objects ).

And then run in parallel go Code puts objects F Set to object E Members of , The write barrier marks the object F And then put the object F Add to the run queue :

work queue: [C, D, F]

The background tag task is taken from the tag queue C, If C There are no references to other objects , There is no need to deal with :

work queue: [D, F]

The background tag task is taken from the tag queue D, If D Refer to the X, Then put X Put in the tag queue :

work queue: [F, X]

The background tag task is taken from the tag queue F, If F There are no references to other objects , There is no need to deal with .

The background tag task is taken from the tag queue X, If X There are no references to other objects , There is no need to deal with .

Finally, the marking queue is empty , Mark complete , The living objects are [A, B, C, D, E, F, X].

The actual situation will be a little more complicated than the situation described above .

The tag queue is divided into global tag queue and each tag queue P Local tag queue for , This is similar to a running queue in a coroutine .

The queue is empty and marked after , You also need to stop the whole world and ban writing barriers , Then check again if it's empty .

Source code analysis

go Trigger gc From gcStart Function to :

// gcStart transitions the GC from _GCoff to _GCmark (if

// !mode.stwMark) or _GCmarktermination (if mode.stwMark) by

// performing sweep termination and GC initialization.

//

// This may return without performing this transition in some cases,

// such as when called on a system stack or with locks held.

func gcStart(mode gcMode, trigger gcTrigger) {

// Judge the present G Is it possible to seize , Don't trigger when you can't preempt GC

// Since this is called from malloc and malloc is called in

// the guts of a number of libraries that might be holding

// locks, don't attempt to start GC in non-preemptible or

// potentially unstable situations.

mp := acquirem()

if gp := getg(); gp == mp.g0 || mp.locks > 1 || mp.preemptoff != "" {

releasem(mp)

return

}

releasem(mp)

mp = nil

// Clean up the last round in parallel GC Not cleaned span

// Pick up the remaining unswept/not being swept spans concurrently

//

// This shouldn't happen if we're being invoked in background

// mode since proportional sweep should have just finished

// sweeping everything, but rounding errors, etc, may leave a

// few spans unswept. In forced mode, this is necessary since

// GC can be forced at any point in the sweeping cycle.

//

// We check the transition condition continuously here in case

// this G gets delayed in to the next GC cycle.

for trigger.test() && gosweepone() != ^uintptr(0) {

sweep.nbgsweep++

}

// locked , And then check again gcTrigger Is the condition of , If it doesn't work, it doesn't trigger GC

// Perform GC initialization and the sweep termination

// transition.

semacquire(&work.startSema)

// Re-check transition condition under transition lock.

if !trigger.test() {

semrelease(&work.startSema)

return

}

// Whether the record is forced to trigger , gcTriggerCycle yes runtime.GC With

// For stats, check if this GC was forced by the user.

work.userForced = trigger.kind == gcTriggerAlways || trigger.kind == gcTriggerCycle

// Determines whether the prohibition of parallelism is specified GC Parameters of

// In gcstoptheworld debug mode, upgrade the mode accordingly.

// We do this after re-checking the transition condition so

// that multiple goroutines that detect the heap trigger don't

// start multiple STW GCs.

if mode == gcBackgroundMode {

if debug.gcstoptheworld == 1 {

mode = gcForceMode

} else if debug.gcstoptheworld == 2 {

mode = gcForceBlockMode

}

}

// Ok, we're doing it! Stop everybody else

semacquire(&worldsema)

// Tracking processing

if trace.enabled {

traceGCStart()

}

// Start the background scan task (G)

if mode == gcBackgroundMode {

gcBgMarkStartWorkers()

}

// Reset flag related state

gcResetMarkState()

// Reset parameters

work.stwprocs, work.maxprocs = gcprocs(), gomaxprocs

work.heap0 = atomic.Load64(&memstats.heap_live)

work.pauseNS = 0

work.mode = mode

// Record the start time

now := nanotime()

work.tSweepTerm = now

work.pauseStart = now

// Stop all running G, And they are forbidden to run

systemstack(stopTheWorldWithSema)

// !!!!!!!!!!!!!!!!

// The world has stopped (STW)...

// !!!!!!!!!!!!!!!!

// Clean up the last round GC Not cleaned span, Make sure the last round GC Completed

// Finish sweep before we start concurrent scan.

systemstack(func() {

finishsweep_m()

})

// Clean sched.sudogcache and sched.deferpool

// clearpools before we start the GC. If we wait they memory will not be

// reclaimed until the next GC cycle.

clearpools()

// increase GC Count

work.cycles++

// Judge whether it is parallel or not GC Pattern

if mode == gcBackgroundMode { // Do as much work concurrently as possible

// Mark a new round GC Started

gcController.startCycle()

work.heapGoal = memstats.next_gc

// Set the GC Status as _GCmark

// Then enable the write barrier

// Enter concurrent mark phase and enable

// write barriers.

//

// Because the world is stopped, all Ps will

// observe that write barriers are enabled by

// the time we start the world and begin

// scanning.

//

// Write barriers must be enabled before assists are

// enabled because they must be enabled before

// any non-leaf heap objects are marked. Since

// allocations are blocked until assists can

// happen, we want enable assists as early as

// possible.

setGCPhase(_GCmark)

// Reset the count of background marking tasks

gcBgMarkPrepare() // Must happen before assist enable.

// Calculate the number of tasks to scan the root object

gcMarkRootPrepare()

// Mark all tiny alloc Objects waiting to be merged

// Mark all active tinyalloc blocks. Since we're

// allocating from these, they need to be black like

// other allocations. The alternative is to blacken

// the tiny block on every allocation from it, which

// would slow down the tiny allocator.

gcMarkTinyAllocs()

// Enable auxiliary GC

// At this point all Ps have enabled the write

// barrier, thus maintaining the no white to

// black invariant. Enable mutator assists to

// put back-pressure on fast allocating

// mutators.

atomic.Store(&gcBlackenEnabled, 1)

// Record the start time of the mark

// Assists and workers can start the moment we start

// the world.

gcController.markStartTime = now

// Restart the world

// The background tag task created earlier will start to work , After all background marking tasks have been completed , Enter the completion marking stage

// Concurrent mark.

systemstack(startTheWorldWithSema)

// !!!!!!!!!!!!!!!

// The world has rebooted ...

// !!!!!!!!!!!!!!!

// How long has the record stopped , And mark the start of the phase

now = nanotime()

work.pauseNS += now - work.pauseStart

work.tMark = now

} else {

// It's not parallel GC Pattern

// Record the start time of the completion marking phase

t := nanotime()

work.tMark, work.tMarkTerm = t, t

work.heapGoal = work.heap0

// Skip the marking phase , Execution completion marking phase

// All work will stop in the state of the world

// ( The marking phase will set up work.markrootDone=true, If you skip, its value is false, The completion of the marking phase will do all the work )

// Completing the tagging phase will restart the world

// Perform mark termination. This will restart the world.

gcMarkTermination(memstats.triggerRatio)

}

semrelease(&work.startSema)

}

Next, analyze one by one gcStart Called function , It is suggested to cooperate with the above " The process of reclaiming objects " In the picture understanding .

function gcBgMarkStartWorkers Used to start the background marking task , First, separate each of them P Start a :

// gcBgMarkStartWorkers prepares background mark worker goroutines.

// These goroutines will not run until the mark phase, but they must

// be started while the work is not stopped and from a regular G

// stack. The caller must hold worldsema.

func gcBgMarkStartWorkers() {

// Background marking is performed by per-P G's. Ensure that

// each P has a background GC G.

for _, p := range &allp {

if p == nil || p.status == _Pdead {

break

}

// If it has been started, it will not be started repeatedly

if p.gcBgMarkWorker == 0 {

go gcBgMarkWorker(p)

// Wait for the task notification semaphore after startup bgMarkReady Go on

notetsleepg(&work.bgMarkReady, -1)

noteclear(&work.bgMarkReady)

}

}

}

Although here for each P Started a background marking task , But the only thing that can work at the same time is 25%, This logic is in the coroutine M obtain G Called when findRunnableGCWorker in :

// findRunnableGCWorker returns the background mark worker for _p_ if it

// should be run. This must only be called when gcBlackenEnabled != 0.

func (c *gcControllerState) findRunnableGCWorker(_p_ *p) *g {

if gcBlackenEnabled == 0 {

throw("gcControllerState.findRunnable: blackening not enabled")

}

if _p_.gcBgMarkWorker == 0 {

// The mark worker associated with this P is blocked

// performing a mark transition. We can't run it

// because it may be on some other run or wait queue.

return nil

}

if !gcMarkWorkAvailable(_p_) {

// No work to be done right now. This can happen at

// the end of the mark phase when there are still

// assists tapering off. Don't bother running a worker

// now because it'll just return immediately.

return nil

}

// Atoms decrease by the corresponding value , If the decrease is greater than or equal to 0 Then return to true, Otherwise return to false

decIfPositive := func(ptr *int64) bool {

if *ptr > 0 {

if atomic.Xaddint64(ptr, -1) >= 0 {

return true

}

// We lost a race

atomic.Xaddint64(ptr, +1)

}

return false

}