tensor.nonzero(), mask, and [mask]

2022-08-05 00:23:00 【Le0v1n】

1. nonzero()

https://pytorch.org/docs/stable/generated/torch.nonzero.html?highlight=nonzero#torch.nonzero

torch.nonzero(input, *, out=None, as_tuple=False) → LongTensor or tuple of LongTensors

torch.nonzero(..., as_tuple=False) (the default) returns a 2-D tensor where each row is the index of a nonzero value.

By default, tensor.nonzero() returns a 2-D tensor containing the indices of the elements that qualify (that is, the nonzero ones).

For example:

import torch

# low=2, high=3 makes every element equal to 2, so every element is nonzero
x = torch.randint(low=2, high=3, size=[2, 3])
idx = x.nonzero()

print(f"x:\n{x}\n")
print(f"idx:\n{idx}\n")
print(f"x.size: {x.size()}")
print(f"idx.size: {idx.size()}")

Output:

x:

tensor([[2, 2, 2],

[2, 2, 2]])

idx:

tensor([[0, 0],

[0, 1],

[0, 2],

[1, 0],

[1, 1],

[1, 2]])

x.size: torch.Size([2, 3])

idx.size: torch.Size([6, 2])

As you can see, idx is a 2-D tensor holding the index of every qualifying element of x.

Because x has two dimensions, idx.size()[1] is also 2.

If the input has more dimensions, the result is still a 2-D tensor. For example:

import torch

x = torch.randint(low=2, high=3, size=[2, 3, 4])
idx = x.nonzero()

print(f"x:\n{x}\n")
print(f"idx:\n{idx}\n")
print(f"x.size: {x.size()}")
print(f"idx.size: {idx.size()}")

Output:

x:

tensor([[[2, 2, 2, 2],

[2, 2, 2, 2],

[2, 2, 2, 2]],

[[2, 2, 2, 2],

[2, 2, 2, 2],

[2, 2, 2, 2]]])

idx:

tensor([[0, 0, 0],

[0, 0, 1],

[0, 0, 2],

[0, 0, 3],

[0, 1, 0],

[0, 1, 1],

[0, 1, 2],

[0, 1, 3],

[0, 2, 0],

[0, 2, 1],

[0, 2, 2],

[0, 2, 3],

[1, 0, 0],

[1, 0, 1],

[1, 0, 2],

[1, 0, 3],

[1, 1, 0],

[1, 1, 1],

[1, 1, 2],

[1, 1, 3],

[1, 2, 0],

[1, 2, 1],

[1, 2, 2],

[1, 2, 3]])

x.size: torch.Size([2, 3, 4])

idx.size: torch.Size([24, 3])

Because x has 3 dimensions, idx.size()[1] is also 3.

Another example:

import torch

x = torch.randint(low=2, high=3, size=[2, 3, 4, 2])
idx = x.nonzero()

print(f"x:\n{x}\n")
print(f"idx:\n{idx}\n")
print(f"x.size: {x.size()}")
print(f"idx.size: {idx.size()}")

Output:

x:

tensor([[[[2, 2],

[2, 2],

[2, 2],

[2, 2]],

[[2, 2],

[2, 2],

[2, 2],

[2, 2]],

[[2, 2],

[2, 2],

[2, 2],

[2, 2]]],

[[[2, 2],

[2, 2],

[2, 2],

[2, 2]],

[[2, 2],

[2, 2],

[2, 2],

[2, 2]],

[[2, 2],

[2, 2],

[2, 2],

[2, 2]]]])

idx:

tensor([[0, 0, 0, 0],

[0, 0, 0, 1],

[0, 0, 1, 0],

[0, 0, 1, 1],

[0, 0, 2, 0],

[0, 0, 2, 1],

[0, 0, 3, 0],

[0, 0, 3, 1],

[0, 1, 0, 0],

[0, 1, 0, 1],

[0, 1, 1, 0],

[0, 1, 1, 1],

[0, 1, 2, 0],

[0, 1, 2, 1],

[0, 1, 3, 0],

[0, 1, 3, 1],

[0, 2, 0, 0],

[0, 2, 0, 1],

[0, 2, 1, 0],

[0, 2, 1, 1],

[0, 2, 2, 0],

[0, 2, 2, 1],

[0, 2, 3, 0],

[0, 2, 3, 1],

[1, 0, 0, 0],

[1, 0, 0, 1],

[1, 0, 1, 0],

[1, 0, 1, 1],

[1, 0, 2, 0],

[1, 0, 2, 1],

[1, 0, 3, 0],

[1, 0, 3, 1],

[1, 1, 0, 0],

[1, 1, 0, 1],

[1, 1, 1, 0],

[1, 1, 1, 1],

[1, 1, 2, 0],

[1, 1, 2, 1],

[1, 1, 3, 0],

[1, 1, 3, 1],

[1, 2, 0, 0],

[1, 2, 0, 1],

[1, 2, 1, 0],

[1, 2, 1, 1],

[1, 2, 2, 0],

[1, 2, 2, 1],

[1, 2, 3, 0],

[1, 2, 3, 1]])

x.size: torch.Size([2, 3, 4, 2])

idx.size: torch.Size([48, 4])

Because x has 4 dimensions, idx.size()[1] is also 4.

So we can conclude:

By default, tensor.nonzero() returns a 2-D tensor: each row is the index of one nonzero element, and the number of columns equals the number of dimensions of the input tensor.
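The examples above only use tensors in which every element is nonzero. As a small supplementary sketch (the values are made up for illustration), the snippet below uses a tensor that actually contains zeros, and also shows the as_tuple=True form from the signature, which returns one 1-D index tensor per dimension:

```python
import torch

# A small tensor that really contains zeros (values chosen for illustration).
x = torch.tensor([[0, 5, 0],
                  [7, 0, 9]])

# Default form (as_tuple=False): one row per nonzero element, one column per dimension.
idx = torch.nonzero(x)
print(idx)        # each row is [row_index, col_index]
print(idx.shape)  # [number of nonzero elements, x.dim()]

# as_tuple=True: a tuple with one 1-D index tensor per dimension,
# which can be used directly for indexing.
rows, cols = torch.nonzero(x, as_tuple=True)
values = x[rows, cols]
print(values)     # the nonzero values, in row-major order
```

The default form is convenient for reading off coordinates, while the tuple form is convenient for indexing back into the tensor.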

2. mask

When implementing YOLOv3 by hand, you need to build a mask. Usually, whether a prediction is a positive sample is decided by whether its confidence reaches a threshold:

- ≥ thresh: positive sample

- < thresh: negative sample
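As a minimal sketch of this rule (the confidence values and the threshold of 0.5 are made up), a comparison on a tensor of confidences yields a boolean mask directly. Note that the rule as listed uses ≥, whereas the snippets in this article use a strict >, so the two differ only for values exactly equal to the threshold:

```python
import torch

thresh = 0.5                           # assumed threshold, for illustration only
conf = torch.tensor([0.9, 0.5, 0.1])   # made-up confidence values

positive = conf >= thresh              # True for positive samples
negative = ~positive                   # True for negative samples
strict = conf > thresh                 # strict variant: excludes values equal to thresh

print(positive)
print(negative)
print(strict)
```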

In YOLOv3, the network's forward-pass output generally has shape [N, H, W, 3, 8], where:

- N: batch size

- H: height

- W: width

- 3: the 3 predictions per grid cell

- 8: conf + loc + cls = 1 + 4 + 3

The confidence conf is the first element of the last dimension, i.e. output[..., 0], which gives us the mask:

output = net(input) # [N, H, W, 3, 8]

mask = output[..., 0] > thresh # [N, H, W, 3]

So the question is: why does the resulting mask have size [N, H, W, 3]?

Let's look at what this mask expression actually does.

import torch

x = torch.randint(low=2, high=3, size=[2, 3])

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1

print(f"x:\n{x}\n")
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")

Output:

x:

tensor([[2, 2, 2],

[2, 2, 2]])

x[..., 0]:

tensor([2, 2])

x[..., 0].size:

torch.Size([2])

mask:

tensor([True, True])

mask.size:

torch.Size([2])

That may not be obvious enough, so let's change the values of x:

import torch

x = torch.tensor(data=[[0, 2, 2],
                       [0, 2, 2]], dtype=torch.int8)

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1

print(f"x:\n{x}\n")
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")

Output:

x:

tensor([[0, 2, 2],

[0, 2, 2]], dtype=torch.int8)

x[..., 0]:

tensor([0, 0], dtype=torch.int8)

x[..., 0].size:

torch.Size([2])

mask:

tensor([False, False])

mask.size:

torch.Size([2])

It looks as if x[..., 0] takes the first column (this description is not precise). Now let's add a dimension to x:

import torch

x = torch.randint(low=0, high=3, size=[2, 3, 2])

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1

print(f"x:\n{x}\n")
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")

Output:

x:

tensor([[[2, 1],

[2, 1],

[1, 0]],

[[2, 0],

[1, 2],

[0, 0]]])

x[..., 0]:

tensor([[2, 2, 1],

[2, 1, 0]])

x[..., 0].size:

torch.Size([2, 3])

mask:

tensor([[ True, True, False],

[ True, False, False]])

mask.size:

torch.Size([2, 3])

The conclusion still holds. Let's expand x once more, to four dimensions:

import torch

x = torch.randint(low=0, high=3, size=[2, 3, 2, 1])

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1

print(f"x:\n{x}\n")
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")

Output:

x:

tensor([[[[0],

[0]],

[[0],

[1]],

[[2],

[2]]],

[[[1],

[2]],

[[2],

[2]],

[[2],

[2]]]])

x[..., 0]:

tensor([[[0, 0],

[0, 1],

[2, 2]],

[[1, 2],

[2, 2],

[2, 2]]])

x[..., 0].size:

torch.Size([2, 3, 2])

mask:

tensor([[[False, False],

[False, False],

[ True, True]],

[[False, True],

[ True, True],

[ True, True]]])

mask.size:

torch.Size([2, 3, 2])

The conclusion holds.
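To make the "first column" description more precise, here is a small sketch (the shape and seed are arbitrary choices). Indexing the last dimension with the integer 0 selects element 0 along that dimension and drops the dimension, which is the same as x.select(-1, 0). Indexing with the slice 0:1 instead keeps the dimension with size 1:

```python
import torch

torch.manual_seed(0)  # arbitrary seed, only for reproducibility
x = torch.randint(low=0, high=3, size=[2, 3, 4, 5])

first = x[..., 0]        # integer index: the last dimension is dropped
same = x.select(-1, 0)   # equivalent way to pick element 0 along the last dimension
kept = x[..., 0:1]       # slice: the last dimension is kept with size 1

mask = first > 1         # boolean tensor with the same shape as `first`

print(first.shape)  # torch.Size([2, 3, 4])
print(kept.shape)   # torch.Size([2, 3, 4, 1])
print(mask.dtype)   # torch.bool
```

This is why the mask always has the shape of x with its last dimension removed.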

3. [mask]

Above we analyzed what mask selects. So what happens when we apply mask to x, i.e. x[mask]?

import torch

x = torch.randint(low=0, high=3, size=[2, 3])

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1
filtered_x = x[mask]

print(f"x:\n{x}\n")
print(f"x.size:\n{x.size()}\n")
print("-" * 50)
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print("-" * 50)
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")
print("-" * 50)
print(f"filtered_x:\n{filtered_x}\n")
print(f"filtered_x.size:\n{filtered_x.size()}\n")

Output 1:

x:

tensor([[2, 2, 1],

[0, 0, 2]])

x.size:

torch.Size([2, 3])

--------------------------------------------------

x[..., 0]:

tensor([2, 0])

x[..., 0].size:

torch.Size([2])

--------------------------------------------------

mask:

tensor([ True, False])

mask.size:

torch.Size([2])

--------------------------------------------------

filtered_x:

tensor([[2, 2, 1]])

filtered_x.size:

torch.Size([1, 3])

The mask is computed from a "column" (the first element along the last dimension); x[mask] then fetches the rows that satisfy the condition, and the result is a 2-D tensor.

Output 2:

x:

tensor([[0, 1, 0],

[1, 0, 0]])

x.size:

torch.Size([2, 3])

--------------------------------------------------

x[..., 0]:

tensor([0, 1])

x[..., 0].size:

torch.Size([2])

--------------------------------------------------

mask:

tensor([False, False])

mask.size:

torch.Size([2])

--------------------------------------------------

filtered_x:

tensor([], size=(0, 3), dtype=torch.int64)

filtered_x.size:

torch.Size([0, 3])

The same holds here: no row satisfies the condition, so x[mask] is an empty 2-D tensor of size [0, 3].

Let's increase the number of dimensions of x:

import torch

x = torch.randint(low=0, high=3, size=[2, 3, 2])

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1
filtered_x = x[mask]

print(f"x:\n{x}\n")
print(f"x.size:\n{x.size()}\n")
print("-" * 50)
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print("-" * 50)
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")
print("-" * 50)
print(f"filtered_x:\n{filtered_x}\n")
print(f"filtered_x.size:\n{filtered_x.size()}\n")

Output 1:

x:

tensor([[[0, 2],

[0, 2],

[0, 2]],

[[2, 2],

[1, 0],

[1, 2]]])

x.size:

torch.Size([2, 3, 2])

--------------------------------------------------

x[..., 0]:

tensor([[0, 0, 0],

[2, 1, 1]])

x[..., 0].size:

torch.Size([2, 3])

--------------------------------------------------

mask:

tensor([[False, False, False],

[ True, False, False]])

mask.size:

torch.Size([2, 3])

--------------------------------------------------

filtered_x:

tensor([[2, 2]])

filtered_x.size:

torch.Size([1, 2])

Output 2:

x:

tensor([[[1, 0],

[2, 0],

[2, 1]],

[[2, 0],

[2, 0],

[2, 1]]])

x.size:

torch.Size([2, 3, 2])

--------------------------------------------------

x[..., 0]:

tensor([[1, 2, 2],

[2, 2, 2]])

x[..., 0].size:

torch.Size([2, 3])

--------------------------------------------------

mask:

tensor([[False, True, True],

[ True, True, True]])

mask.size:

torch.Size([2, 3])

--------------------------------------------------

filtered_x:

tensor([[2, 0],

[2, 1],

[2, 0],

[2, 0],

[2, 1]])

filtered_x.size:

torch.Size([5, 2])

We can see that the conclusion still holds: the mask is computed from a "column", and x[mask] fetches the rows that satisfy the condition as a 2-D tensor. Let's add one more dimension:

import torch

x = torch.randint(low=0, high=3, size=[2, 3, 2, 2])

# True where the first element of the last dimension is greater than 1, otherwise False
mask = x[..., 0] > 1
filtered_x = x[mask]

print(f"x:\n{x}\n")
print(f"x.size:\n{x.size()}\n")
print("-" * 50)
print(f"x[..., 0]:\n{x[..., 0]}\n")
print(f"x[..., 0].size:\n{x[..., 0].size()}\n")
print("-" * 50)
print(f"mask:\n{mask}\n")
print(f"mask.size:\n{mask.size()}\n")
print("-" * 50)
print(f"filtered_x:\n{filtered_x}\n")
print(f"filtered_x.size:\n{filtered_x.size()}\n")

Output 1:

x:

tensor([[[[0, 0],

[0, 1]],

[[1, 1],

[0, 0]],

[[0, 1],

[2, 1]]],

[[[2, 2],

[0, 1]],

[[0, 2],

[0, 2]],

[[0, 0],

[2, 2]]]])

x.size:

torch.Size([2, 3, 2, 2])

--------------------------------------------------

x[..., 0]:

tensor([[[0, 0],

[1, 0],

[0, 2]],

[[2, 0],

[0, 0],

[0, 2]]])

x[..., 0].size:

torch.Size([2, 3, 2])

--------------------------------------------------

mask:

tensor([[[False, False],

[False, False],

[False, True]],

[[ True, False],

[False, False],

[False, True]]])

mask.size:

torch.Size([2, 3, 2])

--------------------------------------------------

filtered_x:

tensor([[2, 1],

[2, 2],

[2, 2]])

filtered_x.size:

torch.Size([3, 2])

Output 2:

x:

tensor([[[[2, 2],

[2, 2]],

[[1, 0],

[0, 2]],

[[0, 2],

[2, 2]]],

[[[0, 0],

[1, 0]],

[[1, 1],

[0, 1]],

[[2, 1],

[1, 0]]]])

x.size:

torch.Size([2, 3, 2, 2])

--------------------------------------------------

x[..., 0]:

tensor([[[2, 2],

[1, 0],

[0, 2]],

[[0, 1],

[1, 0],

[2, 1]]])

x[..., 0].size:

torch.Size([2, 3, 2])

--------------------------------------------------

mask:

tensor([[[ True, True],

[False, False],

[False, True]],

[[False, False],

[False, False],

[ True, False]]])

mask.size:

torch.Size([2, 3, 2])

--------------------------------------------------

filtered_x:

tensor([[2, 2],

[2, 2],

[2, 2],

[2, 1]])

filtered_x.size:

torch.Size([4, 2])

The conclusion remains!
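One way to see why x[mask] is always 2-D in these experiments is to connect it back to nonzero(). In the sketch below (values made up for illustration), indexing with the boolean mask gives the same result as indexing with the tuple of index tensors from torch.nonzero(mask, as_tuple=True). The mask covers every dimension except the last, so the result has one row per True entry and keeps only the last dimension:

```python
import torch

# Shape [2, 3, 2]; values chosen for illustration.
x = torch.tensor([[[0, 2], [2, 1], [1, 0]],
                  [[2, 0], [0, 0], [2, 2]]])

mask = x[..., 0] > 1   # shape [2, 3]
filtered = x[mask]     # shape [number of True entries, 2]

# Equivalent indexing via the coordinates of the True entries.
idx = torch.nonzero(mask, as_tuple=True)
via_index = x[idx]

print(filtered)
print(torch.equal(filtered, via_index))
print(filtered.shape[0] == int(mask.sum()))
```

If the mask covered fewer leading dimensions, the result would keep all the remaining trailing dimensions and would no longer be 2-D.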

4. Conclusion

Now we finally understand what these two lines do:

output = net(input)  # [N, H, W, 3, 8]

mask = output[..., 0] > thresh  # [N, H, W, 3]

- mask: picks out the "columns" that satisfy the condition (the dimension used for the test disappears, so [N, H, W, 3, 8] -> [N, H, W, 3])

- output[mask]: fetches the rows that satisfy the condition (a 2-D tensor)

For example:

def get_idx_and_info(self, output, thresh):
    output = output.permute(0, 2, 3, 1)  # [N, 24, H, W] -> [N, H, W, 3*8]
    N, H, W, _ = output.size()
    output = output.reshape(N, H, W, 3, -1)  # [N, H, W, 24] -> [N, H, W, 3, 8]

    # Define the mask (marks the rows that satisfy the condition)
    mask = output[..., 0] > thresh  # confidence > thresh; size [N, H, W, 3] (the integer index 0 drops the last dimension)
    idx = mask.nonzero()  # size [number of matches, 4]; 4 because mask has 4 dimensions
    info = output[mask]  # size [number of matches, 8]; 8 = conf + loc + cls
    return idx, info
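To try this without a real network, here is a hedged, standalone sketch of the same logic. The free function, the num_anchors parameter, the fake output tensor, and the threshold are all assumptions made for illustration. It plants two confident predictions in an otherwise all-zero output and checks that each row of idx lines up with the corresponding row of info:

```python
import torch


def get_idx_and_info(output, thresh, num_anchors=3):
    """Standalone variant of the method above (hypothetical helper for this demo)."""
    output = output.permute(0, 2, 3, 1)                # [N, C, H, W] -> [N, H, W, C]
    N, H, W, _ = output.size()
    output = output.reshape(N, H, W, num_anchors, -1)  # [N, H, W, C] -> [N, H, W, 3, 8]
    mask = output[..., 0] > thresh                     # [N, H, W, 3]
    idx = mask.nonzero()                               # [K, 4]: (n, h, w, anchor)
    info = output[mask]                                # [K, 8]
    return idx, info


# Fake network output: all zeros except two confident predictions.
N, H, W = 2, 4, 4
output = torch.zeros(N, 3 * 8, H, W)
# After the reshape, channel c maps to anchor c // 8 and field c % 8,
# so field 0 (confidence) of anchor a lives in channel a * 8.
output[0, 0 * 8, 1, 2] = 0.9   # image 0, row 1, col 2, anchor 0
output[1, 2 * 8, 3, 0] = 0.8   # image 1, row 3, col 0, anchor 2

idx, info = get_idx_and_info(output, thresh=0.5)
print(idx)         # one row per match: [n, h, w, anchor]
print(info.shape)  # [number of matches, 8]
print(info[:, 0])  # confidences of the matches
```

Row i of idx says where the i-th match sits in the grid, and row i of info holds its 8 predicted values, so the two tensors can be processed side by side.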

边栏推荐

- tiup telemetry

- IDEA file encoding modification

- typeScript - Partially apply a function

- 2022杭电多校第三场 K题 Taxi

- Flask框架 根据源码分析可扩展点

- "No title"

- 软件测试面试题:您以往所从事的软件测试工作中,是否使用了一些工具来进行软件缺陷(Bug)的管理?如果有,请结合该工具描述软件缺陷(Bug)跟踪管理的流程?

- 【论文笔记】—低照度图像增强—Unsupervised—EnlightenGAN—2019-TIP

- 论文解读( AF-GCL)《Augmentation-Free Graph Contrastive Learning with Performance Guarantee》

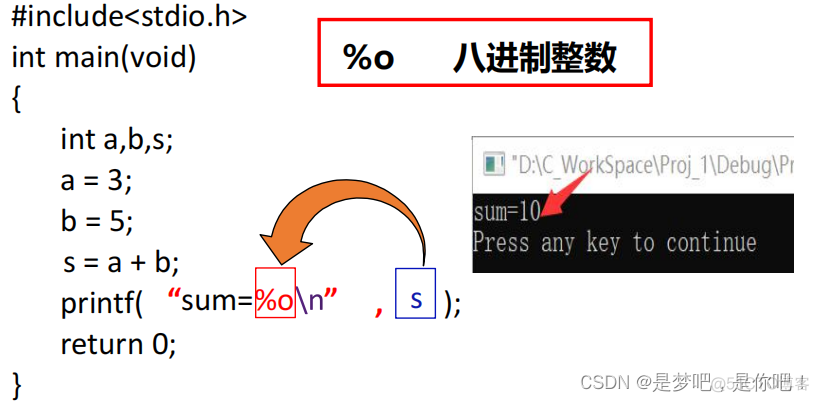

- 简单的顺序结构程序(C语言)

猜你喜欢

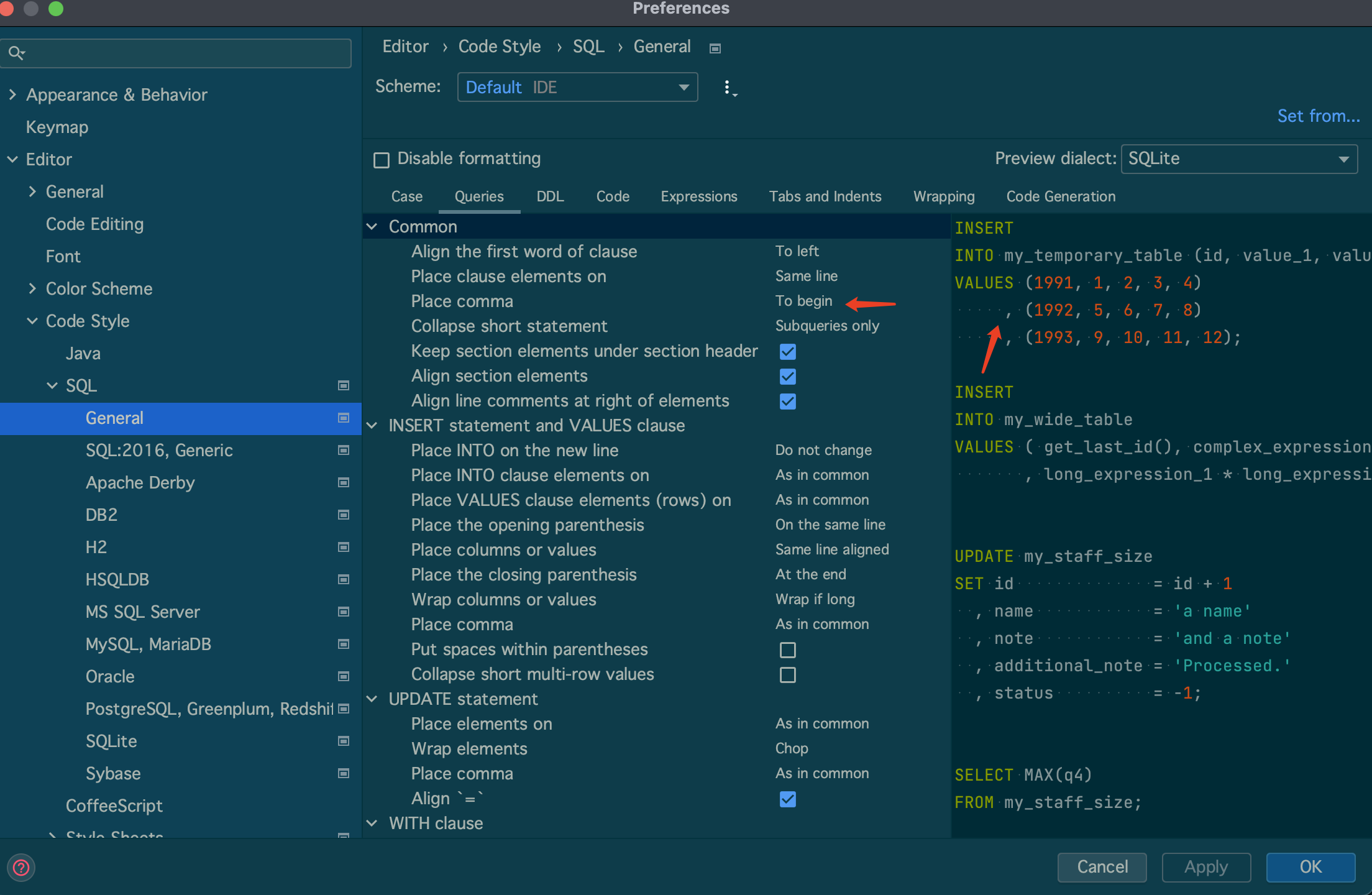

【idea】idea配置sql格式化

性能测试如何准备测试数据

简单的顺序结构程序(C语言)

Will domestic websites use Hong Kong servers be blocked?

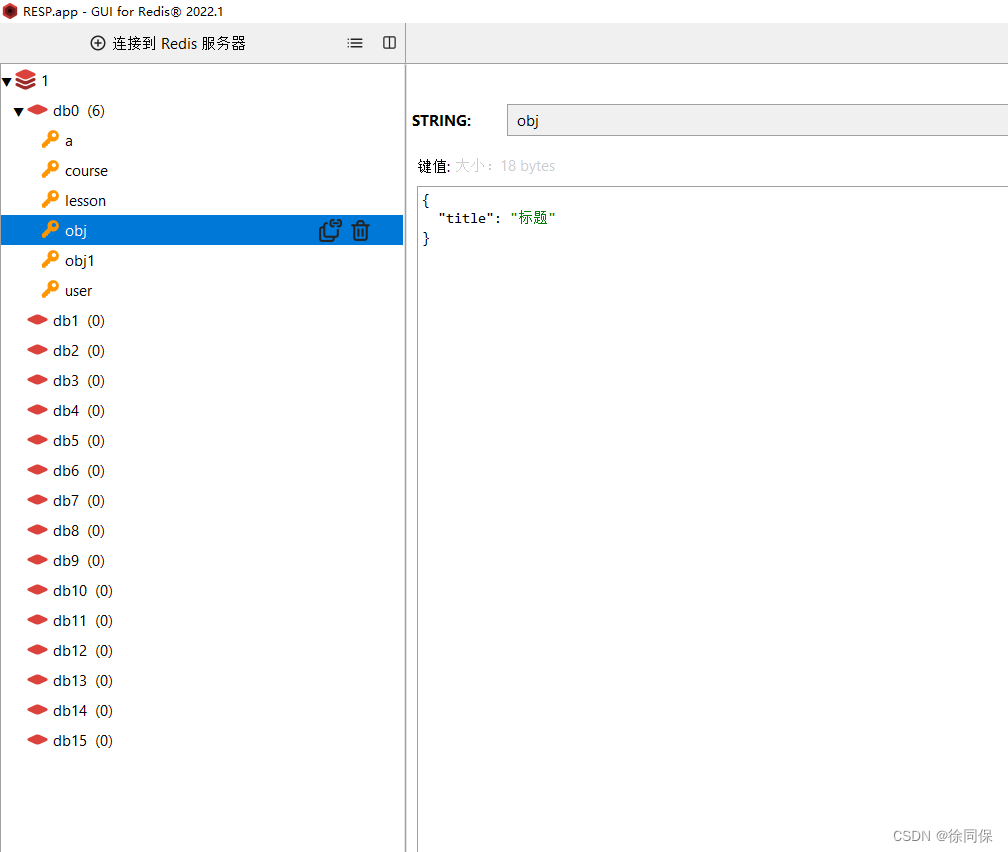

redis可视化管理软件Redis Desktop Manager2022

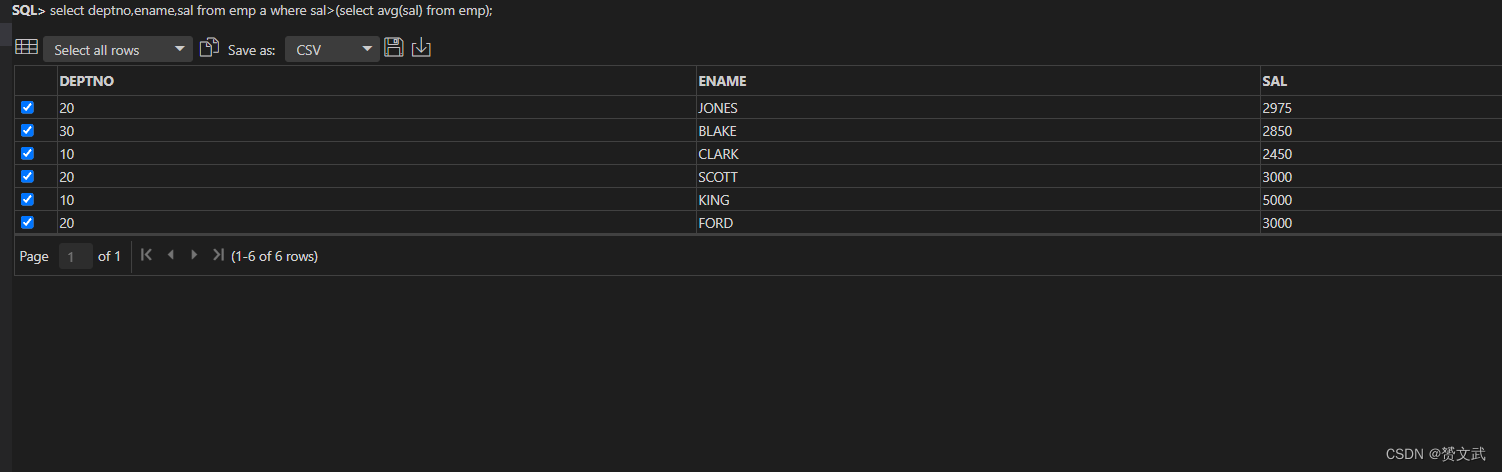

子连接中的参数传递

leetcode: 266. All Palindromic Permutations

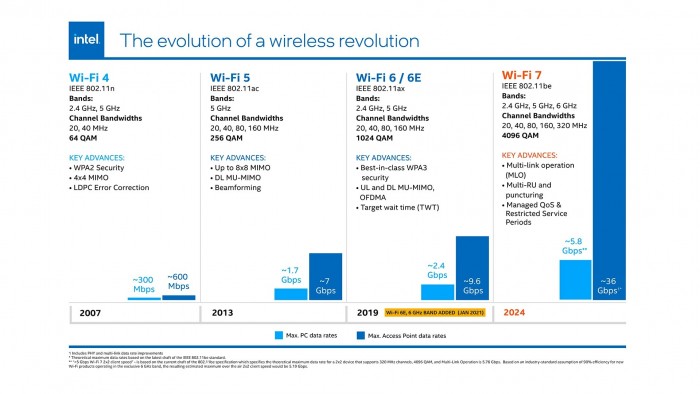

英特尔WiFi 7产品将于2024年亮相 最高速度可达5.8Gbps

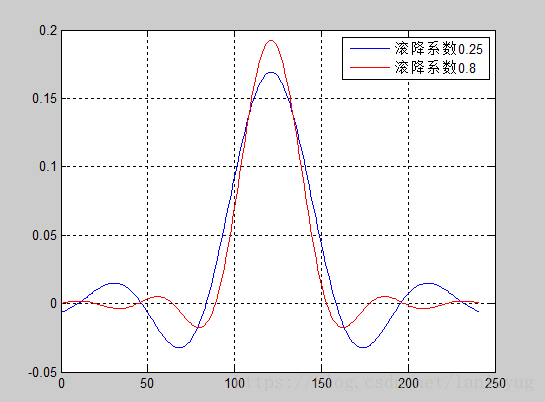

matlab中rcosdesign函数升余弦滚降成型滤波器

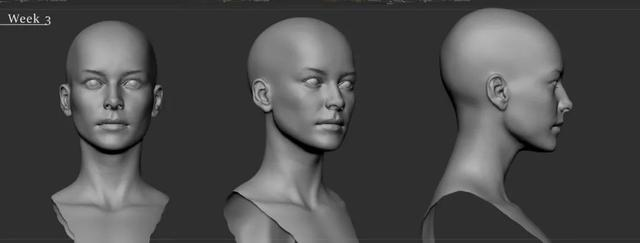

The master teaches you the 3D real-time character production process, the game modeling process sharing

随机推荐

【LeetCode】双指针题解汇总

E - Distance Sequence (前缀和优化dp

动态上传jar包热部署

NMS原理及其代码实现

英特尔WiFi 7产品将于2024年亮相 最高速度可达5.8Gbps

2022牛客多校训练第二场 J题 Link with Arithmetic Progression

关于使用read table 语句

matlab中rcosdesign函数升余弦滚降成型滤波器

[230] Execute command error after connecting to Redis MISCONF Redis is configured to save RDB snapshots

STC89C52RC的P4口的应用问题

元宇宙:未来我们的每一个日常行为是否都能成为赚钱工具?

【LeetCode】图解 904. 水果成篮

翁恺C语言程序设计网课笔记合集

怎样进行在不改变主线程执行的时候,进行日志的记录

2022杭电多校 第三场 B题 Boss Rush

[230]连接Redis后执行命令错误 MISCONF Redis is configured to save RDB snapshots

D - I Hate Non-integer Number (选数的计数dp

2022 Hangzhou Electric Multi-School Training Session 3 1009 Package Delivery

2022牛客多校第三场 A Ancestor

软件测试面试题:软件测试类型都有哪些?