
College entrance examination admission score line crawler

2022-07-02 15:38:00 【jidawanghao】

# -*- coding: utf-8 -*-
'''
author : dy
Development time : 2021/6/15 17:15
'''
import asyncio
import sys
import time
from pathlib import Path

import aiohttp
import pandas as pd
from tqdm import tqdm

# Results are appended to college_info.csv in the current working directory
CSV_PATH = Path.cwd() / 'college_info.csv'
URL_TEMPLATE = 'https://static-data.eol.cn/www/2.0/school/%d/info.json'
HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36',
}


def get_url_list(max_id):
    # Candidate school ids are 0 .. max_id - 1
    not_crawled = set(range(max_id))
    if CSV_PATH.exists():
        # Skip ids already saved by a previous run, so an interrupted crawl can resume
        df = pd.read_csv(CSV_PATH)
        not_crawled -= set(df['School id'].unique())
    return [URL_TEMPLATE % school_id for school_id in sorted(not_crawled)]


async def get_json_data(session, url, semaphore):
    # The semaphore caps how many requests are in flight at once
    async with semaphore:
        try:
            async with session.get(url, timeout=aiohttp.ClientTimeout(total=6)) as response:
                if response.status != 200:
                    return None
                # Awaiting the body suspends this task; the event loop runs other tasks meanwhile.
                # The body is decoded as UTF-8; content_type=None skips aiohttp's Content-Type check.
                json_data = await response.json(encoding='utf-8', content_type=None)
            data = json_data.get('data') if isinstance(json_data, dict) else None
            if data:
                save_to_csv(data)
        except Exception:
            # Timeouts, connection errors, non-JSON bodies and records missing a field are
            # skipped; their ids are not in the CSV, so the next run requests them again
            return None


def save_to_csv(json_info):
    # School level: "985 211", "211", or the API's own level name
    if json_info['f985'] == '1' and json_info['f211'] == '1':
        level = '985 211'
    elif json_info['f211'] == '1':
        level = '211'
    else:
        level = json_info['level_name']
    rank = json_info['rank']
    save_info = {
        'School id': json_info['school_id'],
        'School name': json_info['name'],
        'School level': level,
        'Ruanke ranking': rank['ruanke_rank'],
        'Alumni Association ranking': rank['xyh_rank'],
        'Wu Shulian ranking': rank['wsl_rank'],
        'QS World ranking': rank['qs_world'],
        'US World ranking': rank['us_rank'],
        'School type': json_info['type_name'],
        'Province': json_info['province_name'],
        'City': json_info['city_name'],
        'Location': json_info['town_name'],
        'Admissions Office phone': json_info['phone'],
        'Admissions Office website': json_info['site'],
    }
    df = pd.DataFrame(save_info, index=[0])
    # Runs in the event-loop thread with no await, so appends from concurrent tasks never interleave.
    # The header row is written only when the file is first created.
    df.to_csv(CSV_PATH, index=False, mode='a', header=not CSV_PATH.exists())


async def main():
    url_list = get_url_list(5000)
    # Limit the amount of concurrency
    semaphore = asyncio.Semaphore(500)
    # One shared session for all requests; limit=0 removes aiohttp's default
    # 100-connection cap so the semaphore alone sets the concurrency
    connector = aiohttp.TCPConnector(ssl=False, limit=0)
    async with aiohttp.ClientSession(connector=connector, headers=HEADERS, trust_env=True) as session:
        tasks = [asyncio.create_task(get_json_data(session, url, semaphore)) for url in url_list]
        # Await tasks in completion order so the progress bar advances as results arrive
        for t in tqdm(asyncio.as_completed(tasks), total=len(tasks)):
            await t


if __name__ == '__main__':
    start = time.time()
    if sys.platform == 'win32':
        # Windows only: use the selector event loop instead of the default Proactor loop
        asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())
    asyncio.run(main())
    if CSV_PATH.exists():
        df = pd.read_csv(CSV_PATH)
        df.drop_duplicates(keep='first', inplace=True)
        # Replace a Ruanke rank of 0 (presumably "unranked") with 999 so it sorts after real ranks
        df.loc[df['Ruanke ranking'] == 0, 'Ruanke ranking'] = 999
        df.sort_values('School id', inplace=True)
        df.reset_index(drop=True, inplace=True)
        df.to_csv(CSV_PATH, index=False)
    print(f'Crawl complete, total time: {round(time.time() - start, 2)} seconds')
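Much of the crawler's speed comes from creating one task per URL and letting an `asyncio.Semaphore` bound how many of them run at once, while `asyncio.as_completed` hands back tasks in the order they finish. A minimal, stdlib-only sketch of that pattern follows; `fake_fetch` and `crawl` are hypothetical names, and the HTTP request is replaced by `asyncio.sleep` so the concurrency cap can be checked without touching the network.

```python
import asyncio


async def fake_fetch(i, semaphore, stats):
    """Hypothetical stand-in for the real fetch: sleeps instead of doing HTTP."""
    async with semaphore:
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.01)  # simulated network wait; other tasks run meanwhile
        stats["in_flight"] -= 1
        return i


async def crawl(n_urls, limit):
    semaphore = asyncio.Semaphore(limit)
    stats = {"in_flight": 0, "peak": 0}
    tasks = [asyncio.create_task(fake_fetch(i, semaphore, stats)) for i in range(n_urls)]
    results = []
    # as_completed yields awaitables in completion order, like the tqdm loop in the script
    for fut in asyncio.as_completed(tasks):
        results.append(await fut)
    return sorted(results), stats["peak"]


if __name__ == "__main__":
    ids, peak = asyncio.run(crawl(n_urls=50, limit=5))
    print(len(ids), peak)  # 50 5
```

With 50 simulated URLs and a limit of 5, every task completes but no more than 5 are ever inside the semaphore at the same time; the crawler applies the same structure with a limit of 500.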

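The URL list builder gives the crawler its resume behaviour: ids already present in the CSV are not requested again, and ids whose request failed never reach the CSV, so the next run retries them. The stdlib-only sketch below mirrors that set-difference idea with the `csv` module instead of pandas; the function name `pending_urls` and its `id_column` parameter are illustrative assumptions, not part of the original script, and the column name must match whatever header the crawler actually wrote.

```python
import csv
import tempfile
from pathlib import Path

URL_TEMPLATE = "https://static-data.eol.cn/www/2.0/school/%d/info.json"


def pending_urls(max_id, csv_path, id_column):
    """Return URLs for ids 0..max_id-1 that do not yet appear in csv_path.

    id_column is the (assumed) name of the CSV column holding the school id.
    """
    done = set()
    if csv_path.exists():
        with csv_path.open(newline="", encoding="utf-8") as f:
            for row in csv.DictReader(f):
                done.add(int(row[id_column]))
    return [URL_TEMPLATE % i for i in range(max_id) if i not in done]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "college_info.csv"
        # Simulate a previous run that saved ids 0 and 2
        path.write_text("School id,School name\n0,A\n2,B\n", encoding="utf-8")
        for url in pending_urls(4, path, "School id"):
            print(url)  # only the URLs for ids 1 and 3
```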
- 4. Data splitting of Flink real-time project

- 面对“缺芯”挑战,飞凌如何为客户产能提供稳定强大的保障?

- [leetcode] 283 move zero

- Leetcode skimming -- incremental ternary subsequence 334 medium

- 百变大7座,五菱佳辰产品力出众,人性化大空间,关键价格真香

- LeetCode刷题——递增的三元子序列#334#Medium

- 【LeetCode】417-太平洋大西洋水流问题

- Be a good gatekeeper on the road of anti epidemic -- infrared thermal imaging temperature detection system based on rk3568

- YOLOV5 代码复现以及搭载服务器运行

- 做好抗“疫”之路的把关人——基于RK3568的红外热成像体温检测系统

猜你喜欢

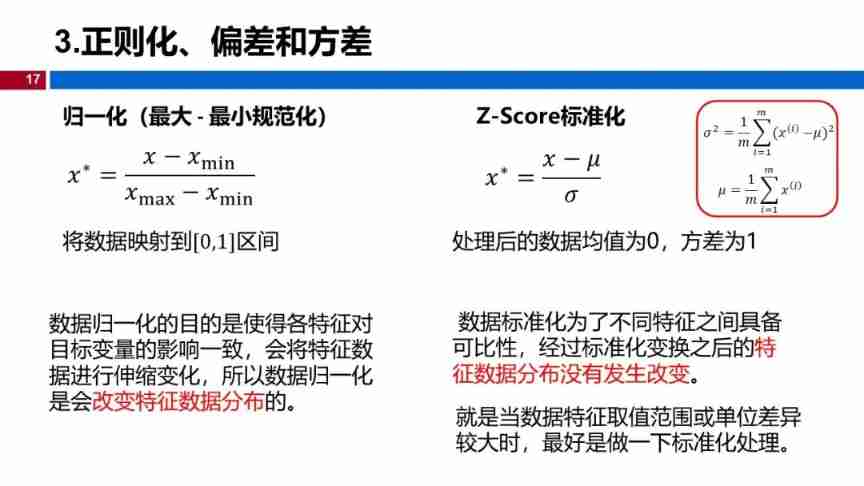

(Video + graphic) machine learning introduction series - Chapter 5 machine learning practice

15_ Redis_ Redis. Conf detailed explanation

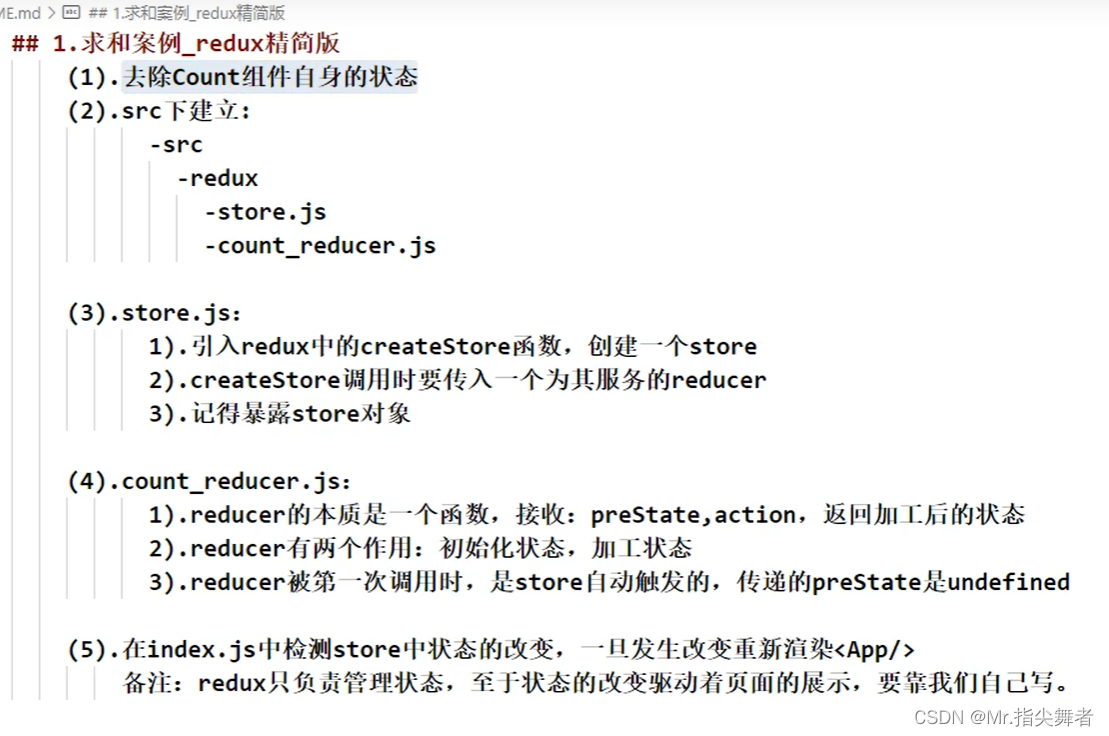

Redux——详解

Solution of Queen n problem

让您的HMI更具优势,FET-G2LD-C核心板是个好选择

14_Redis_乐观锁

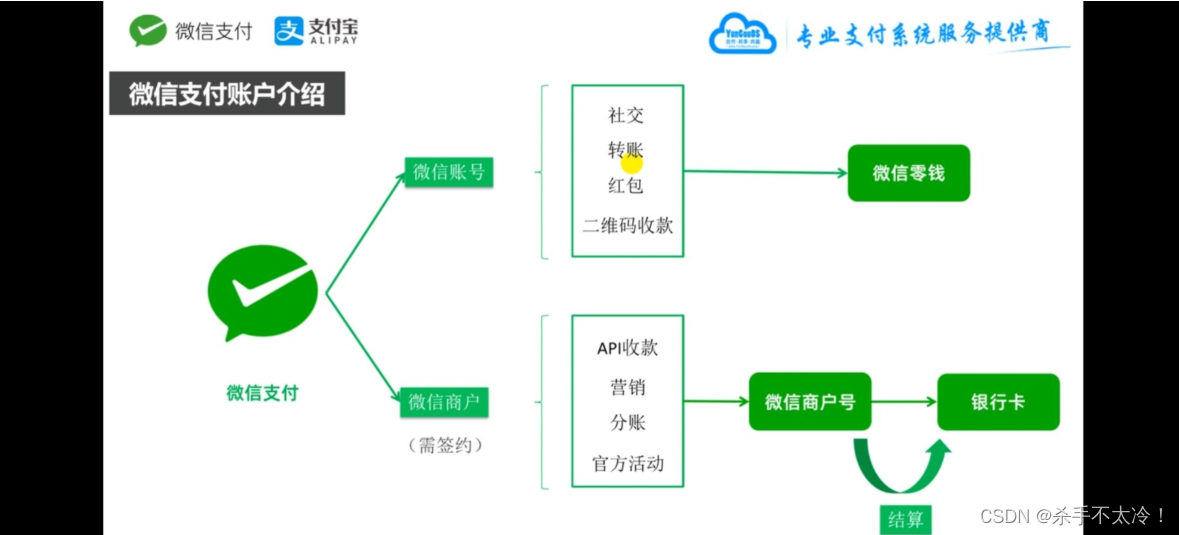

微信支付宝账户体系和支付接口业务流程

4. Jctree related knowledge learning

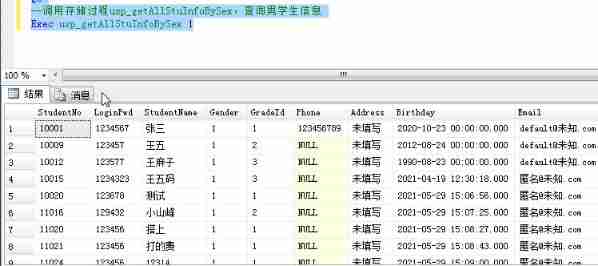

SQL stored procedure

Leetcode skimming -- incremental ternary subsequence 334 medium

随机推荐

10_ Redis_ geospatial_ command

【LeetCode】486-预测赢家

语义分割学习笔记(一)

Data analysis thinking analysis methods and business knowledge - business indicators

4. Data splitting of Flink real-time project

[leetcode] 695 - maximum area of the island

Steps for Navicat to create a new database

12_ Redis_ Bitmap_ command

【LeetCode】283-移动零

Guangzhou Emergency Management Bureau issued a high temperature and high humidity chemical safety reminder in July

Bing.com網站

Leetcode skimming -- verifying the preorder serialization of binary tree # 331 # medium

03.golang初步使用

[leetcode] 486 predict winners

. Solution to the problem of Chinese garbled code when net core reads files

List set & UML diagram

Storage read-write speed and network measurement based on rz/g2l | ok-g2ld-c development board

Solve the problem of frequent interruption of mobaxterm remote connection

[leetcode] 167 - sum of two numbers II - enter an ordered array

(4) Flink's table API and SQL table schema