当前位置:网站首页>Three schemes of SVM to realize multi classification

Three schemes of SVM to realize multi classification

2022-07-06 21:01:00 【wx5d786476cd8b2】

SVM It is a binary classifier

SVM The algorithm was originally designed for binary classification problems , When dealing with multiple types of problems , We need to construct a suitable multi class classifier .

at present , structure SVM There are two main methods of multi class classifier

(1) direct method , Modify directly on the objective function , The parameter solutions of multiple classification surfaces are combined into an optimization problem , By solving the optimization problem “ Disposable ” Implementation of multi class classification . This method seems simple , But its computational complexity is relatively high , It's more difficult to achieve , Only suitable for small problems ;

(2) indirect method , Mainly through the combination of multiple two classifiers to achieve the construction of multiple classifiers , Common methods are one-against-one and one-against-all Two kinds of .

One to many (one-versus-rest, abbreviation OVR SVMs)

During the training, the samples of a certain category are classified into one category in turn , The rest of the samples fall into another category , such k Samples of categories construct k individual SVM. In classification, the unknown samples are classified into the category with the maximum classification function value .

If I had four categories ( That is to say 4 individual Label), They are A、B、C、D.

So when I was extracting the training set , Separate extraction

(1)A The corresponding vector is a positive set ,B,C,D The corresponding vector is a negative set ;

(2)B The corresponding vector is a positive set ,A,C,D The corresponding vector is a negative set ;

(3)C The corresponding vector is a positive set ,A,B,D The corresponding vector is a negative set ;

(4)D The corresponding vector is a positive set ,A,B,C The corresponding vector is a negative set ;

Use these four training sets to train separately , Then we get four training result files .

During the test , The corresponding test vectors are tested by using the four training result files .

In the end, each test has a result f1(x),f2(x),f3(x),f4(x).

So the final result is the largest of the four values as the classification result .

evaluation :

There's a flaw in this approach , Because the training set is 1:M, In this case there is biased. So it's not very practical . When extracting data sets , One third of the complete negative set is taken as the training negative set .

One on one (one-versus-one, abbreviation OVO SVMs perhaps pairwise)

This is done by designing a... Between any two types of samples SVM, therefore k Samples of each category need to be designed k(k-1)/2 individual SVM.

When classifying an unknown sample , The last category with the most votes is the category of the unknown sample .

Libsvm The multi class classification in is based on this method .

Suppose there are four types A,B,C,D Four types of . In training, I choose A,B; A,C; A,D; B,C; B,D;C,D The corresponding vector is used as the training set , And then we get six training results , During the test , Test the six results with the corresponding vectors , And then take the form of a vote , Finally, we get a set of results .

The vote is like this :

A=B=C=D=0;

(A,B)-classifier If it is A win, be A=A+1;otherwise,B=B+1;

(A,C)-classifier If it is A win, be A=A+1;otherwise, C=C+1;

...

(C,D)-classifier If it is A win, be C=C+1;otherwise,D=D+1;

The decision is the Max(A,B,C,D)

evaluation : This method is good , But when there are many categories ,model The number of is n*(n-1)/2, The cost is still considerable .

边栏推荐

- use. Net analysis Net talent challenge participation

- OAI 5g nr+usrp b210 installation and construction

- 【DSP】【第一篇】开始DSP学习

- 性能测试过程和计划

- Mécanisme de fonctionnement et de mise à jour de [Widget Wechat]

- 'class file has wrong version 52.0, should be 50.0' - class file has wrong version 52.0, should be 50.0

- What is the difference between procedural SQL and C language in defining variables

- Can novices speculate in stocks for 200 yuan? Is the securities account given by qiniu safe?

- Math symbols in lists

- 数据湖(八):Iceberg数据存储格式

猜你喜欢

Redis insert data garbled solution

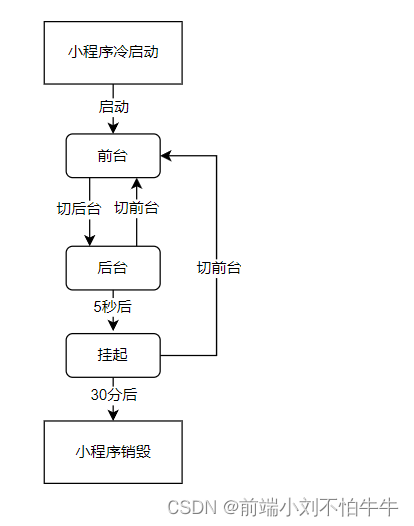

【微信小程序】運行機制和更新機制

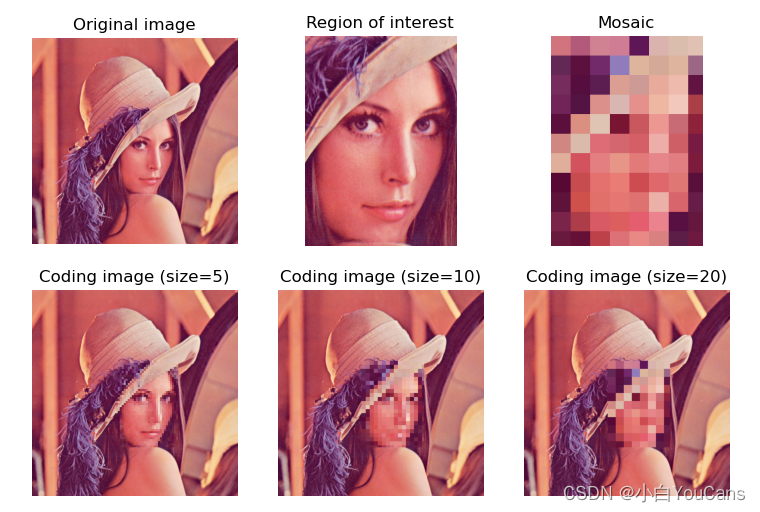

【OpenCV 例程200篇】220.对图像进行马赛克处理

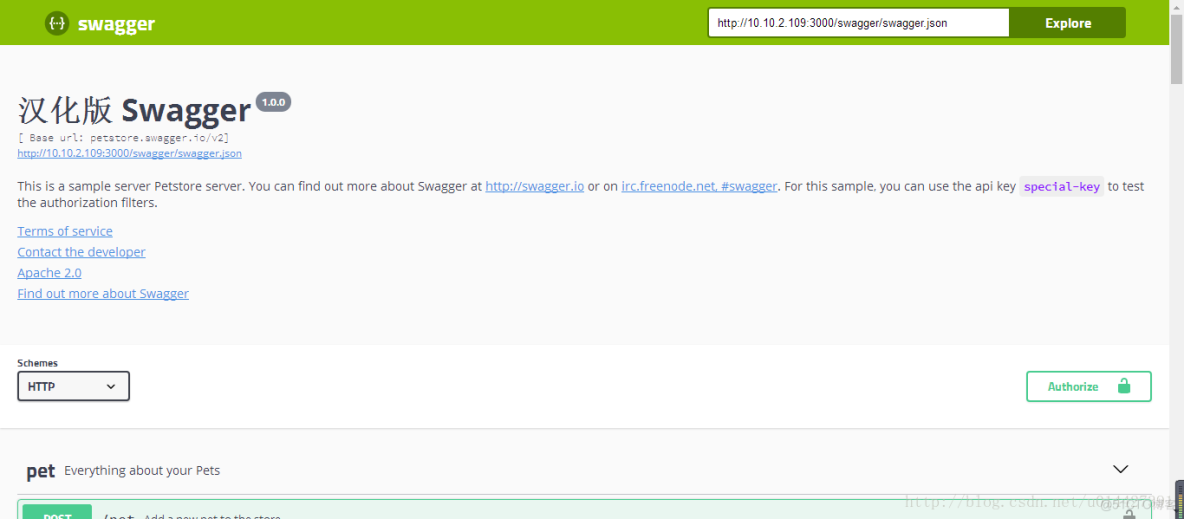

Swagger UI tutorial API document artifact

![[DIY]如何制作一款个性的收音机](/img/fc/a371322258131d1dc617ce18490baf.jpg)

[DIY]如何制作一款个性的收音机

Logic is a good thing

LLVM之父Chris Lattner:为什么我们要重建AI基础设施软件

Pycharm remote execution

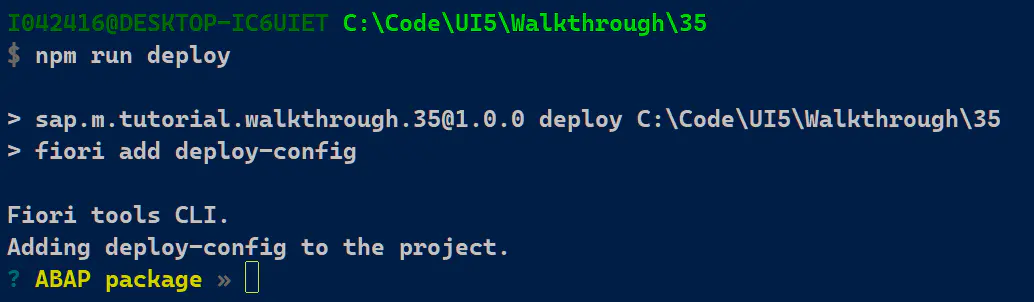

Introduction to the use of SAP Fiori application index tool and SAP Fiori tools

Intel 48 core new Xeon run point exposure: unexpected results against AMD zen3 in 3D cache

随机推荐

Recyclerview GridLayout bisects the middle blank area

[weekly pit] information encryption + [answer] positive integer factorization prime factor

[weekly pit] output triangle

Reinforcement learning - learning notes 5 | alphago

What is the difference between procedural SQL and C language in defining variables

Can novices speculate in stocks for 200 yuan? Is the securities account given by qiniu safe?

强化学习-学习笔记5 | AlphaGo

Intel 48 core new Xeon run point exposure: unexpected results against AMD zen3 in 3D cache

快过年了,心也懒了

数据湖(八):Iceberg数据存储格式

What are RDB and AOF

Infrared thermometer based on STM32 single chip microcomputer (with face detection)

性能测试过程和计划

Common English vocabulary that every programmer must master (recommended Collection)

每个程序员必须掌握的常用英语词汇(建议收藏)

c#使用oracle存储过程获取结果集实例

SSO single sign on

Swagger UI tutorial API document artifact

15 millions d'employés sont faciles à gérer et la base de données native du cloud gaussdb rend le Bureau des RH plus efficace

全网最全的知识库管理工具综合评测和推荐:FlowUs、Baklib、简道云、ONES Wiki 、PingCode、Seed、MeBox、亿方云、智米云、搜阅云、天翎