What key progress has been made in deep learning in 2021?

2022-07-06 20:35:00 【woshicver】

Link: https://www.zhihu.com/question/504050716

Editor: Deep Learning and Computer Vision

Disclaimer: shared for academic purposes only; will be removed on request in case of infringement.

Author: Astral evil

https://www.zhihu.com/question/504050716/answer/2280529580

I wouldn't presume to judge; this is just my personal opinion. Someone on GitHub compiled a list of 2021's amazing AI papers, which I think is fairly balanced and can basically be taken as a collection of this year's most influential papers: https://github.com/louisfb01/best_AI_papers_2021

In my view, the first is that Transformers have taken over every field, with Swin Transformer in particular beating all comers. The second is the release of large pre-trained models by major research institutions and their striking performance on downstream tasks, which of course is inseparable from self-supervised learning plus Transformers. The third is MAE, which everyone has mentioned, and which again is inseparable from Transformers. Another important development, I think, is that NeRF-based work also began to explode this year, including the CVPR best paper GIRAFFE, although this work is concentrated mainly in research teams outside China.

Author: Riser

https://www.zhihu.com/question/504050716/answer/2285962009

I just read Andrew Ng's Christmas message, "Give a rose, and the fragrance lingers on your hand," which reviews the AI community's development in 2021 and looks ahead to its future.

Original link: https://read.deeplearning.ai/the-batch/issue-123/

Andrew Ng mainly covered the take-off of multimodal AI, large models with trillions of parameters, the Transformer architecture, AI-generated audio content, and the enactment of AI-related laws. The first three topics are also ones I follow, so I'll share a bit of my own understanding alongside his remarks.

Personally, I think OpenAI's CLIP is the outstanding representative of multimodal AI in 2021. It models image classification as image-text matching, using the large amount of text available on the Internet to supervise image tasks. I feel that "text + images", or even "text + images + knowledge graphs", is a direction with good prospects, and many labs have already started research in this area. In addition, OpenAI's DALL·E (generates images from input text), DeepMind's Perceiver IO (classifies text, images, video, and point clouds), and Stanford's ConVIRT (adds text labels to medical X-ray images) are also good starts on this topic.
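To make the "classification as image-text matching" idea concrete, here is a minimal sketch in plain Python. It is not CLIP's actual code: the three-dimensional embeddings are made-up toy values standing in for the outputs of an image encoder and a text encoder, and the function names are hypothetical. The temperature of 0.07 is an assumed scaling value.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_emb, text_embs, temperature=0.07):
    """Score one image embedding against each candidate caption embedding.

    The class is whichever caption matches the image best; no classifier
    head is trained on the label set.
    """
    img = l2_normalize(image_emb)
    sims = []
    for text_emb in text_embs:
        txt = l2_normalize(text_emb)
        cosine = sum(a * b for a, b in zip(img, txt))
        sims.append(cosine / temperature)
    return softmax(sims)

# Toy data (assumption): in practice these come from trained encoders.
labels = ["a photo of a cat", "a photo of a dog", "a photo of a car"]
text_embs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
image_emb = [0.8, 0.3, 0.1]

probs = zero_shot_classify(image_emb, text_embs)
best = labels[max(range(len(labels)), key=probs.__getitem__)]
print(best)
```

Because the labels are just captions, changing the label set only means changing the list of strings that get embedded, which is what makes web text usable as supervision for image tasks.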

Clearly, over the past year models have gone from large to even larger.

From Google's 1.6-trillion-parameter Switch Transformer to the Beijing Academy of Artificial Intelligence's 1.75-trillion-parameter Wu Dao 2.0, the ceiling on model scale was raised again and again. Whatever their size, their original motivation is the same as BERT's: to provide a more general and better pre-trained language model for many downstream tasks. Perhaps this idea of "general learning" will also carry over to CV (in fact, many of the tasks we work on already transfer ImageNet pre-trained models). A higher-level general CV model may require us to think about the characteristics of image data and about self-supervised training schemes.

The other trend is Transformers running rampant at the top vision and machine learning conferences. Swin Transformer, standing on the shoulders of predecessors such as ViT and DETR, took the ICCV 2021 best paper award and demonstrated the applicability of Transformers to visual tasks. In audio, text, and other sequence tasks, Transformers have essentially ended the reign of RNNs, and this year we saw them begin to challenge the dominance of CNNs in vision; of course, an organic combination of the two is also a hot and promising direction. DeepMind released an open-source version of AlphaFold 2, which uses Transformers to predict a protein's 3D structure from its amino acid sequence; it stunned the medical community and made an outstanding contribution to the life sciences. All of this shows that the Transformer generalizes well, and we also look forward to more and better model architectures that solve more problems.

Another thing that cannot be ignored is the explosion of work based on NeRF (Neural Radiance Fields), which has come close to dominating topics such as 3D reconstruction. Strictly speaking, NeRF is a 2020 work, and I always felt it was a pity that it did not win the ECCV best paper that year (of course RAFT is also very strong). GIRAFFE taking the CVPR 2021 best paper award makes up for that regret.

All in all, AI research in 2021 was still exciting. Let's look forward to, and experience, the development of AI in 2022!

Author: Anonymous user

https://www.zhihu.com/question/504050716/answer/2280944226

On the theory side, it feels like most work is just padding publication counts. The only work that might count as key progress is Proxy Convexity: A Unified Framework for the Analysis of Neural Networks Trained by Gradient Descent (Frei & Gu, 2021). This paper is a culmination of deep learning optimization theory.

Author: Grey pupil sextant

https://www.zhihu.com/question/504050716/answer/2280495756

The areas I follow are relatively niche, and nothing there struck me as especially amazing.

Among the popular work, MAE is really interesting, but I still feel that masking is not as natural there as it is in NLP.

I also feel that the future of self-supervised pre-training in CV is not as close as people think; I have a vague sense that it will be tied to 3D reconstruction from 2D images.

Author: Anonymous user

https://www.zhihu.com/question/504050716/answer/2279821079

For me the key work is CLIP. I find CLIP more interesting than ViT. Of course, ViT also opened up a very important direction: rethinking architecture for vision tasks.

DALL·E is a very impressive work. There was also a lot of GAN work, such as StyleGAN3 and GauGAN2, and plenty of NeRF follow-ups.

Beyond that there is SSL, but I don't think it was an essential breakthrough. Even MAE only shows that the earlier idea of self-reconstruction is very effective for ViT backbones.
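For readers unfamiliar with the self-reconstruction setup mentioned above, the masking step can be sketched roughly as follows. This is only an illustration in plain Python, not MAE's implementation: `random_patch_mask` is a hypothetical helper, the seed is arbitrary, and the 196 patches assume a 224x224 image cut into 16x16 patches. The 75% mask ratio follows the high masking ratio reported for MAE.

```python
import random

def random_patch_mask(num_patches, mask_ratio=0.75, seed=0):
    """Randomly split patch indices into a visible set and a masked set.

    The encoder would see only the visible patches; a decoder would be
    trained to reconstruct the pixels of the masked ones.
    """
    rng = random.Random(seed)
    order = list(range(num_patches))
    rng.shuffle(order)
    num_keep = int(num_patches * (1 - mask_ratio))
    visible = sorted(order[:num_keep])
    masked = sorted(order[num_keep:])
    return visible, masked

# 14 x 14 = 196 patches (assumption: 224x224 input, 16x16 patch size).
visible, masked = random_patch_mask(196)
print(len(visible), len(masked))
```

The design point is that the training signal comes from the image itself: no labels are needed, only the hidden patches to reconstruct.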

Author: Cat eat fish

https://www.zhihu.com/question/504050716/answer/2279784861

On seeing this question, the first thing that comes to mind is probably this year's ICCV best paper: Swin Transformer. The paper builds on ViT (Vision Transformer), which is currently popular as the way to apply Transformers in CV.

At this year's top computer vision conferences, CVPR and ICCV, a large share of the papers used Transformers, so Transformer use in CV looks set to surge. Swin Transformer is the peak of this line of work; there is probably no architecture in CV that currently outperforms it.

So I think Swin Transformer can be called the key progress in deep learning this year.

边栏推荐

- Deep learning classification network -- zfnet

- 5. 無線體內納米網:十大“可行嗎?”問題

- [network planning] Chapter 3 data link layer (3) channel division medium access control

- Leetcode question 448 Find all missing numbers in the array

- Trends of "software" in robotics Engineering

- Rhcsa Road

- use. Net analysis Net talent challenge participation

- B-杰哥的树(状压树形dp)

- 知识图谱构建流程步骤详解

- Tencent cloud database public cloud market ranks top 2!

猜你喜欢

随机推荐

New generation garbage collector ZGC

永磁同步电机转子位置估算专题 —— 基波模型类位置估算概要

Boder radius has four values, and boder radius exceeds four values

Problems encountered in using RT thread component fish

Special topic of rotor position estimation of permanent magnet synchronous motor -- Summary of position estimation of fundamental wave model

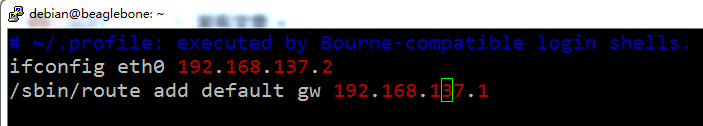

BeagleBoneBlack 上手记

Is it difficult for small and micro enterprises to make accounts? Smart accounting gadget quick to use

知识图谱之实体对齐二

Logic is a good thing

Jupyter launch didn't respond after Anaconda was installed & the web page was opened and ran without execution

5. Nano - Net in wireless body: Top 10 "is it possible?" Questions

自定义限流注解

Rhcsa Road

Node.js: express + MySQL实现注册登录,身份认证

Recyclerview GridLayout bisects the middle blank area

Function optimization and arrow function of ES6

Groovy basic syntax collation

Rhcsa Road

Leetcode question 283 Move zero

In line elements are transformed into block level elements, and display transformation and implicit transformation

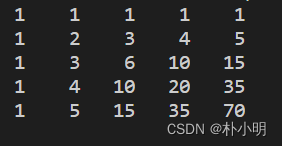

![[weekly pit] output triangle](/img/d8/a367c26b51d9dbaf53bf4fe2a13917.png)

![[DIY]自己设计微软MakeCode街机,官方开源软硬件](/img/a3/999c1d38491870c46f380c824ee8e7.png)

![[DIY]如何制作一款個性的收音機](/img/fc/a371322258131d1dc617ce18490baf.jpg)