
Integrating the ONgDB graph database with Spark

2022-07-04 16:40:00 【Ma Chao's blog】

Quickly explore graph data and graph computing

Graph computing is a technique for fully describing, computing on, and analyzing things in the real world and the relationships between them. It depends on an underlying graph data model, and Spark is a popular, mature, and stable engine for running computation and analysis on top of that model. This article covers integrating ONgDB with Spark and walks through a concrete setup. (Analyzing graph data with deep learning frameworks such as TensorFlow is out of scope here. In the graph database field, integrating with Spark is a popular approach, and it can handle basic graph computation and pre-training before the results are handed to TensorFlow.) Downloading the source code of the case project can help newcomers get started quickly and avoid common pitfalls. The general process is to install the graph database plugin on the Spark cluster first, then use the connector's API to write the graph analysis code.

Installing the neo4j-spark plugin on the Spark cluster

- Download the component

https://github.com/ongdb-contrib/neo4j-spark-connector/releases/tag/2.4.1-M1

- Place the downloaded component in the jars folder of the Spark installation directory

E:\software\ongdb-spark\spark-2.4.0-bin-hadoop2.7\jars

Basic component dependency information

- Version information

Spark 2.4.0 http://archive.apache.org/dist/spark/spark-2.4.0/

ONgDB 3.5.x

Neo4j-Java-Driver 1.7.5

Scala 2.11

JDK 1.8

hadoop-2.7.7

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

neo4j-spark-connector-full-2.4.1-M1 https://github.com/neo4j-contrib/neo4j-spark-connector

- Installation packages to download

hadoop-2.7.7

spark-2.4.0-bin-hadoop2.7

winutils

neo4j-spark-connector-full-2.4.1-M1 (put the jar in the spark/jars folder)

scala-2.11.12
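Once the packages are unpacked, a quick check of the folder layout can catch the most common setup mistakes. The following is a minimal Python sketch, not part of the case project. It assumes that `SPARK_HOME` and `HADOOP_HOME` point at the unpacked Spark and Hadoop folders, that the connector file name starts with `neo4j-spark-connector` and ends in `.jar`, and that on Windows `winutils.exe` lives under `HADOOP_HOME\bin`, which is where Spark looks for it. The function name `check_spark_layout` is hypothetical.

```python
import os
from pathlib import Path


def check_spark_layout(spark_home, hadoop_home):
    """Return a list of problems; an empty list means the layout looks fine."""
    problems = []

    # The connector jar should sit next to Spark's own jars.
    jars_dir = Path(spark_home) / "jars"
    if not jars_dir.is_dir() or not any(jars_dir.glob("neo4j-spark-connector*.jar")):
        problems.append(f"no neo4j-spark-connector jar found in {jars_dir}")

    # Assumption: Windows setup, where Spark expects HADOOP_HOME\bin\winutils.exe.
    winutils = Path(hadoop_home) / "bin" / "winutils.exe"
    if not winutils.is_file():
        problems.append(f"missing {winutils}")

    return problems


if __name__ == "__main__":
    # Both environment variables are assumptions about the local setup.
    spark_home = os.environ.get("SPARK_HOME", "")
    hadoop_home = os.environ.get("HADOOP_HOME", "")
    for problem in check_spark_layout(spark_home, hadoop_home):
        print("WARN:", problem)
```

Run it after setting both environment variables. No output means both files were found where this sketch expects them.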

Create test data

UNWIND range(1,100) as id

CREATE (p:Person {id:id}) WITH collect(p) as people

UNWIND people as p1

UNWIND range(1,10) as friend

WITH p1, people[(p1.id + friend) % size(people)] as p2

CREATE (p1)-[:KNOWS {years: abs(p2.id - p1.id)}]->(p2);

FOREACH (x in range(1,1000000) | CREATE (:Person {name:"name"+x, age: x%100}));

UNWIND range(1,1000000) as x

MATCH (n),(m) WHERE id(n) = x AND id(m)=toInt(rand()*1000000)

CREATE (n)-[:KNOWS]->(m);
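To see what the first statement produces without starting a database, its logic can be mirrored in plain Python. This is only an illustrative sketch. It assumes that `collect(p)` keeps the nodes in creation order, and that the `years` property is meant to be the absolute difference between the two persons' ids. The function name `build_knows_edges` is hypothetical.

```python
def build_knows_edges(num_people=100, friends_per_person=10):
    """Mirror the first Cypher statement as (p1_id, p2_id, years) tuples."""
    # Assumption: collect(p) keeps creation order, so list index i holds id i + 1.
    people = list(range(1, num_people + 1))
    edges = []
    for p1 in people:                                     # UNWIND people AS p1
        for friend in range(1, friends_per_person + 1):   # UNWIND range(1,10) AS friend
            # Cypher list indexing is zero-based, as in Python.
            p2 = people[(p1 + friend) % len(people)]
            # Assumption: years is intended as abs(p2.id - p1.id).
            edges.append((p1, p2, abs(p2 - p1)))
    return edges


edges = build_knows_edges()
print(len(edges))  # 1000
print(edges[0])    # (1, 3, 2)
```

Each of the 100 Person nodes gets 10 outgoing KNOWS relationships, so the first statement creates 1000 relationships in total. Because the list index is zero-based while ids start at 1, person 1 is first linked to person 3 rather than person 2.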

Notes

- Case project (to avoid pitfalls, you can refer to this mixed Java-Scala case project)

https://github.com/ongdb-contrib/ongdb-spark-java-scala-example

If the dependency packages fail to download, check whether the Spark dependency JARs can be downloaded normally from the following site:

http://dl.bintray.com/spark-packages/maven

- Screenshot of the case project (start Spark locally before running it)

- For the installation of the related components and other references, see the original article

边栏推荐

- What does IOT engineering learn and work for?

- 基于check-point实现图数据构建任务

- 《吐血整理》保姆级系列教程-玩转Fiddler抓包教程(2)-初识Fiddler让你理性认识一下

- CMPSC311 Linear Device

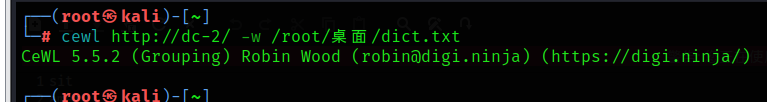

- DC-2靶场搭建及渗透实战详细过程(DC靶场系列)

- Can I "reverse" a Boolean value- Can I 'invert' a bool?

- Digital recognition system based on OpenCV

- What is torch NN?

- Big God explains open source buff gain strategy live broadcast

- Accounting regulations and professional ethics [11]

猜你喜欢

Cut! 39 year old Ali P9, saved 150million

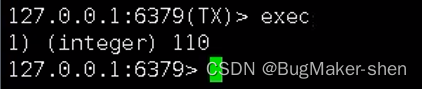

Redis' optimistic lock and pessimistic lock for solving transaction conflicts

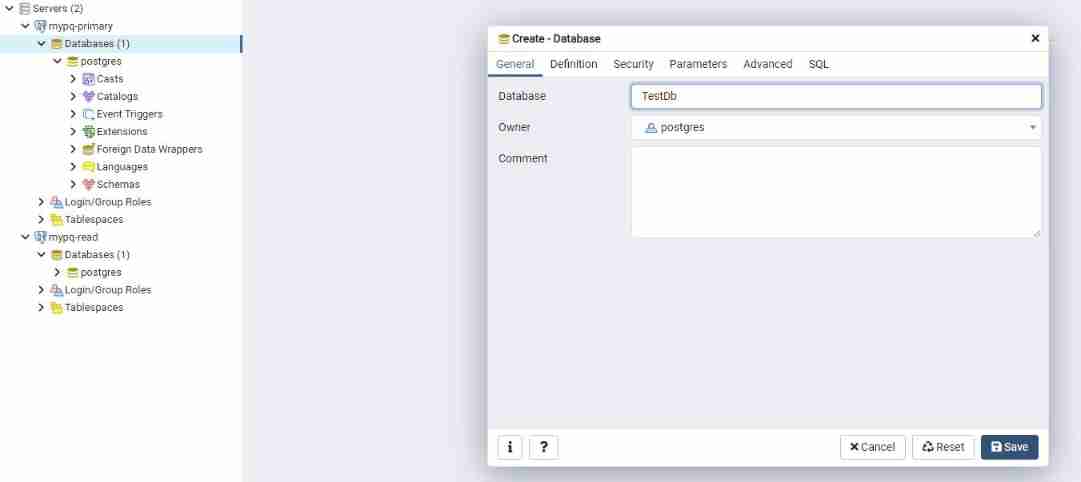

Talking about Net core how to use efcore to inject multiple instances of a context annotation type for connecting to the master-slave database

Detailed process of DC-2 range construction and penetration practice (DC range Series)

程序员怎么才能提高代码编写速度?

《吐血整理》保姆级系列教程-玩转Fiddler抓包教程(2)-初识Fiddler让你理性认识一下

![[hcie TAC] question 5 - 1](/img/e0/1b546de7628695ebed422ae57942e4.jpg)

[hcie TAC] question 5 - 1

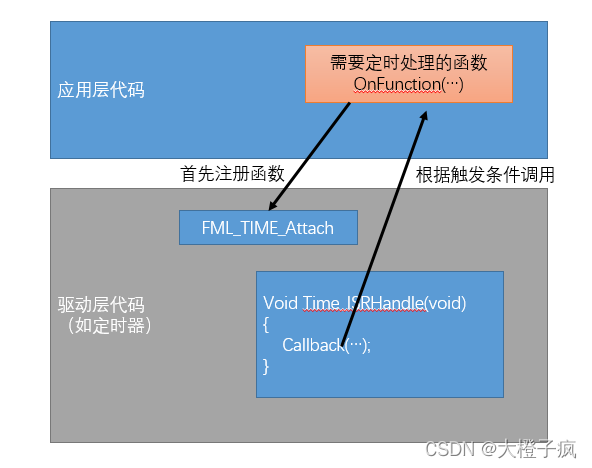

嵌入式软件架构设计-函数调用

Scientific research cartoon | what else to do after connecting with the subjects?

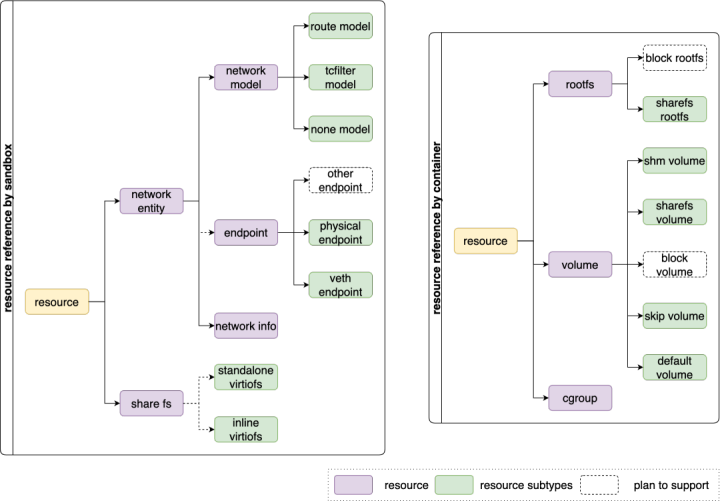

多年锤炼,迈向Kata 3.0 !走进开箱即用的安全容器体验之旅| 龙蜥技术

随机推荐

Model fusion -- stacking principle and Implementation

Daily notes~

DIY a low-cost multi-functional dot matrix clock!

error: ‘connect‘ was not declared in this scope connect(timer, SIGNAL(timeout()), this, SLOT(up

Research Report on market supply and demand and strategy of China's four sided flat bag industry

.Net 应用考虑x64生成

[North Asia data recovery] data recovery case of database data loss caused by HP DL380 server RAID disk failure

[flask] ORM one to many relationship

. Net delay queue

Big God explains open source buff gain strategy live broadcast

Market trend report, technical innovation and market forecast of taillight components in China

Vscode prompt Please install clang or check configuration 'clang executable‘

高度剩余法

China Indonesia adhesive market trend report, technological innovation and market forecast

Recommend 10 excellent mongodb GUI tools

Opencv learning -- arithmetic operation of image of basic operation

基于check-point机制的任务状态回滚和数据分块任务

Web components series - detailed slides

Expression #1 of ORDER BY clause is not in SELECT list, references column ‘d.dept_ no‘ which is not i

The four most common errors when using pytorch