
Deep learning classification networks: Network in Network

2022-07-02 06:00:00 【occasionally.】

Summary of deep learning classification networks


Preface

Network in Network (NIN) puts forward two important ideas:

- 1×1 convolution enables cross-channel information interaction and adds nonlinear expressive power.

- With local modeling strengthened, the fully connected layers can be replaced by global average pooling, which is more interpretable and reduces overfitting.

These two ideas were later adopted by the Inception series of networks, ResNet, and the CAM visualization technique.

1. Network structure

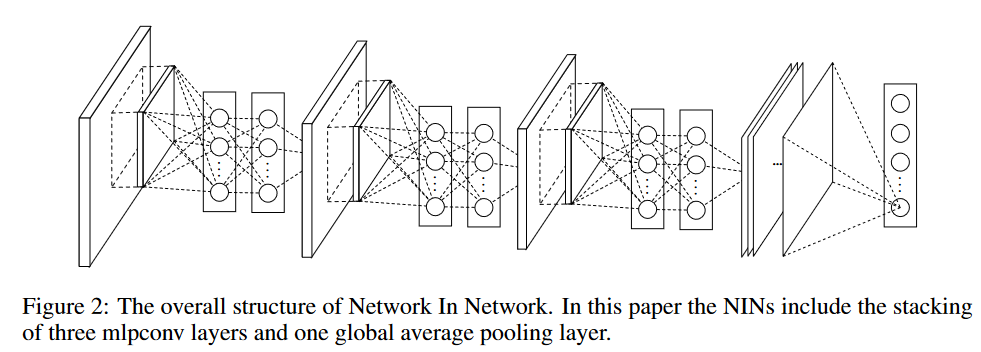

The network structure is fairly simple: three mlpconv layers followed by a global average pooling layer. In practice, each mlpconv layer is implemented as a 3×3 convolution followed by two 1×1 convolutions.
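As a rough illustration of this layout, the sketch below lists the nine convolutions of three stacked mlpconv blocks and counts their parameters. The channel widths (96, 192), the 3-channel input, and the 10 output classes are assumptions chosen for illustration, not values from the post; the helper names `mlpconv_spec` and `conv_params` are hypothetical, and any pooling between blocks is left out.

```python
# Each layer is described as (kernel_size, in_channels, out_channels).
# All channel widths below are hypothetical; the post only fixes the layer pattern.

def mlpconv_spec(c_in, c_mid, c_out):
    """One mlpconv block: a 3x3 convolution followed by two 1x1 convolutions."""
    return [(3, c_in, c_mid), (1, c_mid, c_mid), (1, c_mid, c_out)]

def conv_params(kernel, c_in, c_out):
    """Number of weights plus biases in a kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out + c_out

num_classes = 10  # assumed number of categories

# Three mlpconv blocks; the last one outputs one feature map per class,
# so the global average pooling layer that follows adds no parameters.
layers = (
    mlpconv_spec(3, 96, 96)
    + mlpconv_spec(96, 192, 192)
    + mlpconv_spec(192, 192, num_classes)
)

total_params = sum(conv_params(*layer) for layer in layers)
print(len(layers), "convolutions,", total_params, "parameters")
```

Because the final block already produces one map per category, the whole classifier consists of convolutions only.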

2. Key points

2.1 mlpconv

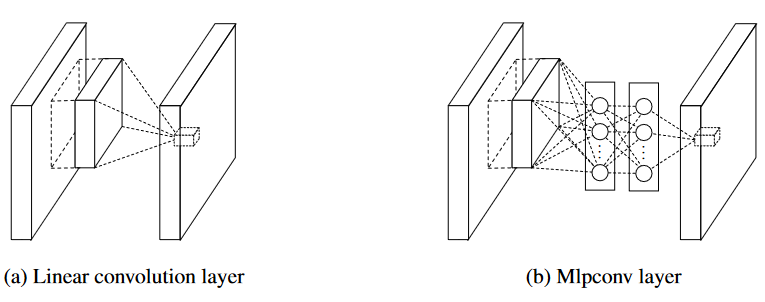

The visualization technique used in ZFNet showed that activation values in a feature map correspond to latent concepts (category information): the higher the activation, the more likely the corresponding input patch contains the latent concept. When the latent concepts are linearly separable, a conventional linear convolution followed by a nonlinear activation is enough to identify them. However, the confidence of a latent concept is usually a highly nonlinear function of the input. The authors therefore replace the linear convolution plus nonlinear activation with a micro network, to strengthen local modeling of latent concepts. They instantiate the micro network as a multilayer perceptron (MLP), because an MLP is a universal function approximator and a neural network that can be trained by backpropagation. The layer built on this MLP micro network is named mlpconv.

A figure quoted from another blog post makes the mlpconv layer clear at a glance.
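To make the "MLP inside a convolution" idea concrete, here is a minimal NumPy sketch, assuming ReLU activations and arbitrary small sizes (8, 16, and 4 channels on a 5×5 map). It shows that two stacked 1×1 convolutions compute exactly what a small MLP would compute on the channel vector at each pixel. The function names `conv1x1` and `relu` are illustrative helpers, not part of any library.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1x1 convolution on a (C_in, H, W) feature map.

    weight has shape (C_out, C_in) and bias has shape (C_out,);
    every pixel's channel vector is multiplied by the same matrix.
    """
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 5))   # stand-in for the 3x3 convolution output
w1, b1 = rng.standard_normal((16, 8)), rng.standard_normal(16)
w2, b2 = rng.standard_normal((4, 16)), rng.standard_normal(4)

# Two 1x1 convolutions with ReLU: the "MLP" part of an mlpconv layer.
y = relu(conv1x1(relu(conv1x1(x, w1, b1)), w2, b2))

# The same computation written as a two-layer MLP on one pixel's channels.
pixel = x[:, 2, 3]
y_pixel = relu(w2 @ relu(w1 @ pixel + b1) + b2)

assert np.allclose(y[:, 2, 3], y_pixel)
```

The weights are shared across all spatial positions, which is why the operation mixes information across channels without looking at neighboring pixels.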

2.2 Global average pooling

NIN does not use traditional fully connected layers for classification. Instead, a global average pooling layer takes the average of each feature map from the last mlpconv layer as the confidence of a category, and the resulting vector is fed into the softmax layer. This has two advantages:

- More interpretable: category information is obtained directly by global average pooling of the feature maps, which strengthens the correspondence between feature maps and categories. (The CAM visualization technique draws on this idea.)

- Avoids overfitting: global average pooling is itself a structural regularizer, which helps prevent overfitting of the overall structure.
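The classification head described above can be sketched in a few lines of NumPy. The sizes (10 classes, 6×6 feature maps) and the random input are assumptions for illustration; `global_average_pool` and `softmax` are small helpers written here, not library calls. The last line counts what a fully connected classifier on the same flattened maps would cost, for comparison.

```python
import numpy as np

def global_average_pool(feature_maps):
    """Average each (H, W) map to a single value: (C, H, W) -> (C,)."""
    return feature_maps.mean(axis=(1, 2))

def softmax(z):
    z = z - z.max()          # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

num_classes, h, w = 10, 6, 6  # hypothetical sizes
rng = np.random.default_rng(0)
# Stand-in for the last mlpconv output: one feature map per category.
maps = rng.standard_normal((num_classes, h, w))

scores = global_average_pool(maps)  # one confidence value per category
probs = softmax(scores)             # class probabilities

# Weights and biases a fully connected layer would need on the flattened maps;
# global average pooling has no trainable parameters at all.
fc_params = num_classes * h * w * num_classes + num_classes
```

Since each score is just the mean of one feature map, that map can be read directly as spatial evidence for its category, which is the property the interpretability point relies on.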

References

[1] Lin M, Chen Q, Yan S. Network in network[J]. arXiv preprint arXiv:1312.4400, 2013.

[2] [Intensive reading] Network In Network (1×1 convolution layer instead of FC layer; global average pooling)

边栏推荐

- "Simple" infinite magic cube

- 【LeetCode】Day92-盛最多水的容器

- Stc8h8k series assembly and C51 actual combat - serial port sending menu interface to select different functions

- PHP extensions

- Software testing - concept

- Stc8h8k series assembly and C51 actual combat - digital display ADC, key serial port reply key number and ADC value

- Several keywords in C language

- 页面打印插件print.js

- php继承(extends)

- OLED12864 液晶屏

猜你喜欢

RGB infinite cube (advanced version)

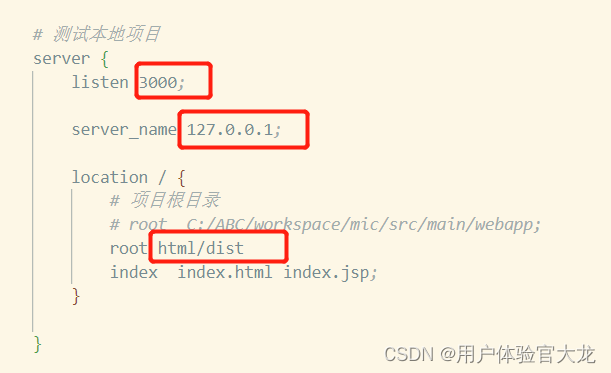

Can't the dist packaged by vite be opened directly in the browser

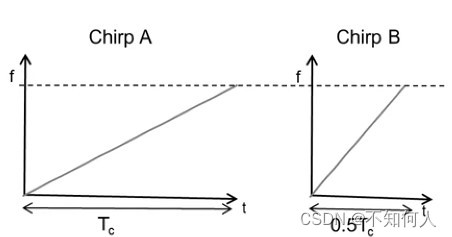

Ti millimeter wave radar learning (I)

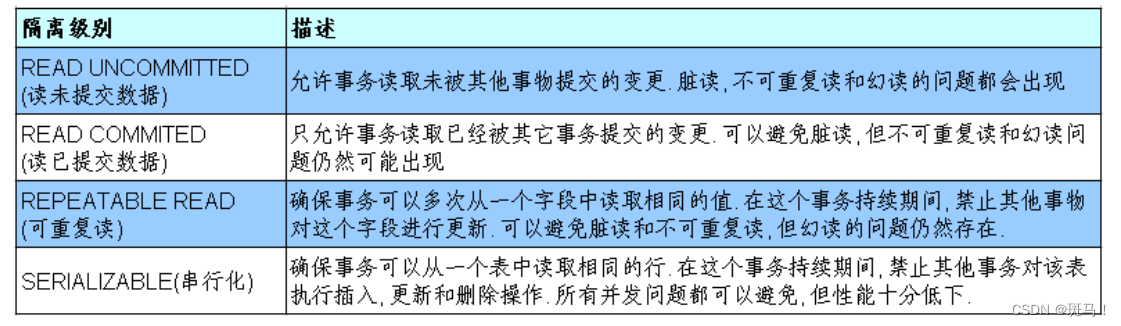

mysql事务和隔离级别

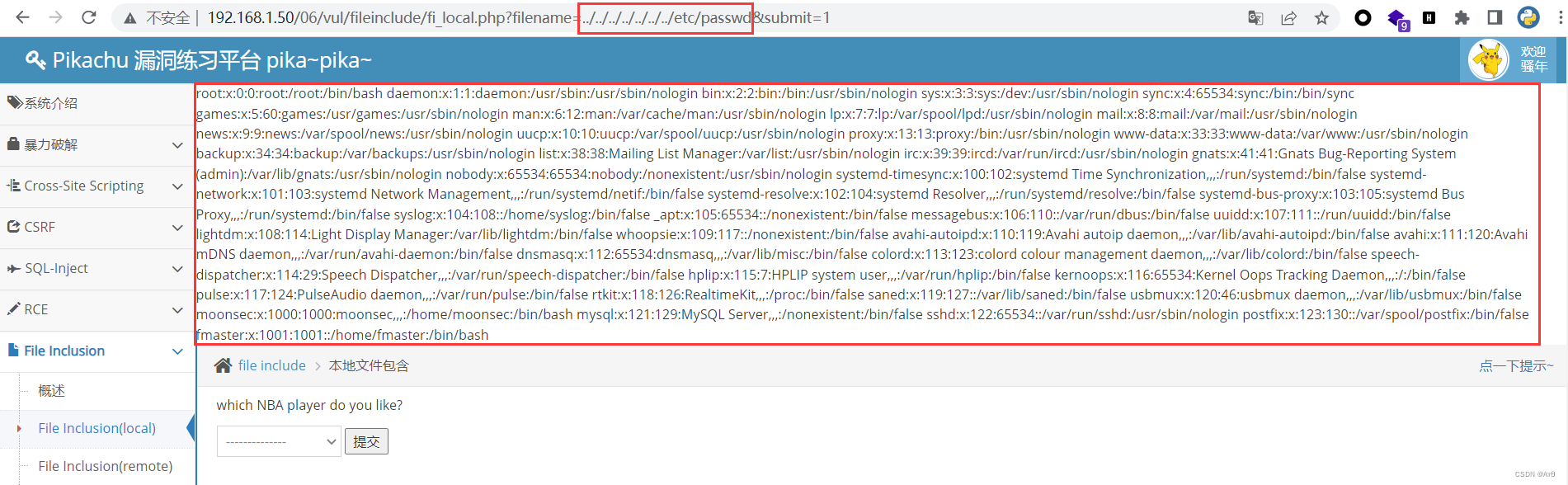

File contains vulnerability (I)

Keepalived installation, use and quick start

数理统计与机器学习

深度学习分类网络--VGGNet

Detailed notes of ES6

Shenji Bailian 3.54-dichotomy of dyeing judgment

随机推荐

Redis Key-Value数据库【初级】

[C language] simple implementation of mine sweeping game

图片裁剪插件cropper.js

页面打印插件print.js

Common websites for Postgraduates in data mining

cookie插件和localForage离线储存插件

Redis Key-Value数据库 【高级】

Software testing - concept

PHP array to XML

Vite打包后的dist不能直接在浏览器打开吗

ROS2----LifecycleNode生命周期节点总结

Go language web development is very simple: use templates to separate views from logic

神机百炼3.52-Prim

Mock simulate the background return data with mockjs

php按照指定字符,获取字符串中的部分值,并重组剩余字符串为新的数组

Matplotlib double Y axis + adjust legend position

正则表达式总结

File contains vulnerabilities (II)

php父类(parent)

如何使用MITMPROXy