当前位置:网站首页>dynamic programming

dynamic programming

2022-07-07 00:27:00 【Evergreen AAS】

Dynamic programming

Dynamic programming (Dynamic Programming, abbreviation DP) It is a method to solve complex problems by decomposing the original problem into relatively simple subproblems .

Dynamic programming is often applied to problems with the following properties :

- With optimal substructure (Optimal substructure)

- Principle of optimality applies

- Optimal solution can be decomposed into subproblems

- Overlapping subproblems (Overlapping subproblems)

- Subproblems recur many times

- Solutions can be cached and reused

Dynamic programming usually takes much less time than simple solution .

Markov decision process MDP Meet the above two properties :

- Behrman equation provides the structure of recursive decomposition ;

- The value function can save and reuse the results of recursion .

Use dynamic programming to solve MDP/MRP

Dynamic planning needs to meet MDP The process is known (model-based).

- For Predict:

- Input:MDP And strategy π Or is it MRP

- Output: Value function v~π~

- For Control:

- Input:MDP

- Output: The optimal value function v∗ Or the optimal strategy π∗

Strategy assessment

Strategy assessment (Policy Evaluation) It refers to calculating the value of a given strategy , Here's the problem “How to evaluate a policy”.

The idea of strategy evaluation : The iteration uses the Behrman expectation equation ( About MDP For the form of Behrman's expectation equation, see 《 Markov decision process 》).

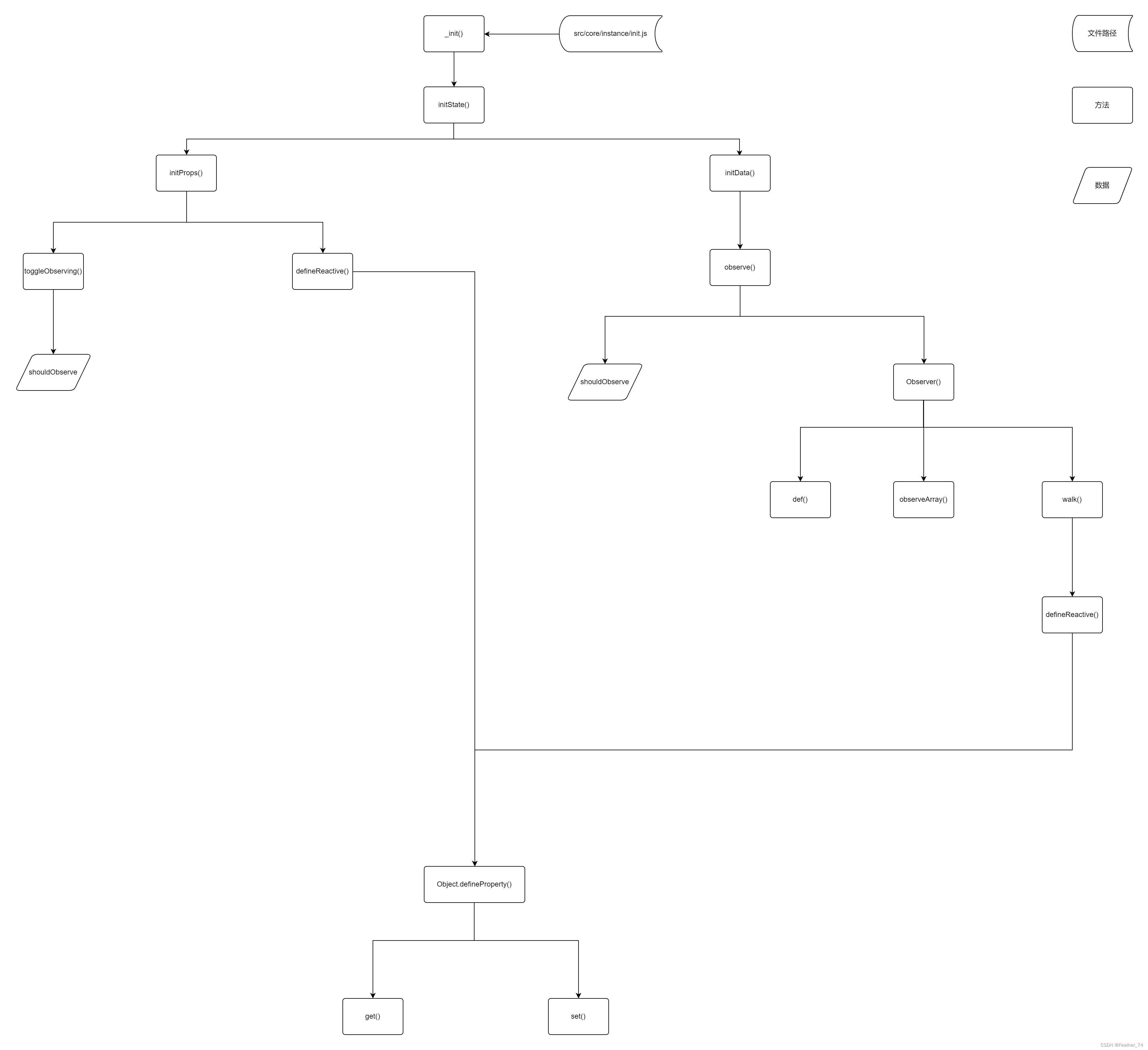

The strategy evaluation process is shown in the figure below :

v_{k+1} = \sum_{a\in A}\pi(a|s) \Bigl( R_{s}^a + \gamma\sum_{s'\in S}P_{ss'}^a v_{k}(s') \Bigr)

Use vector form to express :

\mathbf{v^{k+1}} = \mathbf{R^{\pi}} + \gamma \mathbf{P^{\pi}v^{k}}

Strategy iteration

Strategy iteration (Policy Iteration, abbreviation PI) Here's the problem “How to improve a policy”.

Given a strategy π :

- Assessment strategy π

v_{\pi}(s) = E[R_{t+1} + \gamma R_{t+2} + ...| S_t = s]

- Promotion strategy : Improve strategy by adopting greedy methods : \pi ' = \text{greedy}(v_{\pi})

Can prove that , The strategy iteration can always converge to the optimal strategy , namely π′=π∗

Policy iterations can be formally described using the following figure :

Generalized strategy iteration

Through the strategic evaluation mentioned above, it is not difficult to find , Strategy evaluation is an iterative process :

v_{\pi}(s) = E[R_{t+1} + \gamma R_{t+2} + ...| S_t = s]

So here comes the question ,Does policy evaluation need to converge to vπvπ? Can we introduce a stop rule or specify in the iteration kk Stop policy evaluation after times ? Think more about , Why don't we improve the strategy in each iteration of strategy evaluation ( Equivalent to strategy evaluation iteration 1 Stop after )? notes : This is equivalent to the value iteration to be introduced later .

Therefore, we can generalize the above strategy iteration process , That is, generalized strategy iteration (Generalised Policy Iteration, abbreviation GPI) frame :

Value iteration

Before introducing value iteration , Let's first introduce the principle of optimization .

The principle of optimization

The principle of optimization (Principle of Optimality) Definition :

The optimal decision of a process has such properties : That is, regardless of its initial state and initial decision , For the process of taking the state formed by the first decision as the initial state , Must constitute an optimal strategy .

If the optimization principle is described in a little mathematical language, it is :

In state ss As the starting point , Strategy π(a|s) The optimal value can be obtained v_{\pi}(s) = v_*(s) If and only if :

- Any state s′ For the State s Can reach ;

- In state s′ As the starting point , Strategy π The optimal value can be obtained v_{\pi}(s’) = v_*(s’)

According to the optimization principle , If we get the solution of the subproblem v∗(s′)v∗(s′), Then in the state ss Is the optimal solution of the starting point v∗(s)v∗(s) You can step back (one-step lookahead) Can get :

v_*(s) ← \max_{a\in A}\Bigl(R_s^a + \gamma \sum_{s'\in S}P_{ss'}^{a}v_*(s') \Bigr)

in other words , We can go back from the end to get the optimal solution , Value iteration is to update iteratively based on the above idea .

MDP Value iteration

Value iteration (Value Iteration, abbreviation VI) The problem to be solved is also “Find optimal policy ππ”. But different from the strategy iteration using Behrman expectation equation , Value iteration uses the bellman optimal equation for iterative lifting .

The difference between value iteration and policy iteration is :

- Use Bellman optimal function, rather than Bellman expectation function

- Unlike policy iteration, there is no explicit policy

- Intermediate value functions may not correspond to any policy

As shown in the figure below :

v_{k+1}(s) = \max_{a\in A}\Bigl(R_s^a + \gamma\sum_{s'\in S}P_{ss'}^a v_k(s') \Bigr)

The corresponding vector is expressed as :

\mathbf{v}_{k+1} = \max_{a\in A}\mathbf{R}^a + \gamma \mathbf{P^av}^k

The following figure is a summary of the three methods :

Dynamic programming extension

Asynchronous dynamic programming (Asynchronous Dynamic Programming)

- In-place dynamic programming

- Prioritised sweeping

- Real-time dynamic programming

Full-Width Backups vs. Sample Backups

Full-Width Backups

- DP uses full-width backups(DP is model-based)

- Every successor state and action is considered

- Using knowledge of the MDP transitions and reward function

- DP is effective for medium-sized problems (millions of states)

- For large problems, DP suffers Bellman’s curse of dimensionality( Dimension disaster )

Dimension disaster :Number of states n=|S| grows exponentially with number of state variables

- Even one backup can be too expensive

Sample Backups

The timing difference method to be discussed later

- Using sample rewards and sample transitions *S,A,R,S’*

- Instead of reward function R and transition dynamics P

- Advantages:

- Model-free: no advance knowledge of MDP required

- Breaks the curse of dimensionality through sampling

- Cost of backup is constant, independent of n=|S|

边栏推荐

- Leecode brushes questions to record interview questions 17.16 massagist

- 【vulnhub】presidential1

- Three application characteristics of immersive projection in offline display

- 陀螺仪的工作原理

- DAY ONE

- 2022/2/11 summary

- PostgreSQL使用Pgpool-II实现读写分离+负载均衡

- JWT signature does not match locally computed signature. JWT validity cannot be asserted and should

- On February 19, 2021ccf award ceremony will be held, "why in Hengdian?"

- X.509 certificate based on go language

猜你喜欢

随机推荐

Use type aliases in typescript

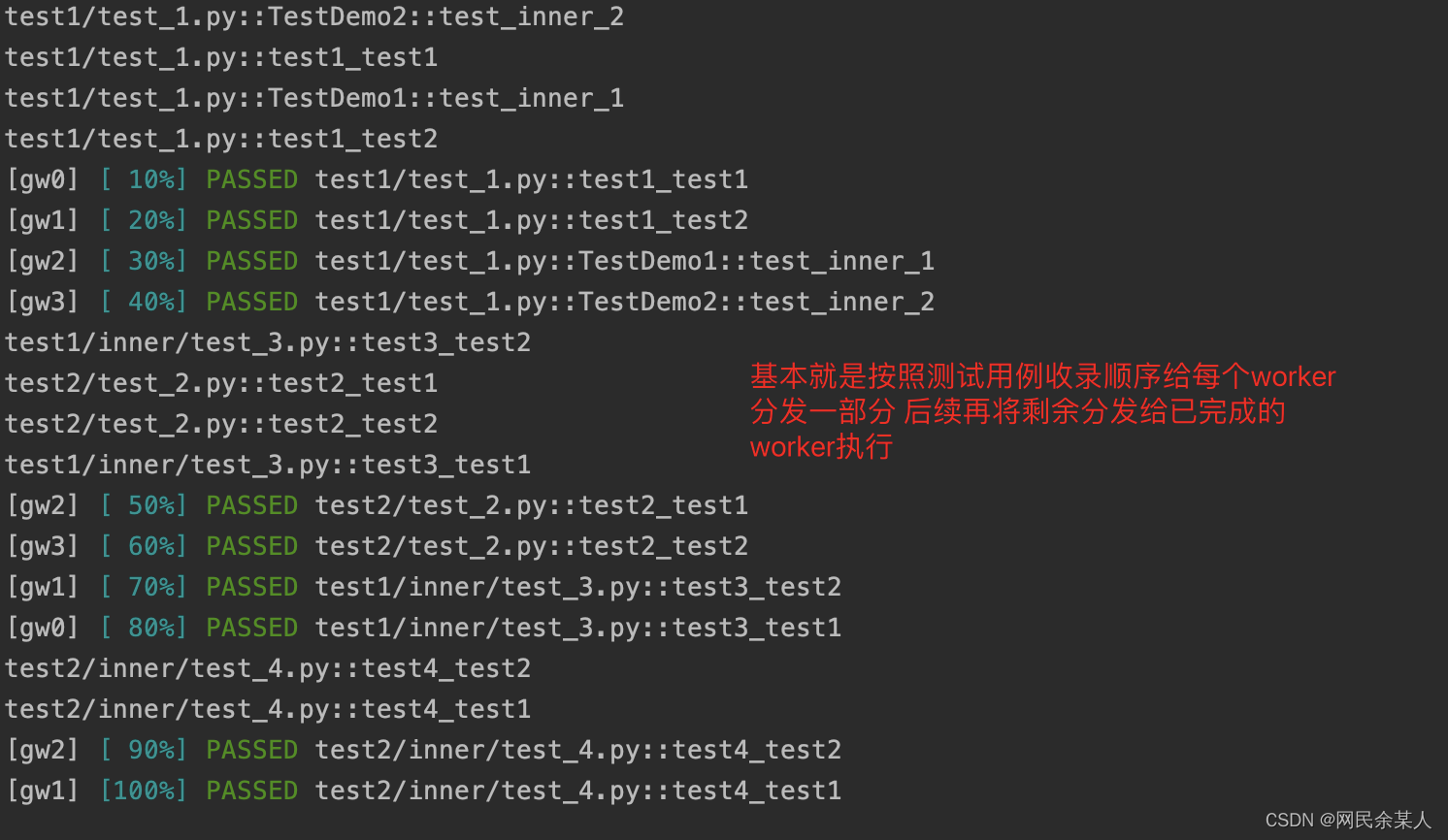

pytest多进程/多线程执行测试用例

从外企离开,我才知道什么叫尊重跟合规…

Leecode brush questions record interview questions 32 - I. print binary tree from top to bottom

openresty ngx_ Lua subrequest

DAY THREE

DAY TWO

量子时代计算机怎么保证数据安全?美国公布四项备选加密算法

How about the order management of okcc call center

okcc呼叫中心的订单管理时怎么样的

Testers, how to prepare test data

Quickly use various versions of PostgreSQL database in docker

What is web penetration testing_ Infiltration practice

[automated testing framework] what you need to know about unittest

How engineers treat open source -- the heartfelt words of an old engineer

web渗透测试是什么_渗透实战

@TableId can‘t more than one in Class: “com.example.CloseContactSearcher.entity.Activity“.

基於GO語言實現的X.509證書

《LaTex》LaTex数学公式简介「建议收藏」

uniapp中redirectTo和navigateTo的区别

![[automated testing framework] what you need to know about unittest](/img/4d/0f0e0a67ec41e41541e0a2b5ca46d9.png)