当前位置:网站首页>Core knowledge of distributed cache

Core knowledge of distributed cache

2022-07-07 00:12:00 【chandfy】

Write it at the front

This is the core knowledge of distributed cache , It can be used to review the eight part essay , It can also be used to deepen knowledge , It is suggested that you read in the mode of breaking through the barrier , Then check and fill in the gaps according to the content , Welcome to ask questions and learn from each other .

It's done before

- new edition javase Essential core knowledge , Click to learn

- Necessary core knowledge of concurrent programming , Click to learn

- Message queue of middleware

- Mysql Core knowledge

- http Core knowledge of the agreement

- Spring-Mybatis Core knowledge

Each relevant article has the latest learning gains , Will make corresponding updates and modifications

Distributed cache core knowledge article knowledge overview

Why redis, Why not use other caches , such as memcached Well

Redis What are the common data structures ? What are the usage scenarios of these structures

redis A single thread , Why so soon? ?

redis Persistence of , And the difference between them

Common cache elimination strategies

Cache breakdown 、 through 、 The difference and solution of avalanche

Why redis, Why not use other caches , such as memcached Well

redis Data structure ratio memcached Richer , Basically, it can completely replace

redis The community is more active , Performance is also powerful , It also supports functions such as persistence

The most important thing is to integrate with the business

Redis What are the common data structures ? What are the usage scenarios of these structures

String

ordinary kv Storage , Use scenarios :

hash

Store the object , One key There are multiple values , Use scenarios :

list

Tabular data 、 Message queuing, etc , Use scenarios :

set

unordered set 、 duplicate removal , intersection 、 And set etc. , For example, check your common friends , In terms of social relationships 、 Data duplication, etc. can be usedsroted set

Ordered set , duplicate removal , Make a list

redis A single thread , Why so soon? ?

Memory based , Most requests are purely memory operations ,CPU No Redis Bottleneck ( Single thread reason )

Avoid unnecessary CPU Context switching and other competitive conditions , Such as lock operation, etc

The bottom layer is the use of multiple channels I/O Reuse model , Non blocking IO

Redis6 Support multithreading after , But it is not enabled by default

redis Persistence of , And the difference between them

Support AOF and RDB Persistence

AOF

Log every write processed by the server 、 Delete operation , The query operation will not record , Record as text

Support second level persistence 、 Compatibility is good. , For the same number of data sets ,AOF Files are usually larger than RDB file , So recovery is better than RDB slow

RDB

Write the data set snapshot in memory to disk within the specified time interval , You can specify a time to archive data , But you can't do real-time persistence

The files are compact , Small volume , For disaster recovery ,RDB It's a very good choice , Compared with AOF Mechanism , If the data set is large ,RDB Speed ratio when recovering large data sets AOF It's faster to recover

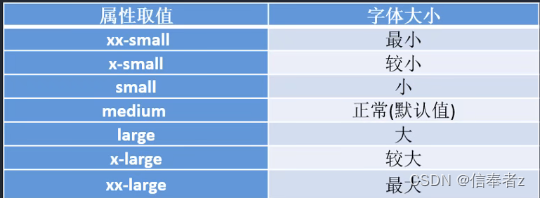

Common cache elimination strategies

fifo (FIFO)First In,First Out

Newly accessed data insertion FIFO Queue tail , The data is in FIFO Move in sequence in the queue , Eliminate FIFO Data in the queue headerRecently at least use (LRU) Least recently used

The data is eliminated according to the historical access record of the data , If the data has been accessed recently , So the chances of being interviewed in the future are higher

Insert new data into linked list header , Whenever cached data is accessed , Move the data to the head of the linked list , When the list is full , Discard the data at the end of the list .Not often used recently (LFU) Least Frequently Used

Eliminate data based on its historical access frequency , If data has been accessed more than once , So the frequency of future visits will be higher

Add data to the linked list , Sort by frequency , A data has been accessed , Take its frequency +1, When elimination occurs , Eliminate those with low frequency

Cache breakdown 、 through 、 The difference and solution of avalanche

Cache breakdown ( Some hot spot key Cache failed )

There's no data in the cache, but there's data in the database , If it's hot data , that key At the moment the cache expires , There are a lot of requests at the same time , All of these requests go to DB, Cause instantaneous DB A lot of requests 、 Pressure builds up .

The difference between cache avalanche and cache avalanche is that this is for a certain key cache , The latter are many key.

The prevention of : Set hotspot data not to expire , Scheduled tasks regularly update the cache , Or set a mutex

Cache penetration ( Query data does not exist )

Query a nonexistent data , Because the cache is not hit , And for the sake of fault tolerance , If initiated as id by “-1” Nonexistent data

If the data cannot be found from the storage layer, it will not be written to the cache, which will cause the nonexistent data to be queried from the storage layer every request , It loses the meaning of caching . There are a lot of data that does not exist for query , Probably DB It's gone , This is also a non-existent way for hackers to take advantage of key A way to attack applications frequently .

The prevention of : Verification is added to the interface layer , Data rationality verification , Cache unreachable data , In the database, there is no access to , At this time, you can also key-value Write as key-null, Set a shorter expiration time , Prevent the same key Being attacked all the time

Cache avalanche ( Multiple hotspots key It's all overdue )

a large number of key Set the same expiration time , This causes all caches to fail at the same time , Cause instantaneous DB A lot of requests 、 The pressure surged , Cause an avalanche

The prevention of : The expiration time of stored data is set randomly , Prevent a large number of data expiration at the same time , Set hotspot data never to expire , Scheduled tasks are updated regularly

边栏推荐

- "Latex" Introduction to latex mathematical formula "suggestions collection"

- DAY TWO

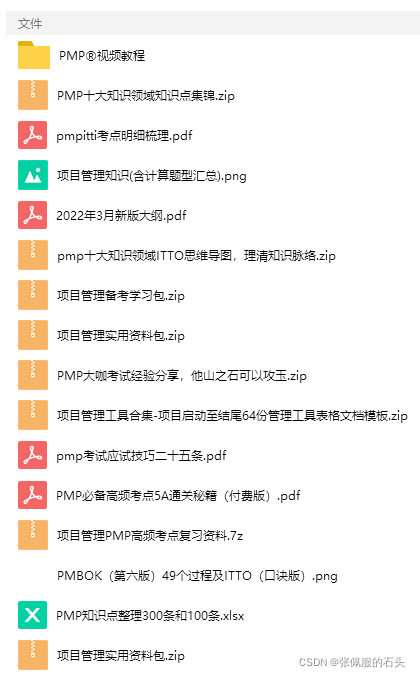

- 2022 PMP project management examination agile knowledge points (9)

- 1000 words selected - interface test basis

- 2022/2/10 summary

- [automated testing framework] what you need to know about unittest

- 【向量检索研究系列】产品介绍

- 什么是响应式对象?响应式对象的创建过程?

- DAY SIX

- Unity 颜色板|调色板|无级变色功能

猜你喜欢

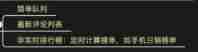

Imeta | Chen Chengjie / Xia Rui of South China Agricultural University released a simple method of constructing Circos map by tbtools

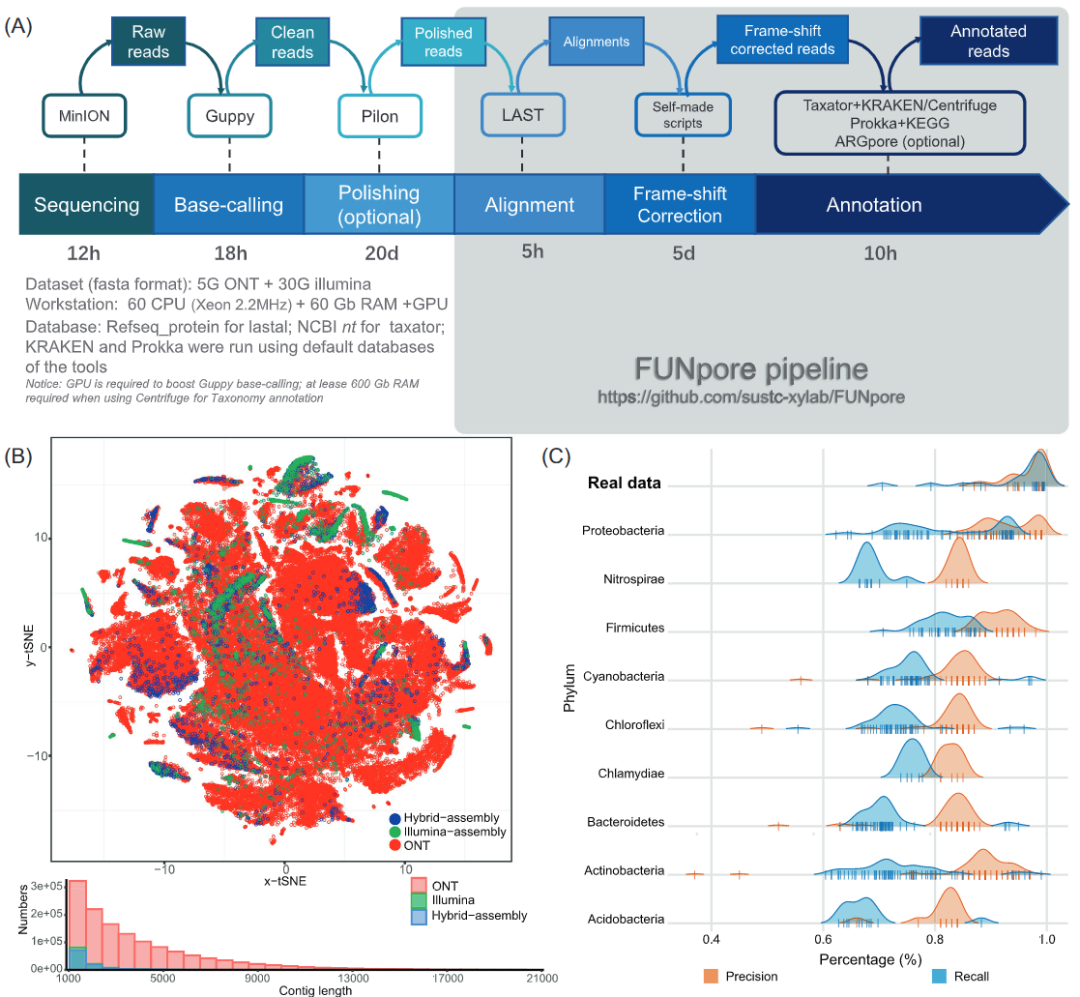

Racher integrates LDAP to realize unified account login

What can the interactive slide screen demonstration bring to the enterprise exhibition hall

DAY SIX

DAY THREE

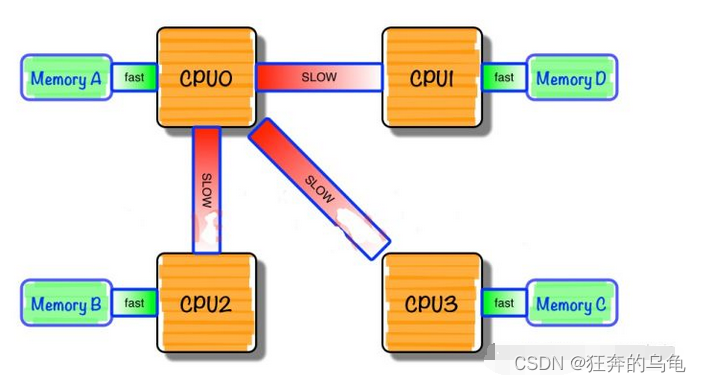

服务器SMP、NUMA、MPP体系学习笔记。

![[boutique] Pinia Persistence Based on the plug-in Pinia plugin persist](/img/53/95ab85bfd99d943f98881596d0aa8c.png)

[boutique] Pinia Persistence Based on the plug-in Pinia plugin persist

Hydrogen future industry accelerates | the registration channel of 2022 hydrogen energy specialty special new entrepreneurship competition is opened!

48页数字政府智慧政务一网通办解决方案

2022年PMP项目管理考试敏捷知识点(9)

随机推荐

Why is bat still addicted to 996 when the four-day working system is being tried out in Britain?

DAY FOUR

Local deployment Zeppelin 0.10.1

Tourism Management System Based on jsp+servlet+mysql framework [source code + database + report]

Data operation platform - data collection [easy to understand]

使用yum来安装PostgreSQL13.3数据库

[automated testing framework] what you need to know about unittest

How does win11 restore the traditional right-click menu? Win11 right click to change back to traditional mode

SuperSocket 1.6 创建一个简易的报文长度在头部的Socket服务器

Asset security issues or constraints on the development of the encryption industry, risk control + compliance has become the key to breaking the platform

TypeScript增量编译

Server SMP, NUMA, MPP system learning notes.

What is a responsive object? How to create a responsive object?

js导入excel&导出excel

iMeta | 华南农大陈程杰/夏瑞等发布TBtools构造Circos图的简单方法

三句话简要介绍子网掩码

(LeetCode)两数之和

Huawei mate8 battery price_ Huawei mate8 charges very slowly after replacing the battery

Matplotlib draws a histogram and adds values to the graph

17、 MySQL - high availability + read / write separation + gtid + semi synchronous master-slave replication cluster