Federated learning is a very active area of machine learning. It refers to multiple parties jointly training a model without transmitting their data. As federated learning has developed, federated learning systems have emerged one after another, for example FATE, FedML, PaddleFL, and TensorFlow-Federated. However, most federated learning systems do not support federated training of tree models. Compared with neural networks, tree models train quickly, are highly interpretable, and are well suited to tabular data. They are widely used in finance, healthcare, the Internet, and other fields, for example in advertising, recommendation, and stock prediction.

The representative decision tree model is the Gradient Boosting Decision Tree (GBDT). Because the predictive power of a single tree is limited, GBDT uses boosting to train multiple trees sequentially: each tree is fit to the residual between the current prediction and the label, and the resulting ensemble predicts well. Representative GBDT systems include XGBoost, LightGBM, CatBoost, and ThunderGBM; XGBoost has been used many times by KDD Cup winning teams. However, none of these systems supports GBDT training in a federated learning setting. Recently, researchers from the National University of Singapore and Tsinghua University proposed FedTree, a new federated learning system for training tree models.

- Paper: https://github.com/Xtra-Computing/FedTree/blob/main/FedTree_draft_paper.pdf

- Project: https://github.com/Xtra-Computing/FedTree
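The residual-fitting idea behind GBDT described in the introduction can be illustrated with a minimal sketch. This is not code from FedTree or any of the GBDT systems named above: it assumes squared-error loss, a single numeric feature with at least two distinct values, and depth-1 trees (stumps). All function names are hypothetical and chosen only for illustration.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Pick the threshold on a 1-D feature that minimizes squared error."""
    best = None
    # Excluding the largest value guarantees the right side is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = mean(left), mean(right)
        error = sum((r - left_value) ** 2 for r in left)
        error += sum((r - right_value) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    return best[1:]  # (threshold, left_value, right_value)


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_boosted_stumps(xs, ys, n_trees=20, learning_rate=0.5):
    """Train stumps one after another, each on the current residuals."""
    base = mean(ys)  # initial prediction: the mean label
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        # Residual = label minus current prediction (squared-error loss).
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [
            p + learning_rate * predict_stump(stump, x)
            for p, x in zip(predictions, xs)
        ]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def training_mse(model, xs, ys):
    return mean([(y - predict(model, x)) ** 2 for x, y in zip(xs, ys)])


if __name__ == "__main__":
    xs = list(range(10))
    ys = [float(x * x) for x in xs]
    for n_trees in (1, 5, 20):
        model = fit_boosted_stumps(xs, ys, n_trees=n_trees)
        print(n_trees, "trees -> training MSE", training_mse(model, xs, ys))
```

Running the script prints the training error for 1, 5, and 20 stumps; the error shrinks as trees are added because each new stump corrects what the previous ones left unexplained.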

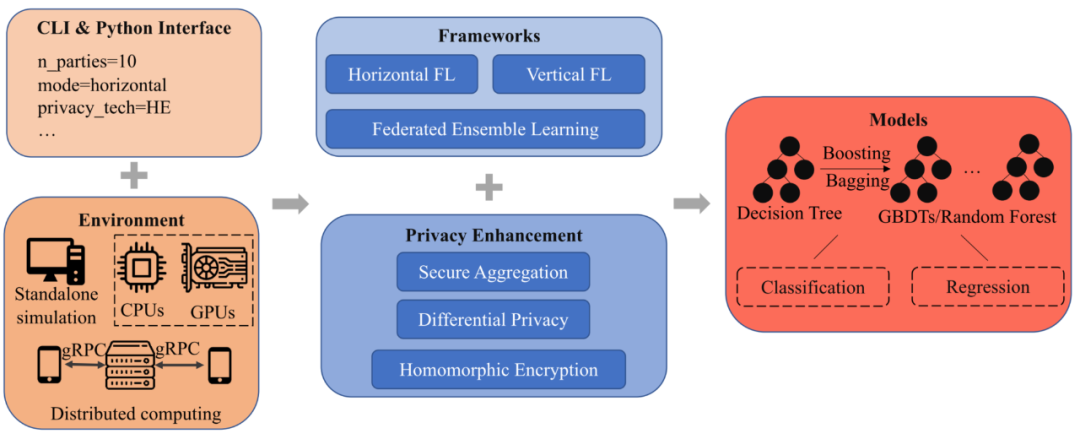

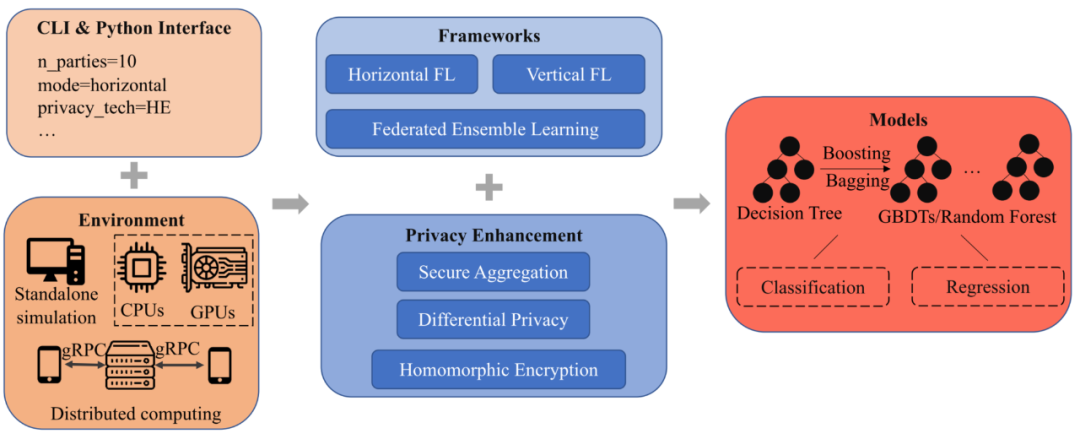

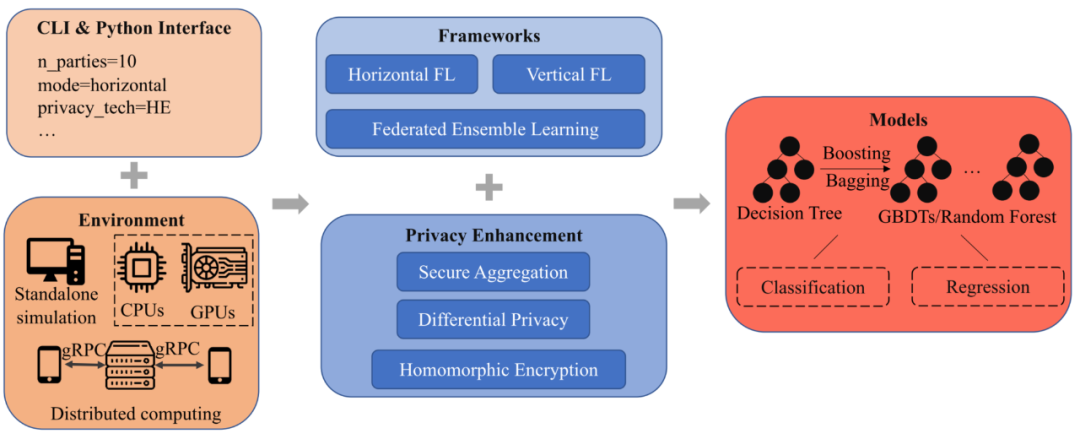

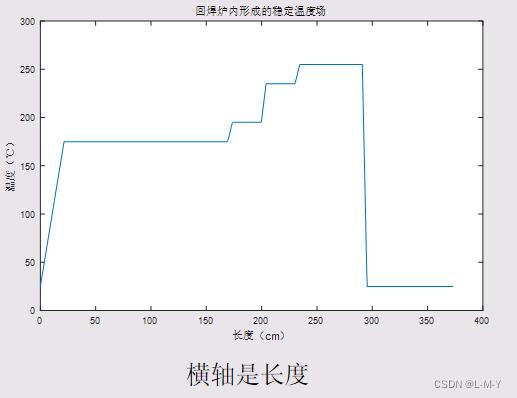

FedTree system overview

The FedTree architecture is shown in Figure 1. It has five modules: interface, environment, framework, privacy protection, and model.

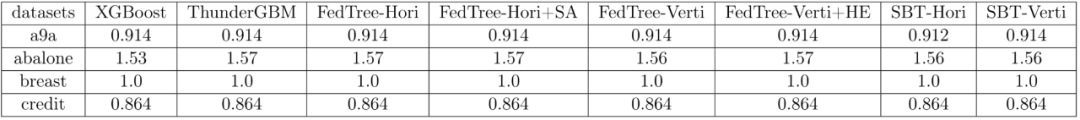

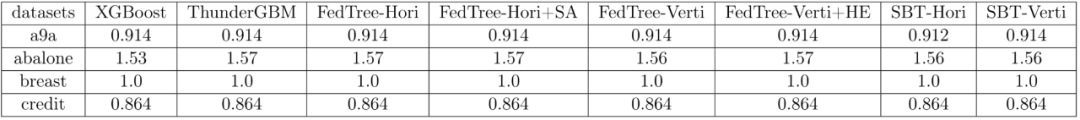

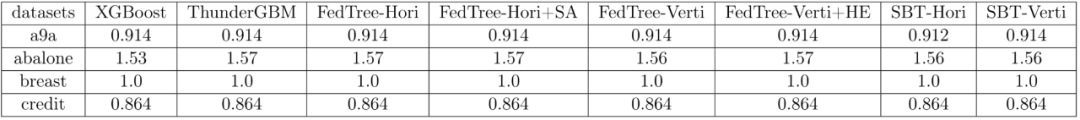

Figure 1: FedTree system architecture

Interface: FedTree supports two interfaces, a command-line interface and a Python interface. Users only need to provide the parameters (number of participants, federated scenario, and so on) and can then run FedTree training with a single command. The Python interface is compatible with scikit-learn: training and prediction are done by calling fit() and predict().

Environment: FedTree supports simulating federated learning on a single machine as well as deploying distributed federated learning across multiple machines. In the single-machine environment, FedTree can split the data into multiple sub-datasets, and each sub-dataset is trained as one participant. In the multi-machine environment, each machine acts as a participant, and machines communicate through gRPC. In addition to CPUs, FedTree supports using GPUs to accelerate training.

Framework: FedTree supports GBDT training in both horizontal and vertical federated learning scenarios. In the horizontal scenario, participants have different training samples but the same feature space. In the vertical scenario, participants have different feature spaces but the same training samples. To maintain performance, in both scenarios multiple participants take part in training every node. In addition, FedTree supports ensemble learning, in which participants train trees in parallel and then aggregate them, reducing the communication overhead between participants.

Privacy: Because the gradients exchanged during training may leak information about the training data, FedTree provides several privacy-protection methods to further protect gradient information, including homomorphic encryption (HE) and secure aggregation (SA). FedTree also provides differential privacy to protect the final trained model.

Model: Building on single-tree training, FedTree uses boosting or bagging to train GBDT or random forest models. By choosing different loss functions, the trained model supports multiple tasks, including classification and regression.

Table 1 summarizes the AUC of different systems on a9a, breast, and credit, and the RMSE on abalone. The model quality of FedTree is almost identical to that of GBDT trained on all the data (XGBoost, ThunderGBM) and of SecureBoost (SBT) in FATE. Moreover, the privacy-protection methods SA and HE do not affect model performance.
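To make the horizontal scenario and secure aggregation more concrete, here is a minimal single-process sketch. It is not FedTree's implementation: it assumes a generic pairwise-masking scheme in which every pair of participants shares a random mask that one adds and the other subtracts, so the masks cancel in the sum. The fixed-point encoding, the modulus, and all function names are assumptions made for illustration. In a real deployment the masks would be derived from secrets agreed between participants rather than generated in one place, and the aggregated quantities would typically be gradient statistics rather than raw per-sample gradients.

```python
import random

MODULUS = 2 ** 61 - 1  # assumed modulus for all masked arithmetic
SCALE = 10 ** 6        # assumed fixed-point scale for encoding floats


def encode(value):
    """Encode a float as a non-negative integer modulo MODULUS."""
    return round(value * SCALE) % MODULUS


def decode(value):
    """Map an integer modulo MODULUS back to a signed float."""
    if value > MODULUS // 2:
        value -= MODULUS
    return value / SCALE


def split_horizontally(rows, n_parties):
    """Horizontal split: each party gets different samples (round-robin)."""
    return [rows[i::n_parties] for i in range(n_parties)]


def pairwise_masks(n_parties, rng):
    """One mask per party; masks sum to zero modulo MODULUS."""
    masks = [0] * n_parties
    for i in range(n_parties):
        for j in range(i + 1, n_parties):
            shared = rng.randrange(MODULUS)  # secret shared by parties i and j
            masks[i] = (masks[i] + shared) % MODULUS
            masks[j] = (masks[j] - shared) % MODULUS
    return masks


def secure_sum(local_values, rng):
    """Sum the parties' values while the server only sees masked inputs."""
    masks = pairwise_masks(len(local_values), rng)
    masked = [(encode(v) + m) % MODULUS for v, m in zip(local_values, masks)]
    total = sum(masked) % MODULUS  # pairwise masks cancel here
    return decode(total), masked


if __name__ == "__main__":
    gradients = [0.5, -1.25, 2.0, 0.75, -0.5, 1.5]  # toy per-sample gradients
    parties = split_horizontally(gradients, n_parties=3)
    local_sums = [sum(part) for part in parties]
    total, masked = secure_sum(local_sums, random.Random(0))
    print("masked values seen by the server:", masked)
    print("aggregated gradient sum:", total)
```

The server learns only the total, which is all it needs to evaluate a split, while each individual masked value looks like a random number. Working with integers modulo a fixed value keeps the cancellation exact, which floating-point masks would not guarantee.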

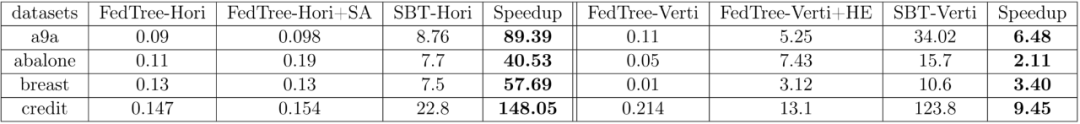

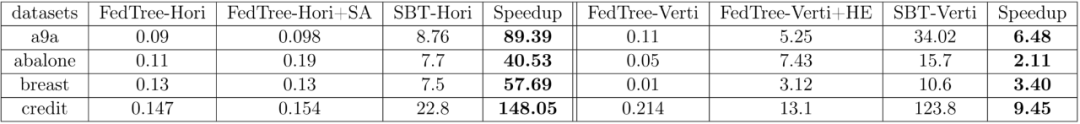

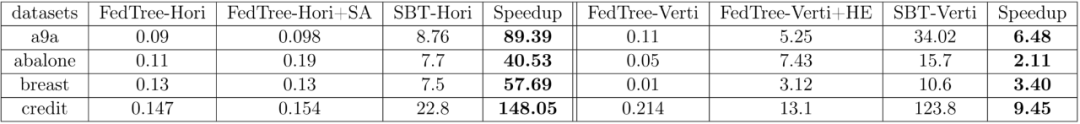

Table 1: Comparison of model performance across systems

Table 2 summarizes the training time per tree for different systems (unit: seconds). FedTree is much faster than FATE, achieving a speedup of more than 100x in horizontal federated learning.

Table 2: Comparison of per-tree training time across systems

For more research details, see the original FedTree paper.

This article was written by [Heart of machine]. Please include a link to the original when reposting:

https://yzsam.com/2022/187/202207061631507860.html
