TensorFlow - TensorFlow Basics

2022-07-03 10:28:00 【JallinRichel】

TensorFlow Basics

Source: the official TensorFlow website

This article is incomplete.

TensorFlow is an end-to-end machine learning platform. It supports the following:

- Numerical computation on multidimensional arrays (similar to NumPy)

- GPU and distributed processing

- Automatic differentiation

- Model construction, training, and export

- And more

Tensors (multidimensional arrays)

TensorFlow operates on multidimensional arrays, or tensors, represented as tf.Tensor objects. The most important attributes of a tf.Tensor are its shape and dtype:

- Tensor.shape: the size of the tensor along each of its axes

- Tensor.dtype: the data type of all elements in the tensor

TensorFlow implements standard mathematical operations on tensors, as well as many operations specialized for machine learning.

Large computations can be slow on a CPU. To speed up a program, run its operations on a GPU.
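As a minimal sketch of the points above (the tensor values are arbitrary and chosen only for illustration), the following creates a tensor, inspects its shape and dtype, applies a few standard and machine-learning-oriented operations, and checks whether TensorFlow can see a GPU:

```python
import tensorflow as tf

# A 2x3 tensor of 32-bit floats (values are illustrative only).
x = tf.constant([[1., 2., 3.],
                 [4., 5., 6.]])

print(x.shape)  # size along each axis
print(x.dtype)  # data type shared by all elements

# Standard math: element-wise addition, scaling, and a matrix product.
doubled = x + x
scaled = 5 * x
gram = x @ tf.transpose(x)  # (2, 3) @ (3, 2) -> (2, 2)

# Operations geared toward machine learning.
probs = tf.nn.softmax(x, axis=-1)  # each row sums to 1
total = tf.reduce_sum(x)           # sum of all elements

# Report whether a GPU is visible to TensorFlow.
if tf.config.list_physical_devices('GPU'):
    print('TensorFlow is using the GPU')
else:
    print('TensorFlow is not using the GPU')
```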

Variables

Ordinary tf.Tensor objects are immutable. Use a tf.Variable to store model weights (or other mutable state).
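A short sketch of mutable state, assuming a three-element variable whose values are arbitrary: a tf.Variable can be overwritten or incremented in place, which a plain tf.Tensor does not allow.

```python
import tensorflow as tf

var = tf.Variable([0.0, 0.0, 0.0])

var.assign([1.0, 2.0, 3.0])      # overwrite the stored values in place
var.assign_add([1.0, 1.0, 1.0])  # add to the stored values in place

print(var.numpy())
```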

Automatic differentiation

Gradient descent and related algorithms are a cornerstone of machine learning. TensorFlow implements automatic differentiation, which uses calculus to compute gradients. Typically you use it to compute the gradient of a model's error or loss with respect to its weights.

TensorFlow can compute gradients for any number of non-scalar tensors at the same time.
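The sketch below illustrates both statements using tf.GradientTape. The function f, the weight matrix w, the bias b, and the input values are assumptions made up for this example. The first tape differentiates a scalar function of one variable. The second computes gradients for two non-scalar variables in a single call.

```python
import tensorflow as tf

# Gradient of a scalar function with respect to a single variable.
x = tf.Variable(1.0)

def f(x):
    return x**2 + 2*x - 5

with tf.GradientTape() as tape:
    y = f(x)

g_x = tape.gradient(y, x)  # analytically, dy/dx = 2x + 2

# Gradients with respect to several non-scalar tensors at once.
w = tf.Variable(tf.ones([3, 2]))    # hypothetical weight matrix
b = tf.Variable(tf.zeros([2]))      # hypothetical bias vector
inputs = tf.constant([[1., 2., 3.]])

with tf.GradientTape() as tape:
    loss = tf.reduce_mean((inputs @ w + b)**2)

dw, db = tape.gradient(loss, [w, b])  # one gradient per variable, same shapes
```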

Graphs and tf.function

You can use TensorFlow interactively, like any other Python library.

TensorFlow also provides tools for performance optimization and export:

- Performance optimization: speeds up training and inference

- Export: lets you save your model once training is done

You can use tf.function to separate your pure TensorFlow code from Python:

import tensorflow as tf

@tf.function
def my_func(x):
    print('Tracing.\n')
    return tf.reduce_sum(x)

The first time you run a tf.function, it executes in Python but also captures a complete, optimized graph representing the TensorFlow computations done within the function. On subsequent calls, TensorFlow executes only the optimized graph and skips any non-TensorFlow steps. A graph may not be reusable for inputs with a different signature (shape and dtype), so a new graph is generated instead.

These captured graphs offer two benefits:

- In many cases, they significantly speed up execution

- You can export these graphs with tf.saved_model and run them on other systems without a Python installation
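The tracing behavior described above can be observed with a small experiment. This is a sketch: the list trace_log is a hypothetical helper introduced here, used in place of print so that the number of traces can be counted. Appending to it is a plain Python side effect, so it happens only while a graph is being traced.

```python
import tensorflow as tf

trace_log = []  # hypothetical helper: records each time Python code runs

@tf.function
def my_func(x):
    # Python side effect: executed only during tracing, not on graph reuse.
    trace_log.append((x.dtype.name, x.shape.as_list()))
    return tf.reduce_sum(x)

first = my_func(tf.constant([1, 2, 3]))         # first call: traces a graph
second = my_func(tf.constant([10, 9, 8]))       # same signature: graph reused
third = my_func(tf.constant([10.0, 9.1, 8.2]))  # new dtype: traced again

print(len(trace_log), trace_log)
```

Three calls produce only two log entries, one per distinct input signature.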

Modules, layers, and models

tf.Module is a class for managing your tf.Variable objects and the tf.function objects that operate on them.

The tf.Module class is needed to support two important features:

- You can save and restore the values of your variables using tf.train.Checkpoint.

- You can import and export the tf.Variable values and the tf.function graphs using tf.saved_model.

Here is a simple example that exports a tf.Module object:
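As a sketch of the first feature, the snippet below saves a variable's value with tf.train.Checkpoint, overwrites it, and then restores it. The Scale class and the temporary directory are assumptions made for this illustration and are not part of the original article.

```python
import os
import tempfile

import tensorflow as tf

class Scale(tf.Module):  # hypothetical module with a single weight
    def __init__(self, value):
        super().__init__()
        self.weight = tf.Variable(value)

mod = Scale(3.0)

# Track the module and write its variable values to disk.
ckpt = tf.train.Checkpoint(module=mod)
prefix = os.path.join(tempfile.mkdtemp(), 'ckpt')
ckpt_path = ckpt.save(prefix)

mod.weight.assign(0.0)   # clobber the value
ckpt.restore(ckpt_path)  # bring back the saved value

print(mod.weight.numpy())
```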

class MyModule(tf.Module):
    def __init__(self, value):
        self.weight = tf.Variable(value)

    @tf.function
    def multiply(self, x):
        return x * self.weight

mod = MyModule(3)
mod.multiply(tf.constant([1, 2, 3]))

Save the model :

save_path = './saved'
tf.saved_model.save(mod, save_path)

The resulting SavedModel is independent of the code that created it.
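To illustrate that independence, the following sketch saves a module and loads it back with tf.saved_model.load. The reloaded object exposes the traced multiply function without needing the original class definition. The class is repeated here only so the snippet runs on its own, and the temporary directory is an assumption used in place of './saved'.

```python
import tempfile

import tensorflow as tf

class MyModule(tf.Module):
    def __init__(self, value):
        super().__init__()
        self.weight = tf.Variable(value)

    @tf.function
    def multiply(self, x):
        return x * self.weight

mod = MyModule(3)
mod.multiply(tf.constant([1, 2, 3]))  # call once so a graph is traced and saved

save_path = tempfile.mkdtemp()        # stand-in for './saved'
tf.saved_model.save(mod, save_path)

# Load the SavedModel; only the saved graph and variables are used from here on.
reloaded = tf.saved_model.load(save_path)
result = reloaded.multiply(tf.constant([1, 2, 3]))
print(result.numpy())
```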

Training loops

Now put these pieces together to build a basic model and train it from scratch.

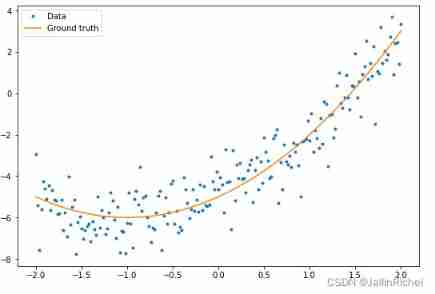

First, create some example data. This generates a cloud of points that loosely follows a quadratic curve:

import matplotlib
from matplotlib import pyplot as plt

matplotlib.rcParams['figure.figsize'] = [9, 6]

x = tf.linspace(-2, 2, 201)
x = tf.cast(x, tf.float32)

def f(x):
    y = x**2 + 2*x - 5
    return y

y = f(x) + tf.random.normal(shape=[201])

plt.plot(x.numpy(), y.numpy(), '.', label='Data')
plt.plot(x, f(x), label='Ground truth')
plt.legend();

Build a model :

class Model(tf.keras.Model):
    def __init__(self, units):
        super().__init__()
        self.dense1 = tf.keras.layers.Dense(units=units,
                                            activation=tf.nn.relu,
                                            kernel_initializer=tf.random.normal,
                                            bias_initializer=tf.random.normal)
        self.dense2 = tf.keras.layers.Dense(1)

    def call(self, x, training=True):
        # For Keras layers/models, implement `call` instead of `__call__`.
        x = x[:, tf.newaxis]
        x = self.dense1(x)
        x = self.dense2(x)
        return tf.squeeze(x, axis=1)

model = Model(64)

plt.plot(x.numpy(), y.numpy(), '.', label='data')
plt.plot(x, f(x), label='Ground truth')
plt.plot(x, model(x), label='Untrained predictions')
plt.title('Before training')
plt.legend();

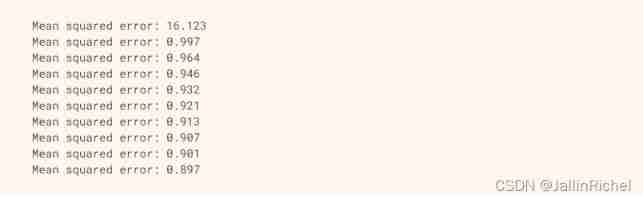

Write a basic training loop:

variables = model.variables

optimizer = tf.optimizers.SGD(learning_rate=0.01)

for step in range(1000):
    # Record the forward pass so the loss can be differentiated.
    with tf.GradientTape() as tape:
        prediction = model(x)
        error = (y-prediction)**2
        mean_error = tf.reduce_mean(error)
    gradient = tape.gradient(mean_error, variables)
    optimizer.apply_gradients(zip(gradient, variables))

    if step % 100 == 0:
        print(f'Mean squared error: {mean_error.numpy():0.3f}')

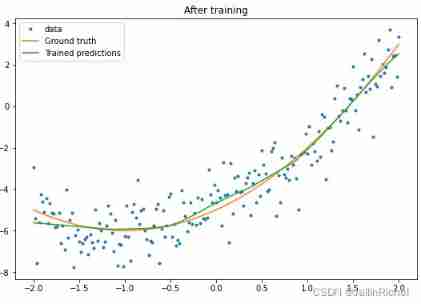

plt.plot(x.numpy(), y.numpy(), '.', label="data")
plt.plot(x, f(x), label='Ground truth')
plt.plot(x, model(x), label='Trained predictions')
plt.title('After training')
plt.legend();

This works, but the tf.keras module already provides implementations of common training utilities, so consider using those before writing your own training loop. To start with, the Model.compile and Model.fit methods implement a training loop for you:

new_model = Model(64)

new_model.compile(
    loss=tf.keras.losses.MSE,
    optimizer=tf.optimizers.SGD(learning_rate=0.01))

history = new_model.fit(x, y,
                        epochs=100,
                        batch_size=32,
                        verbose=0)

model.save('./my_model')

plt.plot(history.history['loss'])
plt.xlabel('Epoch')
plt.ylim([0, max(plt.ylim())])
plt.ylabel('Loss [Mean Squared Error]')
plt.title('Keras training progress');

边栏推荐

- CV learning notes - scale invariant feature transformation (SIFT)

- 2.2 DP: Value Iteration & Gambler‘s Problem

- [LZY learning notes -dive into deep learning] math preparation 2.1-2.4

- Rewrite Boston house price forecast task (using paddlepaddlepaddle)

- openCV+dlib实现给蒙娜丽莎换脸

- 20220602数学:Excel表列序号

- 20220604数学:x的平方根

- CV learning notes - clustering

- Octave instructions

- Judging the connectivity of undirected graphs by the method of similar Union and set search

猜你喜欢

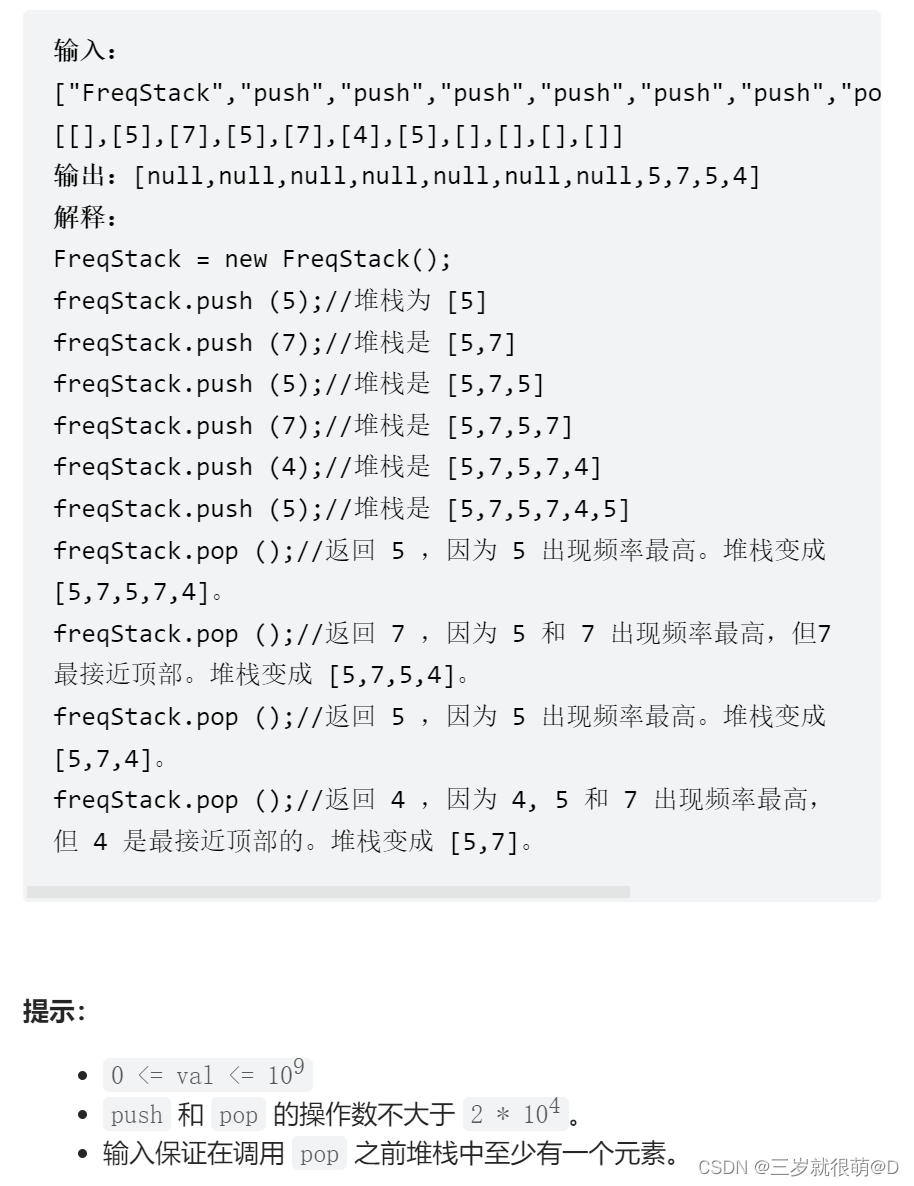

LeetCode - 703 数据流中的第 K 大元素(设计 - 优先队列)

Leetcode - 895 maximum frequency stack (Design - hash table + priority queue hash table + stack)*

Leetcode-106: construct a binary tree according to the sequence of middle and later traversal

CV learning notes - Stereo Vision (point cloud model, spin image, 3D reconstruction)

Are there any other high imitation projects

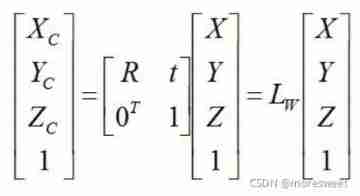

CV learning notes - camera model (Euclidean transformation and affine transformation)

LeetCode - 5 最长回文子串

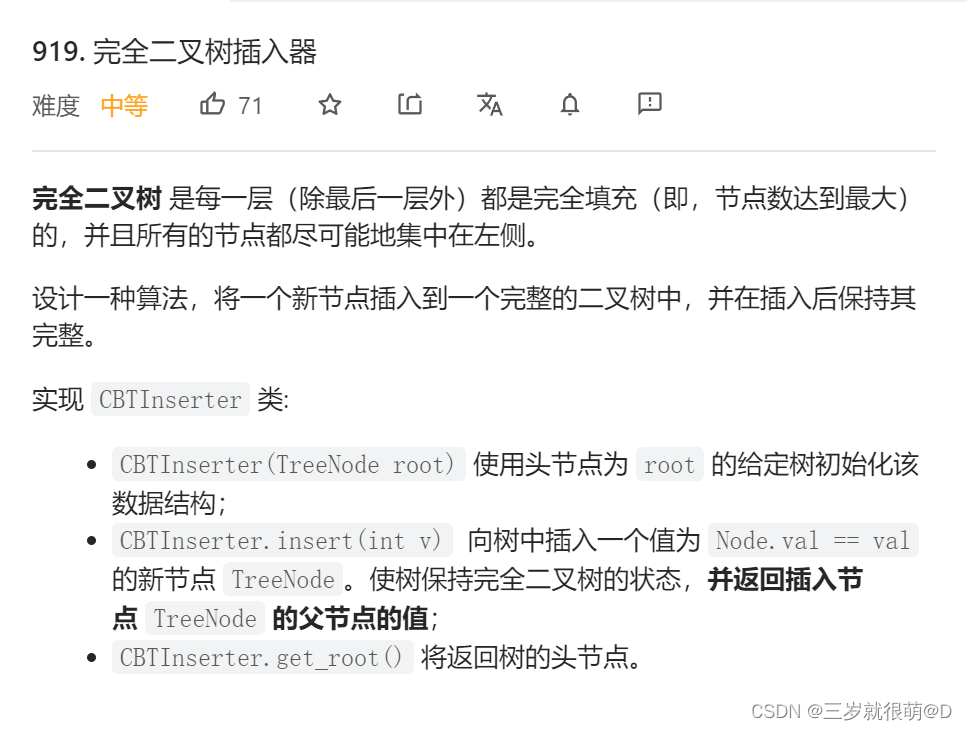

LeetCode - 919. Full binary tree inserter (array)

Stroke prediction: Bayesian

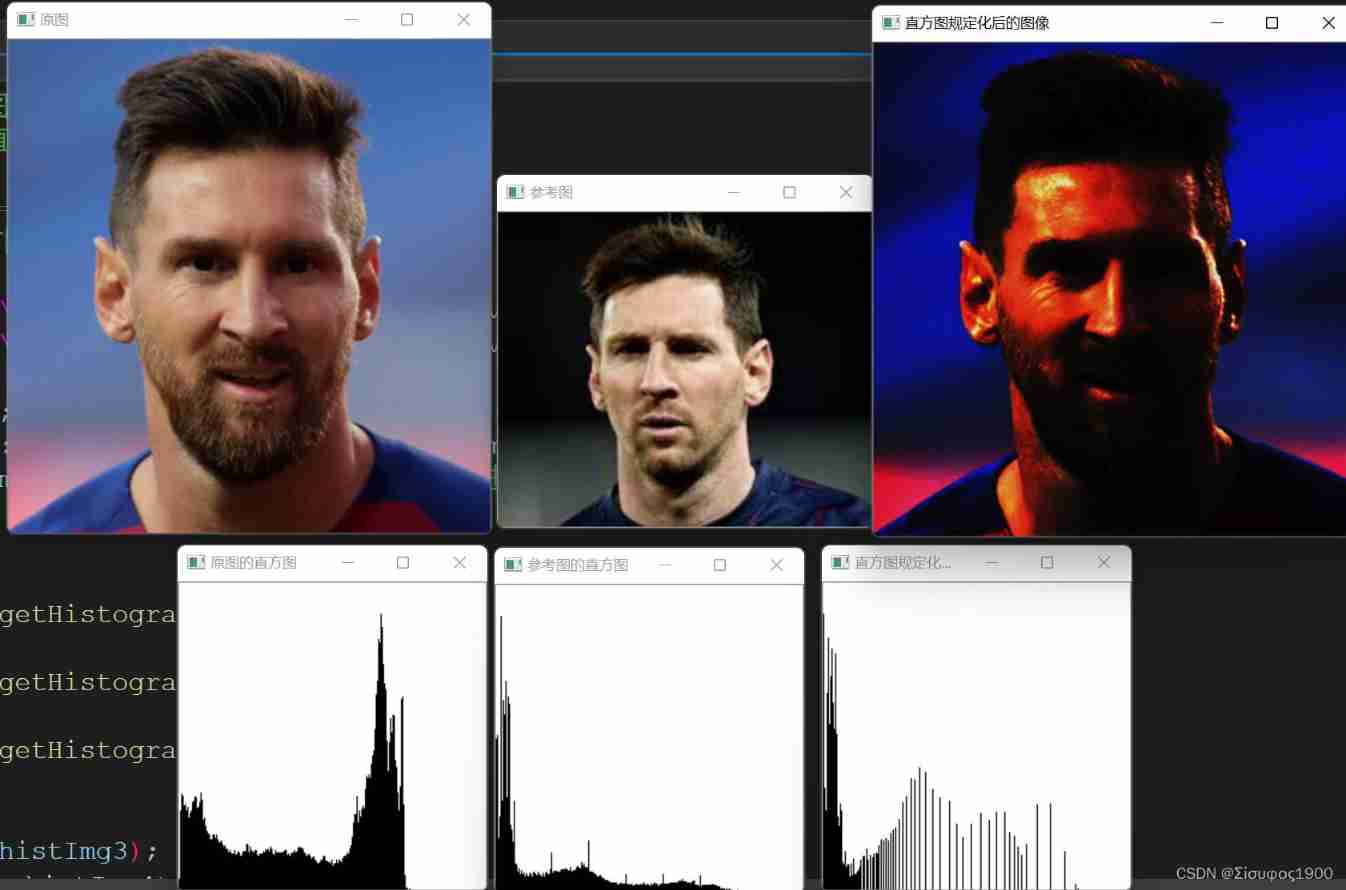

Opencv gray histogram, histogram specification

随机推荐

Deep learning by Pytorch

[LZY learning notes dive into deep learning] 3.1-3.3 principle and implementation of linear regression

1. Finite Markov Decision Process

Advantageous distinctive domain adaptation reading notes (detailed)

CV learning notes - scale invariant feature transformation (SIFT)

Standard library header file

Powshell's set location: unable to find a solution to the problem of accepting actual parameters

Leetcode - 1670 design front, middle and rear queues (Design - two double ended queues)

Rewrite Boston house price forecast task (using paddlepaddlepaddle)

High imitation wechat

2.1 Dynamic programming and case study: Jack‘s car rental

3.1 Monte Carlo Methods & case study: Blackjack of on-Policy Evaluation

LeetCode - 1670 设计前中后队列(设计 - 两个双端队列)

CV learning notes - Stereo Vision (point cloud model, spin image, 3D reconstruction)

LeetCode - 703 数据流中的第 K 大元素(设计 - 优先队列)

CV learning notes convolutional neural network

Leetcode 300 longest ascending subsequence

Hands on deep learning pytorch version exercise solution - 2.4 calculus

Neural Network Fundamentals (1)

LeetCode - 933 最近的请求次数