当前位置:网站首页>The less successful implementation and lessons of RESNET

The less successful implementation and lessons of RESNET

2022-07-03 09:09:00 【weixin_ thirty-seven million six hundred and eighty-two thousan】

import torch

from torch import nn

from torch.nn import functional as F

class ResBlk(nn.Module):

""" resnet block """

def __init__(self, ch_in, ch_out):

""" :param ch_in: :param ch_out: """

super(ResBlk, self).__init__()

self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=1, padding=1)

self.bn1 = nn.BatchNorm2d(ch_out)

self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=1, padding=1)

self.bn2 = nn.BatchNorm2d(ch_out)

self.extra = nn.Sequential()

if ch_out != ch_in:

# [b, ch_in, h, w] => [b, ch_out, h, w]

self.extra = nn.Sequential(

nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=1),

nn.BatchNorm2d(ch_out)

)

def forward(self, x):

""" :param x: [b, ch, h, w] :return: """

out = F.relu(self.bn1(self.conv1(x)))

out = self.bn2(self.conv2(out))

# short cut.

# extra module: [b, ch_in, h, w] => [b, ch_out, h, w]

# element-wise add:

out = self.extra(x) + out

return out

class ResNet18(nn.Module):

def __init__(self):

super(ResNet18, self).__init__()

self.conv1 = nn.Sequential(

nn.Conv2d(3, 16, kernel_size=3, stride=3, padding=0),

nn.BatchNorm2d(16)

)

# followed 4 blocks

# [b, 64, h, w] => [b, 128, h ,w]

self.blk1 = ResBlk(16, 32)

# [b, 128, h, w] => [b, 256, h, w]

self.blk2 = ResBlk(32, 64)

# # [b, 256, h, w] => [b, 512, h, w]

self.blk3 = ResBlk(64, 128)

# # [b, 512, h, w] => [b, 1024, h, w]

self.blk4 = ResBlk(128, 256)

self.outlayer = nn.Linear(256*10*10, 10)

def forward(self, x):

""" :param x: :return: """

x = F.relu(self.conv1(x))

# [b, 64, h, w] => [b, 1024, h, w]

x = self.blk1(x)

x = self.blk2(x)

x = self.blk3(x)

x = self.blk4(x)

# print(x.shape)

x = x.view(x.size(0), -1)

x = self.outlayer(x)

return x

def main():

blk = ResBlk(64, 128)

tmp = torch.randn(2, 64,32, 32)

out = blk(tmp)

print('blkk', out.shape)

model = ResNet18()

tmp = torch.randn(2, 3, 32, 32)

out = model(tmp)

print('resnet:', out.shape)

if __name__ == '__main__':

main()

This is the code written by the teacher , Don't post your own code if it's a little messy

The biggest feeling is CNN There is stride and padding after ,[b, chn, h,w], It's too messy It's easy to come out x And the next step to accept x Not right .

It mainly affects h,w

And then there was cnn Pick up fc Remember to level the back chn* H * W, namely

hold cnn Of [b, chn ,h ,w] Make it even [b, chnhw]

There is a key short cut

mistake

out = self.extra(x) + out

It's written in

out = self.extra(out) +x

It took two hours to find this problem , Still not familiar with the dimension transformation in the middle of the network ,

Yes short cut I don't understand well

from resnet_teacher import ResNet18

def main():

batchsz = 32

cifar_train = datasets.CIFAR10('cifar', True, transform=transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor()

]), download=True)

cifar_train = DataLoader(cifar_train, batch_size=batchsz, shuffle=True)

cifar_test = datasets.CIFAR10('cifar', False, transform=transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor()

]), download=True)

cifar_test = DataLoader(cifar_test, batch_size=batchsz, shuffle=True)

x, label = iter(cifar_train).next()

print('x:', x.shape, 'label:', label.shape)

device = torch.device('cuda')

# model = Lenet5().to(device)

model = ResNet18()

criteon = nn.CrossEntropyLoss().to(device)

optimizer = optim.Adam(model.parameters(), lr=1e-3)

print(model)

for epoch in range(1000):

model.train()

for batchidx, (x, label) in enumerate(cifar_train):

# [b, 3, 32, 32]

# [b]

#x, label = x.to(device), label.to(device)

logits = model(x)

# logits: [b, 10]

# label: [b]

# loss: tensor scalar

loss = criteon(logits, label)

# backprop

optimizer.zero_grad()

loss.backward()

optimizer.step()

#

print(epoch, 'loss:', loss.item())

model.eval()

with torch.no_grad():

# test

total_correct = 0

total_num = 0

for x, label in cifar_test:

# [b, 3, 32, 32]

# [b]

#x, label = x.to(device), label.to(device)

# [b, 10]

logits = model(x)

# [b]

pred = logits.argmax(dim=1)

# [b] vs [b] => scalar tensor

correct = torch.eq(pred, label).float().sum().item()

total_correct += correct

total_num += x.size(0)

# print(correct)

acc = total_correct / total_num

print(epoch, 'acc:', acc)

if __name__ == '__main__':

main()

边栏推荐

- With low code prospect, jnpf is flexible and easy to use, and uses intelligence to define a new office mode

- Debug debugging - Visual Studio 2022

- 即时通讯IM,是时代进步的逆流?看看JNPF怎么说

- 【点云处理之论文狂读经典版8】—— O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis

- 【点云处理之论文狂读前沿版9】—Advanced Feature Learning on Point Clouds using Multi-resolution Features and Learni

- 求组合数 AcWing 885. 求组合数 I

- 【点云处理之论文狂读经典版11】—— Mining Point Cloud Local Structures by Kernel Correlation and Graph Pooling

- LeetCode 75. Color classification

- Markdown learning

- 【点云处理之论文狂读经典版13】—— Adaptive Graph Convolutional Neural Networks

猜你喜欢

Find the combination number acwing 885 Find the combination number I

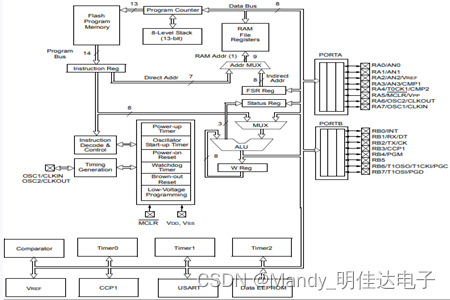

PIC16F648A-E/SS PIC16 8位 微控制器,7KB(4Kx14)

Excel is not as good as jnpf form for 3 minutes in an hour. Leaders must praise it when making reports like this!

![[point cloud processing paper crazy reading frontier version 8] - pointview gcn: 3D shape classification with multi view point clouds](/img/ee/3286e76797a75c0f999c728fd2b555.png)

[point cloud processing paper crazy reading frontier version 8] - pointview gcn: 3D shape classification with multi view point clouds

Data mining 2021-4-27 class notes

I made mistakes that junior programmers all over the world would make, and I also made mistakes that I shouldn't have made

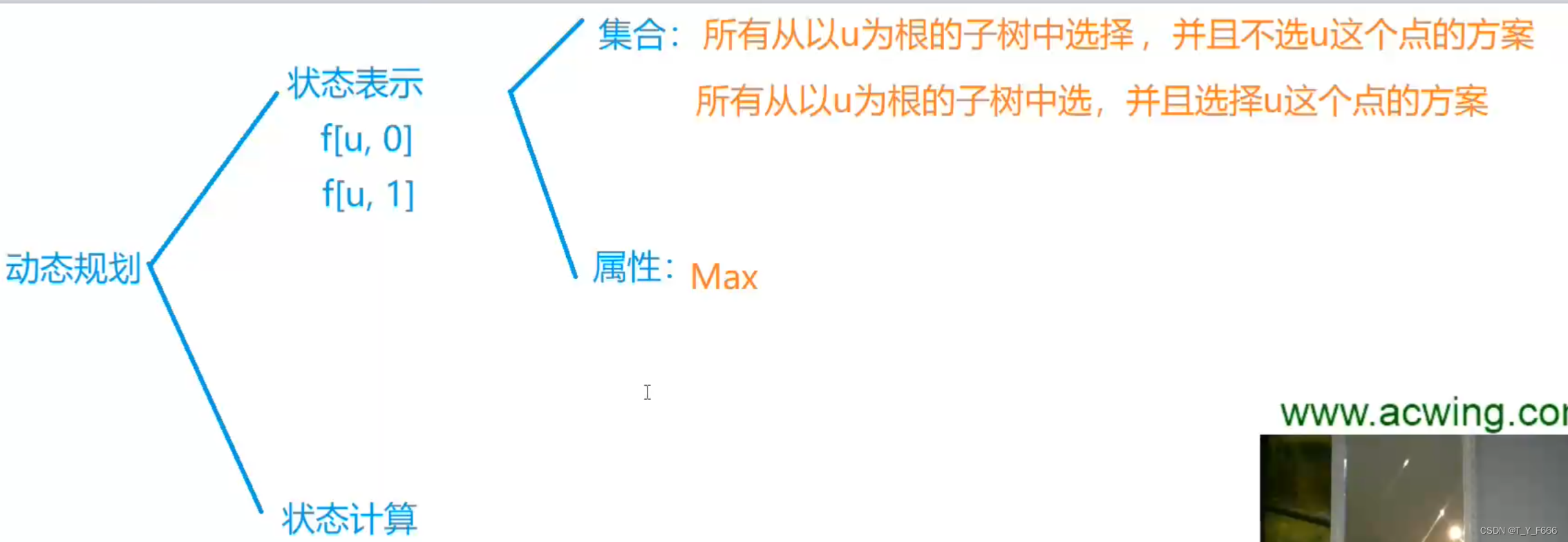

Tree DP acwing 285 A dance without a boss

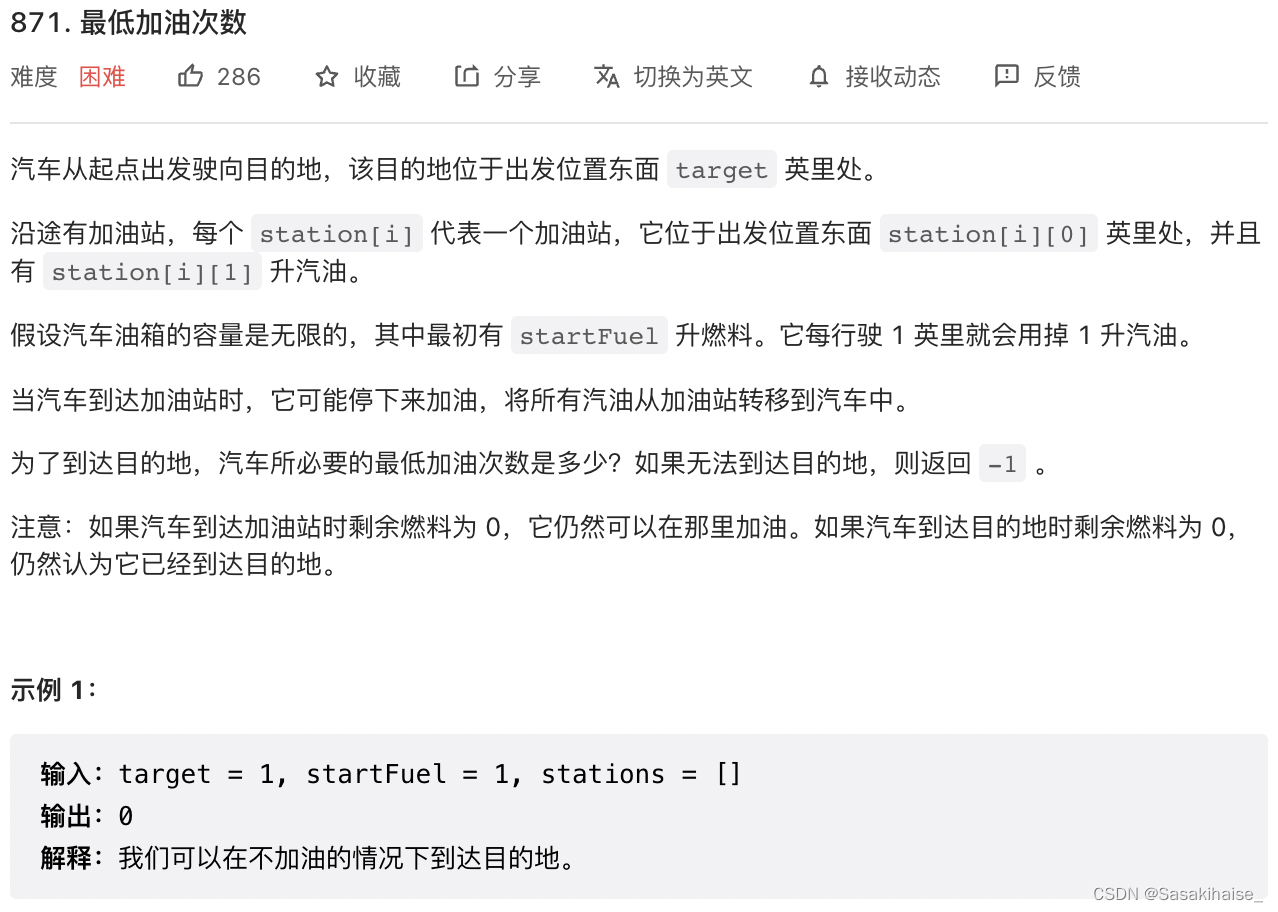

LeetCode 871. Minimum refueling times

20220630 learning clock in

网络安全必会的基础知识

随机推荐

How to check whether the disk is in guid format (GPT) or MBR format? Judge whether UEFI mode starts or legacy mode starts?

excel一小时不如JNPF表单3分钟,这样做报表,领导都得点赞!

【点云处理之论文狂读经典版12】—— FoldingNet: Point Cloud Auto-encoder via Deep Grid Deformation

too many open files解决方案

dried food! What problems will the intelligent management of retail industry encounter? It is enough to understand this article

Severity code description the project file line prohibits the display of status error c2440 "initialization": unable to convert from "const char [31]" to "char *"

On the setting of global variable position in C language

Save the drama shortage, programmers' favorite high-score American drama TOP10

LeetCode 75. Color classification

使用dlv分析golang进程cpu占用高问题

Find the combination number acwing 885 Find the combination number I

Character pyramid

求组合数 AcWing 885. 求组合数 I

LeetCode 438. Find all letter ectopic words in the string

LeetCode 241. 为运算表达式设计优先级

Discussion on enterprise informatization construction

LeetCode 871. 最低加油次数

Noip 2002 popularity group selection number

Vscode connect to remote server

How to use Jupiter notebook