Crawling exercise: scraping the notices of Henan Agricultural University

2022-07-06 07:07:00 【Rong AI holiday】

Notice of Henan Agricultural University

Introduction to web crawlers

With the rapid growth of the Internet, the World Wide Web has become the carrier of a vast amount of information, and extracting and using that information effectively has become a major challenge. Search engines, such as the traditional general-purpose engines AltaVista, Baidu, Yahoo! and Google, help people retrieve information and have become the entry point and guide for users accessing the World Wide Web. However, these general-purpose search engines have some limitations, such as:

(1) Users from different fields and backgrounds often have different retrieval goals and requirements, yet the results returned by a general-purpose search engine include many pages the user does not care about.

(2) General-purpose search engines aim for the widest possible coverage of the web, which deepens the conflict between limited search-engine server resources and effectively unlimited web data.

(3) As data on the World Wide Web grows richer and network technology keeps developing, images, databases, audio, video and other multimedia appear in large quantities. General-purpose search engines often cannot discover or retrieve this kind of information-dense, structured data.

(4) General-purpose search engines mostly offer keyword-based search and have difficulty supporting queries based on semantic information.

To address these problems, focused crawlers, which fetch relevant web resources in a targeted way, emerged. A focused crawler is a program that automatically downloads web pages: guided by a predefined crawl target, it selectively visits pages and related links on the World Wide Web to obtain the information it needs. Unlike a general-purpose web crawler, a focused crawler does not aim for broad coverage. Its goal is to fetch the pages related to a particular topic and so prepare data for topic-oriented user queries.

Crawling the Henan Agricultural University notices and announcements

Crawling purpose

It's back-to-school season, and I want to keep up with what is going on at the school and see whether there is any news about the start of term.

As a coding exercise, the program fetches and stores the data from the Henan Agricultural University notices and announcements page, https://www.henau.edu.cn/news/xwgg/index.shtml.

Analysis steps

1. Import the libraries

    import requests
    from bs4 import BeautifulSoup
    import csv
    from tqdm import tqdm

2. Analyze the web page

Compare the following links

https://www.henau.edu.cn/news/xwgg/index.shtml

https://www.henau.edu.cn/news/xwgg/index_2.shtml

https://www.henau.edu.cn/news/xwgg/index_3.shtml

The pattern is obvious: the first page is index.shtml and each later page n is index_n.shtml, so a loop can crawl the pages one after another.
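The URL pattern can be captured in a small helper. This is only a minimal sketch: the name build_page_urls and its parameters are made up for illustration and do not appear in the original code, and it assumes, as the three links suggest, that page 1 is index.shtml and page n (for n of 2 or more) is index_n.shtml.

```python
def build_page_urls(base_url, pages):
    """Return the listing-page URLs for pages 1..pages (hypothetical helper)."""
    urls = []
    for page in range(1, pages + 1):
        # Page 1 has no numeric suffix; later pages use index_<n>.shtml
        name = "index.shtml" if page == 1 else f"index_{page}.shtml"
        urls.append(base_url + name)
    return urls


urls = build_page_urls("https://www.henau.edu.cn/news/xwgg/", 3)
for url in urls:
    print(url)
```

Building the list of URLs up front keeps the paging rule in one place, separate from the code that downloads and parses each page.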

3. Single page analysis

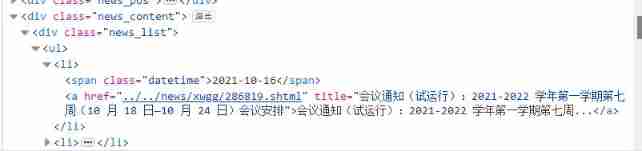

The pages share the same overall structure, so it is enough to select the information inside .news_list.
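The selection can be tried offline on a small HTML fragment before touching the real site. The fragment below is a made-up sample that only mimics the structure implied by the selector (list items inside .news_list, each with a link and a span), so the real markup may differ in detail. The sketch uses Python's built-in html.parser, whereas the article's own code uses lxml.

```python
from bs4 import BeautifulSoup

# Made-up sample that mimics the structure the selector expects;
# the real page markup may differ in detail.
SAMPLE_HTML = """
<div class="news_list">
  <ul>
    <li><a href="/news/a.shtml">Sample notice A</a><span>2022-07-01</span></li>
    <li><a href="/news/b.shtml">Sample notice B</a><span>2022-07-02</span></li>
  </ul>
</div>
<div class="footer"><ul><li><a href="/about">About</a></li></ul></div>
"""

# html.parser ships with Python, so no extra parser package is needed here
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# CSS selector: every <li> in a <ul> inside an element with class news_list
items = soup.select(".news_list ul li")
rows = [[str(li.find("span").string), str(li.find("a").string)] for li in items]
print(rows)
```

Only the two items inside .news_list are selected; the list item in the footer is ignored because it is outside that container.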

4. Save the file

    with open('notices_1.csv', 'w', newline='', encoding='utf-8-sig') as file:
        fileWriter = csv.writer(file)
        fileWriter.writerow(['date', 'title'])
        fileWriter.writerows(info_list)

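- The open() call needs both newline='' and encoding='utf-8-sig'. The utf-8-sig encoding writes a byte order mark at the start of the file, which lets spreadsheet programs such as Excel recognize the file as UTF-8 instead of showing garbled Chinese text, and newline='' leaves line endings to the csv module so that no blank rows appear on Windows.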
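The effect of the utf-8-sig encoding can be checked without writing a file. This is a small sketch with a made-up row, and the helper name csv_bytes is hypothetical. It encodes the same CSV text with utf-8 and with utf-8-sig and compares the bytes: the only difference is the three-byte byte order mark at the start, which spreadsheet programs commonly use to detect UTF-8.

```python
import codecs
import csv
import io


def csv_bytes(rows, encoding):
    """Render rows as CSV text in memory and encode it (hypothetical helper)."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerow(["date", "title"])
    writer.writerows(rows)
    return buffer.getvalue().encode(encoding)


rows = [["2022-07-01", "通知公告"]]  # made-up sample row
plain = csv_bytes(rows, "utf-8")
with_bom = csv_bytes(rows, "utf-8-sig")

# utf-8-sig prepends the UTF-8 byte order mark; the rest is identical
print(with_bom.startswith(codecs.BOM_UTF8))
print(with_bom[len(codecs.BOM_UTF8):] == plain)
```

Reading the file back with encoding='utf-8-sig' removes the mark again, so the first header cell does not pick up a stray invisible character.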

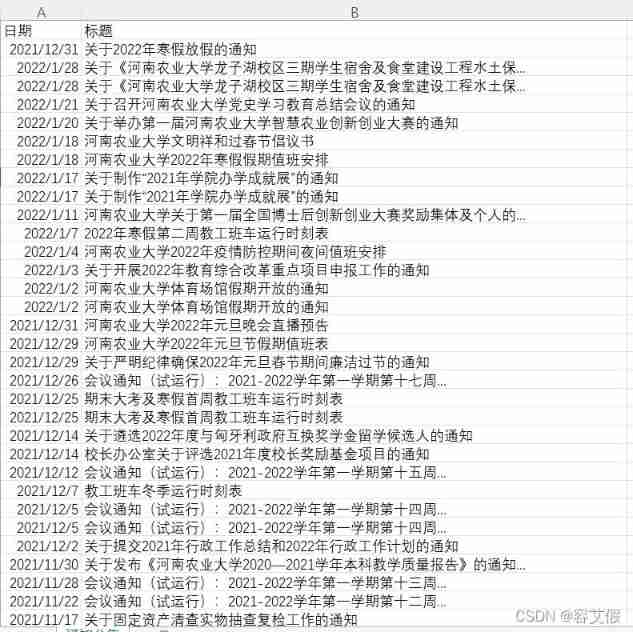

Crawl results

Code

Explanation of selected parts of the code

    t = li.find('span')
    if t:
        date = t.string
    t = li.find('a')
    if t:
        title = t.string

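Use find here rather than find_all: each li contains only one span element, and find_all returns a list of results, so .string cannot be read from it directly. Also check that t actually has a value before using it, because find returns None when no matching element exists, and reading .string from None raises an error.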
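The following sketch shows both points on a made-up fragment; the sample HTML and variable names are assumptions for illustration, and it uses the built-in html.parser rather than lxml. The second item has no span, so find returns None and the guard is needed. The third item has a nested tag inside the link, a case where .string is None; get_text is used here as one way to handle that, which goes slightly beyond the original code.

```python
from bs4 import BeautifulSoup

# Made-up items: the second has no <span>, the third nests a tag inside <a>
SAMPLE_HTML = """
<ul>
  <li><a href="#">Notice A</a><span>2022-07-01</span></li>
  <li><a href="#">Notice B</a></li>
  <li><a href="#">Notice <b>C</b></a><span>2022-07-03</span></li>
</ul>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

rows = []
for li in soup.find_all("li"):
    span = li.find("span")  # a single Tag, or None when there is no <span>
    link = li.find("a")
    # Guard against None before reading text from the tag
    date = span.get_text(" ", strip=True) if span else ""
    # get_text joins nested text; .string would be None for "Notice <b>C</b>"
    title = link.get_text(" ", strip=True) if link else ""
    rows.append([date, title])

print(rows)
```

Using an empty string for a missing field keeps every row the same length, which makes the later CSV output easier to read.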

    for i in tqdm(range(1, 5)):
        if i == 1:
            url = base_url + "index.shtml"
        else:
            # page_name instead of str, which would shadow the built-in type
            page_name = "index_%s.shtml" % i
            url = base_url + page_name

This is the core code for crawling page by page.

Full code

    import csv

    import requests
    from bs4 import BeautifulSoup
    from tqdm import tqdm


    def get_page(url):
        """Fetch one listing page and return a list of [date, title] rows."""
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'
        }
        # Pass headers by keyword: the second positional argument of requests.get is params
        resp = requests.get(url, headers=headers, timeout=10)
        # Stop on an HTTP error instead of parsing an error page
        resp.raise_for_status()
        # Use the encoding detected from the response body
        resp.encoding = resp.apparent_encoding
        # resp.encoding = 'utf-8'
        page_text = resp.text
        r = BeautifulSoup(page_text, 'lxml')
        lists = r.select('.news_list ul li')
        info_list_page = []
        for li in lists:
            # Reset for every item so a missing tag cannot reuse the previous value
            date = title = None
            t = li.find('span')
            if t:
                date = t.string
            t = li.find('a')
            if t:
                title = t.string
            info_list_page.append([date, title])
        return info_list_page


    def main():
        info_list = []
        base_url = "https://www.henau.edu.cn/news/xwgg/"
        print("Crawling the notices ...")
        for i in tqdm(range(1, 5)):
            if i == 1:
                url = base_url + "index.shtml"
            else:
                page_name = "index_%s.shtml" % i
                url = base_url + page_name
            info_list += get_page(url)
        print("Crawl succeeded!")
        with open('notices_1.csv', 'w', newline='', encoding='utf-8-sig') as file:
            fileWriter = csv.writer(file)
            fileWriter.writerow(['date', 'title'])
            fileWriter.writerows(info_list)


    if __name__ == "__main__":
        main()

If this article was helpful to you, I would appreciate a like and your support. Thank you very much!

边栏推荐

- AttributeError: Can‘t get attribute ‘SPPF‘ on <module ‘models. common‘ from ‘/home/yolov5/models/comm

- RichView TRVStyle 模板样式的设置与使用

- 变量的命名规则十二条

- Applied stochastic process 01: basic concepts of stochastic process

- Apache dolphin scheduler source code analysis (super detailed)

- leetcode841. 钥匙和房间(中等)

- The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

- 中青看点阅读新闻

- Simple use of JWT

- 18.多级页表与快表

猜你喜欢

Upgraded wechat tool applet source code for mobile phone detection - supports a variety of main traffic modes

Win10 64 bit Mitsubishi PLC software appears oleaut32 DLL access denied

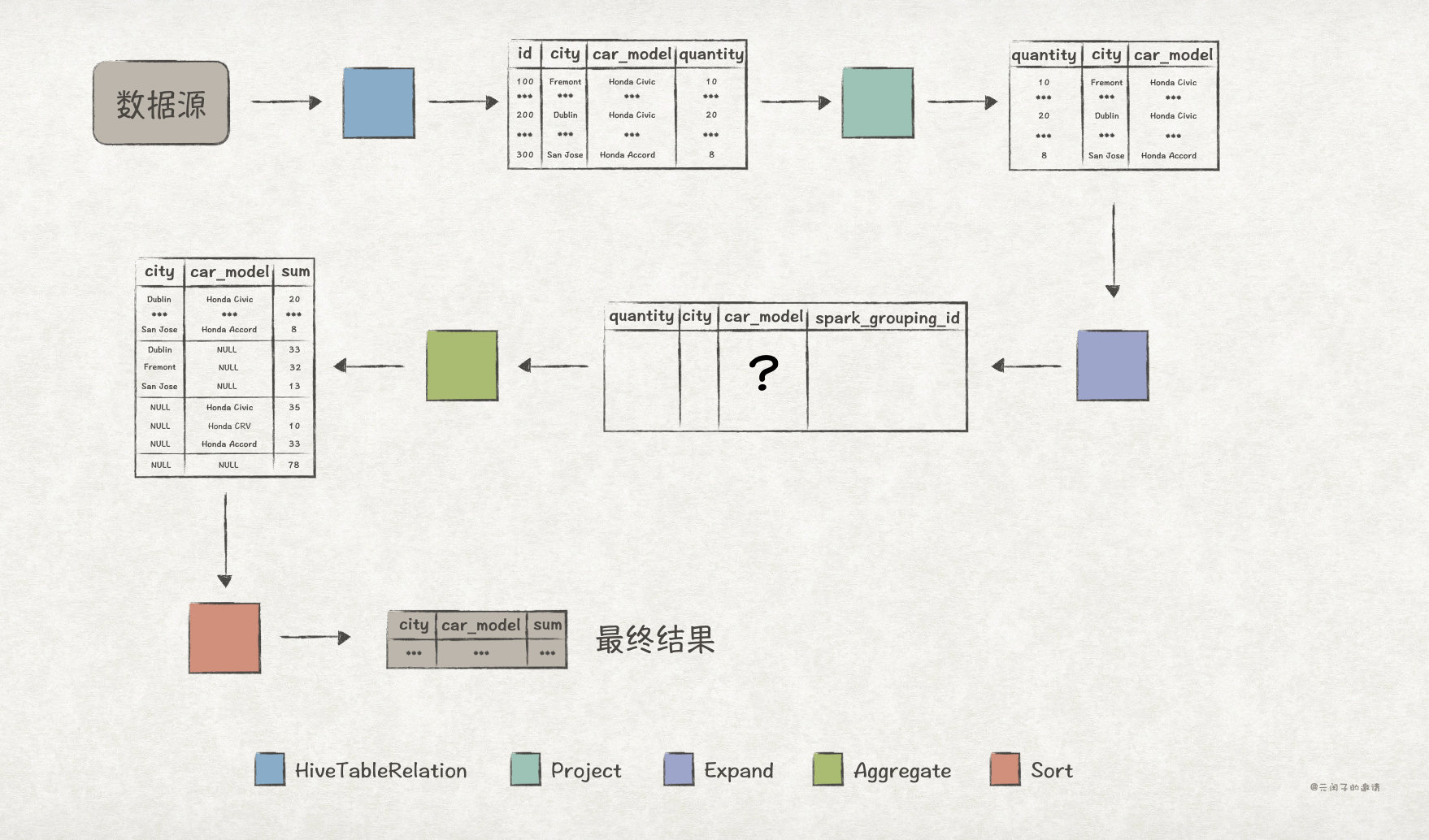

Explain in detail the functions and underlying implementation logic of the groups sets statement in SQL

《从0到1:CTFer成长之路》书籍配套题目(周更)

C language_ Double create, pre insert, post insert, traverse, delete

AI on the cloud makes earth science research easier

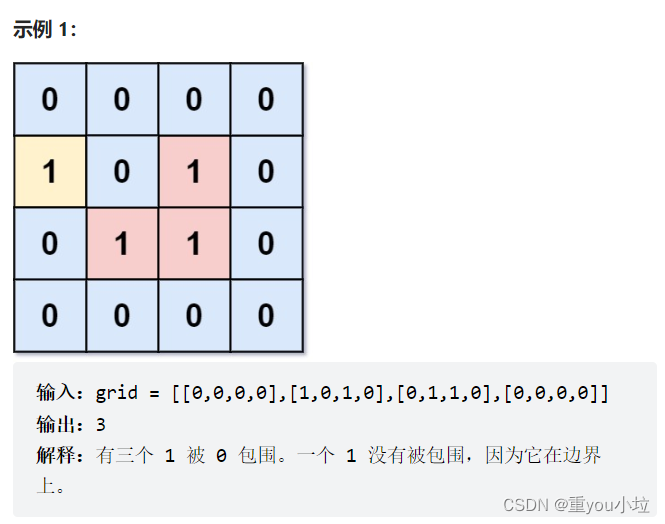

leetcode1020. 飞地的数量(中等)

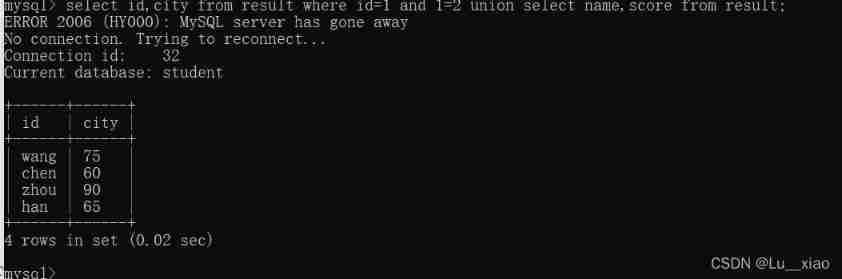

Database basics exercise part 2

Proteus -- Serial Communication parity flag mode

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

随机推荐

Supporting title of the book from 0 to 1: ctfer's growth road (Zhou Geng)

First knowledge of OpenGL es learning (1)

Configure raspberry pie access network

微信脑力比拼答题小程序_支持流量主带最新题库文件

Huawei equipment configuration ospf-bgp linkage

Three methods of adding color to latex text

Interface automation test framework: pytest+allure+excel

GET 和 POST 请求类型的区别

Do you really know the use of idea?

NFT on fingertips | evaluate ambire on G2, and have the opportunity to obtain limited edition collections

树莓派串口登录与SSH登录方法

[daily question] 729 My schedule I

【每日一题】729. 我的日程安排表 I

UDP攻击是什么意思?UDP攻击防范措施

The first Baidu push plug-in of dream weaving fully automatic collection Optimization SEO collection module

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

ROS2安装及基础知识介绍

Leetcode 78: subset

数据仓库建设思维导图

软件测试外包到底要不要去?三年真实外包感受告诉你