
TensorRT model integration summary

2022-07-04 11:20:00 【Ascetic monk runnercai】

When I build deep learning models, I sometimes need to integrate the model into C++. Here is an overview, so I don't forget it next time:

There are many open-source deep learning libraries to compare; the ones I use are PyTorch and TensorRT.

PyTorch: developed by the Torch7 team. As the name suggests, the difference from Torch is that PyTorch uses Python as its development language. "Python first" means it is a Python-first deep learning framework: besides strong GPU acceleration, it supports dynamic neural networks, which many mainstream frameworks such as TensorFlow did not support at the time. PyTorch can be seen as NumPy with GPU support, and also as a powerful deep neural network library with automatic differentiation. Besides Facebook, it has been adopted by Twitter, CMU, Salesforce and others.

Original article: https://blog.csdn.net/broadview2006/article/details/80133047 (Why use PyTorch)

PyTorch Chinese learning site:

https://www.pytorch123.com/

TensorRT: NVIDIA's high-performance deep learning inference optimizer, commonly used for applications based on ResNet-50 and BERT. Using TensorRT together with TensorFlow 2.0, developers can speed up inference by up to 7x.

Once the PyTorch environment is configured, download the yolov5 source code from GitHub, set up its environment on your machine and run it to produce the weight file yolov5s.pt. Export that file to an ONNX model, then use TensorRT to build an optimized engine from the ONNX model. With that engine, the yolov5 model is deployed through TensorRT.
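The conversion chain above can be driven from two command-line tools. A minimal sketch, assuming a yolov5 checkout from v6.0 or later (whose `export.py` accepts `--include onnx` and writes `yolov5s.onnx` next to the weights) and NVIDIA's `trtexec` on the PATH; the helper `build_pipeline_commands` is hypothetical and only assembles the commands, it does not run them.

```python
from __future__ import annotations

import shlex
from pathlib import Path


def build_pipeline_commands(weights: str, img_size: int = 640) -> list[list[str]]:
    """Assemble the two conversion steps: .pt -> .onnx -> TensorRT engine."""
    onnx_path = Path(weights).with_suffix(".onnx")
    engine_path = Path(weights).with_suffix(".engine")
    # Step 1: yolov5's export script (assumed to be run from the yolov5 repo root)
    export_cmd = [
        "python", "export.py",
        "--weights", weights,
        "--img", str(img_size),
        "--include", "onnx",
    ]
    # Step 2: trtexec parses the ONNX model, builds an engine and serializes it to a file
    trt_cmd = [
        "trtexec",
        f"--onnx={onnx_path}",
        f"--saveEngine={engine_path}",
    ]
    return [export_cmd, trt_cmd]


if __name__ == "__main__":
    for cmd in build_pipeline_commands("yolov5s.pt"):
        print(shlex.join(cmd))
```

Keeping the commands as argument lists means they can be passed straight to `subprocess.run` later without shell quoting issues.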

Generally speaking, deep learning work is divided into two parts, training and deployment:

Training phase: the first and most important thing is to build the network structure and prepare the dataset, then train with one of the frameworks. Training should include validation and test passes.

Deployment phase: latency is a very important concern, and TensorRT is optimized precisely for the deployment side. TensorRT currently supports most mainstream deep learning applications, with CNNs (convolutional neural networks) as its strongest area, but TensorRT 3.0 already added an RNN API, which means RNN inference can also be done in TensorRT.
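Since latency is the number that matters at deployment time, it helps to measure it the same way every time. A minimal stdlib sketch: `measure_latency` is a hypothetical helper that takes any zero-argument inference callable, discards a few warm-up runs, and reports mean, median and p99 in milliseconds. It assumes the callable blocks until inference finishes; with asynchronous GPU execution you would have to synchronize inside it (e.g. `torch.cuda.synchronize()`), otherwise only launch time is measured.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(infer: Callable[[], object], warmup: int = 10, iters: int = 100) -> Dict[str, float]:
    """Time `infer` over `iters` runs after `warmup` untimed runs; values in milliseconds."""
    if iters < 1:
        raise ValueError("iters must be at least 1")
    for _ in range(warmup):  # first calls often include allocation / lazy-init cost
        infer()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        infer()  # assumed to block until the result is ready
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # simple nearest-rank style p99 on the sorted samples
    p99_index = min(len(samples) - 1, int(0.99 * len(samples)))
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p99_ms": samples[p99_index],
    }
```

Reporting a tail percentile next to the mean matters for deployment, because occasional slow calls are what users notice.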

Original article: https://blog.csdn.net/Tosonw/article/details/92643231

TensorRT and PyTorch models

(YOLO is used as the case study here.)

YOLOv5 is an algorithm; its default implementation uses the PyTorch framework:

https://github.com/ultralytics/yolov5

There are two ways to export the model.

The first is to export the weights as a .wts file, then convert that into an engine model, and finally rename the .engine file to .trt (a serialized engine is just a binary file, so the extension is only a naming convention).

Reference: "yolov5 deployment: TensorRT inference acceleration in seven steps"

GitHub repository: https://github.com/wang-xinyu/tensorrtx
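For the first route, tensorrtx's `gen_wts.py` writes the PyTorch `state_dict` as a plain-text `.wts` file: a tensor count on the first line, then one line per tensor with its name, element count and each float32 value as big-endian hex. A minimal stdlib sketch of that layout, with plain Python lists standing in for tensors; `dump_wts`/`parse_wts` are hypothetical names, and the layout should be checked against the version of the repository you actually use.

```python
import struct
from typing import Dict, List


def dump_wts(weights: Dict[str, List[float]]) -> str:
    """Serialize named float lists into the .wts text layout."""
    lines = [str(len(weights))]  # first line: number of tensors
    for name, values in weights.items():
        # each value -> float32 -> 4 bytes big-endian -> 8 hex digits
        hex_values = [struct.pack(">f", float(v)).hex() for v in values]
        lines.append(" ".join([name, str(len(values)), *hex_values]))
    return "\n".join(lines) + "\n"


def parse_wts(text: str) -> Dict[str, List[float]]:
    """Inverse of dump_wts: rebuild the name -> values mapping."""
    lines = text.splitlines()
    count = int(lines[0])
    result: Dict[str, List[float]] = {}
    for line in lines[1:1 + count]:
        parts = line.split()  # split() also tolerates repeated spaces between fields
        name, n = parts[0], int(parts[1])
        result[name] = [struct.unpack(">f", bytes.fromhex(h))[0] for h in parts[2:2 + n]]
    return result
```

In the real script the values come from `model.state_dict()` on the loaded yolov5 model; values not exactly representable in float32 come back rounded.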

The second is to export an intermediate ONNX model and then convert it to .trt.

https://github.com/onnx/onnx-tensorrt

NVIDIA TensorRT is a C++ library for high-performance inference on NVIDIA GPUs.

TensorRT focuses on running a trained network on the GPU quickly and efficiently, and produces a highly optimized runtime engine.

TensorRT provides C++ and Python APIs; a network can be defined through the API, or a predefined model can be loaded through a parser.

TensorRT provides a runtime that runs on every generation of NVIDIA GPU since Kepler.

Original article: https://blog.csdn.net/Tosonw/article/details/92643231

Preface:

The TensorRT workflow has 5 stages: creating the network, building the inference engine, serializing the engine, deserializing the engine, and executing inference with the engine.
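The five stages map onto the TensorRT Python API roughly as follows. This is a sketch written against the TensorRT 8.x Python API, not the author's code: the network is created by parsing the ONNX file from the second export route, stages 2 and 3 happen in one `build_serialized_network` call, and stage 5 is only prepared, because actually running the context also requires allocating GPU input/output buffers (for example with pycuda), which is omitted here. The function names are hypothetical; `tensorrt` is imported inside them so the module loads even where TensorRT isn't installed.

```python
def build_engine_file(onnx_path: str, engine_path: str) -> None:
    """Stages 1-3: create the network, build the engine, serialize it to disk."""
    import tensorrt as trt  # lazy import: only needed when actually building

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    # Stage 1: create the network; here it is populated by the ONNX parser
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)
    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
            raise RuntimeError("ONNX parsing failed:\n" + "\n".join(errors))
    # Stages 2 + 3: build the optimized engine and get it back already serialized
    config = builder.create_builder_config()
    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("engine build failed")
    with open(engine_path, "wb") as f:
        f.write(serialized)


def load_engine(engine_path: str):
    """Stage 4, plus the start of stage 5: deserialize and create an execution context."""
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    runtime = trt.Runtime(logger)
    with open(engine_path, "rb") as f:
        engine = runtime.deserialize_cuda_engine(f.read())
    if engine is None:
        raise RuntimeError("engine deserialization failed")
    context = engine.create_execution_context()
    # The runtime is returned too so it stays alive as long as the engine
    return runtime, engine, context
```

Older releases (TensorRT 7) split stages 2 and 3 into `build_cuda_engine` followed by `engine.serialize()`; the C++ API follows the same stage order.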

1) Creating the network, building the inference engine, serializing the engine

These three steps are usually run separately once the model has been trained, and the serialized engine is saved to a file so they don't have to be repeated for every inference run. One thing to note: the engine is tied to the GPU, so build it on the same computer that will run inference.
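Because a serialized engine is tied to the GPU it was built on (and, by default, to the TensorRT version), one simple safeguard is to put both into the engine's file name, so an engine built on one machine is not silently loaded on another. A hypothetical naming helper, stdlib only:

```python
import re
from pathlib import Path


def engine_cache_path(model_name: str, gpu_name: str, trt_version: str,
                      cache_dir: str = "engines") -> Path:
    """Name an engine file after the model, the GPU and the TensorRT version that built it."""
    # Replace runs of characters that are awkward in file names with "_"
    safe_gpu = re.sub(r"[^A-Za-z0-9.-]+", "_", gpu_name).strip("_")
    return Path(cache_dir) / f"{model_name}_{safe_gpu}_trt{trt_version}.engine"
```

The GPU name can be read with `nvidia-smi --query-gpu=name --format=csv,noheader` and the TensorRT version from `tensorrt.__version__`; if the file for the current combination does not exist yet, build and serialize the engine once, otherwise just deserialize it.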

2) TensorRT deployment

TensorRT developer manual (Chinese)

TensorRT: a professional introduction

TensorRT tutorial

边栏推荐

- Locust learning record I

- Fundamentals of database operation

- thread

- Getting started with window functions

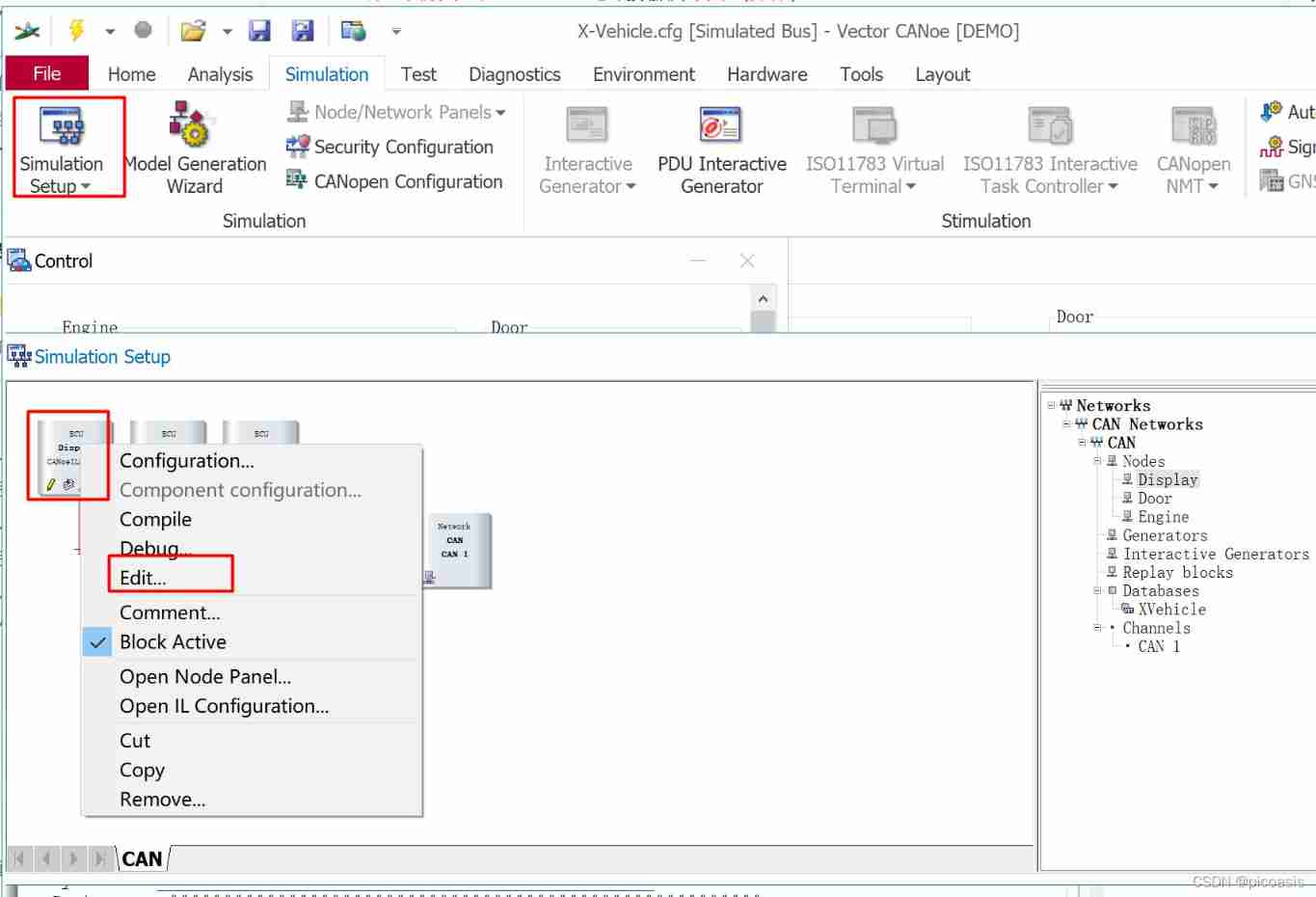

- Canoe-the second simulation project-xvehicle-1 bus database design (idea)

- Four sorts: bubble, select, insert, count

- QQ group administrators

- LVS+Keepalived实现四层负载及高可用

- 本地Mysql忘记密码的修改方法(windows)[通俗易懂]

- Simple understanding of string

猜你喜欢

Attributes and methods in math library

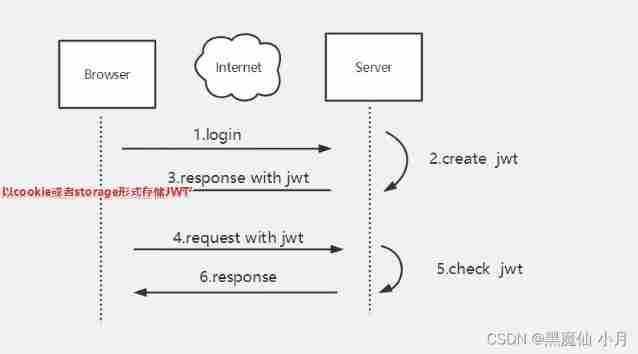

Simple understanding of seesion, cookies, tokens

Oracle11g | getting started with database. It's enough to read this 10000 word analysis

Take advantage of the world's sleeping gap to improve and surpass yourself -- get up early

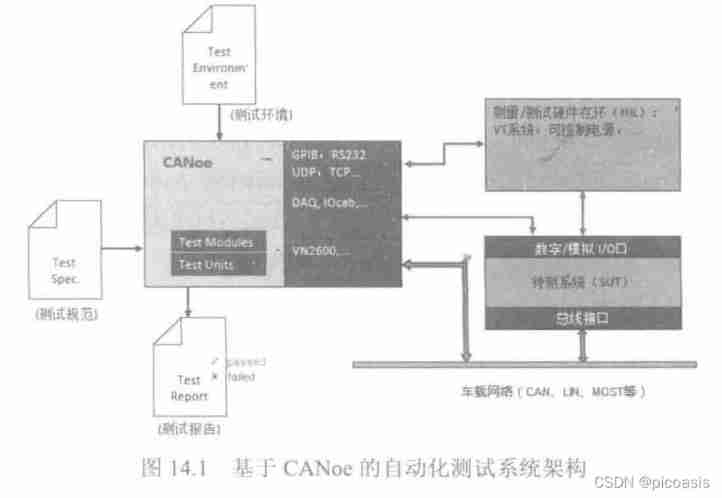

Introduction to canoe automatic test system

Elevator dispatching (pairing project) ④

Canoe the second simulation engineering xvehicle 3 CAPL programming (operation)

os. Path built-in module

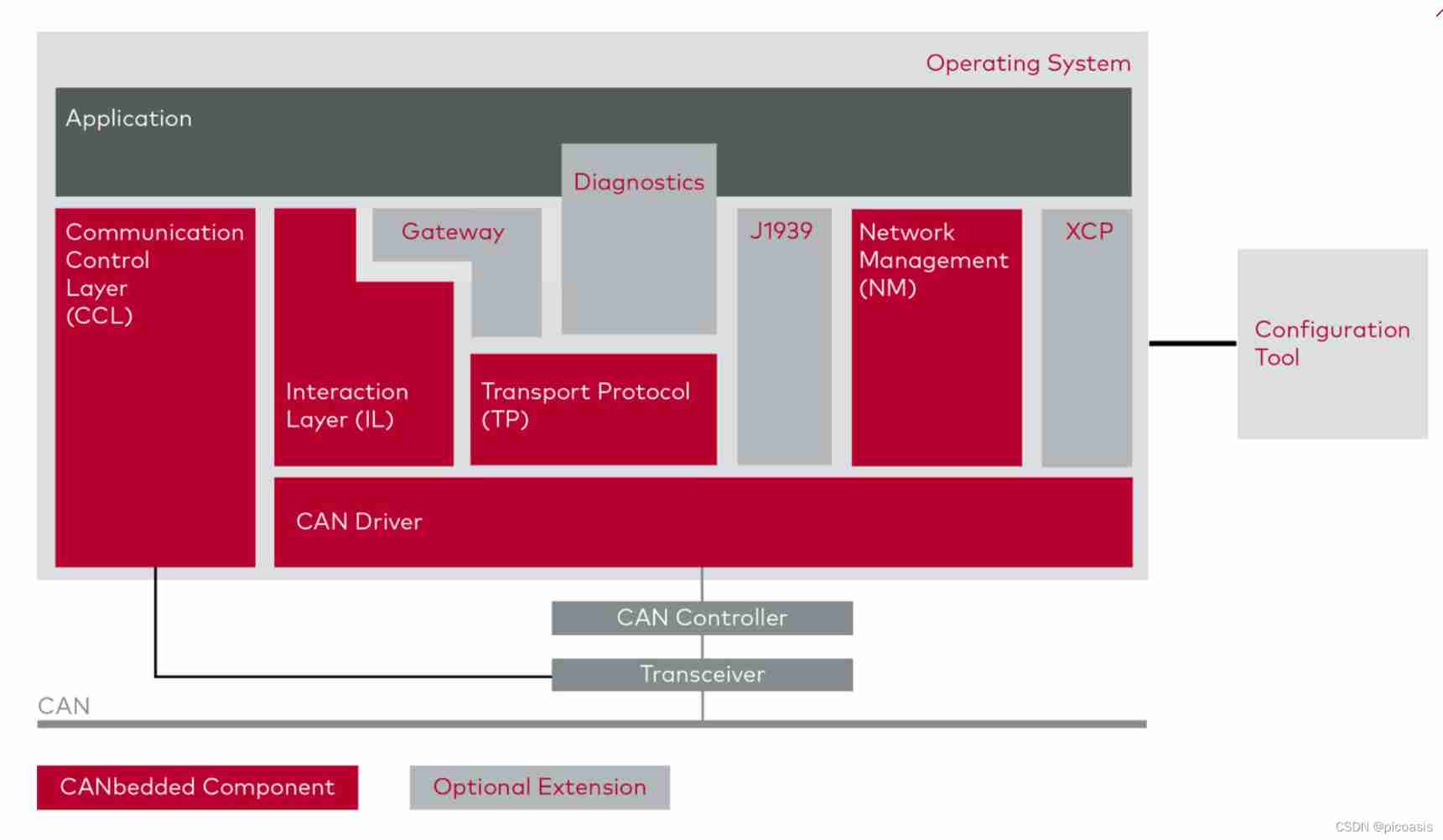

Function introduction of canbedded component

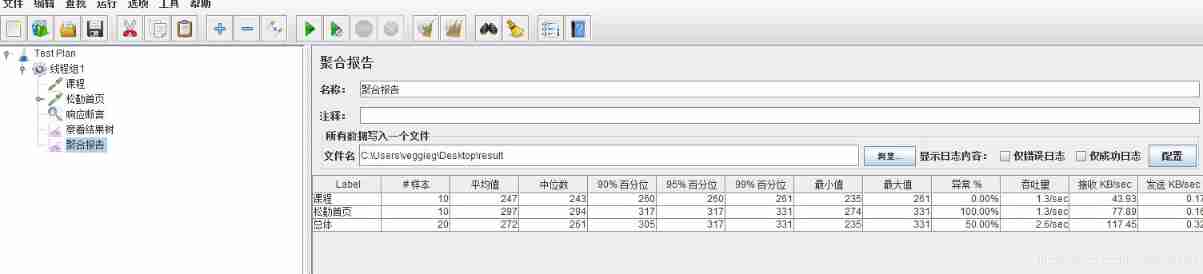

JMeter Foundation

随机推荐

MBG combat zero basis

QQ group collection

Safety testing aspects

JMeter assembly point technology and logic controller

Usage of case when then else end statement

Canoe - the second simulation engineering - xvehicle - 2 panel design (operation)

Canoe: the difference between environment variables and system variables

Configure SSH key to realize login free

Canoe - description of common database attributes

Number and math classes

Some summaries of the 21st postgraduate entrance examination 823 of network security major of Shanghai Jiaotong University and ideas on how to prepare for the 22nd postgraduate entrance examination pr

51 data analysis post

Oracle11g | getting started with database. It's enough to read this 10000 word analysis

2021 annual summary - it seems that I have done everything except studying hard

Video analysis

re. Sub() usage

Heartbeat error attempted replay attack

Take advantage of the world's sleeping gap to improve and surpass yourself -- get up early

Aike AI frontier promotion (2.14)

Summary of automated testing framework