Train on 100 Pictures for One Hour and Restyle Photos at Will (Demo at the End) | SIGGRAPH 2021

2022-07-06 16:54:00 [ByteDance Technology]

There is now a new technique for turning photos into stylized portraits.

Take a photo of an ordinary person:

It can automatically become a character from an animated movie, with big eyes and smooth-looking skin, while keeping many of the original person's features:

It can also take on the style of a martial-arts game. The women get sharp chins and the men get elegant hair, as if they were about to set off and cultivate immortality:

Or it can become a figure in an oil painting. The facial contours stay full, while the light, shadow, lines, and texture convey a Renaissance temperament:

More unusual styles are no problem either. For example, start with these two photos of people:

They can become hand-drawn portraits in which every detail, from eyelashes and lips to hair, is rendered with strikingly realistic strokes:

They can become sculptures that look like photos taken in a museum:

Or sketches, as if you had walked into a classroom at an academy of fine arts. Even the openwork earrings of the person on the right are depicted accurately:

Or dolls, with a strong three-dimensional feel, like the polished product shots of an online store:

Men or women, young or old, and whatever their skin color, hairstyle, or facial features, the results are very good:

The model behind these transformations is named AgileGAN. It was developed jointly by ByteDance's overseas technical team and Nanyang Technological University in Singapore, and it has been accepted to SIGGRAPH 2021, the top conference in computer graphics.

Does generating such beautiful, high-definition images require piling up massive amounts of data and compute?

No.

To train a new style, you only need a training set of about 100 images of men and women in that style. About an hour of training on a single NVIDIA Tesla V100 gives you a model that generates 1024×1024 high-definition images.

The model also enables rich photo-editing features. For example, start with this original photo:

You can change the lighting of the generated image, so even a photo taken against the light can be rescued:

You can even change the viewing angle of the generated image:

The R&D team also plans to bring the technology to products such as Douyin and Feishu (Lark), so you may soon get a more interesting interactive experience in these apps.

Better still, AgileGAN has already released a demo (links at the end of the article), so you can try it on photos of yourself, your family and friends, or your idols.

What kind of GAN can "draw" results this realistic?

The model that produces these effects has two parts:

The front end is a hierarchical variational autoencoder (hVAE) that maps input images into the latent space of StyleGAN2. The back end is a stylized generator that decodes the latent code.

Both parts are built on a pretrained StyleGAN2, a GAN model that can generate all kinds of faces.

Faces generated by StyleGAN2

StyleGAN2 has two latent spaces for image generation. One is the Z space, which follows a standard Gaussian distribution. The other is the W space, obtained from Z through a series of nonlinear mappings. W is disentangled, but its distribution is very complex. In practice, researchers who use StyleGAN2 to reconstruct a user-supplied image usually choose the W space.
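The Z/W distinction can be made concrete with a toy sketch of a StyleGAN-style mapping network in plain Python. It assumes the usual StyleGAN recipe: pixel-normalize z, then pass it through a stack of fully connected layers with leaky ReLU (StyleGAN2 uses 8 layers of width 512). Here the width is shrunk to 16, the weights are random rather than trained, and details such as equalized learning rate are omitted. The helper names are hypothetical and are not StyleGAN2's actual API.

```python
import math
import random

def pixel_norm(z, eps=1e-8):
    """Rescale z so its mean squared value is 1, as StyleGAN does before mapping."""
    mean_sq = sum(v * v for v in z) / len(z)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [v * scale for v in z]

def leaky_relu(x, slope=0.2):
    return x if x >= 0 else slope * x

def dense(x, weight, bias):
    """Fully connected layer; weight is a list of rows, one row per output unit."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weight, bias)]

def make_mapping_network(dim, num_layers, seed=0):
    """Random (untrained) weights, standing in for a pretrained mapping network."""
    rng = random.Random(seed)
    layers = []
    for _ in range(num_layers):
        weight = [[rng.gauss(0.0, 1.0 / math.sqrt(dim)) for _ in range(dim)] for _ in range(dim)]
        layers.append((weight, [0.0] * dim))
    return layers

def mapping_network(z, layers):
    """Map a Gaussian latent z (Z space) to an intermediate latent w (W space)."""
    h = pixel_norm(z)
    for weight, bias in layers:
        h = [leaky_relu(v) for v in dense(h, weight, bias)]
    return h

rng = random.Random(42)
dim = 16  # StyleGAN2 uses 512; 16 keeps the toy fast
z = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # z ~ N(0, I): easy to sample and to regularize toward
w = mapping_network(z, make_mapping_network(dim, num_layers=8))
print(len(w))  # 16
```

Because w comes out of a stack of nonlinear layers, there is no simple closed-form density for W. That is why constraining an encoder's output to W is harder than constraining it to the Gaussian Z.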

This time, however, the team found that if the latent codes that images are mapped to follow the Gaussian distribution of StyleGAN2's original latent space, images generated in the various styles contain less noise and look better.

To achieve this, the team abandoned the commonly used W space in favor of the Z space with its standard Gaussian distribution, and added a hierarchical dimension to it so that it can express more complex images.
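One way to read "adding a hierarchical dimension" to Z is to give each of the generator's style inputs its own independent Gaussian vector, instead of sharing one z across all layers. The sketch below assumes that reading, and its function names are hypothetical. The layer count uses the standard StyleGAN relation of two style inputs per resolution from 4×4 upward, which gives 18 inputs at 1024×1024.

```python
import math
import random

def num_style_inputs(resolution):
    """Per-layer style inputs of a StyleGAN/StyleGAN2 generator: 2 * log2(resolution) - 2."""
    log2 = int(math.log2(resolution))
    if 2 ** log2 != resolution or resolution < 4:
        raise ValueError("resolution must be a power of two >= 4")
    return 2 * log2 - 2

def sample_hierarchical_z(resolution, dim, seed=None):
    """Assumed hierarchical latent: one independent N(0, I) vector per style input."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)]
            for _ in range(num_style_inputs(resolution))]

z_hier = sample_hierarchical_z(1024, dim=512, seed=0)
print(len(z_hier), len(z_hier[0]))  # 18 512
```

Each level still has a standard Gaussian prior, so the "stay Gaussian" goal above still holds, while the per-layer vectors give the encoder more freedom to describe coarse structure and fine texture separately.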

Next, they used a variational formulation to model this distribution and trained the front-end hierarchical variational autoencoder, with the pretrained StyleGAN2 generator serving as its back end (decoder).
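The "variational" part can be sketched with the two standard VAE ingredients. The first is the reparameterization trick, which samples a latent from the encoder's predicted mean and log-variance. The second is the closed-form KL divergence, which pulls each level of the hierarchical latent toward the standard Gaussian prior. This is a generic sketch, not AgileGAN's training code. The three-level encoder output below is made-up data, and the reconstruction loss that would pass z through the pretrained StyleGAN2 generator is not shown.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I); in an autodiff framework, gradients flow to mu and logvar."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))

def hierarchical_kl(levels):
    """Sum the KL term over every level of a hierarchical latent: one (mu, logvar) pair per level."""
    return sum(kl_to_standard_normal(mu, lv) for mu, lv in levels)

# Hypothetical encoder output: 3 levels, 4 dims per level
levels = [
    ([0.0] * 4, [0.0] * 4),               # exactly the prior: KL = 0
    ([0.5, -0.5, 0.0, 1.0], [0.0] * 4),   # shifted mean
    ([0.0] * 4, [-1.0] * 4),              # shrunk variance
]
rng = random.Random(0)
z_levels = [reparameterize(mu, lv, rng) for mu, lv in levels]
print(hierarchical_kl(levels))
```

In a full training loop, this KL term would be added to a reconstruction loss (with some weighting, which is an assumption here). Together they keep the encoded latents close to the Gaussian distribution the generator was trained on.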

To better preserve users' attributes, they also proposed an attribute-aware generator. Starting from the pretrained StyleGAN2 model, they fine-tuned the generator to produce different styles such as cartoons and hand-drawn portraits. They also used a dynamic early-stopping strategy to avoid overfitting the small training datasets.
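The article does not say which signal the dynamic stopping strategy monitors. As a hedged illustration only, the sketch below shows generic patience-based early stopping over a sequence of validation scores (lower is better). The monitored metric, `patience`, and `min_delta` are assumptions, not AgileGAN's published settings.

```python
def early_stop(val_scores, patience=3, min_delta=0.0):
    """Scan per-checkpoint validation scores (lower is better).

    Stop once the score has failed to improve by more than min_delta for
    `patience` consecutive checks. Returns (best_step, stop_step).
    """
    best_score = float("inf")
    best_step = -1
    bad_checks = 0
    for step, score in enumerate(val_scores):
        if score < best_score - min_delta:
            best_score, best_step, bad_checks = score, step, 0
        else:
            bad_checks += 1
            if bad_checks >= patience:
                return best_step, step
    return best_step, len(val_scores) - 1

# Hypothetical validation curve from fine-tuning on a ~100-image style set
scores = [1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.6]
print(early_stop(scores, patience=3))  # (2, 5)
```

The idea is to keep the checkpoint from `best_step` rather than the last one. With very small style datasets, the generator can start memorizing the training images, and continuing to train would make it worse.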

The two training stages are independent of each other and can be run in parallel.

The structure of the hierarchical variational autoencoder introduced here is shown in the figure:

Finally, how can AgileGAN's generation quality be measured?

The team evaluated it from two perspectives. The first is whether the results look good, that is, whether they match users' preferences. The second is whether they look authentic, that is, whether the generated images truly resemble the target art style.

To judge whether the results look good, the team recruited 100 members of the public and showed each of them 10 randomly selected images. The images were produced by AgileGAN and by several well-known earlier models, and participants were asked to pick the best one.

As the middle column of the table below shows, 57.9% of the votes went to AgileGAN.

To check whether the results look authentic, they computed the Fréchet Inception Distance (FID), a common metric for scoring GANs, for several GAN models. FID compares the distribution of neural-network features of the target art-style images with that of the generated images. The lower the score, the more realistic the images. AgileGAN was again the model with the highest fidelity.
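FID fits a Gaussian to each set of features and computes the Fréchet distance between the two Gaussians: ||mu1 - mu2||^2 + Tr(S1 + S2 - 2(S1 S2)^(1/2)). The NumPy sketch below implements that formula. It leaves out the feature extractor (standard FID uses 2048-dimensional Inception-v3 pooling features), so the arrays in the usage lines are random stand-ins. To avoid a SciPy dependency, the matrix square root is handled through the identity Tr((S1 S2)^(1/2)) = Tr((S1^(1/2) S2 S1^(1/2))^(1/2)), which keeps every matrix symmetric.

```python
import numpy as np

def _sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # remove tiny negative eigenvalues from round-off
    return (vecs * np.sqrt(vals)) @ vecs.T

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    s1_half = _sqrtm_psd(sigma1)
    # S1^(1/2) S2 S1^(1/2) has the same eigenvalues as S1 S2 but is symmetric
    middle = s1_half @ sigma2 @ s1_half
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)

def fid_from_features(feats_a, feats_b):
    """FID between two (num_samples, feature_dim) arrays of network features."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    return frechet_distance(mu_a, cov_a, mu_b, cov_b)

rng = np.random.default_rng(0)
style_feats = rng.normal(size=(1000, 8))               # stand-in for art-style image features
generated_feats = rng.normal(loc=0.5, size=(1000, 8))  # stand-in for generated image features
print(fid_from_features(style_feats, generated_feats))  # about 2 (8 dims x 0.5**2), plus sampling noise
```

Identical feature distributions give an FID near 0. Any shift in the mean or mismatch in the covariance raises it, which is why a lower score means the generated images sit closer to the target style.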

Built by ByteDance's Intelligent Creation team in North America

The researchers behind this work come from ByteDance and Nanyang Technological University in Singapore. Some of the ByteDance researchers belong to the Intelligent Creation team based in Mountain View, North America, the technical team behind many popular special effects on Douyin and TikTok.

Developing AgileGAN took as long as eight months. The initial inspiration came from a set of paintings the team saw on social media, in which an artist had turned various portraits into cartoons. These works had the exaggerated features of cartoons, such as round heads, big eyes, and flowing hair, yet they also preserved the facial features of the original portraits: some subjects had high noses, others deep-set eyes.

The team realized that an algorithm producing a similar effect could give users a better, more interesting interactive experience.

So they tried SEAN, CycleGAN, MUNIT, 3D warping, and other approaches. For each idea they did extensive optimization and debugging, searching for the most advanced and practical solution in the industry and working through difficulties in the core effect. They finally chose to combine the ideas of Toonify and StyleGAN, identified some of that approach's core limitations, and found novel ways around them so that the model produced the best results. This August, AgileGAN will also be presented at SIGGRAPH 2021, the top conference in graphics.

Besides AgileGAN, members of ByteDance's Intelligent Creation team based in Mountain View and Los Angeles have also worked on a range of technologies related to 3D, virtual humans, character imagery, and image generation.

For example, turning into the Mona Lisa inside her frame:

Or putting a virtual wig on a person. If you look closely, you will see that these dynamic wigs are not only lifelike but also match the real lighting in the scene.

There is also a "Turn into a Beauty" effect that lets users see what they would look like as the opposite gender:

A change of look with the "Bald Challenge":

The "Dynamic Old Photos" effect makes the people in photos from years ago move again:

It can also make still pictures move, like the portraits on the walls of Hogwarts, and even have them dance along with a person's movements:

These effects will be brought to Douyin, TikTok, and other apps, giving users richer and more novel experiences.

Related links

Paper:

https://guoxiansong.github.io/homepage/paper/AgileGAN.pdf

Project page:

https://guoxiansong.github.io/homepage/agilegan.html

Online demo:

http://www.agilegan.com/

边栏推荐

- After the subscript is used to assign a value to the string type, the cout output variable is empty.

- Codeforces Round #771 (Div. 2)

- Conception du système de thermomètre numérique DS18B20

- LeetCode1556. Thousand separated number

- LeetCode 1552. Magnetic force between two balls

- ~Introduction to form 80

- LeetCode 1566. Repeat the pattern with length m at least k times

- 面试集锦库

- J'ai traversé le chemin le plus fou, le circuit cérébral d'un programmeur de saut d'octets

- ~71 abbreviation attribute of font

猜你喜欢

Business system compatible database oracle/postgresql (opengauss) /mysql Trivia

字节跳动技术新人培训全记录:校招萌新成长指南

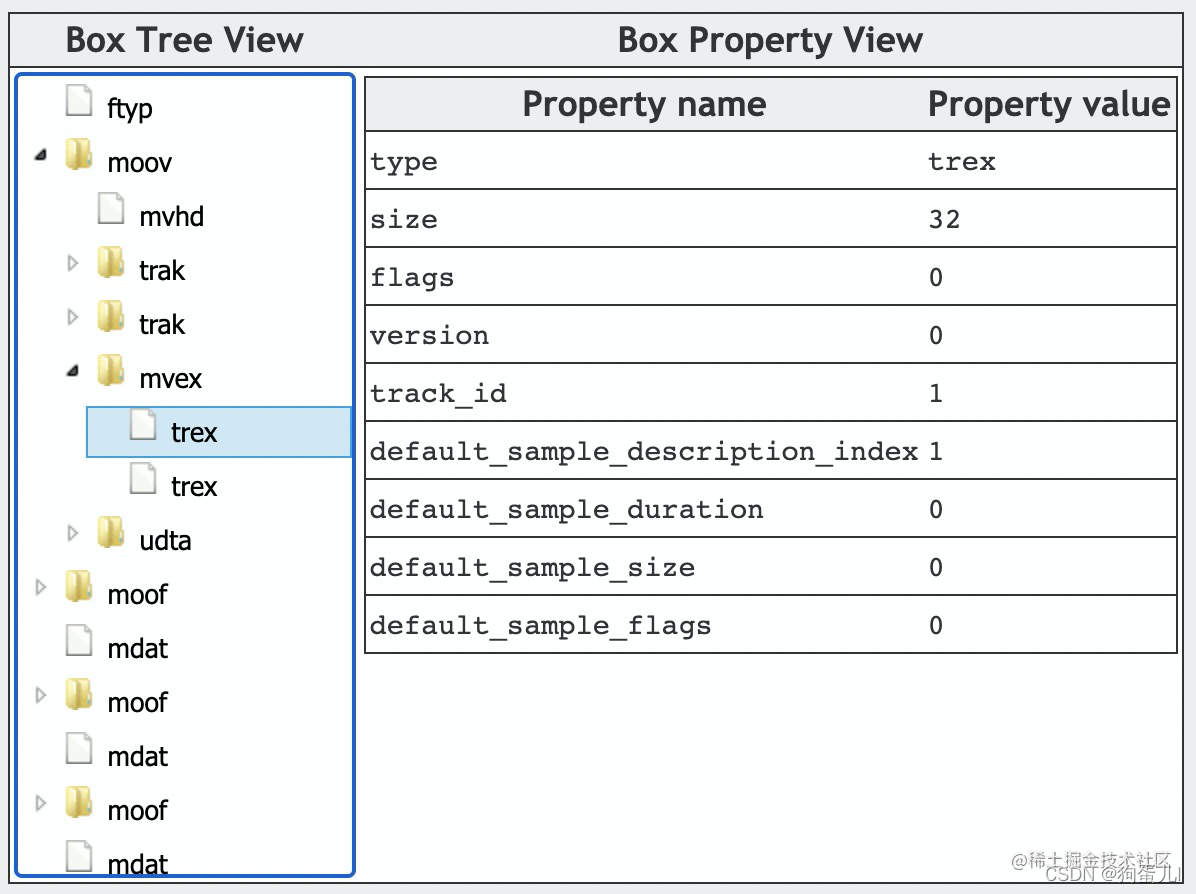

Mp4 format details

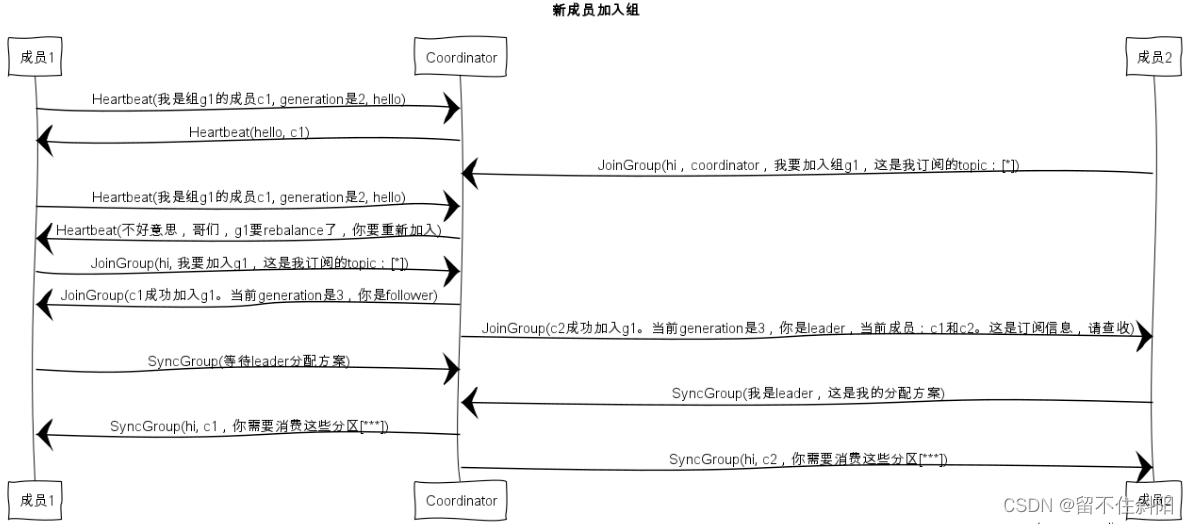

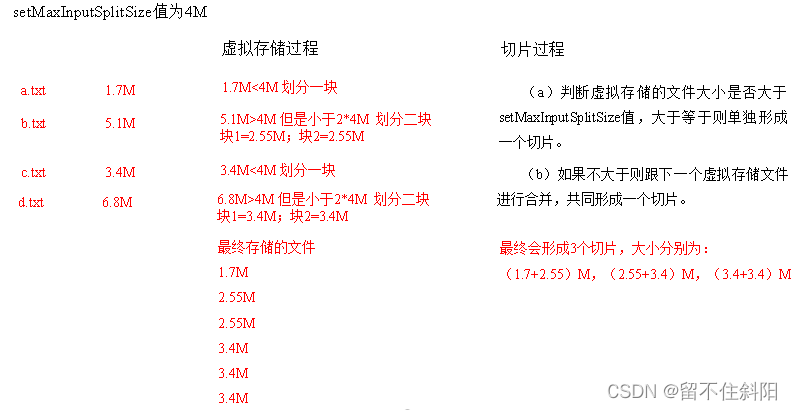

Chapter 6 rebalance details

~83 form introduction

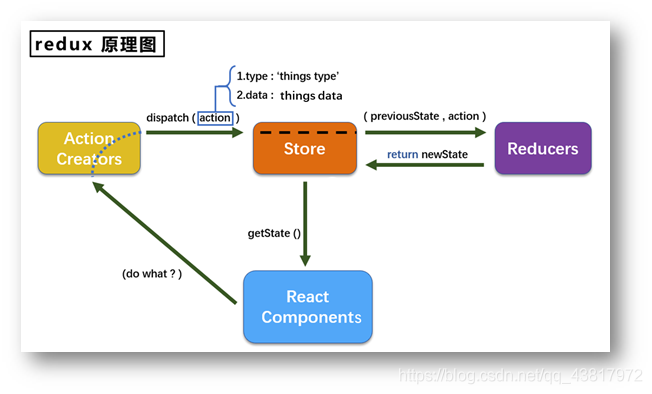

redux使用说明

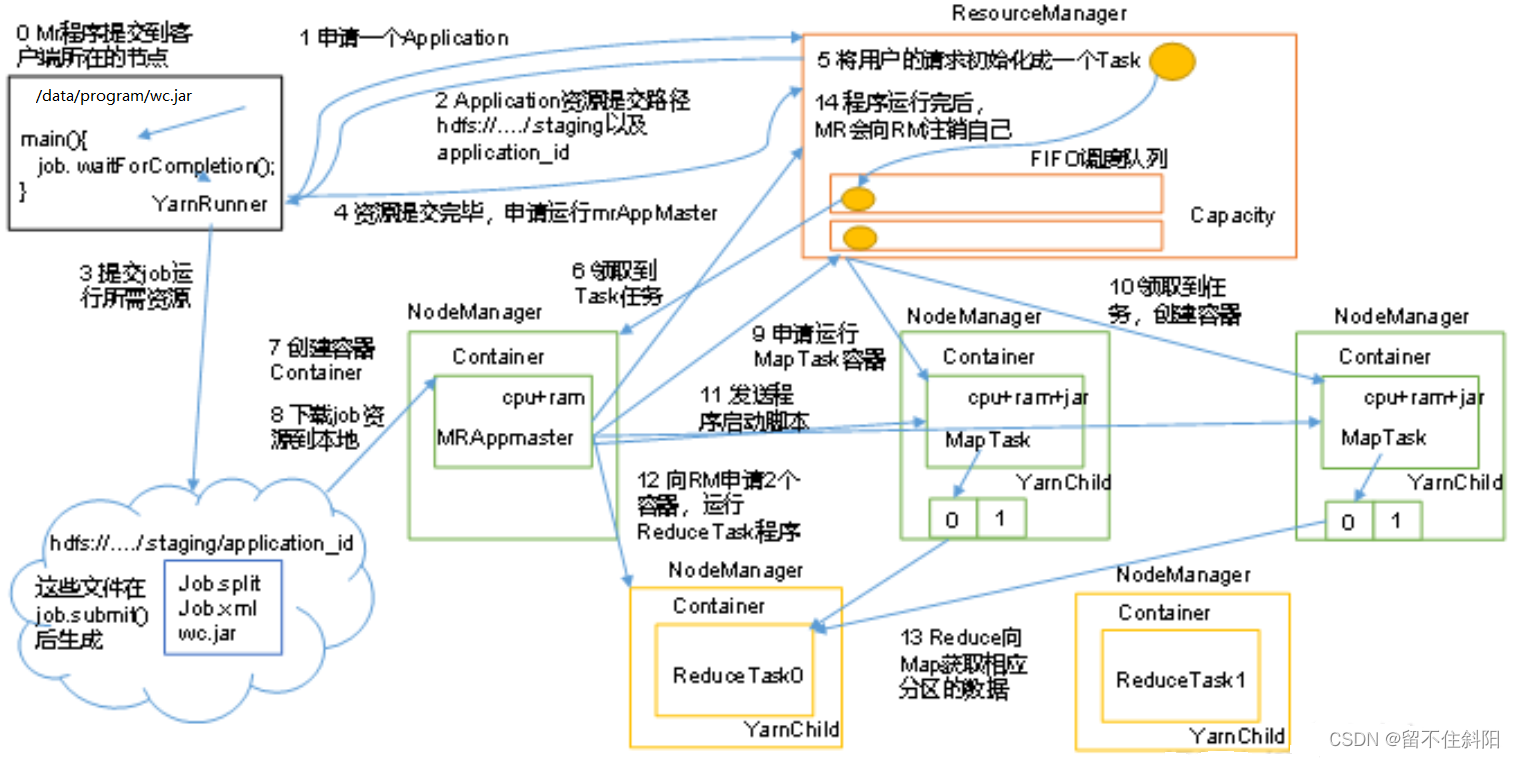

Chapter 5 yarn resource scheduler

~85 transition

LeetCode 1641. Count the number of Lexicographic vowel strings

Chapter III principles of MapReduce framework

随机推荐

7-4 harmonic average

J'ai traversé le chemin le plus fou, le circuit cérébral d'un programmeur de saut d'octets

FLV格式详解

这116名学生,用3天时间复刻了字节跳动内部真实技术项目

Fdog series (VI): use QT to communicate between the client and the client through the server (less information, recommended Collection)

SQL quick start

LeetCode 1545. Find the k-th bit in the nth binary string

Gridhome, a static site generator that novices must know

Shell_ 00_ First meeting shell

How to configure hosts when setting up Eureka

The 116 students spent three days reproducing the ByteDance internal real technology project

LeetCode 1020. Number of enclaves

Use JQ to realize the reverse selection of all and no selection at all - Feng Hao's blog

Restful style interface design

SQL快速入门

Tencent interview algorithm question

ffmpeg命令行使用

MP4格式详解

原型链继承

@RequestMapping、@GetMapping