Chapter 2 Shell operations of HDFS

2022-07-06 16:35:00 【Can't keep the setting sun】

2.1 Basic syntax

hadoop fs <specific command>

or

hdfs dfs <specific command>

2.2 Command reference

The available commands are as follows:

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] [-v] [-x] <path> ...]

[-cp [-f] [-p | -p[topax]] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] [-x] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] <src> <localdst>]

[-help [cmd ...]]

[-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-usage [cmd ...]]

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

2.3 Common commands

Note: If you are told that the root user has no write permission, switch to the hdfs user before running the command

2.3.1 -help

Shows help for a command

hadoop fs -help rm

or

hdfs dfs -help rm

Note: The hadoop and hdfs scripts are located in the bin directory under the Hadoop installation directory

2.3.2 -ls

Lists directory contents

hadoop fs -ls /

or

hdfs dfs -ls /

2.3.3 -mkdir

Creates a directory on HDFS

hadoop fs -mkdir -p /teaching/hdfs

or

hdfs dfs -mkdir -p /teaching/hdfs

2.3.4 -moveFromLocal

Moves (cuts and pastes) a file from the local file system to HDFS

touch test.txt

hadoop fs -moveFromLocal ./test.txt /teaching/hdfs

or

hdfs dfs -moveFromLocal ./test.txt /teaching/hdfs

Note: The command first copies the file and then deletes the local copy. If the hdfs user does not have permission to delete the local file, the copy to HDFS still succeeds, but the local file is not removed
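Because the local delete is the step that can fail, it can help to check for it before moving anything. The following is a minimal sketch, not part of Hadoop: `safe_move_from_local` and the `HDFS_CMD` variable are hypothetical names introduced here, and the check assumes that removing a local file requires write and execute permission on its parent directory.

```shell
# Hypothetical wrapper (not an HDFS command): run -moveFromLocal only when
# the local file can be deleted afterwards.
# HDFS_CMD is an assumption that lets the real command be swapped out.
HDFS_CMD="${HDFS_CMD:-hdfs}"

safe_move_from_local() {
  src="$1"
  dst="$2"
  parent="$(dirname -- "$src")"

  # The source must be an existing regular file.
  if [ ! -f "$src" ]; then
    echo "no such local file: $src" >&2
    return 1
  fi

  # Deleting a file needs write and execute permission on its directory.
  if [ ! -w "$parent" ] || [ ! -x "$parent" ]; then
    echo "cannot delete $src afterwards: no write access to $parent" >&2
    return 1
  fi

  "$HDFS_CMD" dfs -moveFromLocal "$src" "$dst"
}
```

Calling `safe_move_from_local ./test.txt /teaching/hdfs` would then run the same -moveFromLocal command as above, but only when the local delete is expected to succeed.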

2.3.5 -appendToFile

Appends a file to the end of an existing file

touch test2.txt

vi test2.txt

# Input

hello hdfs

hadoop fs -appendToFile test2.txt /teaching/hdfs/test.txt

or

hdfs dfs -appendToFile test2.txt /teaching/hdfs/test.txt

2.3.6 -cat

View file contents

hadoop fs -cat /teaching/hdfs/test.txt

or

hdfs dfs -cat /teaching/hdfs/test.txt

2.3.7 -tail

Shows the last 1 KB of a file

hadoop fs -tail /teaching/hdfs/test.txt

or

hdfs dfs -tail /teaching/hdfs/test.txt

2.3.8 -chgrp, -chmod, -chown

Changes the group, permissions, and owner of a file

- Change the group of the file /teaching/hdfs/tt.txt stored on HDFS. The default group is supergroup; the -R option applies the change recursively

$ hdfs dfs -ls /teaching/hdfs

-rw-r--r-- 3 hdfs supergroup 11 2019-08-12 14:40 /teaching/hdfs/tt.txt

$ hdfs dfs -chgrp lubin-group /teaching/hdfs/tt.txt

$ hdfs dfs -ls /teaching/hdfs

-rw-r--r-- 3 hdfs lubin-group 11 2019-08-12 14:40 /teaching/hdfs/tt.txt

- Change the permissions of the file /teaching/hdfs/tt.txt stored on HDFS

$ hdfs dfs -chmod 666 /teaching/hdfs/tt.txt

$ hdfs dfs -ls /teaching/hdfs

-rw-rw-rw- 3 hdfs lubin-group 11 2019-08-12 14:40 /teaching/hdfs/tt.txt

- Change the owner and group of the file /teaching/hdfs/tt.txt stored on HDFS; lubin1 is the user and lubin2 is the group

$ hdfs dfs -chown lubin1:lubin2 /teaching/hdfs/tt.txt

$ hdfs dfs -ls /teaching/hdfs/tt.txt

-rw-rw-rw- 3 lubin1 lubin2 11 2019-08-12 14:40 /teaching/hdfs/tt.txt
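The octal mode passed to -chmod maps digit by digit onto the permission string that -ls prints. As a small illustration (the function name `mode_to_string` is made up for this sketch and is not an HDFS command), the mapping can be written as:

```shell
# Convert a three-digit octal mode such as 666 into the rwx string
# shown by -ls (without the leading file-type character).
mode_to_string() {
  mode="$1"
  out=""
  for i in 1 2 3; do
    # Take the i-th digit: owner, group, others.
    digit="$(printf '%s' "$mode" | cut -c"$i")"
    case "$digit" in
      0) out="${out}---" ;;
      1) out="${out}--x" ;;
      2) out="${out}-w-" ;;
      3) out="${out}-wx" ;;
      4) out="${out}r--" ;;
      5) out="${out}r-x" ;;
      6) out="${out}rw-" ;;
      7) out="${out}rwx" ;;
      *) echo "invalid mode: $mode" >&2; return 1 ;;
    esac
  done
  printf '%s\n' "$out"
}

mode_to_string 666   # prints rw-rw-rw-
```

This matches the listings above: the file starts as rw-r--r-- (mode 644) and shows rw-rw-rw- after `-chmod 666`.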

2.3.9 -copyFromLocal

Copies files from the local file system to an HDFS path

hadoop fs -copyFromLocal README.txt /teaching/hdfs

or

hdfs dfs -copyFromLocal test3.txt /teaching/hdfs

2.3.10 -copyToLocal

Copies files from HDFS to the local file system

hadoop fs -copyToLocal /teaching/hdfs/test.txt /tmp

or

hdfs dfs -copyToLocal /teaching/hdfs/test.txt /tmp

2.3.11 -cp

Copies from one HDFS path to another HDFS path

hadoop fs -cp /teaching/hdfs/test3.txt /teaching/hdfs2

or

hdfs dfs -cp /teaching/hdfs/test3.txt /teaching/hdfs2

2.3.12 -mv

Moves files between HDFS directories

hadoop fs -mv /teaching/hdfs/tt.txt /teaching/hdfs2

or

hdfs dfs -mv /teaching/hdfs/tt.txt /teaching/hdfs2

2.3.13 -get

Equivalent to -copyToLocal; downloads files from HDFS to the local file system

hadoop fs -get /teaching/hdfs/test3.txt /tmp

or

hdfs dfs -get /teaching/hdfs/test3.txt /tmp

2.3.14 -getmerge

Merges and downloads multiple files (the contents of several files are concatenated into one local file), for example when the HDFS directory /aaa/ contains multiple files: log.1, log.2, log.3, …

hadoop fs -getmerge /teaching/hdfs/log*.txt /tmp/log.txt

or

hdfs dfs -getmerge /teaching/hdfs/log*.txt /tmp/log.txt

2.3.15 -put

Equivalent to -copyFromLocal

hadoop fs -put log1.txt /teaching/hdfs

or

hdfs dfs -put log1.txt /teaching/hdfs

2.3.16 -rm

Deletes files or directories

hadoop fs -rm /teaching/hdfs2/log1.txt

or

hdfs dfs -rm /teaching/hdfs2/log2.txt

Note: If the current user does not have permission to delete, run the command as the hdfs user: su hdfs -c "hadoop fs -rm /test/test.txt"

2.3.17 -rmdir

Deletes an empty directory

hadoop fs -rmdir /test

or

hdfs dfs -rmdir /test

Note: The command above can only delete empty directories; to delete a non-empty directory, use the -rm command with the -r option

2.3.18 -du

Reports the size of a directory

hadoop fs -du -s -h /teaching/hdfs

or

hdfs dfs -du -s -h /teaching/hdfs

58 174 /teaching/hdfs

Note: -s displays the total (summary) size; without -s, the size of each matching file is displayed;

-h formats sizes in a human-readable way rather than as a raw byte count;

The first column is the total size of the files in the directory, the second column is the total storage those files occupy on the cluster (which depends on the replication factor), and the third column is the directory that was queried

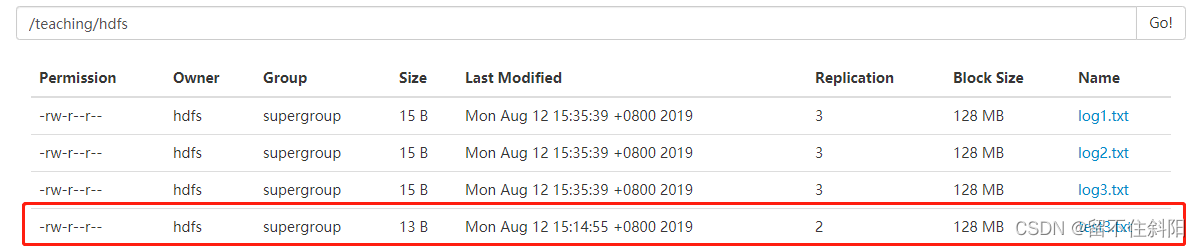
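The relationship between the two numeric columns can be checked with simple arithmetic. This sketch assumes every file under the directory uses the same replication factor (3, as in the -ls listings earlier); `size_with_replicas` is a hypothetical helper, not an HDFS command.

```shell
# size_with_replicas LOGICAL_BYTES REPLICATION
# Prints the bytes consumed on the cluster when every block is stored
# REPLICATION times (assumes a uniform replication factor).
size_with_replicas() {
  echo $(( $1 * $2 ))
}

size_with_replicas 58 3   # prints 174
```

With 58 bytes of file data and 3 replicas, the result is 174, which is the second column in the sample output above.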

2.3.19 -setrep

Sets the replication factor of a file in HDFS

hadoop fs -setrep 10 /teaching/hdfs/test.txt

or

hdfs dfs -setrep 2 /teaching/hdfs/test.txt

The replication factor you set is only recorded in the NameNode metadata; whether that many replicas actually exist depends on the number of DataNodes. Because this cluster has only 3 machines, there can be at most 3 replicas. If the factor is set to 10, the replica count reaches 10 only once the cluster has grown to 10 nodes.
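Put differently, the number of replicas that can actually exist is the smaller of the requested factor and the number of DataNodes. A minimal sketch under that assumption (at most one replica of a block per DataNode); `effective_replicas` is a hypothetical helper name, not an HDFS command:

```shell
# effective_replicas REQUESTED DATANODES
# Prints how many replicas can really be stored: the requested factor,
# capped by the number of DataNodes in the cluster.
effective_replicas() {
  requested="$1"
  datanodes="$2"
  if [ "$requested" -gt "$datanodes" ]; then
    echo "$datanodes"
  else
    echo "$requested"
  fi
}

effective_replicas 10 3    # prints 3
effective_replicas 10 10   # prints 10
```

The first call mirrors the 3-machine cluster described here; the second shows the count reaching 10 once 10 nodes are available.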

边栏推荐

猜你喜欢

QT按钮点击切换QLineEdit焦点(含代码)

1013. Divide the array into three parts equal to and

860. Lemonade change

业务系统兼容数据库Oracle/PostgreSQL(openGauss)/MySQL的琐事

软通乐学-js求字符串中字符串当中那个字符出现的次数多 -冯浩的博客

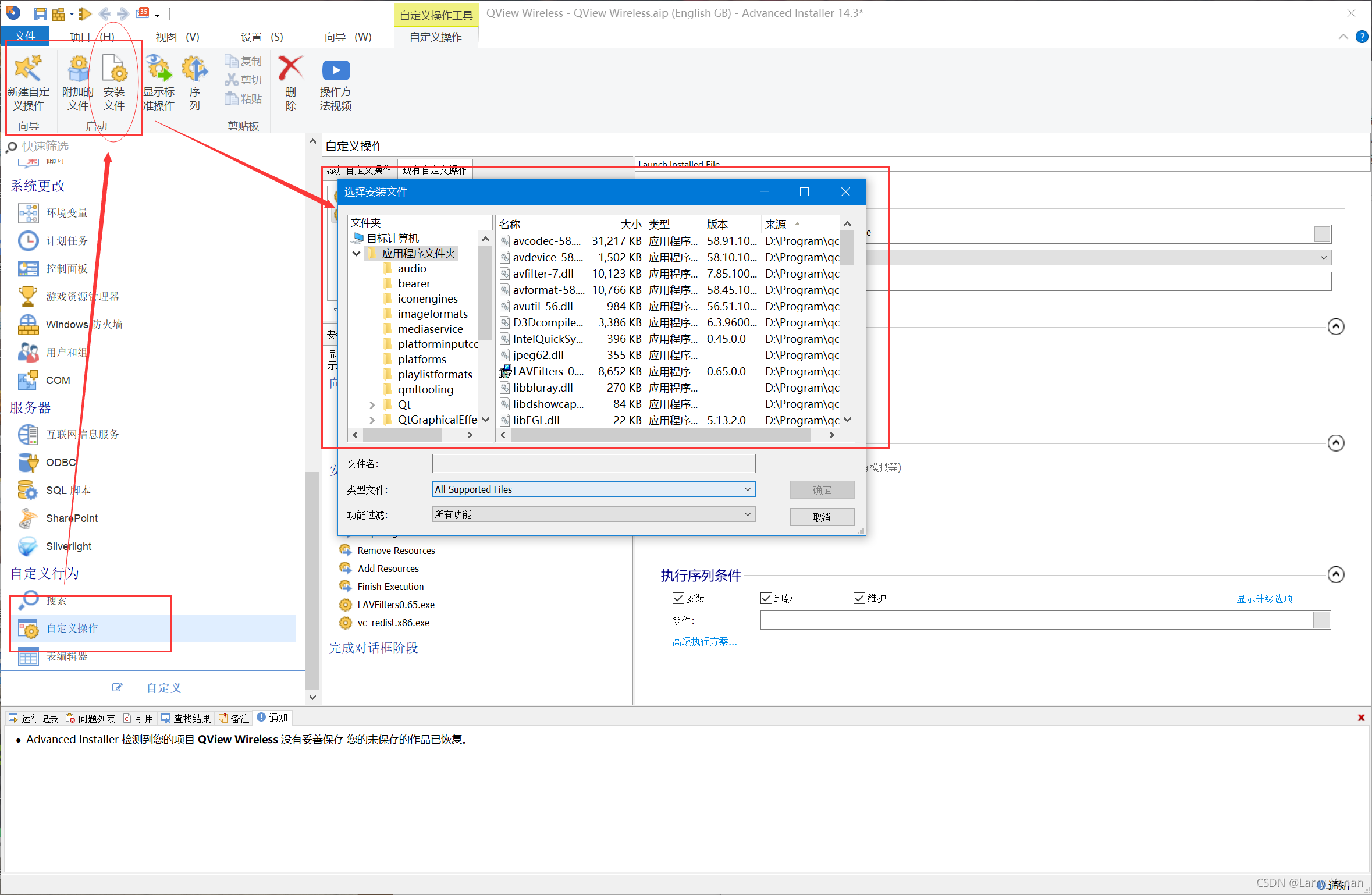

Advancedinstaller安装包自定义操作打开文件

Codeforces Round #799 (Div. 4)A~H

SF smart logistics Campus Technology Challenge (no T4)

Problem - 922D、Robot Vacuum Cleaner - Codeforces

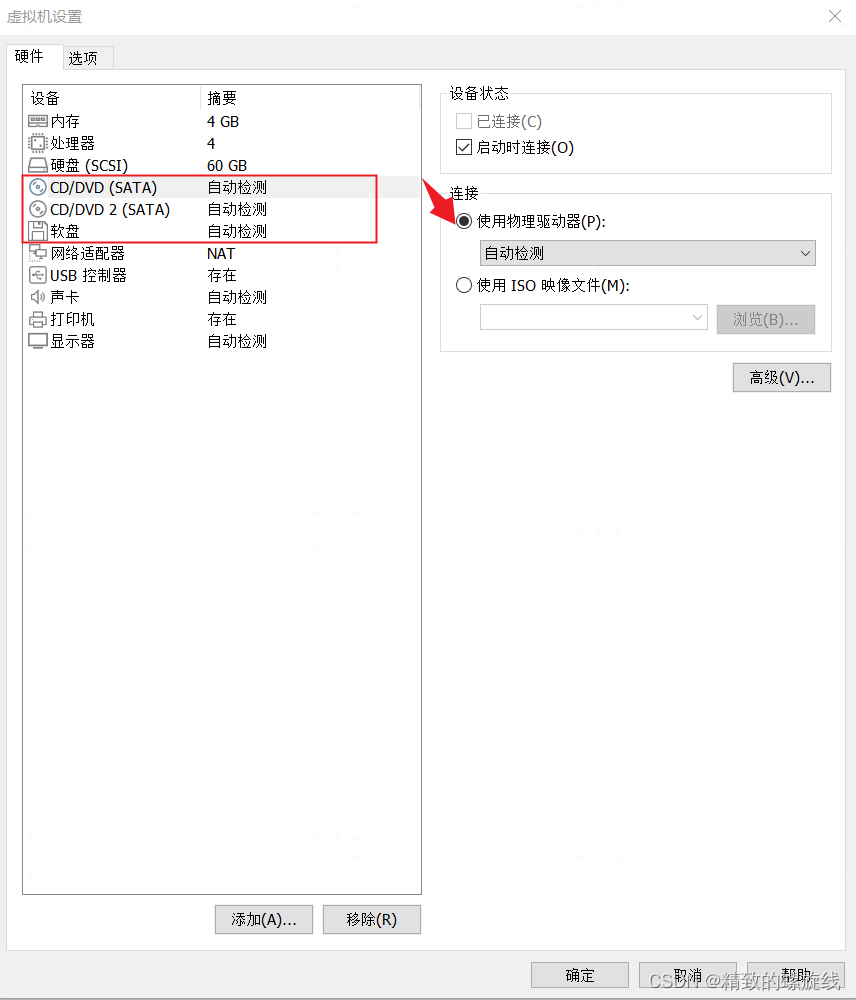

VMware Tools和open-vm-tools的安装与使用:解决虚拟机不全屏和无法传输文件的问题

随机推荐

Flag framework configures loguru logstore

QT simulates mouse events and realizes clicking, double clicking, moving and dragging

China tetrabutyl urea (TBU) market trend report, technical dynamic innovation and market forecast

Market trend report, technological innovation and market forecast of desktop electric tools in China

QT实现圆角窗口

Study notes of Tutu - process

QT按钮点击切换QLineEdit焦点(含代码)

Acwing: Game 58 of the week

解决Intel12代酷睿CPU单线程只给小核运行的问题

“鬼鬼祟祟的”新小行星将在本周安全掠过地球:如何观看

Flask框架配置loguru日志庫

VMware Tools和open-vm-tools的安装与使用:解决虚拟机不全屏和无法传输文件的问题

Summary of FTP function implemented by qnetworkaccessmanager

input 只能输入数字,限定输入

Openwrt build Hello ipk

1013. Divide the array into three parts equal to and

860. Lemonade change

Research Report on market supply and demand and strategy of Chinese table lamp industry

新手必会的静态站点生成器——Gridsome

简单尝试DeepFaceLab(DeepFake)的新AMP模型