
R language for text mining Part4 text classification

2022-07-06 21:14:00 【Full stack programmer webmaster】


Part4 Text classification

Part 3 covered text clustering and briefly noted how it differs from classification.

For classification we need three sets of data. The training set is text that has already been clearly assigned to categories. The test set can be replaced by the training set here. The prediction set is unclassified text, and it is where the classification method is finally applied.

1. Data preparation

Preparing the training set is a very tedious task. I have not found a labor-saving way to do it so far, so I sorted the texts manually according to their content. The data here comes from a brand's official Weibo account. Based on the content of the posts, I divided them into these categories: promotional information (promotion), product promotion (product), public welfare information (publicWelfare), "chicken soup" inspirational posts (life), fashion information (fashionNews), and movie entertainment (showbiz). Each category has 20-50 items. The number of texts per category in the training set is shown below. Category labels in Chinese would work just as well.

The training set is hlzj.train. It will also be used as the test set later.

The prediction set is hlzj from Part 2.

> hlzj.train <- read.csv("hlzj_train.csv", header=TRUE, stringsAsFactors=FALSE)

> length(hlzj.train)

[1] 2

> table(hlzj.train$type)

fashionNews life product

27 34 38

promotion publicWelfare showbiz

45 22 36

> length(hlzj)

[1] 1639

2. Word segmentation

The training set, test set, and prediction set all need word segmentation before they can be classified.

The details are not repeated here, because the process is the same as the one described in Part 2.

The training set after word segmentation is hlzjTrainTemp. The result of segmenting hlzj earlier is hlzjTemp.

Stop words are then removed from both hlzjTrainTemp and hlzjTemp.

> library(Rwordseg)

Loading required package: rJava

# Version: 0.2-1

> hlzjTrainTemp <- gsub("[0-90123456789 < > ~]","",hlzj.train$text)

> hlzjTrainTemp <-segmentCN(hlzjTrainTemp)

> hlzjTrainTemp2 <-lapply(hlzjTrainTemp,removeStopWords,stopwords)

> hlzjTemp2 <-lapply(hlzjTemp,removeStopWords,stopwords)
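The removeStopWords helper and the stopwords vector come from Part 2 and are not defined in this part. As a rough sketch of what such a helper might look like (the function body and the toy data below are assumptions, not the author's code), stop word removal on already segmented text can be written in base R like this:

```r
# Hypothetical stand-in for the removeStopWords helper from Part 2:
# keep only the tokens that are not in the stop word list.
removeStopWords <- function(x, stopwords) {
  x[!(x %in% stopwords)]
}

# Toy input (assumed): two already segmented documents and a tiny stop word list.
segmented <- list(c("this", "is", "a", "sale"), c("the", "new", "product"))
stopwords <- c("this", "is", "a", "the")

# Apply the helper to every document, as the article does with lapply().
cleaned <- lapply(segmented, removeStopWords, stopwords)
print(cleaned)
```

The extra argument to lapply() is passed through to the helper, which is why the calls above take the form lapply(x, removeStopWords, stopwords).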

3. Get the matrix

As discussed in Part 3, clustering starts by converting the text into a matrix, and classification needs the same step. The tm package is used for this. First, combine the stop-word-filtered training set and prediction set into hlzjAll, remembering that the first 202 entries (1:202) are the training set and the following 1639 entries (203:1841) are the prediction set. Then build a corpus from hlzjAll, obtain the document-term matrix, and convert it to an ordinary matrix.

> hlzjAll <- character(0)

> hlzjAll[1:202] <- hlzjTrainTemp2

> hlzjAll[203:1841] <- hlzjTemp2

> length(hlzjAll)

[1] 1841

> library(tm)

> corpusAll <-Corpus(VectorSource(hlzjAll))

> (hlzjAll.dtm <-DocumentTermMatrix(corpusAll,control=list(wordLengths = c(2,Inf))))

<<DocumentTermMatrix(documents: 1841, terms: 10973)>>

Non-/sparse entries: 33663/20167630

Sparsity : 100%

Maximal term length: 47

Weighting : term frequency (tf)

> dtmAll_matrix <-as.matrix(hlzjAll.dtm)
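To make the shape of a document-term matrix concrete, here is a minimal base R sketch that builds one by hand from tokenized documents. It is only an illustration on assumed toy data. The article itself relies on DocumentTermMatrix() from tm, which also drops terms shorter than two characters through the wordLengths setting.

```r
# Toy tokenized documents (assumed data, not from the article).
docs <- list(
  c("sale", "discount", "sale"),
  c("new", "product", "discount")
)

# Vocabulary: every distinct term, sorted for a stable column order.
terms <- sort(unique(unlist(docs)))

# One row per document, one column per term; each cell holds a term count.
dtm <- t(sapply(docs, function(tokens) {
  as.integer(table(factor(tokens, levels = terms)))
}))
colnames(dtm) <- terms
print(dtm)
```

Each row is one document and each column is one term, which is the layout that knn() expects for its training and test matrices.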

4. Classification

The kNN algorithm (k-nearest neighbors) is used here. It is provided by the class package.

The first 202 rows of the matrix are the training set and already have categories. The following 1639 rows are unclassified. We use the training set as the basis for classification and then predict a category for each of the remaining rows.

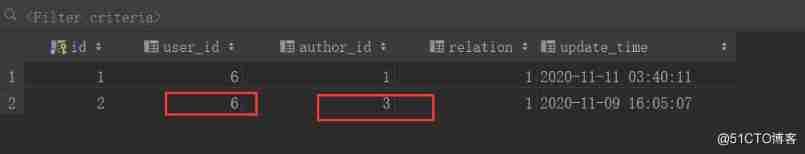

Put the classification results together with the original Weibo posts and view them with fix(). You can then see the category assigned to each post, and the effect is quite clear.

> rownames(dtmAll_matrix)[1:202] <-hlzj.train$type

> rownames(dtmAll_matrix)[203:1841] <- c("")

> train <- dtmAll_matrix[1:202,]

> predict <-dtmAll_matrix[203:1841,]

> trainClass <-as.factor(rownames(train))

> library(class)

> hlzj_knnClassify <-knn(train,predict,trainClass)

> length(hlzj_knnClassify)

[1] 1639

> hlzj_knnClassify[1:10]

[1] product product product promotion product fashionNews life

[8] product product fashionNews

Levels: fashionNews life product promotion publicWelfare showbiz

> table(hlzj_knnClassify)

hlzj_knnClassify

fashionNews life product promotion publicWelfare showbiz

40 869 88 535 28 79

> hlzj.knnResult <-list(type=hlzj_knnClassify,text=hlzj)

> hlzj.knnResult <-as.data.frame(hlzj.knnResult)

> fix(hlzj.knnResult)
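For readers who want to try the knn() call without the Weibo data, the following sketch runs the same kind of classification on a tiny made-up term-count matrix. The matrices, the two terms, and the labels are assumptions chosen for illustration only.

```r
library(class)  # provides knn(); ships with standard R installations

# Toy term-count matrix (assumed): rows are documents, columns are terms.
train <- matrix(c(3, 0,
                  2, 1,
                  0, 3,
                  1, 2),
                ncol = 2, byrow = TRUE,
                dimnames = list(NULL, c("discount", "movie")))
trainClass <- factor(c("promotion", "promotion", "showbiz", "showbiz"))

# Two unlabeled documents to classify.
unlabeled <- matrix(c(4, 0,
                      0, 4),
                    ncol = 2, byrow = TRUE,
                    dimnames = list(NULL, c("discount", "movie")))

# k = 1 is the default of knn(); it is spelled out here for clarity.
predicted <- knn(train, unlabeled, trainClass, k = 1)
print(predicted)
```

With k = 1 each unlabeled document simply takes the label of its closest training document, measured by Euclidean distance between the rows.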

kNN is one of the simplest classification algorithms. When I later tried a neural network (nnet()), a support vector machine (svm()), and a random forest (randomForest()), the computer ran out of memory. My machine has 4 GB of RAM, and memory monitoring showed usage peaking at 3.92 GB.

It looks like I need a more powerful computer ╮(╯▽╰)╭

With adequate hardware, classification with these algorithms should not be a problem. To view the documentation of any of them, type ?? followed by the function name.
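One possible way to ease the memory pressure, which the article does not try, is to drop terms that appear in very few documents before training, since the matrix above has 10973 columns and is almost entirely zeros. The sketch below shows the idea in base R on assumed toy data. The minDocs threshold is an arbitrary assumption, and whether pruning hurts accuracy would have to be checked on the real data. The tm package also offers removeSparseTerms() for a similar purpose.

```r
# Toy document-term count matrix (assumed data): 4 documents, 5 terms.
dtm <- matrix(c(1, 0, 2, 0, 0,
                1, 0, 0, 0, 1,
                0, 1, 1, 0, 0,
                2, 0, 1, 0, 0),
              nrow = 4, byrow = TRUE,
              dimnames = list(NULL, c("sale", "rare1", "new", "unused", "rare2")))

# Document frequency: in how many documents each term appears at least once.
docFreq <- colSums(dtm > 0)

# Keep terms that occur in at least minDocs documents (threshold is an assumption).
minDocs <- 2
dtmSmall <- dtm[, docFreq >= minDocs, drop = FALSE]

print(dim(dtm))       # dimensions before pruning
print(dim(dtmSmall))  # dimensions after pruning
```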

5. Classification effect

The testing process was not covered above. For this example, testing means passing train as both of the first two arguments of knn, because the training and test data sets are the same. Since every test document is then also present in the training data, the accuracy of the result can reach 100%, which says little about how well the classifier generalizes. When the training set is large enough, it can be split randomly 7:3 or 8:2 into two parts, with the larger part used for training and the smaller part for testing. I won't go into details here.
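The random 7:3 split described above can be sketched as follows. The toy features, the labels, and the seed are assumptions for illustration. With the real data, the first 202 rows of dtmAll_matrix and hlzj.train$type would take their place.

```r
library(class)  # provides knn()

set.seed(42)  # make the random split reproducible

# Toy labeled data (assumed): 20 documents, 2 term-count features, 2 classes.
features <- rbind(
  cbind(discount = rpois(10, 5), movie = rpois(10, 1)),
  cbind(discount = rpois(10, 1), movie = rpois(10, 5))
)
labels <- factor(rep(c("promotion", "showbiz"), each = 10))

# Randomly assign 70% of the rows to training and the rest to testing.
n <- nrow(features)
trainIdx <- sample(n, size = round(0.7 * n))

predicted <- knn(features[trainIdx, , drop = FALSE],
                 features[-trainIdx, , drop = FALSE],
                 labels[trainIdx])

# Accuracy: share of held-out documents whose predicted label matches the true one.
accuracy <- mean(predicted == labels[-trainIdx])
print(accuracy)
```

Because the held-out rows are never seen during training, the accuracy measured this way is a more honest estimate than the 100% obtained by testing on the training set itself.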

When the classification results are not satisfactory, the way to improve them is to enrich the training set and make the features of each category as distinct as possible. In practice this is tedious work, but it cannot be done carelessly.

Suggestions for improvement are welcome. If you reprint this article, please credit the source. Thank you!

Copyright notice: This is an original article by the blogger. Do not reprint without permission.

Publisher: Full stack programmer webmaster. If you reprint, please credit the source: https://javaforall.cn/117093.html Link to the original text: https://javaforall.cn

边栏推荐

- Manifest of SAP ui5 framework json

- In JS, string and array are converted to each other (I) -- the method of converting string into array

- 【mysql】游标的基本使用

- Opencv learning example code 3.2.3 image binarization

- 2017 8th Blue Bridge Cup group a provincial tournament

- Le langage r visualise les relations entre plus de deux variables de classification (catégories), crée des plots Mosaiques en utilisant la fonction Mosaic dans le paquet VCD, et visualise les relation

- Three schemes of SVM to realize multi classification

- Tips for web development: skillfully use ThreadLocal to avoid layer by layer value transmission

- 968 edit distance

- 正则表达式收集

猜你喜欢

967- letter combination of telephone number

防火墙基础之外网服务器区部署和双机热备

Study notes of grain Mall - phase I: Project Introduction

Variable star --- article module (1)

Reviewer dis's whole research direction is not just reviewing my manuscript. What should I do?

LLVM之父Chris Lattner:为什么我们要重建AI基础设施软件

Why do job hopping take more than promotion?

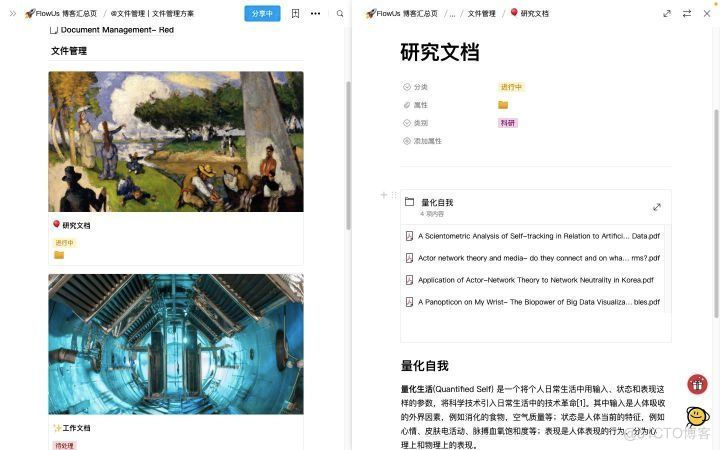

全网最全的新型数据库、多维表格平台盘点 Notion、FlowUs、Airtable、SeaTable、维格表 Vika、飞书多维表格、黑帕云、织信 Informat、语雀

【论文解读】用于白内障分级/分类的机器学习技术

1500萬員工輕松管理,雲原生數據庫GaussDB讓HR辦公更高效

随机推荐

硬件开发笔记(十): 硬件开发基本流程,制作一个USB转RS232的模块(九):创建CH340G/MAX232封装库sop-16并关联原理图元器件

拼多多败诉,砍价始终差0.9%一案宣判;微信内测同一手机号可注册两个账号功能;2022年度菲尔兹奖公布|极客头条

3D face reconstruction: from basic knowledge to recognition / reconstruction methods!

15million employees are easy to manage, and the cloud native database gaussdb makes HR office more efficient

Opencv learning example code 3.2.3 image binarization

【mysql】游标的基本使用

3D人脸重建:从基础知识到识别/重建方法!

js之遍历数组、字符串

Yyds dry inventory run kubeedge official example_ Counter demo counter

How do I remove duplicates from the list- How to remove duplicates from a list?

What's the best way to get TFS to output each project to its own directory?

PHP saves session data to MySQL database

OSPF多区域配置

js 根据汉字首字母排序(省份排序) 或 根据英文首字母排序——za排序 & az排序

C # use Oracle stored procedure to obtain result set instance

Ravendb starts -- document metadata

Seven original sins of embedded development

2022 fields Award Announced! The first Korean Xu Long'er was on the list, and four post-80s women won the prize. Ukrainian female mathematicians became the only two women to win the prize in history

Le langage r visualise les relations entre plus de deux variables de classification (catégories), crée des plots Mosaiques en utilisant la fonction Mosaic dans le paquet VCD, et visualise les relation

ICML 2022 | flowformer: task generic linear complexity transformer