ICML 2022 | Flowformer: a task-generic, linear-complexity Transformer

2022-07-06 21:04:00 【Zhiyuan community】

Paper title: Flowformer: Linearizing Transformers with Conservation Flows

Paper link: https://arxiv.org/pdf/2202.06258.pdf

Code link: https://github.com/thuml/Flowformer

This paper takes a close look at the quadratic complexity of the attention mechanism. By bringing the conservation principle from network flow into the design, it introduces a competition mechanism into the attention computation in a natural way, which effectively avoids the trivial-attention problem.

The proposed task-generic backbone network, Flowformer, achieves linear complexity while delivering excellent results on five major task families: long sequences, vision, natural language, time series, and reinforcement learning.

Flowformer shows good potential for long-sequence modeling applications such as protein structure prediction and long-text understanding. In addition, its design principle of "no task-specific inductive bias" offers useful guidance for research on general-purpose foundation architectures.

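The Flow-Attention pseudo-code was given as a figure that is not reproduced here; the official implementation is available at the code link above.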
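A minimal single-head sketch of Flow-Attention in plain Python follows. It is written from the paper's published description, not taken from the official repository, and it makes several assumptions: the non-negative feature map is a sigmoid, there are no learned projections or multiple heads, and the numerical-stability details of the official code (epsilon terms, clamping, length-dependent scaling) are omitted. The function and variable names are illustrative only.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(rows, weights):
    """Return sum_r weights[r] * rows[r] as a single vector."""
    width = len(rows[0])
    return [sum(w * row[c] for row, w in zip(rows, weights)) for c in range(width)]


def flow_attention(Q, K, V):
    """Q: n x d (sinks), K: m x d (sources), V: m x dv. Returns n x dv."""
    # Non-negative feature map phi; sigmoid is an assumption of this sketch.
    Q = [[sigmoid(x) for x in q] for q in Q]
    K = [[sigmoid(x) for x in k] for k in K]
    n, m, d, dv = len(Q), len(K), len(K[0]), len(V[0])

    # Incoming flow of each sink and outgoing flow of each source.
    k_sum = weighted_sum(K, [1.0] * m)
    q_sum = weighted_sum(Q, [1.0] * n)
    incoming = [dot(q, k_sum) for q in Q]
    outgoing = [dot(k, q_sum) for k in K]

    # Conservation: recompute each flow after normalizing the opposite side.
    k_norm = weighted_sum(K, [1.0 / o for o in outgoing])
    q_norm = weighted_sum(Q, [1.0 / i for i in incoming])
    conserved_in = [dot(q, k_norm) for q in Q]
    conserved_out = [dot(k, q_norm) for k in K]

    # Competition: sources share a softmax over their conserved outgoing flow.
    peak = max(conserved_out)
    exps = [math.exp(o - peak) for o in conserved_out]
    total = sum(exps)
    competition = [e / total for e in exps]

    # Aggregation: build the d x dv summary phi(K)^T V_hat once, in O(m * d * dv).
    kv = [[sum(competition[j] * K[j][a] * V[j][b] for j in range(m))
           for b in range(dv)] for a in range(d)]

    # Each sink reads the summary in O(d * dv); allocation gates the result.
    out = []
    for q, inc, cin in zip(Q, incoming, conserved_in):
        gate = sigmoid(cin)
        out.append([gate * dot(q, [kv[a][b] for a in range(d)]) / inc
                    for b in range(dv)])
    return out
```

The n x m attention matrix is never formed: sources are first compressed into a d x dv summary, and every sink then reads from that summary, so the cost grows linearly with both sequence lengths. For trained models, the official repository linked above remains the authoritative reference.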

Main experimental results: [figure not reproduced]

边栏推荐

- Reviewer dis's whole research direction is not just reviewing my manuscript. What should I do?

- Pat 1085 perfect sequence (25 points) perfect sequence

- Database - how to get familiar with hundreds of tables of the project -navicat these unique skills, have you got it? (exclusive experience)

- Huawei device command

- Mécanisme de fonctionnement et de mise à jour de [Widget Wechat]

- 2022 refrigeration and air conditioning equipment installation and repair examination contents and new version of refrigeration and air conditioning equipment installation and repair examination quest

- Common doubts about the introduction of APS by enterprises

- Interviewer: what is the internal implementation of ordered collection in redis?

- [wechat applet] operation mechanism and update mechanism

- Application layer of tcp/ip protocol cluster

猜你喜欢

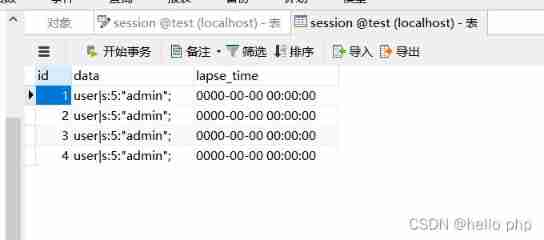

PHP saves session data to MySQL database

##无yum源安装spug监控

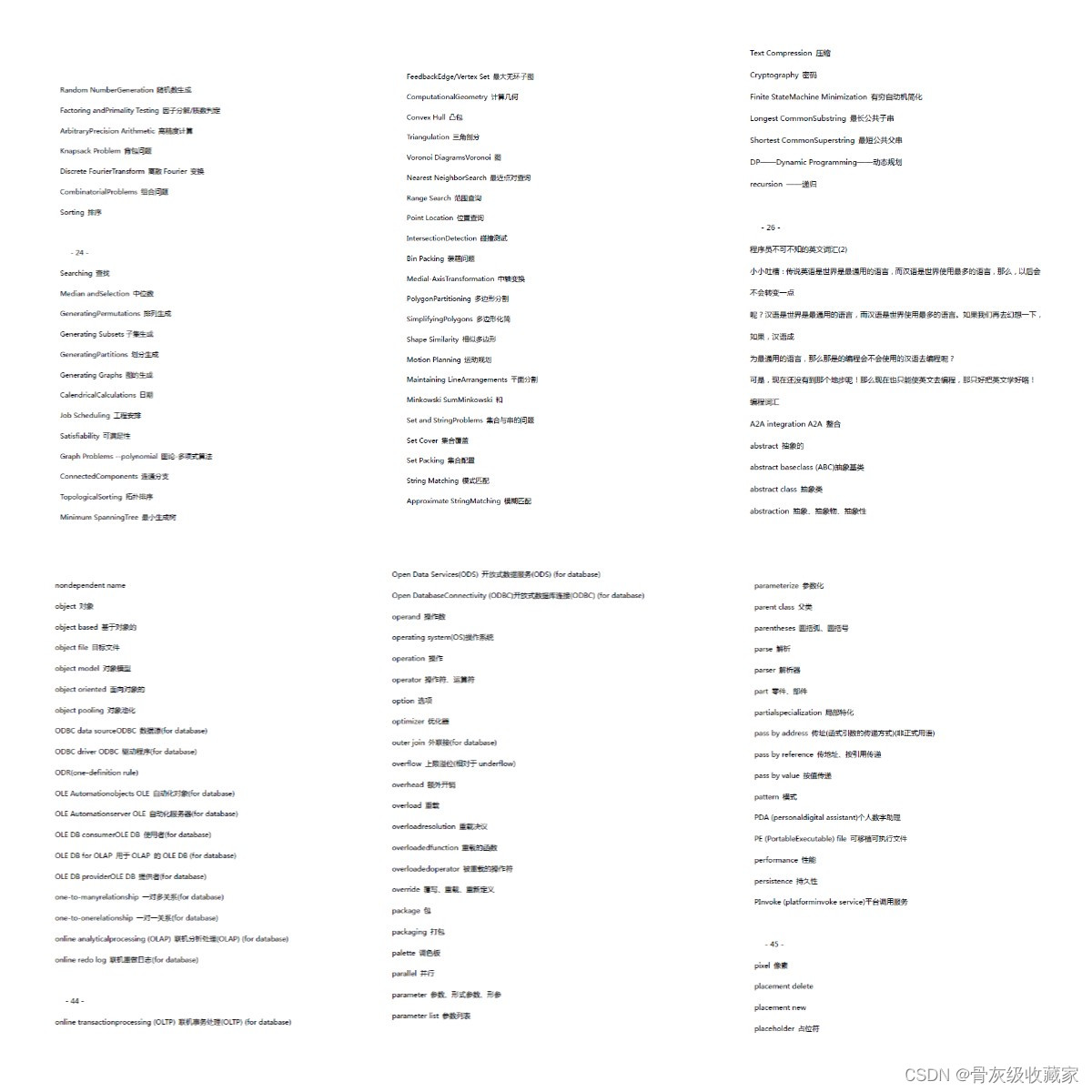

Common English vocabulary that every programmer must master (recommended Collection)

1500萬員工輕松管理,雲原生數據庫GaussDB讓HR辦公更高效

PHP online examination system version 4.0 source code computer + mobile terminal

Implementation of packaging video into MP4 format and storing it in TF Card

新型数据库、多维表格平台盘点 Notion、FlowUs、Airtable、SeaTable、维格表 Vika、飞书多维表格、黑帕云、织信 Informat、语雀

KDD 2022 | 通过知识增强的提示学习实现统一的对话式推荐

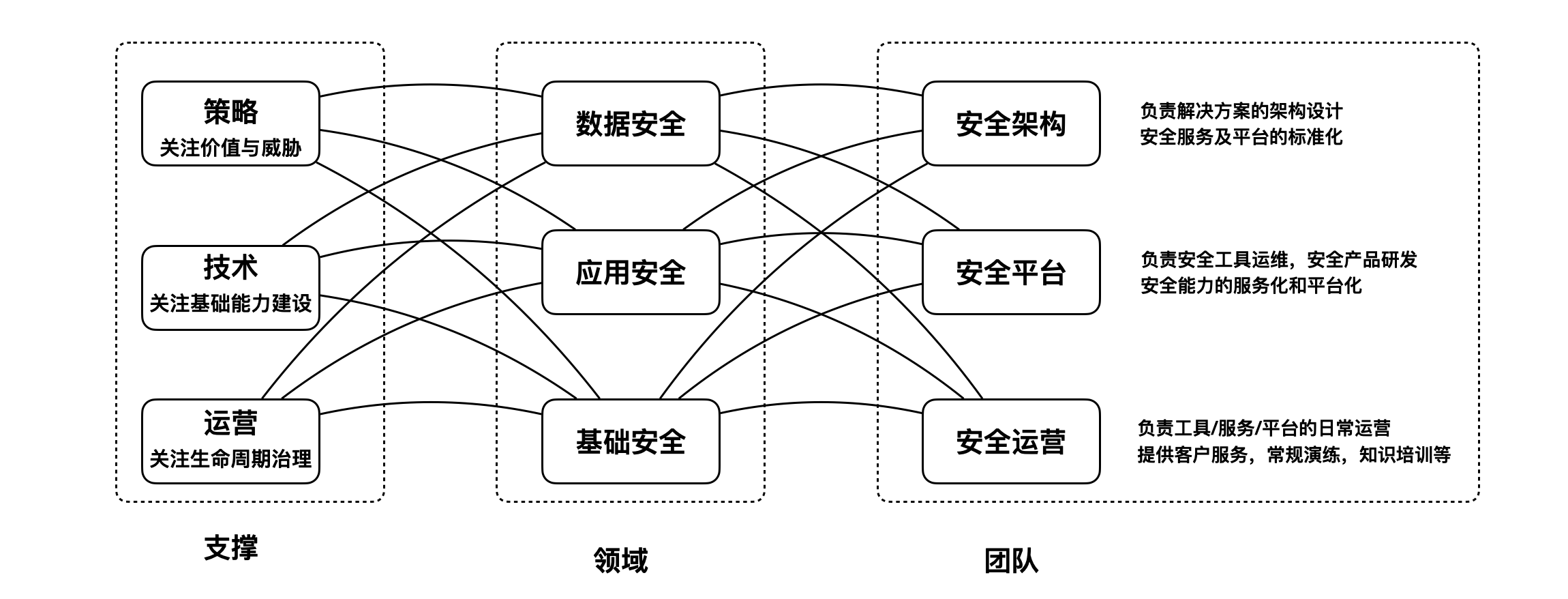

Design your security architecture OKR

No Yum source to install SPuG monitoring

随机推荐

请问sql group by 语句问题

C language operators

Mécanisme de fonctionnement et de mise à jour de [Widget Wechat]

【DSP】【第一篇】开始DSP学习

Summary of different configurations of PHP Xdebug 3 and xdebug2

【深度学习】PyTorch 1.12发布,正式支持苹果M1芯片GPU加速,修复众多Bug

2022 refrigeration and air conditioning equipment installation and repair examination contents and new version of refrigeration and air conditioning equipment installation and repair examination quest

It's almost the new year, and my heart is lazy

LLVM之父Chris Lattner:为什么我们要重建AI基础设施软件

How to turn a multi digit number into a digital list

强化学习-学习笔记5 | AlphaGo

User defined current limiting annotation

##无yum源安装spug监控

Laravel notes - add the function of locking accounts after 5 login failures in user-defined login (improve system security)

Pytest (3) - Test naming rules

The biggest pain point of traffic management - the resource utilization rate cannot go up

Manifest of SAP ui5 framework json

Common doubts about the introduction of APS by enterprises

2022 portal crane driver registration examination and portal crane driver examination materials

7、数据权限注解