Author: Yili

Knative is a technical framework for Serverless computing built on Kubernetes. It can greatly simplify the development and operations experience of Kubernetes applications. In March 2022 it became a CNCF incubating project. Knative consists of two main parts: Knative Serving, which supports online HTTP applications, and Knative Eventing, which supports CloudEvents and event-driven applications.

Knative can support a variety of containerized runtime environments. In this article, let's explore using WebAssembly as a new Serverless runtime.

From WASM and WASI to WAGI

WebAssembly (WASM for short) is an emerging W3C standard. It is a virtual instruction set architecture (virtual ISA) whose initial goal was to let programs written in C/C++ run safely and efficiently in the browser. In December 2019, the W3C officially announced that the WebAssembly core specification had become a Web standard, which greatly advanced the adoption of WASM. Today, WebAssembly is fully supported by mainstream browsers such as Google Chrome, Microsoft Edge, Apple Safari, and Mozilla Firefox. More importantly, as a safe, portable, and efficient virtual machine sandbox, WebAssembly can run applications safely anywhere, on any operating system and any CPU architecture.

In 2019, Mozilla proposed the WebAssembly System Interface (WASI), which offers a POSIX-like standard API to standardize how WebAssembly applications interact with system resources such as the file system and memory management. WASI has greatly expanded the application scenarios of WASM, allowing it to serve as a virtual machine for running many types of server-side applications. To further promote the WebAssembly ecosystem, Mozilla, Fastly, Intel, and Red Hat formed the Bytecode Alliance to jointly lead work on the WASI standard, WebAssembly runtimes, tools, and more. Microsoft, Google, Arm, and other companies have since become members as well.

WebAssembly is still evolving rapidly. In April 2022, the W3C released the first public working draft of WebAssembly 2.0, an important sign of its growing maturity.

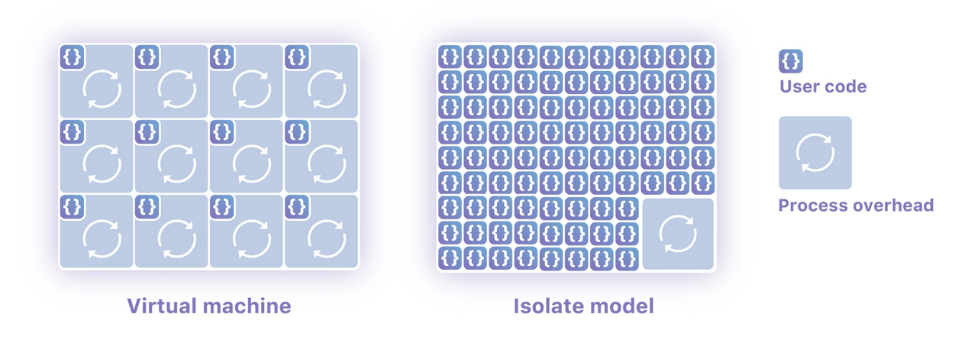

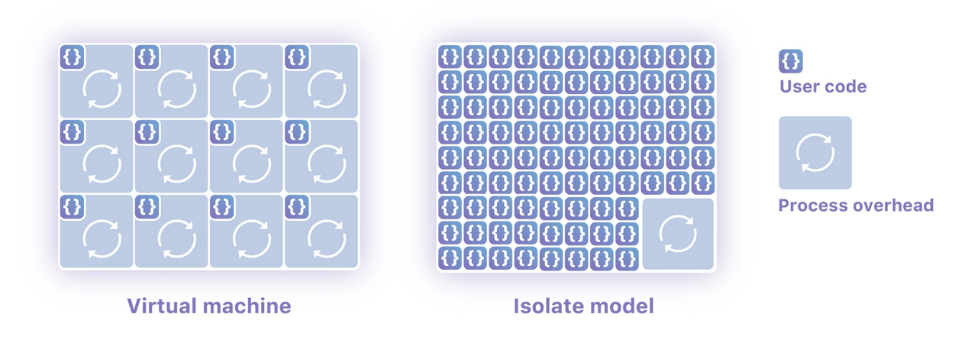

As an emerging back-end technology, WASM/WASI is natively secure, portable, high-performance, and lightweight, which makes it very well suited as a runtime environment for distributed applications. Unlike containers, which isolate workloads as separate operating system processes, WASM applications can be securely isolated within a single process, with millisecond-level cold start times and extremely low resource consumption, as the figure shows.

Image source: Cloudflare

At present, WASM/WASI is still in its infancy and has many technical limitations. For example, threads are not supported and low-level socket network applications cannot be supported, which greatly limits the server-side application scenarios of WASM. The community is exploring an application development model that fully fits WASM, one that plays to its strengths and avoids its weaknesses. Engineers at Microsoft Deislabs, inspired by the history of HTTP server development, proposed the WAGI (WebAssembly Gateway Interface) project [1]. That's right: the concept of WAGI comes from an ancient legend of the Internet, CGI.

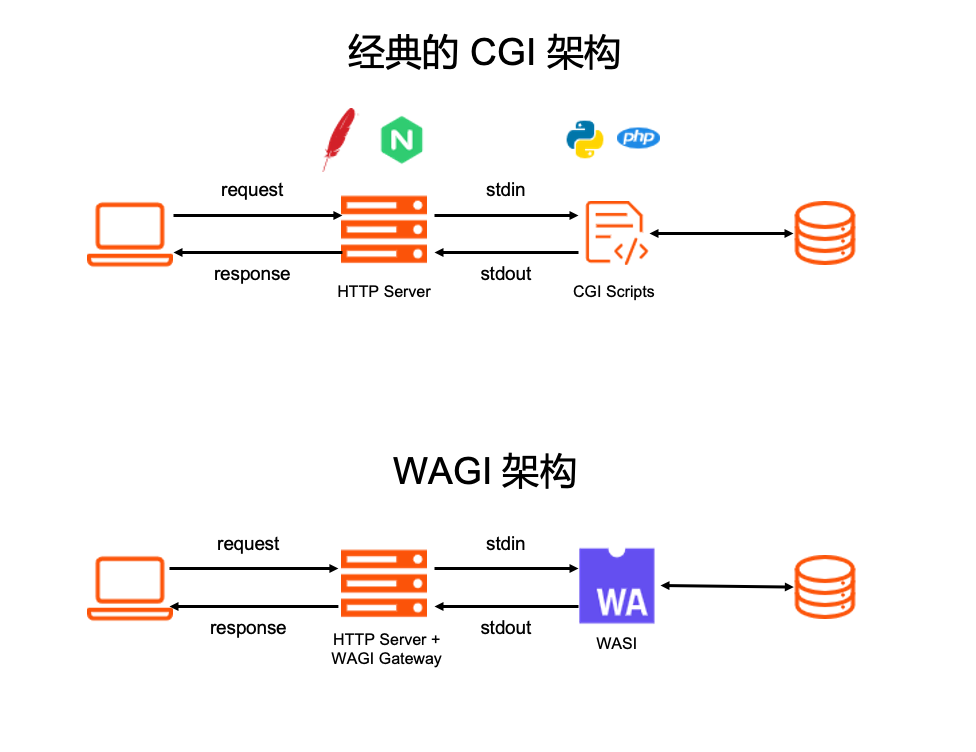

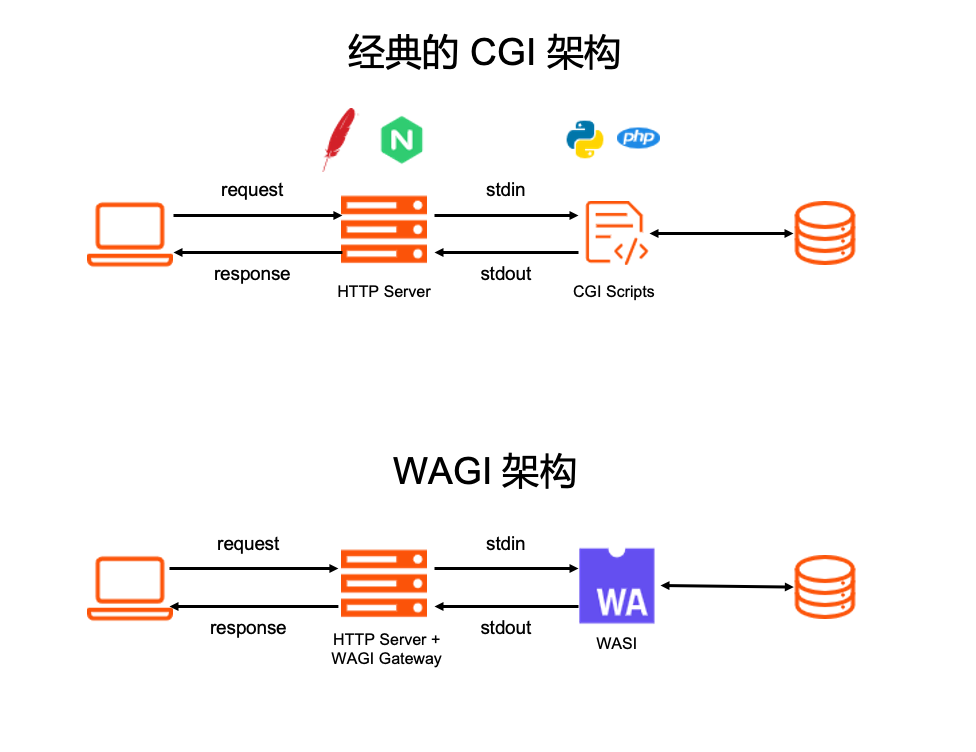

CGI is short for Common Gateway Interface, a specification for how an HTTP server interacts with other programs. The HTTP server communicates with CGI scripts through interfaces such as standard input and standard output, so developers can handle HTTP requests in Python, PHP, Perl, and other languages.

A very natural inference follows: if we can invoke WASI applications through the CGI specification, developers can easily use WebAssembly to write Web APIs or microservice applications, without having to deal with too many networking implementation details inside WASM. The figure compares the conceptual architectures of CGI and WAGI.

The two are highly similar in architecture. The difference is this: in the traditional CGI architecture, each HTTP request creates an OS process to handle it, and security isolation is provided by the operating system's process mechanism; in WAGI, each HTTP request invokes the WASI application in a separate thread, and applications are isolated from each other by the WebAssembly virtual machine. In theory, WAGI can have lower resource consumption and faster response times than CGI.
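To make the CGI convention concrete, here is a minimal sketch in Python of a CGI-style handler. Request metadata arrives in environment variables (REQUEST_METHOD, PATH_INFO, and QUERY_STRING are standard CGI variables), and the response is written to standard output as headers, a blank line, and then the body. The function name render_response and the name query parameter are hypothetical, chosen only for illustration. A WAGI module is expected to follow the same input/output pattern, but compiled to WASM rather than run as a script.

```python
import os
import sys
from urllib.parse import parse_qs


def render_response(environ):
    """Build a CGI response: headers, a blank line, then the body."""
    method = environ.get("REQUEST_METHOD", "GET")
    path = environ.get("PATH_INFO", "/")
    # QUERY_STRING holds the raw text after "?" in the URL.
    query = parse_qs(environ.get("QUERY_STRING", ""))
    # "name" is a hypothetical parameter used only for this example.
    name = query.get("name", ["world"])[0]

    headers = "Content-Type: text/plain\n"
    body = f"{method} {path}: hello {name}\n"
    return headers + "\n" + body


if __name__ == "__main__":
    # A CGI server sets the environment, runs the script, and relays stdout.
    sys.stdout.write(render_response(os.environ))
```

Keeping the handler a pure function of the environment makes it easy to exercise without a web server, which is much of the appeal of the CGI model.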

This article does not analyze WAGI's own architecture or WAGI application development. Interested readers can consult the project documentation.

Thinking one step further: if we can use WAGI as a Knative Serving runtime, we can build a bridge that brings WebAssembly to Serverless scenarios.

Cold start analysis and optimization of WAGI applications

Cold start performance is a key metric in Serverless scenarios. To better understand WAGI's execution efficiency, we can use ab to run a simple stress test:

    $ ab -k -n 10000 -c 100 http://127.0.0.1:3000/
    ...
    Server Software:
    Server Hostname:        127.0.0.1
    Server Port:            3000

    Document Path:          /
    Document Length:        12 bytes

    Concurrency Level:      100
    Time taken for tests:   7.632 seconds
    Complete requests:      10000
    Failed requests:        0
    Keep-Alive requests:    10000
    Total transferred:      1510000 bytes
    HTML transferred:       120000 bytes
    Requests per second:    1310.31 [#/sec] (mean)
    Time per request:       76.318 [ms] (mean)
    Time per request:       0.763 [ms] (mean, across all concurrent requests)
    Transfer rate:          193.22 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0    0   0.6      0       9
    Processing:     8   76  29.6     74     214
    Waiting:        1   76  29.6     74     214
    Total:          8   76  29.5     74     214

    Percentage of the requests served within a certain time (ms)
      50%     74
      66%     88
      75%     95
      80%    100
      90%    115
      95%    125
      98%    139
      99%    150
     100%    214 (longest request)

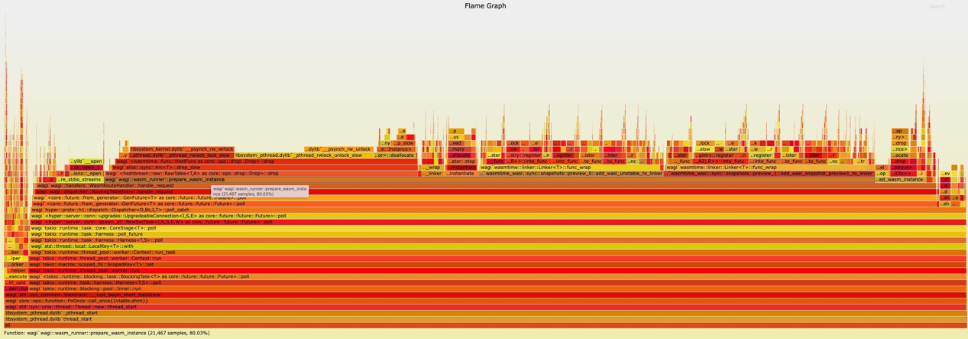

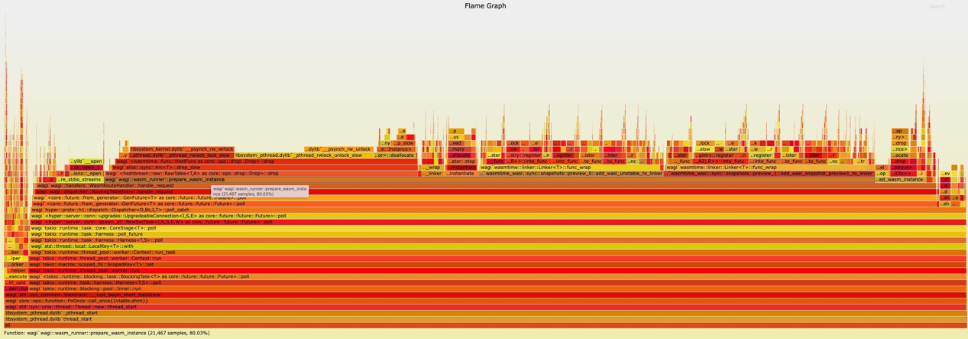

We can see that the P90 response time is 115 ms. Is that all? This does not match our perception of WASM applications as lightweight. Using a flame graph, we can quickly locate the problem: the prepare_wasm_instance function consumes 80% of the application's total running time.

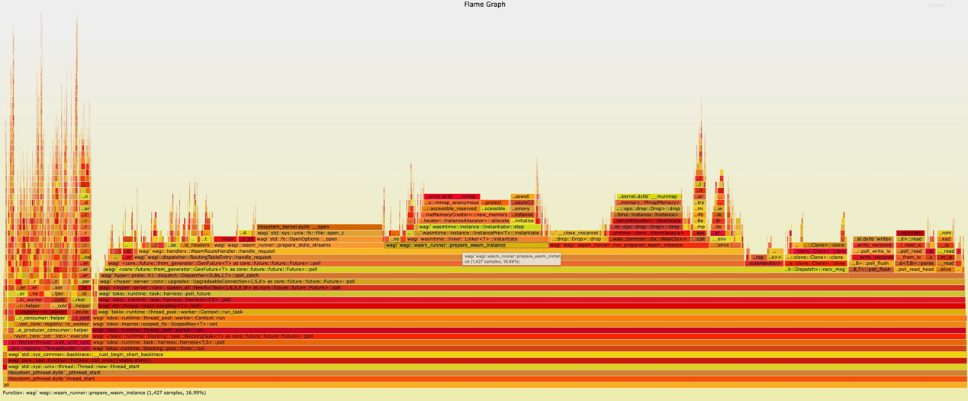

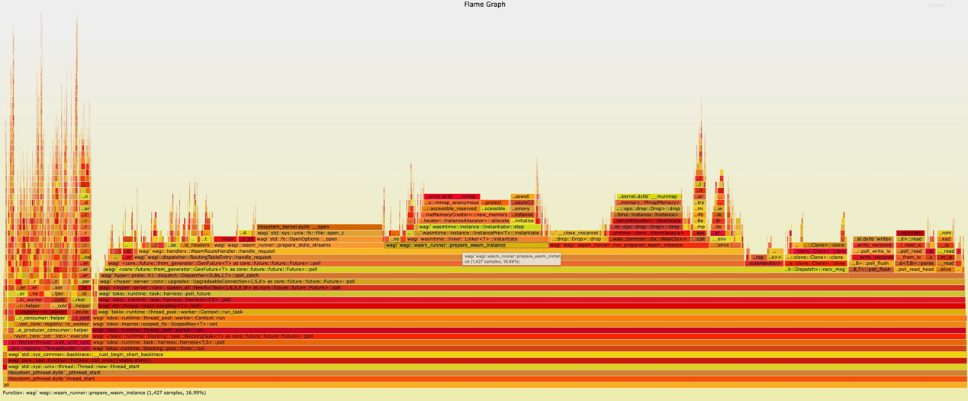

After analyzing the code, we found that while responding to each HTTP request, WAGI has to compile the WASM application again, relink WASI and the wasi-http extension, and configure the environment. This takes a lot of time. Once the problem is located, the solution is very simple: refactor the execution logic so that these preparations are performed only once during initialization, instead of being repeated for every HTTP request. For details, refer to the optimized implementation [2].
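The idea behind this refactoring can be sketched in a few lines of Python. This is not the code of the optimized WAGI implementation: FakeRuntime, PerRequestHandler, and CachedHandler are hypothetical names, and the prepare method merely stands in for the expensive compile, link, and configure step. The sketch only shows the difference between preparing on every request and preparing once at initialization.

```python
class FakeRuntime:
    """Hypothetical stand-in for a WASM runtime; counts expensive preparations."""

    def __init__(self):
        self.prepare_calls = 0

    def prepare(self, module_path):
        # Stands in for compiling the module and linking WASI extensions.
        self.prepare_calls += 1
        return {"module": module_path}

    def run(self, prepared, request):
        # Stands in for executing the prepared module for one request.
        return f"{prepared['module']} handled {request}"


class PerRequestHandler:
    """Prepares the module again for every request (the slow path)."""

    def __init__(self, runtime, module_path):
        self.runtime = runtime
        self.module_path = module_path

    def handle(self, request):
        prepared = self.runtime.prepare(self.module_path)
        return self.runtime.run(prepared, request)


class CachedHandler:
    """Prepares the module once at initialization and reuses the result."""

    def __init__(self, runtime, module_path):
        self.runtime = runtime
        self.prepared = runtime.prepare(module_path)

    def handle(self, request):
        return self.runtime.run(self.prepared, request)
```

With three requests, the per-request handler calls prepare three times while the cached handler calls it once. A real runtime would likely still need fresh per-request state to preserve isolation between requests, which this sketch ignores.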

Let's run the stress test again:

    $ ab -k -n 10000 -c 100 http://127.0.0.1:3000/
    ...
    Server Software:
    Server Hostname:        127.0.0.1
    Server Port:            3000

    Document Path:          /
    Document Length:        12 bytes

    Concurrency Level:      100
    Time taken for tests:   1.328 seconds
    Complete requests:      10000
    Failed requests:        0
    Keep-Alive requests:    10000
    Total transferred:      1510000 bytes
    HTML transferred:       120000 bytes
    Requests per second:    7532.13 [#/sec] (mean)
    Time per request:       13.276 [ms] (mean)
    Time per request:       0.133 [ms] (mean, across all concurrent requests)
    Transfer rate:          1110.70 [Kbytes/sec] received

    Connection Times (ms)
                  min  mean[+/-sd] median   max
    Connect:        0    0   0.6      0       9
    Processing:     1   13   5.7     13      37
    Waiting:        1   13   5.7     13      37
    Total:          1   13   5.6     13      37

    Percentage of the requests served within a certain time (ms)
      50%     13
      66%     15
      75%     17
      80%     18
      90%     21
      95%     23
      98%     25
      99%     27
     100%     37 (longest request)

With the optimized implementation, the P90 response time has fallen to 21 ms, and the share of running time spent in prepare_wasm_instance has dropped to 17%. The overall cold start efficiency has improved greatly!
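As a quick sanity check on the two ab reports, the headline numbers can be re-derived from the request count, the concurrency level, and the total time. The sketch below uses only the figures printed above; the function name ab_metrics is hypothetical, and small differences from ab's output are expected because ab prints the elapsed time rounded to milliseconds.

```python
def ab_metrics(requests, concurrency, seconds):
    """Re-derive ab's summary figures from its raw inputs."""
    requests_per_second = requests / seconds
    # Mean time per request as seen by one of the concurrent clients.
    time_per_request_ms = concurrency * seconds * 1000 / requests
    # Mean time per request across all concurrent requests.
    time_across_all_ms = seconds * 1000 / requests
    return requests_per_second, time_per_request_ms, time_across_all_ms


before = ab_metrics(requests=10000, concurrency=100, seconds=7.632)
after = ab_metrics(requests=10000, concurrency=100, seconds=1.328)

# Throughput ratio between the optimized and the original run.
speedup = after[0] / before[0]
print(f"before: {before[0]:.0f} req/s, after: {after[0]:.0f} req/s, speedup: {speedup:.1f}x")
```

By this measure the optimized build delivers roughly 5.7 times the throughput of the original on the same test.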

Note: the performance analysis in this article was done with flamegraph [3].

Running WAGI applications with Knative

To let WAGI run as a Knative application, we also need to add support for the SIGTERM signal to WAGI, so that WAGI containers can shut down gracefully. The details are omitted here.
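For readers unfamiliar with the pattern, here is a generic sketch of SIGTERM-based graceful shutdown in Python. It is not how WAGI implements it; the names shutdown_requested, install_handler, and serve are hypothetical. Kubernetes sends SIGTERM to a container before stopping it, so the process gets a chance to stop accepting work and exit cleanly instead of being killed mid-request.

```python
import signal
import threading

# Set once SIGTERM arrives; the serving loop checks it between units of work.
shutdown_requested = threading.Event()


def on_sigterm(signum, frame):
    """Signal handler: only record the request, do the cleanup elsewhere."""
    shutdown_requested.set()


def install_handler():
    # Must be called from the main thread of the process.
    signal.signal(signal.SIGTERM, on_sigterm)


def serve(handle_one, poll_seconds=0.1):
    """Handle work until SIGTERM arrives, then return so the caller can clean up."""
    handled = 0
    while not shutdown_requested.is_set():
        handle_one()
        handled += 1
        # Wait briefly, but wake up immediately if shutdown is requested.
        shutdown_requested.wait(poll_seconds)
    return handled
```

Keeping the handler itself trivial and doing the actual cleanup after the loop returns avoids running complex logic inside a signal handler.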

To prepare the Knative environment, refer to the Knative installation documentation [4] and use Minikube to create a local test environment.

Note: this requires suitable network access, because the Knative images hosted on gcr.io cannot be reached from within mainland China.

A simpler way is to use a Serverless K8s cluster on Alibaba Cloud Serverless Container Service ASK [5] directly. ASK has built-in Knative support [6], so you can develop and use Knative applications without a complicated configuration and installation process.

First, we use WAGI to define a Knative service:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: autoscale-wagi
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            # Knative concurrency-based autoscaling (default).
            autoscaling.knative.dev/class: kpa.autoscaling.knative.dev
            autoscaling.knative.dev/metric: concurrency
            # Target 10 requests in-flight per pod.
            autoscaling.knative.dev/target: "10"
            # Disable scale to zero with a min scale of 1.
            autoscaling.knative.dev/min-scale: "1"
            # Limit scaling to 10 pods.
            autoscaling.knative.dev/max-scale: "10"
        spec:
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/denverdino/knative-wagi:0.8.1-with-cache

Then deploy the service and test it:

    $ kubectl apply -f knative_test.yaml

    $ kubectl get ksvc autoscale-wagi
    NAME             URL                                                LATESTCREATED          LATESTREADY            READY   REASON
    autoscale-wagi   http://autoscale-wagi.default.127.0.0.1.sslip.io   autoscale-wagi-00002   autoscale-wagi-00002   True

    $ curl http://autoscale-wagi.default.127.0.0.1.sslip.io
    Oh hi world

    $ curl http://autoscale-wagi.default.127.0.0.1.sslip.io/hello
    hello world

You can also run some stress tests to learn about Knative's elastic scaling capability.
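When interpreting such a stress test, it helps to have a rough mental model of what the annotations in the service definition ask for. The sketch below is a deliberately simplified approximation, not Knative's actual algorithm: it divides the observed number of in-flight requests by the per-pod target and clamps the result between min-scale and max-scale. The real autoscaler averages metrics over time windows and applies further adjustments, all of which are ignored here. The function name desired_pods is hypothetical; the default values mirror the annotations above.

```python
import math


def desired_pods(in_flight, target=10, min_scale=1, max_scale=10):
    """Simplified estimate of the pod count for a given concurrent load."""
    # One pod per `target` concurrent requests, rounded up.
    wanted = math.ceil(in_flight / target)
    # Never go below min-scale or above max-scale.
    return max(min_scale, min(max_scale, wanted))


for load in (0, 35, 500):
    print(load, "in-flight requests ->", desired_pods(load), "pods")
```

Under this model, an idle service keeps one pod because of the min-scale setting, and no amount of load pushes it beyond ten pods.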

Postscript

This article introduced the WAGI project, which decouples the network handling details of an HTTP server from the logic of a WASM application. This makes it easy to combine WASM/WASI applications with a Serverless framework such as Knative. On the one hand, we can reuse the elasticity and large-scale resource scheduling capabilities that Knative and K8s bring; on the other hand, we can take advantage of WebAssembly's security isolation, portability, and light weight.

Along the same line of thinking, in the previous article "WebAssembly + Dapr = Next generation cloud native runtime?", I introduced the idea of decoupling WASM applications from the external services they depend on through Dapr, to resolve the tension between portability and diverse service capabilities.

Of course, these works are still simple toys, meant only to probe the boundaries of what the technology makes possible. The main purpose is to start a conversation and hear your thoughts and ideas about the next generation of distributed application frameworks and runtime environments.

While writing this, I suddenly recalled the days in the 1990s when I implemented an HTTP server and a CGI gateway based on the RFC specifications. That was a very simple and pure kind of happiness. I wish every engineer stays curious and enjoys the time spent programming each day.


Reference links

[1] WAGI - WebAssembly Gateway Interface project: https://github.com/deislabs/wagi

[2] Optimized implementation: https://github.com/denverdino/wagi/tree/with_cache

[3] flamegraph: https://github.com/flamegraph-rs/flamegraph

[4] Knative installation documentation: https://knative.dev/docs/install/

[5] Alibaba Cloud Serverless Container Service ASK: https://www.aliyun.com/product/cs/ask

[6] Knative support: https://help.aliyun.com/document_detail/121508.html

[7] Project: https://github.com/denverdino/knative-wagi

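Copyright notice from the original site

This article was created by [InfoQ]. Please include the original link when reprinting. Thank you.

https://yzsam.com/2022/188/202207062215184697.html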